Quantum machine learning (QML) promises powerful new algorithms, but its vulnerability to adversarial attacks remains largely unknown. Saeefa Rubaiyet Nowmi, Jesus Lopez, and Md Mahmudul Alam Imon, from the University of Texas at El Paso, alongside Shahrooz Pouryousef from the University of Massachusetts Amherst and Mohammad Saidur Rahman, systematically evaluate the robustness of QML systems against a range of threats. Their research integrates conceptual analysis with practical testing, employing three distinct attack models against quantum neural networks trained on both image and malware datasets. The team demonstrates that while amplitude encoding achieves higher accuracy in ideal conditions, it suffers significant performance drops under attack, whereas angle encoding proves more resilient in noisy environments, revealing a crucial trade-off between capacity and robustness. These findings offer vital guidance for building secure and reliable QML systems suitable for deployment on near-term, noisy quantum computers.

Quantum Machine Learning Under Label Flipping Attacks

This research investigates the vulnerability of quantum multilayer perceptrons (QMLPs) to label-flipping attacks, in which training labels are intentionally altered. The researchers assessed performance on the AZ-Class dataset of Android malware and the MNIST dataset of handwritten digits, under both clean and noisy conditions. Accuracy generally decreases as the proportion of flipped labels increases, though deeper circuits sometimes show greater resilience. Label smoothing, a regularization technique, occasionally improves performance, but its effect is inconsistent.
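To make the attack and the defense concrete, the following is a minimal NumPy sketch of random label flipping and of label smoothing. The function names, the uniform choice of the wrong class, and the smoothing value `epsilon=0.1` are illustrative assumptions, not details taken from the study.

```python
import numpy as np

def flip_labels(y, flip_fraction, num_classes, seed=0):
    """Return a copy of y in which a random fraction of labels is changed
    to a different class (hypothetical helper, not the paper's code)."""
    rng = np.random.default_rng(seed)
    y_poisoned = y.copy()
    n_flip = int(round(flip_fraction * len(y)))
    idx = rng.choice(len(y), size=n_flip, replace=False)
    # An offset in [1, num_classes - 1] taken modulo num_classes
    # always lands on a class different from the original one.
    offsets = rng.integers(1, num_classes, size=n_flip)
    y_poisoned[idx] = (y[idx] + offsets) % num_classes
    return y_poisoned

def smooth_labels(y, num_classes, epsilon=0.1):
    """One-hot targets softened toward the uniform distribution."""
    one_hot = np.eye(num_classes)[y]
    return one_hot * (1.0 - epsilon) + epsilon / num_classes

# Example: poison 20% of a toy 10-class label vector.
y_clean = np.arange(100) % 10
y_poisoned = flip_labels(y_clean, flip_fraction=0.2, num_classes=10)
soft_targets = smooth_labels(y_poisoned, num_classes=10)
```

Smoothing spreads a small amount of probability mass over all classes, so a single wrong label pulls the model less strongly toward the wrong answer. That is one plausible reason it helps in some settings but not in others.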

Notably, the AZ-Class dataset consistently exhibits lower accuracy than MNIST under these attacks, suggesting it presents a greater challenge for classification. Further experiments explored the impact of depolarizing noise, which simulates realistic limitations of quantum hardware. Adding noise significantly reduced accuracy relative to clean conditions and narrowed the performance differences between encoding schemes and circuit depths. In highly noisy environments, accuracy often fell below 10%, highlighting the critical need for noise mitigation strategies. The team also examined the performance of quantum neural networks (QNNs) under Quantum Indiscriminate Data Poisoning (QUID) attacks, employing a Q-detection defense mechanism.
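The depolarizing noise mentioned above can be illustrated with a small density-matrix sketch. It assumes the common parameterization in which the state is replaced by the maximally mixed state with probability `p`; the study's exact noise model and noise strengths are not given here, so the values below are placeholders.

```python
import numpy as np

def depolarize(rho, p):
    """Depolarizing channel: keep rho with weight (1 - p), otherwise
    replace it with the maximally mixed state I/d (assumed convention)."""
    d = rho.shape[0]
    return (1.0 - p) * rho + p * np.eye(d) / d

def expval(rho, observable):
    """Expectation value Tr(rho * observable)."""
    return float(np.real(np.trace(rho @ observable)))

PAULI_Z = np.array([[1, 0], [0, -1]], dtype=complex)
rho_zero = np.array([[1, 0], [0, 0]], dtype=complex)  # the state |0><0|

# The measured signal shrinks linearly with the noise strength.
clean_signal = expval(rho_zero, PAULI_Z)
noisy_signal = expval(depolarize(rho_zero, 0.3), PAULI_Z)
```

Under this convention every Pauli expectation value is scaled by `(1 - p)`, so the outputs of different circuits are pushed toward the same uninformative value. This is consistent with the reported narrowing of differences between encodings and depths under noise.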

The results show that Q-detection can mitigate the impact of QUID attacks, though its effectiveness varies with the proportion of poisoned data. Overall, both label-flipping and QUID attacks reduce model accuracy, and the available defenses, while helpful, are limited, particularly in noisy environments. The consistent vulnerability of the AZ-Class dataset underscores the need for security measures tailored to specific data types. These findings emphasize the importance of developing robust quantum machine learning models that withstand adversarial attacks and operate reliably in the presence of noise.

Adversarial Attacks Reveal Quantum Model Robustness

This research systematically evaluates the adversarial robustness of quantum machine learning (QML) models using an experimental framework that spans multiple threat models and quantum circuit configurations. The researchers implemented representative attacks, including label-flipping, Quantum Indiscriminate Data Poisoning (QUID), and gradient-based methods. These attacks were applied to Quantum Neural Networks (QNNs) trained on the MNIST dataset of handwritten digits and the AZ-Class dataset of Android malware. The study varied key parameters, including circuit depth and encoding scheme, to assess their impact on model robustness.
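As an illustration of a gradient-based attack, the sketch below applies a fast-gradient-sign step to the input of a one-qubit toy classifier. The text does not name the specific gradient-based method used, so the FGSM-style step, the toy model, and all parameter values here are assumptions chosen for brevity.

```python
import numpy as np

def predict(x, theta):
    """Toy one-qubit classifier: <Z> after RY(theta) RY(x) |0>,
    which equals cos(x + theta) in closed form."""
    return np.cos(x + theta)

def loss(x, y, theta):
    """Squared error against a label y in {+1, -1}."""
    return (predict(x, theta) - y) ** 2

def input_gradient(x, y, theta):
    """Gradient of the loss with respect to the input angle x,
    using the parameter-shift rule for the RY encoding gate."""
    shift = np.pi / 2
    df_dx = 0.5 * (predict(x + shift, theta) - predict(x - shift, theta))
    return 2.0 * (predict(x, theta) - y) * df_dx

def fgsm(x, y, theta, epsilon):
    """Move the input by epsilon in the direction that increases the loss."""
    return x + epsilon * np.sign(input_gradient(x, y, theta))

theta = 0.1              # assumed already-trained parameter
x_clean, y_true = 0.4, 1.0
x_adv = fgsm(x_clean, y_true, theta, epsilon=0.3)
```

In this white-box setting the attacker needs access to the model's gradients. The black-box and gray-box attacks discussed in the article assume less knowledge of the model.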

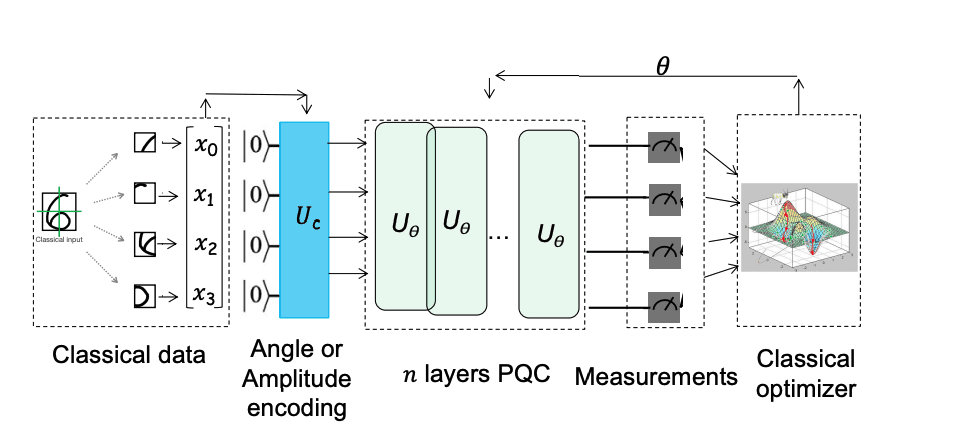

Researchers trained and evaluated models under both noiseless conditions and depolarizing noise, which simulates realistic limitations of current quantum hardware. To ensure comparability, the team adapted existing attacks and defenses from classical machine learning, tailoring them to the quantum domain. The experimental setup involved encoding data using angle and amplitude schemes, then processing it through parameterized quantum circuits (PQCs). Measurement outcomes were fed into a classical optimizer, which iteratively updated the circuit parameters to minimize a loss function; together, the circuit and the optimizer form a variational quantum circuit (VQC). This hybrid quantum-classical approach allowed the researchers to analyze the interplay between quantum circuit design, data encoding, and adversarial robustness. The team also conducted comparative analyses against classical multilayer perceptrons (CMLPs) to highlight unique vulnerabilities and motivate the development of quantum-specific defenses.
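The hybrid loop described above can be sketched with a one-qubit statevector simulation in NumPy. This is a deliberately tiny stand-in, not the study's architecture: the single trainable rotation, the toy dataset, the squared-error loss, and the learning rate are all assumptions made for illustration.

```python
import numpy as np

KET0 = np.array([1.0, 0.0])
PAULI_Z = np.array([[1.0, 0.0], [0.0, -1.0]])

def ry(angle):
    """Single-qubit RY rotation matrix."""
    c, s = np.cos(angle / 2), np.sin(angle / 2)
    return np.array([[c, -s], [s, c]])

def predict(x, theta):
    """Encode x with RY(x), apply the trainable RY(theta), measure <Z>."""
    state = ry(theta) @ ry(x) @ KET0
    return float(state @ PAULI_Z @ state)

def loss(xs, ys, theta):
    """Mean squared error between <Z> and labels in {+1, -1}."""
    return float(np.mean([(predict(x, theta) - y) ** 2 for x, y in zip(xs, ys)]))

def loss_gradient(xs, ys, theta):
    """Gradient of the loss; d<Z>/dtheta comes from the parameter-shift rule."""
    grads = []
    for x, y in zip(xs, ys):
        f = predict(x, theta)
        df = 0.5 * (predict(x, theta + np.pi / 2) - predict(x, theta - np.pi / 2))
        grads.append(2.0 * (f - y) * df)
    return float(np.mean(grads))

def train(xs, ys, theta=1.0, lr=0.2, steps=100):
    """Classical optimizer: plain gradient descent on the circuit parameter."""
    for _ in range(steps):
        theta -= lr * loss_gradient(xs, ys, theta)
    return theta

# Toy data: small angles belong to class +1, large angles to class -1.
xs = np.array([0.2, 0.4, 2.6, 2.9])
ys = np.array([1.0, 1.0, -1.0, -1.0])
theta_trained = train(xs, ys)
```

On hardware, `predict` would be estimated from repeated measurements rather than computed exactly, which is where shot noise and device noise enter the training loop.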

Adversarial Robustness Limits Quantum Machine Learning Performance

This work presents a systematic evaluation of adversarial robustness in quantum machine learning (QML), investigating how encoding scheme, circuit depth, and quantum noise affect model vulnerability. Researchers implemented and tested representative attacks, including label-flipping, QUID data poisoning, and gradient-based methods, across black-box, gray-box, and white-box threat models, using quantum neural networks trained on both the MNIST and AZ-Class (Android malware) datasets. The study reveals a crucial trade-off between representational capacity and robustness: amplitude encoding achieves the highest clean accuracy but suffers a sharp decline under adversarial perturbations and depolarizing noise. In contrast, angle encoding proves more stable in shallow, noisy regimes.
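The two encodings compared above can be sketched as follows. This assumes the textbook definitions (one RY rotation per feature for angle encoding, and a normalized, zero-padded feature vector for amplitude encoding); the study's exact preprocessing and feature scaling are not described here.

```python
import numpy as np
from functools import reduce

def angle_encode(features):
    """Angle encoding: one qubit per feature, each prepared as RY(x)|0>.
    Returns the full product statevector (2**n amplitudes for n features)."""
    qubits = [np.array([np.cos(x / 2), np.sin(x / 2)]) for x in features]
    return reduce(np.kron, qubits)

def amplitude_encode(features):
    """Amplitude encoding: the normalized feature vector becomes the
    statevector, zero-padded to the next power of two."""
    features = np.asarray(features, dtype=float)
    dim = 1 << (len(features) - 1).bit_length()
    padded = np.zeros(dim)
    padded[: len(features)] = features
    norm = np.linalg.norm(padded)
    if norm == 0:
        raise ValueError("cannot amplitude-encode an all-zero vector")
    return padded / norm

angle_state = angle_encode([0.1, 0.2, 0.3])      # 3 features on 3 qubits
amp_state = amplitude_encode([3.0, 4.0])         # 2 features on 1 qubit
amp_state_padded = amplitude_encode(np.arange(1.0, 6.0))  # 5 features on 3 qubits
```

Amplitude encoding packs up to 2^n features into n qubits, while angle encoding uses one qubit per feature. That difference in density is one way to read the capacity side of the reported trade-off.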

Experiments show that baseline performance is jointly determined by encoding and circuit depth, with angle encoding favoring shallow circuits and amplitude encoding benefiting from deeper ones. Researchers also found that the QUID attack, a gray-box method, achieves higher attack success rates, though its effectiveness is diminished by noise channels. This suggests that noise can act as a natural defense mechanism in noisy intermediate-scale quantum (NISQ) systems. Classical multilayer perceptrons (CMLPs) achieved high accuracy on both datasets, establishing performance upper bounds. The results also show that label-flipping attacks reduce CMLP accuracy, while angle-encoded QMLPs maintain higher accuracy under the same attack. These findings guide the development of secure and resilient QML architectures for deployment in real-world, noisy environments.

Encoding, Depth, and Noise Impact QML Robustness

This work presents a systematic investigation of adversarial threats to quantum machine learning (QML) systems, exploring both classical and quantum-native attack strategies. The research demonstrates that the robustness of QML is significantly influenced by the chosen encoding method, the depth of the quantum circuit, and the presence of noise, revealing a trade-off between representational capacity and stability. Specifically, amplitude encoding achieves higher accuracy in deep circuits but is vulnerable to even small perturbations, while angle encoding proves more resilient in shallower, noisy environments. The team implemented and evaluated representative attacks, including label-flipping, data poisoning, and gradient-based methods, across different threat models. Their findings suggest that noise can act as a natural defense mechanism, disrupting attacks that rely on precise Hilbert-space correlations. While current defenses are limited, this research highlights the critical need for developing robust QML architectures.

👉 More information

🗞 Critical Evaluation of Quantum Machine Learning for Adversarial Robustness

🧠 ArXiv: https://arxiv.org/abs/2511.14989