The increasing demand for artificial intelligence at the edge of networks requires new computing hardware that moves beyond traditional designs, and researchers are now exploring brain-inspired systems based on spiking neural networks. Zhu Wang, Song Wang, and Zhiyuan Du, along with colleagues from The University of Hong Kong including Ruibin Mao, Yu Xiao, and Hayden Kwok-Hay So, present a fully integrated memristive spiking neural network that overcomes limitations in speed, scalability, and accuracy found in previous designs. Their system, built with a compact array of memristors and custom-designed analog neurons, processes event-based data with remarkable efficiency and fidelity, achieving a 50,000-fold acceleration of complex signals. This advance stems from a novel approach to time-scaling within the neurons, allowing for direct training on real-world data and establishing a promising pathway towards ultra-fast, energy-efficient processors for applications ranging from rapid sensor analysis to in-sensor machine vision.

RRAM Synapses and Spiking Neural Networks

Scientists have engineered a highly efficient Spiking Neural Network (SNN) system integrating Resistive Random-Access Memory (RRAM) synapses with conventional CMOS neurons on a single chip. This fully integrated design reduces energy consumption and increases processing speed, achieving state-of-the-art accuracy on the DVS128 Gesture dataset. The system prioritizes low latency and high energy efficiency, leveraging the inherent advantages of spiking computation, a method inspired by the brain’s processing techniques. Researchers employed backpropagation with surrogate gradients to directly optimize the SNN, avoiding the limitations of converting artificial neural networks or relying on biologically inspired learning rules.

This direct training approach optimizes the SNN in the spiking domain, allowing the network to learn from temporal spike streams and achieve high accuracy with short processing times. The system utilizes RRAM for synaptic weights, offering high density and low power consumption, while CMOS neurons implement the neuronal dynamics. Integrating RRAM and CMOS on a single chip reduces communication overhead and improves efficiency, resulting in a 1.37x enhancement in energy efficiency compared to previous designs. Scalability analysis demonstrates that further reductions in neuronal time constants can lead to improved performance. Researchers identified potential for further optimization through on-chip spike capture, which could reduce energy consumption and enable faster processing. The system’s scalable design allows for the creation of larger and more complex SNNs, paving the way for advanced neuromorphic computing applications.
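To make the idea of surrogate-gradient training concrete, here is a minimal sketch in plain Python. It is not the researchers' training code: the fast-sigmoid surrogate, the single-weight "network", the squared-error loss, and every constant are assumptions chosen for illustration. The point it shows is that the forward pass keeps the hard spike threshold, while the backward pass substitutes a smooth derivative so that gradient descent has something to follow.

```python
def spike(v, threshold=1.0):
    """Forward pass: hard threshold (Heaviside step) on the membrane potential."""
    return 1.0 if v >= threshold else 0.0


def surrogate_grad(v, threshold=1.0, beta=5.0):
    """Backward pass: derivative of the fast sigmoid x / (1 + beta*|x|),
    used in place of the step function's derivative (zero almost everywhere).
    The choice of surrogate and of beta is an assumption of this sketch."""
    return 1.0 / (1.0 + beta * abs(v - threshold)) ** 2


def train_single_weight(x=1.0, w=0.2, target=1.0, lr=0.5, max_steps=200):
    """Gradient descent on loss = (spike - target)^2 for one hypothetical synapse."""
    for _ in range(max_steps):
        v = w * x                      # membrane potential
        s = spike(v)                   # non-differentiable forward pass
        if s == target:
            break
        # Chain rule with the surrogate standing in for d(spike)/dv.
        grad_w = 2.0 * (s - target) * surrogate_grad(v) * x
        w -= lr * grad_w
    return w


trained_w = train_single_weight()
print(trained_w, spike(trained_w * 1.0))
```

With the true derivative of the step function the update would be zero at every step and the weight would never move; the surrogate is what lets the weight climb until the neuron fires.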

Integrated Memristor Array for Spiking Neural Networks

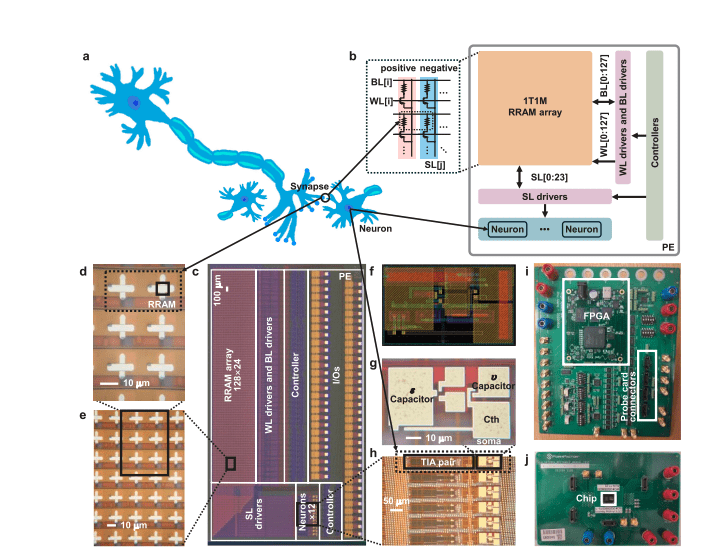

Scientists have developed a novel hardware system for artificial intelligence, centered around a 128×24 memristor array integrated onto a custom CMOS chip, to achieve high-speed, energy-efficient processing of event-based data. The study pioneered a fully integrated approach, preserving temporal information within spiking neural networks. Experiments delivered high-speed event streams to the chip using an arbitrary waveform generator, while an onboard field-programmable gate array controlled memristor programming and managed communication. The core innovation lies in custom-designed spiking neurons, specifically a spike response model (SRM) implemented using analog circuits.

Researchers leveraged simple resistor-capacitor (RC) filters to realize computationally expensive temporal convolution kernels, addressing the limitations of digital implementations. Crucially, the neuron’s kernel was engineered to maintain proportionality between time constants and input spike stream scales, enabling the use of compact on-chip capacitors and overcoming a key bottleneck in analog neuron scalability. Furthermore, the synapse filter was integrated directly into the neuron design, reducing energy consumption by applying short voltage pulses to the memristors. This approach enables the system to process accelerated event data with minimal hardware requirements, while maintaining a continuous membrane potential trace.
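The proportional time-scaling argument can be checked numerically. The sketch below assumes the simplest possible case, a first-order RC low-pass whose response to a spike is a decaying exponential; the spike times, the 0.2 s time constant, and the 1 MΩ resistor are hypothetical values, not figures from the paper. It shows that when the input spike times and the time constant are compressed by the same factor, the filter output is unchanged apart from the compressed time axis, and that the required capacitance shrinks by that same factor for a fixed resistance.

```python
import math


def rc_response(spike_times, tau, t):
    """Output of a first-order RC filter at time t: one exp(-(t - s)/tau)
    contribution per input spike that has already arrived."""
    return sum(math.exp(-(t - s) / tau) for s in spike_times if s <= t)


ACCEL = 50_000                           # acceleration factor quoted in the article
spikes_real = [0.11, 0.26, 0.31, 0.92]   # seconds (hypothetical spike train)
tau_real = 0.2                           # seconds (hypothetical time constant)

# Compress the spike times and the time constant by the same factor.
spikes_fast = [s / ACCEL for s in spikes_real]
tau_fast = tau_real / ACCEL

# Sample both traces at corresponding instants: t and t / ACCEL.
samples = [0.05 * i for i in range(31)]
trace_real = [rc_response(spikes_real, tau_real, t) for t in samples]
trace_fast = [rc_response(spikes_fast, tau_fast, t / ACCEL) for t in samples]
max_diff = max(abs(a - b) for a, b in zip(trace_real, trace_fast))

# tau = R * C, so for a fixed resistance the capacitance scales with tau.
R_OHMS = 1e6                             # hypothetical resistor value
c_real = tau_real / R_OHMS               # 200 nF, impractical on chip
c_fast = tau_fast / R_OHMS               # 4 pF, easily integrated
print(max_diff, c_real, c_fast)
```

The two traces agree to within floating-point error, which is the sense in which a network trained on the original timescale can run on accelerated inputs with much smaller capacitors.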

The SRM neurons utilize two kernels, each with distinct time constants, to generate a rich repertoire of spiking patterns, including bursting and phasic spiking, and an adaptive threshold that selectively retains recent information. The system achieves an experimental accuracy of 93.06% with a measured energy efficiency of 101.05 TSOPS/W, demonstrating the potential for high-throughput analysis critical for rapid-response edge applications.
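A discrete-time sketch can illustrate how two exponential kernels and an adaptive threshold interact. This is a generic textbook-style model, not the circuit described in the paper: combining the two kernels as a difference of exponentials, raising the threshold by a fixed jump after each output spike, and all parameter values are assumptions of this example. It does not attempt to reproduce the bursting or phasic patterns mentioned above.

```python
import math


def simulate_srm(input_spikes, dt=1.0, tau_fast=2.0, tau_slow=10.0,
                 theta0=1.0, theta_jump=1.0, tau_theta=20.0):
    """Simulate one neuron over a binary input spike train.

    Two leaky traces with different time constants filter the input; their
    difference serves as the membrane potential. The firing threshold jumps
    after every output spike and relaxes back toward theta0.
    All parameters are hypothetical.
    """
    decay_fast = math.exp(-dt / tau_fast)
    decay_slow = math.exp(-dt / tau_slow)
    decay_theta = math.exp(-dt / tau_theta)

    fast = slow = theta_extra = 0.0
    output = []
    for x in input_spikes:
        fast = fast * decay_fast + x        # kernel 1: short time constant
        slow = slow * decay_slow + x        # kernel 2: long time constant
        v = slow - fast                     # double-exponential response
        theta_extra *= decay_theta          # threshold relaxes toward theta0
        fired = v >= theta0 + theta_extra
        if fired:
            theta_extra += theta_jump       # recent firing raises the bar
        output.append(1 if fired else 0)
    return output


print(simulate_srm([1, 1, 1, 1, 1]))  # → [0, 0, 0, 1, 0]
```

In this run the membrane potential crosses the resting threshold on the fourth step; the raised threshold then suppresses a spike on the fifth step even though the potential is still climbing, which is how an adaptive threshold weights recent activity.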

Memristive Spiking Network Accelerates Gesture Recognition

Scientists have developed a fully integrated memristive spiking neural network (SNN) that achieves high-speed, energy-efficient processing of complex, time-sensitive data. The system incorporates a 128×24 memristor array integrated onto a CMOS chip alongside custom-designed analog neurons, enabling accelerated spatiotemporal spike signal processing with high fidelity. A key breakthrough lies in the analog neurons’ proportional time-scaling property, which allows the use of compact on-chip capacitors and direct training on spatiotemporal data. Experiments using the DVS128 Gesture dataset demonstrate the system’s capabilities, accelerating each sample 50,000-fold to a duration of just 30 microseconds.
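The two figures quoted here imply the length of an unaccelerated sample, which a few lines of arithmetic make explicit. In the sketch below, the `(timestamp_us, x, y, polarity)` event tuple layout and the `accelerate` helper are hypothetical conveniences for illustration, not an interface taken from the paper or from the dataset tooling.

```python
ACCELERATION = 50_000          # speed-up factor quoted in the article
ACCELERATED_DURATION_US = 30   # accelerated sample duration, in microseconds

# Implied duration of a sample before acceleration.
original_duration_us = ACCELERATED_DURATION_US * ACCELERATION
original_duration_s = original_duration_us / 1_000_000
print(original_duration_s)  # → 1.5


def accelerate(events, factor=ACCELERATION):
    """Compress event timestamps by `factor`, leaving pixel coordinates and
    polarity untouched. Events are (timestamp_us, x, y, polarity) tuples,
    a hypothetical layout chosen for this sketch."""
    return [(t_us / factor, x, y, p) for (t_us, x, y, p) in events]


# An event at the very end of a 1.5 s recording lands at 30 microseconds.
print(accelerate([(1_500_000, 3, 4, 1)]))  # → [(30.0, 3, 4, 1)]
```

In other words, a recording of roughly 1.5 seconds is replayed to the chip in 30 microseconds, with only the time axis rescaled.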

The hardware achieves an impressive experimental accuracy of 93.06% while maintaining a measured energy efficiency of 101.05 TSOPS/W, significantly outperforming conventional systems. This efficiency stems from the use of short voltage pulses applied to the memristors. The team’s innovative spike response model (SRM) neurons, implemented using analog circuits, are central to this performance.

By leveraging passive resistor-capacitor filters to realize temporal convolution kernels, the researchers addressed the computational complexity typically associated with SRM neurons in digital hardware. The design maintains proportionality between input spike stream timescales and neuron time constants, enabling the use of compact capacitors. Scientists project further efficiency gains through the use of picosecond-width spikes and advanced fabrication techniques, paving the way for high-throughput analysis critical for rapid-response edge applications like high-speed sensor data analysis and ultra-fast in-sensor machine vision.

Spatiotemporal Processing with Memristive Neural Networks

This research demonstrates a fully integrated memristive spiking neural network designed for rapid, energy-efficient processing of spatiotemporal data, a crucial step towards advanced edge computing. The system, built using a 180-nanometer technology, achieves high-fidelity processing of accelerated data streams, specifically the DVS128 Gesture dataset, within 44.31 microseconds, attaining an accuracy of 93.06%. This represents a 2.80-fold reduction in latency and a 1.35-fold improvement in energy efficiency compared to existing state-of-the-art implementations.

👉 More information

🗞 Fully Integrated Memristive Spiking Neural Network with Analog Neurons for High-Speed Event-Based Data Processing

🧠 ArXiv: https://arxiv.org/abs/2509.04960