Reinforcement learning from verifiable rewards (RLVR) struggles with complex reasoning tasks, particularly in areas such as theorem proving, where intermediate steps are vital but the final answer offers limited feedback. Zhen Wang, Zhifeng Gao, and Guolin Ke, all from DP Technology, address this challenge with a novel approach called Masked-and-Reordered Self-Supervision, or MR-RLVR. Inspired by techniques used in language modelling, their method extracts learning signals from the reasoning process itself, using masked prediction and step reordering to create self-supervised rewards. The team demonstrates that this process-aware training significantly improves performance on challenging mathematical benchmarks, achieving average relative gains of almost ten percent over standard RLVR on key metrics when applied to large language models, and paving the way for more robust and scalable reasoning systems.

Reasoning Enhancement via Self-Supervised Reinforcement Learning

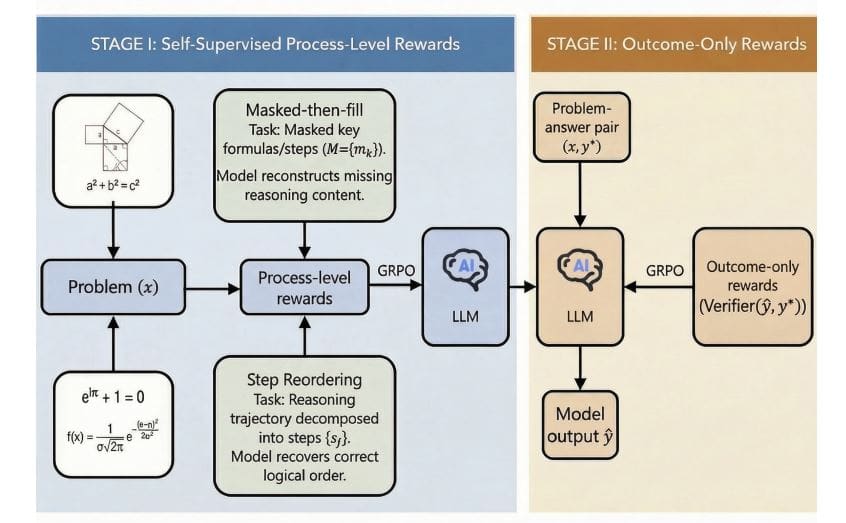

This study introduces MR-RLVR, a novel methodology for enhancing mathematical reasoning in large language models that addresses limitations of existing reinforcement learning approaches. The researchers designed a two-stage training pipeline, beginning with self-supervised learning on sampled mathematical calculation and proof data. This initial phase constructs process-level rewards through two tasks: "masked-then-fill" and "step reordering". The masked reconstruction task hides portions of an existing reasoning trace and challenges the model to recover the missing content, while the step permutation task randomly shuffles the steps and requires the model to restore their original logical order.
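The masked-then-fill task described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes a reasoning trace is already split into a list of steps, masks whole steps (the paper may mask finer-grained spans such as formulas), and scores the model with a hypothetical exact-match reward. The names `build_mask_then_fill`, `fill_reward`, and the `<MASK_k>` placeholder format are all hypothetical.

```python
import random
import re


def build_mask_then_fill(steps, num_masks, seed=0):
    """Hypothetical helper: replace `num_masks` randomly chosen steps with
    placeholder tags and return (masked_text, answers)."""
    rng = random.Random(seed)
    positions = sorted(rng.sample(range(len(steps)), num_masks))
    masked = list(steps)
    answers = {}
    for k, pos in enumerate(positions):
        tag = f"<MASK_{k}>"          # hypothetical placeholder format
        answers[tag] = steps[pos]    # ground truth the model must recover
        masked[pos] = tag
    return "\n".join(masked), answers


def _normalize(text):
    # Collapse whitespace so trivial formatting differences are not penalized.
    return re.sub(r"\s+", " ", text).strip()


def fill_reward(predictions, answers):
    """Process-level reward (assumed form): fraction of masked steps the model
    reproduced exactly after whitespace normalization."""
    if not answers:
        return 0.0
    correct = sum(
        _normalize(predictions.get(tag, "")) == _normalize(truth)
        for tag, truth in answers.items()
    )
    return correct / len(answers)


steps = [
    "Let x + 2 = 5.",
    "Subtract 2 from both sides: x = 3.",
    "Check: 3 + 2 = 5.",
]
masked_text, answers = build_mask_then_fill(steps, num_masks=1, seed=0)
```

Exact match is the simplest possible scorer; a softer choice (token overlap, or checking a masked formula for symbolic equivalence) would give denser partial credit at the cost of a more complex verifier.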

Following self-supervised training, the team fine-tuned the model with RLVR on mathematical calculation datasets whose outcomes are verifiable. This stage uses a reward function that automatically assesses the logical or factual correctness of generated solutions through numerical tolerance scoring, symbolic equivalence, or code-level tests. The RLVR objective maximizes this verifiable reward while penalizing divergence from a reference policy through a Kullback-Leibler (KL) regularization term, which stabilizes training and prevents excessive policy drift. To quantify performance, the team implemented MR-RLVR on Qwen2.5-3B and DeepSeek-R1-Distill-Qwen-1.5B.
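A rough sketch of the two ingredients just described, a verifiable outcome reward and a KL-regularized objective, is given below. It is an illustration under stated assumptions, not the paper's code: the verifier implements only numerical tolerance scoring with a whitespace-insensitive string fallback (symbolic equivalence and code-level tests are omitted), the tolerances and the default `beta=0.05` are hypothetical values, and `kl_regularized_objective` only evaluates the objective E[r] - beta * KL(pi_theta || pi_ref) from sampled responses rather than performing a policy-gradient update.

```python
import math


def _to_number(text):
    """Parse answers such as '3', '-0.5' or '7/2' into a float; None if not numeric."""
    text = text.strip().replace(",", "")
    try:
        if "/" in text:
            num, den = text.split("/", 1)
            return float(num) / float(den)
        return float(text)
    except (ValueError, ZeroDivisionError):
        return None


def verifiable_reward(prediction, reference, rel_tol=1e-6, abs_tol=1e-9):
    """Binary outcome reward: 1.0 if the final answer matches the reference.
    Numeric answers are compared within a tolerance; other answers fall back
    to whitespace-insensitive string matching (a simplifying assumption)."""
    p, r = _to_number(prediction), _to_number(reference)
    if p is not None and r is not None:
        return 1.0 if math.isclose(p, r, rel_tol=rel_tol, abs_tol=abs_tol) else 0.0
    return 1.0 if "".join(prediction.split()) == "".join(reference.split()) else 0.0


def kl_regularized_objective(rewards, logp_policy, logp_ref, beta=0.05):
    """Monte Carlo estimate of E[r] - beta * KL(pi_theta || pi_ref).

    rewards[i] is the verifiable reward of sampled response i; logp_policy[i]
    and logp_ref[i] are its per-token log-probabilities under the current and
    reference policies. Summing (log pi_theta - log pi_ref) over a response
    sampled from pi_theta is an unbiased estimate of the sequence-level KL."""
    n = len(rewards)
    mean_reward = sum(rewards) / n
    kl = sum(
        sum(lp - lr for lp, lr in zip(seq_p, seq_r))
        for seq_p, seq_r in zip(logp_policy, logp_ref)
    ) / n
    return mean_reward - beta * kl
```

For example, `verifiable_reward("7/2", "3.5")` returns `1.0` because both parse to the same number, while an unparseable expression such as `"x + 1"` is only matched against `"x+1"` textually; a real verifier would add a symbolic check for cases like `"1 + x"`.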

Masked Reordering Boosts Mathematical Reasoning Performance

In MR-RLVR, process-level rewards are calculated from the accuracy of the masked reconstructions and step reorderings, providing dense feedback during training. Applied to both Qwen2.5-3B and DeepSeek-R1-Distill-Qwen-1.5B, the method improved on standard RLVR for both models, which the authors take as evidence that process-aware self-supervision enhances RLVR's scalability and performance in settings where only final outcomes are verifiable.
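The reordering half of this reward can be sketched in the same spirit. The snippet below is a hypothetical construction, not the authors' scheme: it shuffles a list of steps, records the gold answer as the sequence of shuffled positions that restores the original order, and scores a prediction by per-position accuracy, giving zero to outputs that are not a valid permutation. The function names and the per-position scoring rule are assumptions.

```python
import random


def build_reorder_task(steps, seed=0):
    """Hypothetical helper: shuffle the steps and return (shuffled, gold).

    gold[i] is the position in `shuffled` where original step i now sits,
    so [shuffled[j] for j in gold] restores the original order."""
    rng = random.Random(seed)
    order = list(range(len(steps)))
    rng.shuffle(order)
    shuffled = [steps[i] for i in order]   # shuffled[j] = steps[order[j]]
    gold = [0] * len(steps)
    for j, i in enumerate(order):          # invert the permutation
        gold[i] = j
    return shuffled, gold


def reorder_reward(predicted, gold):
    """Assumed process-level reward: fraction of positions predicted correctly;
    anything that is not a permutation of the step indices scores 0."""
    if not gold or sorted(predicted) != list(range(len(gold))):
        return 0.0
    hits = sum(p == g for p, g in zip(predicted, gold))
    return hits / len(gold)


steps = ["Let n = 4.", "Then n^2 = 16.", "So n^2 + 1 = 17.", "Answer: 17."]
shuffled, gold = build_reorder_task(steps, seed=1)
```

Per-position accuracy gives partial credit when only some steps are misplaced; a stricter exact-match reward or a pairwise (Kendall-style) agreement score are equally plausible choices, and the sketch does not claim which one the paper uses.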

Reasoning Patterns Improve Mathematical Problem Solving

This research presents MR-RLVR, a new framework that augments reinforcement learning with process-level self-supervision to improve mathematical reasoning in large language models. Unlike traditional methods that rely solely on verifying final answers, MR-RLVR constructs training signals from the reasoning process itself, using tasks such as masked-then-fill and step reordering. These tasks encourage models to learn reusable reasoning patterns and structures rather than simply memorizing solutions. Evaluations of Qwen2.5-3B and DeepSeek-R1-Distill-Qwen-1.5B on benchmarks including AIME24, AIME25, AMC23, and MATH500 show that MR-RLVR consistently outperforms standard reinforcement learning from verifiable rewards. The results indicate that process-level self-supervision is particularly beneficial for complex problems requiring multiple reasoning steps, and that it also improves data efficiency.

The authors acknowledge that the current implementation uses fixed masking positions and shuffling schemes; future work will explore adapting these strategies dynamically during training. They also plan to extend MR-RLVR to other structured reasoning domains, such as program synthesis and theorem proving, and to multimodal reasoning tasks. Further research will focus on designing more diverse process-level tasks, including error correction, and on integrating MR-RLVR with explicit process reward models and test-time scaling techniques to further improve reasoning capabilities.

👉 More information

🗞 Masked-and-Reordered Self-Supervision for Reinforcement Learning from Verifiable Rewards

🧠 ArXiv: https://arxiv.org/abs/2511.17473