Researchers are tackling the challenge of creating truly responsive media content, moving beyond simple emotion detection to generate tailored experiences. HyeYoung Lee from Korean University and colleagues present a multi-agent AI system that produces real-time, response-oriented content triggered by emotional signals derived from audio. The work differs from conventional studies by prioritising not only recognising emotion but also responding to it safely and appropriately, using a pipeline of specialised agents that keeps generated content age-appropriate and controllable. The system achieves 73.2% emotion recognition accuracy, 89.4% response consistency, and 100% safety compliance with sub-100ms latency, which makes it a promising candidate for child-adjacent media, therapeutic tools, and emotionally intelligent smart devices.

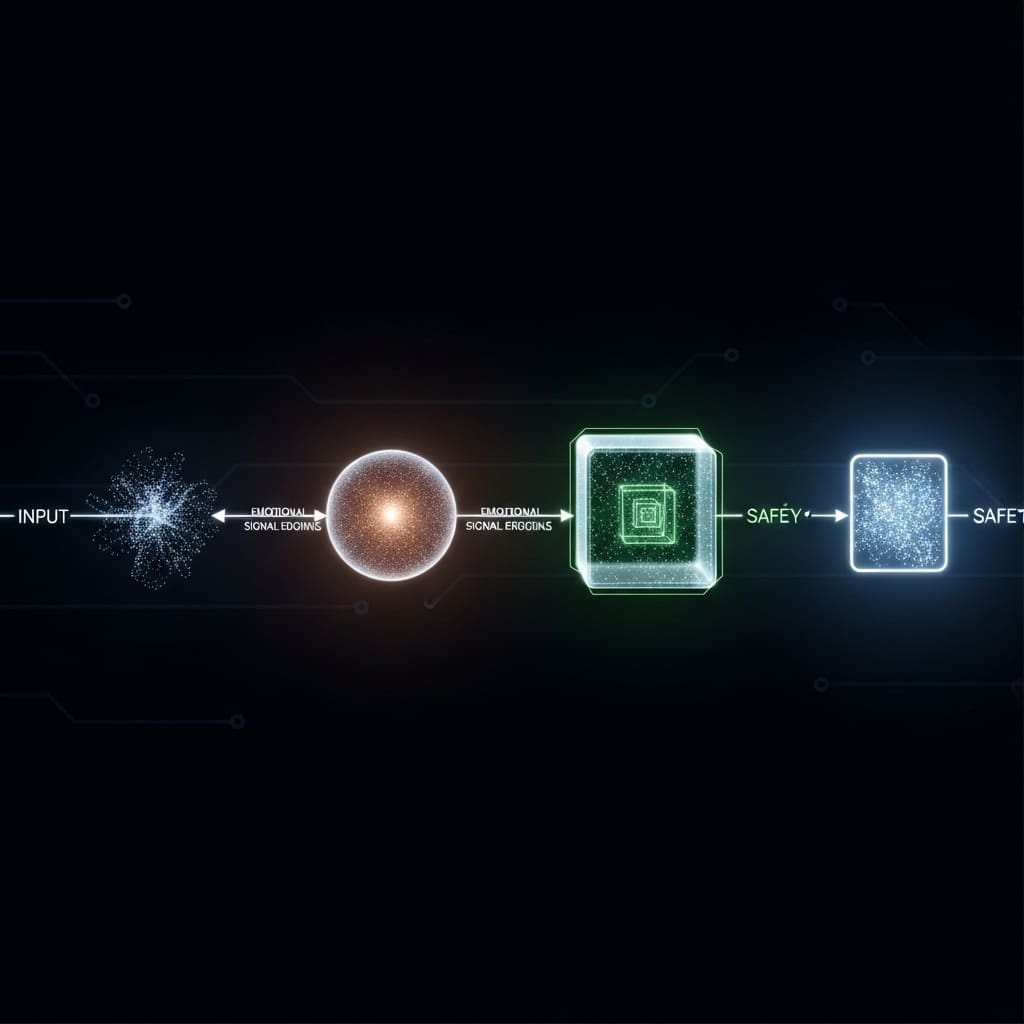

Our approach emphasises the transformation of inferred emotional states into safe, age-appropriate, and controllable response content through a structured pipeline of specialised AI agents. The proposed system consists of four cooperative agents: (1) an Emotion Recognition Agent, which employs CNN-based acoustic feature extraction; (2) a Response Policy Decision Agent, responsible for mapping recognised emotions to appropriate response modes; (3) a Content Parameter Generation Agent, which generates media control parameters; and (4) a Safety Verification Agent, which enforces age-appropriateness and stimulation constraints. An explicit safety verification loop is incorporated to filter all generated content prior to output, ensuring compliance with predefined safety and developmental guidelines.
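The four-agent pipeline and its safety verification loop can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the emotion labels, response modes, parameter names (`brightness`, `tempo`), safety limits, and the "reduce intensity and retry, then fall back" strategy are all hypothetical, and the CNN classifier is replaced by a stub that simply picks the highest-scoring label.

```python
from dataclasses import dataclass

# Hypothetical emotion -> response-mode table; the source does not list either set.
POLICY = {"happy": "celebrate", "sad": "comfort", "angry": "calm_down", "neutral": "maintain"}

# Hypothetical limits standing in for the paper's age and stimulation constraints.
MAX_BRIGHTNESS = 0.7
MAX_TEMPO = 1.2


@dataclass
class ContentParams:
    mode: str
    brightness: float  # 0.0 to 1.0
    tempo: float       # playback-speed multiplier


def recognise_emotion(scores):
    """Agent 1 (stub): a real system would run a CNN on acoustic features."""
    return max(scores, key=scores.get)


def decide_policy(emotion):
    """Agent 2: map the recognised emotion to a response mode."""
    return POLICY.get(emotion, "maintain")


def generate_params(mode, intensity):
    """Agent 3: turn a response mode into media control parameters."""
    base = {"celebrate": (0.9, 1.4), "comfort": (0.4, 0.8),
            "calm_down": (0.3, 0.7), "maintain": (0.5, 1.0)}[mode]
    return ContentParams(mode, base[0] * intensity, base[1] * intensity)


def verify(params):
    """Agent 4: accept only parameters that respect every safety limit."""
    return params.brightness <= MAX_BRIGHTNESS and params.tempo <= MAX_TEMPO


def run_pipeline(scores, max_attempts=3):
    emotion = recognise_emotion(scores)
    mode = decide_policy(emotion)
    intensity = 1.0
    for _ in range(max_attempts):
        params = generate_params(mode, intensity)
        if verify(params):
            return emotion, params
        intensity *= 0.8  # regenerate with lower stimulation and re-check
    # No candidate passed: fall back to a known-safe neutral output.
    return emotion, ContentParams("maintain", 0.5, 1.0)


if __name__ == "__main__":
    print(run_pipeline({"happy": 0.8, "sad": 0.1, "angry": 0.05, "neutral": 0.05}))
```

With scores favouring `happy`, the first two `celebrate` candidates exceed the brightness limit, so the loop lowers intensity twice before returning a candidate that passes verification. Keeping verification as a separate, final gate means no unchecked parameters can leave the pipeline, whichever upstream agent produced them.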

AI Agents for Emotion-Driven Content Generation

Scientists have developed an emotion-to-response content generation framework that explicitly separates perception, decision-making, generation, and safety verification into cooperating AI agents. The modular architecture supports interpretability and extensibility, making it applicable to child-adjacent media, therapeutic applications, and emotionally responsive smart devices. Experimental results on public datasets show that the system achieves 73.2% emotion recognition accuracy, 89.4% response consistency, and 100% safety compliance. The system incorporates four cooperative agents, each with a distinct function: an Emotion Recognition Agent, a Response Policy Decision Agent, a Content Parameter Generation Agent, and a Safety Verification Agent.

On public datasets, the system achieves 73.2% accuracy in recognising emotional cues from audio input. The Response Policy Decision Agent mapped emotions to appropriate response modes consistently in 89.4% of cases, keeping generated content emotionally congruent with the detected emotion. Crucially, the Safety Verification Agent achieved 100% compliance with the predefined safety rules, filtering content for age-appropriateness and to avoid overstimulation, a vital feature for sensitive applications. The system also maintains sub-100ms inference latency, which makes it suitable for on-device deployment and real-time response.
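The article does not say how these rates or the latency were computed. As a hedged sketch, assuming per-sample evaluation records with hypothetical field names, accuracy, response consistency (taken here to mean agreement between the chosen response mode and a reference mode for the true emotion), and safety compliance are simple proportions, and the sub-100ms claim can be checked against the slowest measured call. The two toy records are made-up inputs for illustration, not data from the paper.

```python
import time


def timed_ms(fn, *args):
    """Run fn(*args) and return (result, elapsed wall-clock time in milliseconds)."""
    start = time.perf_counter()
    result = fn(*args)
    return result, (time.perf_counter() - start) * 1000.0


def evaluate(records, latency_budget_ms=100.0):
    """Summarise hypothetical per-sample records.

    Each record is a dict with keys true_emotion, predicted_emotion,
    chosen_mode, reference_mode, passed_safety (bool) and latency_ms.
    """
    if not records:
        raise ValueError("no records to evaluate")
    n = len(records)
    return {
        # Share of samples whose predicted emotion matches the label.
        "emotion_accuracy": sum(r["predicted_emotion"] == r["true_emotion"] for r in records) / n,
        # Share of samples whose response mode matches the reference mode.
        "response_consistency": sum(r["chosen_mode"] == r["reference_mode"] for r in records) / n,
        # Share of outputs that passed the safety check.
        "safety_compliance": sum(bool(r["passed_safety"]) for r in records) / n,
        # True only if even the slowest sample stayed under the budget.
        "within_latency_budget": max(r["latency_ms"] for r in records) < latency_budget_ms,
    }


# Toy records for illustration only.
toy = [
    {"true_emotion": "sad", "predicted_emotion": "sad", "chosen_mode": "comfort",
     "reference_mode": "comfort", "passed_safety": True, "latency_ms": 42.0},
    {"true_emotion": "happy", "predicted_emotion": "neutral", "chosen_mode": "maintain",
     "reference_mode": "celebrate", "passed_safety": True, "latency_ms": 57.5},
]
print(evaluate(toy))
# {'emotion_accuracy': 0.5, 'response_consistency': 0.5, 'safety_compliance': 1.0, 'within_latency_budget': True}
```

Checking the maximum rather than the mean latency is a deliberately strict reading of "sub-100ms"; a percentile such as p95 would be a looser alternative.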

The Emotion Recognition Agent uses CNN-based acoustic feature extraction, while the Content Parameter Generation Agent produces media control parameters that let the content be adapted to the selected response mode. The explicit safety verification loop filters generated content before any output is delivered, so only content that satisfies the predefined guidelines reaches the user. The team evaluated performance on emotion recognition, response consistency, and safety compliance to validate the system. By separating perception, decision-making, generation, and safety verification into cooperating agents, the framework offers a structured approach to emotion-to-response content generation, a notable step for affective computing.
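The source states only that the Emotion Recognition Agent uses CNN-based acoustic feature extraction; it does not describe the network. As a rough illustration, assuming a log-mel spectrogram input (one list of frame values per mel band) and a single 1-D convolution layer followed by ReLU, global average pooling over time, and a linear layer with softmax, a forward pass could look like this in plain Python. The emotion labels, layer sizes, and random, untrained weights are hypothetical, so the probabilities it prints carry no meaning.

```python
import math
import random

# Hypothetical emotion classes; the source does not list the label set.
EMOTIONS = ["happy", "sad", "angry", "neutral"]


def conv1d_relu(x, weights, biases):
    """Valid (no-padding) 1-D convolution over time, then ReLU.

    x: in_channels lists of length T; weights: [out][in][k]; biases: [out].
    """
    k = len(weights[0][0])
    t_out = len(x[0]) - k + 1
    out = []
    for w_oc, b in zip(weights, biases):
        row = []
        for t in range(t_out):
            s = b
            for w_ic, x_ic in zip(w_oc, x):
                for j in range(k):
                    s += w_ic[j] * x_ic[t + j]
            row.append(max(0.0, s))
        out.append(row)
    return out


def global_avg_pool(feature_maps):
    """Average each channel over time to get a fixed-length embedding."""
    return [sum(ch) / len(ch) for ch in feature_maps]


def softmax(logits):
    m = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(spectrogram, params):
    """Return a probability per emotion for one spectrogram."""
    conv_w, conv_b, fc_w, fc_b = params
    embedding = global_avg_pool(conv1d_relu(spectrogram, conv_w, conv_b))
    logits = [sum(w * e for w, e in zip(row, embedding)) + b for row, b in zip(fc_w, fc_b)]
    return dict(zip(EMOTIONS, softmax(logits)))


def random_params(n_mels, n_filters=8, k=3, seed=0):
    """Untrained random weights, only to make the sketch runnable."""
    rng = random.Random(seed)
    conv_w = [[[rng.gauss(0, 0.1) for _ in range(k)] for _ in range(n_mels)]
              for _ in range(n_filters)]
    conv_b = [0.0] * n_filters
    fc_w = [[rng.gauss(0, 0.1) for _ in range(n_filters)] for _ in EMOTIONS]
    fc_b = [0.0] * len(EMOTIONS)
    return conv_w, conv_b, fc_w, fc_b


if __name__ == "__main__":
    rng = random.Random(1)
    spec = [[rng.random() for _ in range(20)] for _ in range(16)]  # 16 bands x 20 frames
    probs = classify(spec, random_params(n_mels=16))
    print(max(probs, key=probs.get), probs)
```

Global average pooling is what turns a variable-length clip into a fixed-size embedding, so the same classifier head works for clips of different durations; a trained model would replace `random_params` with learned weights.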

Emotional Signals Drive Real-Time Media Generation

Scientists have developed a multi-agent artificial intelligence system that generates response-oriented media content in real time, driven by audio-derived emotional signals. The approach moves beyond simple emotion classification, focusing instead on transforming detected emotional states into safe, age-appropriate, and controllable content through a structured network of cooperating, specialised agents. The system comprises four components: an Emotion Recognition Agent that uses convolutional neural networks, a Response Policy Decision Agent that maps emotions to appropriate responses, a Content Parameter Generation Agent that creates media control parameters, and a Safety Verification Agent that checks content against predefined safety and stimulation constraints. In experiments, the system achieved 73.2% emotion recognition accuracy, 89.4% response mode consistency, and, crucially, 100% compliance with its safety rules, while keeping inference latency below 100ms, low enough for potential on-device implementation.

The modular design improves interpretability and makes the system straightforward to extend, suggesting applications in child-adjacent media, therapeutic contexts, and emotionally responsive smart devices. The authors acknowledge that culturally appropriate responses may differ, which limits how well the current system generalises across diverse populations. Future research will focus on adaptive policy learning, multimodal inputs, and tailoring responses to different cultural norms. By decomposing the emotion-to-response process into distinct, verifiable stages, the researchers address challenges associated with end-to-end approaches and deliver a system that prioritises both performance and safety. The explicit verification loop is particularly noteworthy: it enforces age-appropriateness and acceptable stimulation levels in generated content, a critical feature for applications involving vulnerable users. This work lays a foundation for emotionally aware media systems in sensitive applications.

👉 More information

🗞 Emotion-to-Response Content Generation via Multi-Agent AI System with Real-Time Safety Verification

🧠 ArXiv: https://arxiv.org/abs/2601.13589