The demand for energy-efficient artificial intelligence is driving innovation in neural network architectures, and researchers are now exploring spiking neural networks as a promising alternative to conventional systems. Tamoghno Das, Khanh Phan Vu, and Hanning Chen, all from the University of California, Irvine, alongside Hyunwoo Oh and Mohsen Imani, have developed a hardware accelerator, named ASTER, designed to run spiking transformers efficiently. The work addresses key limitations of previous designs, which handle the sparse, event-driven nature of spiking networks poorly, and in doing so achieves significant reductions in energy consumption. The team’s accelerator consumes up to 467 times less energy than edge GPUs and up to 1.86 times less than previous processing-in-memory accelerators, while maintaining competitive accuracy on standard image and event-based datasets, paving the way for low-power artificial intelligence at the edge.

Spiking Transformers and Efficient Hardware Acceleration

This research details ASTER, a novel architecture designed to efficiently execute spiking transformers for event-driven vision tasks. The core achievement lies in co-optimizing the system with the sparse, binary nature of spiking neural networks, reducing memory access and maximizing data reuse. This work addresses a significant challenge: combining the power efficiency of spiking networks with the high performance of transformers, a leading architecture in computer vision. Spiking neural networks offer potential for low-power computation, particularly when paired with event-driven sensors like event cameras.

ASTER is designed to work directly with event-based sensors, eliminating the need for traditional frame-based processing. The architecture employs algorithm-hardware co-design: the hardware is engineered specifically to complement the spiking transformer algorithm. Its versatile processing elements can execute multiple types of computational layers, which reduces hardware complexity and improves efficiency. Evaluations on visual reasoning tasks demonstrate that ASTER achieves up to a 467x reduction in energy consumption compared to edge GPUs. Furthermore, the architecture delivers up to a 1.86x energy reduction over prior PIM-based spiking transformer accelerators while maintaining competitive accuracy.

These results show that spiking transformers are a promising approach for low-power vision and that algorithm-hardware co-design is crucial for achieving optimal performance. ASTER represents a viable architecture for deploying spiking transformers on edge devices, paving the way for a new generation of intelligent sensors and systems. Future work will explore more advanced spiking transformer architectures, develop more efficient memory access schemes, and investigate the use of 3D integration to further reduce power consumption. The team also plans to expand the application of ASTER to other event-driven tasks.

Sparse Spiking Transformer Acceleration with RRAM PIM

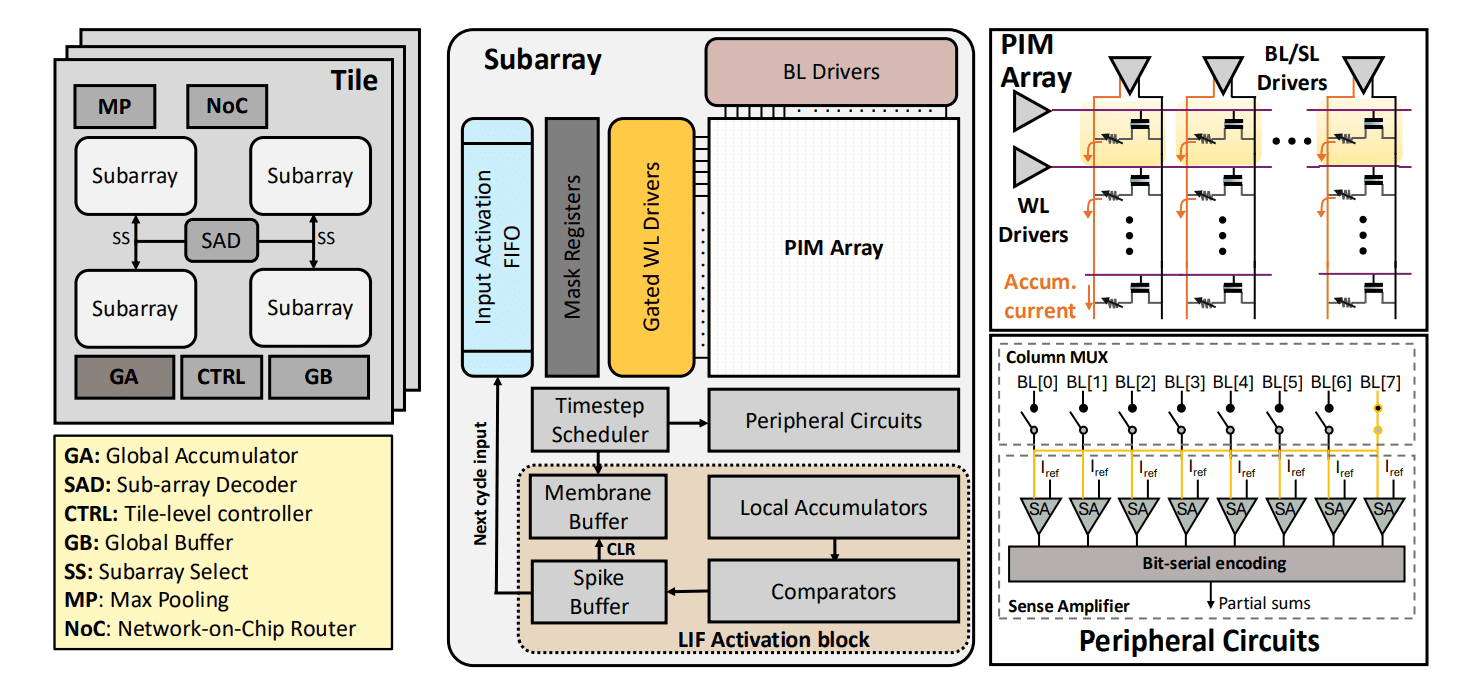

Scientists engineered ASTER, a novel hardware accelerator for spiking transformers, addressing limitations of conventional digital hardware when processing sparse, event-driven data. Recognizing the energy inefficiency of traditional processing, they developed a memory-centric architecture leveraging Processing-in-Memory (PIM) with RRAM-based crossbars. This design confines energy-intensive computation to the subarray level while sharing low-duty-cycle digital logic at the tile level, creating an efficient pipeline that fully exploits input sparsity. The team implemented a programmable wordline activation scheme, selectively activating only those subarrays containing relevant input spikes.
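The idea of activating only the subarrays that receive spikes can be illustrated in software. The following is a minimal sketch, not the ASTER hardware design: it models a crossbar as a plain weight matrix split into fixed-size row groups ("subarrays") and skips any group whose inputs contain no spikes. The function name `gated_crossbar_matvec` and the parameter `rows_per_subarray` are hypothetical names chosen for this illustration.

```python
def gated_crossbar_matvec(weights, spikes, rows_per_subarray):
    """Binary-spike matrix-vector product that skips idle subarrays.

    weights: list of rows; weights[i][j] couples input i to output j.
    spikes:  list of 0/1 input spikes, one per weight row.
    Returns (outputs, number_of_subarrays_activated).
    """
    n_rows = len(weights)
    n_cols = len(weights[0])
    outputs = [0.0] * n_cols
    activated = 0
    for start in range(0, n_rows, rows_per_subarray):
        block = spikes[start:start + rows_per_subarray]
        if not any(block):
            continue  # no input spikes: this subarray is never activated
        activated += 1
        for offset, spike in enumerate(block):
            if spike:
                # Binary inputs turn multiplication into plain accumulation.
                row = weights[start + offset]
                for j in range(n_cols):
                    outputs[j] += row[j]
    return outputs, activated


weights = [[1, 2], [3, 4], [5, 6], [7, 8]]
spikes = [0, 0, 1, 0]
outputs, activated = gated_crossbar_matvec(weights, spikes, rows_per_subarray=2)
print(outputs, activated)  # [5.0, 6.0] 1
```

In this toy input only one of the two subarrays is touched, which is the software analogue of leaving the other subarray's wordlines inactive.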

This selective activation improves significantly upon prior methods that relied on coarse bank gating or large AER (Address-Event Representation) buffers. Complementing this hardware innovation, the researchers developed two software optimizations that exploit the inherent sparsity of spiking transformers: task-aware adaptive layer skipping and confidence-based dynamic timestep reduction. Layer skipping profiles spike activity across layers to identify and bypass those with consistently low firing rates, eliminating unnecessary computations during inference. This method uses a Task-Aware Firing Threshold (TAFT) to determine which layers to skip, ensuring compatibility with pre-trained architectures and minimizing runtime overhead.
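A minimal sketch of threshold-based layer skipping follows. It assumes that firing rates have already been profiled per layer on validation samples and that "consistently low" means the rate stays below the threshold on every profiled sample; that reading, the function names, and the profile values are assumptions made for illustration and are not taken from the paper.

```python
def select_layers_to_skip(firing_rate_profile, taft):
    """Return layers whose firing rate stays below `taft` on every sample.

    firing_rate_profile: dict mapping layer name to a list of firing rates
    (fraction of neurons that spiked), one entry per profiled sample.
    """
    return [
        name
        for name, rates in firing_rate_profile.items()
        if rates and max(rates) < taft
    ]


def run_with_skipping(layers, x, skip):
    """Apply (name, function) layers in order, bypassing the skipped ones."""
    for name, layer_fn in layers:
        if name in skip:
            continue  # input passes through unchanged, as on a residual path
        x = layer_fn(x)
    return x


# Hypothetical profile: only "attn6" is below 5% on every sample.
profile = {
    "attn0": [0.20, 0.30],
    "attn6": [0.01, 0.03],
    "attn7": [0.02, 0.08],
}
skip = set(select_layers_to_skip(profile, taft=0.05))
print(skip)  # {'attn6'}
```

Because the skip set is computed once from the profile, the per-inference cost is a single membership check per layer, which keeps runtime overhead small.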

They also developed a confidence-based dynamic timestep reduction technique, which controls the number of timesteps at runtime based on prediction confidence. To optimize the selection of TAFT and a Confidence-Based Exit Threshold (CBET), the research team formulated a bi-objective optimization problem, seeking Pareto-optimal pairs that maximize both task accuracy and energy efficiency. Using Bayesian Optimization with a Gaussian Process surrogate model, the team efficiently explored the design space and identified configurations that lie on the Pareto frontier, providing deployment-ready thresholds tailored to specific task constraints and priorities. The team demonstrated substantial gains, achieving up to 467x and 1.86x energy reduction compared to edge GPUs and previous PIM accelerators, respectively, while maintaining competitive accuracy on image and event-based classification tasks.
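To make "Pareto-optimal pairs" concrete, the sketch below filters a set of already-evaluated (TAFT, CBET) configurations down to the non-dominated ones. It covers only this final selection step: it does not implement Bayesian Optimization or a Gaussian Process surrogate, and the accuracy and energy figures are made-up placeholder values, not results from the paper.

```python
def pareto_front(candidates):
    """Keep configurations not dominated by any other.

    Each candidate is (taft, cbet, accuracy, energy); higher accuracy and
    lower energy are better. A candidate is dominated if another one is at
    least as good on both objectives and strictly better on one.
    """
    front = []
    for c in candidates:
        dominated = any(
            o[2] >= c[2] and o[3] <= c[3] and (o[2] > c[2] or o[3] < c[3])
            for o in candidates
        )
        if not dominated:
            front.append(c)
    return front


# Placeholder evaluations: (taft, cbet, accuracy, energy in arbitrary units).
evaluated = [
    (0.02, 0.90, 0.80, 10.0),
    (0.05, 0.80, 0.78, 6.0),
    (0.05, 0.90, 0.77, 7.0),  # worse than the previous entry on both axes
    (0.10, 0.70, 0.70, 4.0),
]
for taft, cbet, acc, energy in pareto_front(evaluated):
    print(taft, cbet, acc, energy)
```

A deployment would then pick one point from this front according to its own accuracy floor or energy budget.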

ASTER Accelerates Spiking Transformer Efficiency Dramatically

The research team developed ASTER, a novel hardware accelerator designed to dramatically improve the efficiency of spiking transformers, a promising architecture for low-power visual processing. This work addresses a key limitation of spiking neural networks, their architectural mismatch with conventional digital hardware, by creating a memory-centric accelerator optimized for event-driven frameworks. The core of ASTER is a hybrid analog-digital Processing-in-Memory (PIM) architecture that natively executes spike-driven attention and MLP layers, capitalizing on temporal sparsity and binary encoding for efficient computation. Experiments demonstrate that ASTER achieves up to a 467x reduction in energy consumption compared to edge GPUs, specifically the Jetson Orin Nano, when running image classification tasks on the ImageNet dataset.

Furthermore, the accelerator delivers a 1.86x energy reduction compared to existing PIM accelerators designed for spiking transformers, while maintaining competitive accuracy on ImageNet. These gains are achieved through a combination of hardware innovations and software optimizations, including sparsity-aware model optimizations such as task-aware adaptive layer skipping and confidence-based dynamic timestep reduction. Layer skipping selectively bypasses attention blocks with low spike activity, identified during a preliminary validation phase, eliminating unnecessary computations. Confidence-based timestep reduction dynamically adjusts the number of processing steps based on prediction confidence, allowing simpler inputs to be processed more quickly.
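Confidence-based timestep reduction can be sketched as an early-exit loop. The version below assumes the classifier emits one logit vector per timestep and uses the top softmax probability of the running mean as the confidence score; both the confidence measure and the function names are assumptions for illustration, since the article does not specify how confidence is computed.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify_with_early_exit(per_timestep_logits, cbet):
    """Stop consuming timesteps once confidence reaches `cbet`.

    per_timestep_logits: iterable of logit vectors, one per timestep.
    Returns (predicted_class, timesteps_used).
    """
    accumulated = None
    used = 0
    for step_logits in per_timestep_logits:
        used += 1
        if accumulated is None:
            accumulated = list(step_logits)
        else:
            accumulated = [a + b for a, b in zip(accumulated, step_logits)]
        mean_logits = [a / used for a in accumulated]
        if max(softmax(mean_logits)) >= cbet:
            break  # confident enough: remaining timesteps are not consumed
    if accumulated is None:
        raise ValueError("at least one timestep is required")
    predicted = max(range(len(accumulated)), key=accumulated.__getitem__)
    return predicted, used


logits = [[0.1, 0.0], [3.0, 0.0], [3.0, 0.0], [3.0, 0.0]]
print(classify_with_early_exit(logits, cbet=0.8))   # (0, 2)
print(classify_with_early_exit(logits, cbet=0.99))  # (0, 4)
```

Passing a generator as `per_timestep_logits` means later timesteps are never computed after an early exit, which is where the energy saving would come from in this software model.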

Analysis of the SDT-8-512 model revealed that deeper self-attention layers exhibit high sparsity, and the team demonstrated that layers with firing rates below 5% contribute negligibly to classification. Per-class analysis on the CIFAR10-DVS dataset showed that the number of timesteps required for accurate classification varies significantly, enabling application-specific threshold tuning. The team utilized Bayesian Optimization to co-design the algorithmic and microarchitectural aspects of the system, maximizing efficiency under tight constraints. These combined innovations enable a new class of intelligent, ubiquitous edge AI capable of real-time visual processing at the extreme edge.

ASTER Accelerates Spiking Transformers for Efficient Vision

This work presents ASTER, a novel hardware accelerator designed to efficiently execute spiking transformers for event-driven vision tasks. The research addresses the challenges of deploying these bio-inspired networks on conventional hardware by leveraging a hybrid analog-digital Processing-in-Memory (PIM) architecture.

👉 More information

🗞 ASTER: Attention-based Spiking Transformer Engine for Event-driven Reasoning

🧠 ArXiv: https://arxiv.org/abs/2511.06770