Knowledge distillation offers a powerful way to train smaller, more efficient artificial intelligence models by leveraging the knowledge of larger, more complex “teacher” models, but finding the best teacher for a specific task traditionally demands extensive and costly experimentation. Abhishek Panigrahi from Princeton University, Bingbin Liu from Harvard University, Sadhika Malladi from Microsoft Research, and colleagues now present GRACE, a score that efficiently predicts how well a teacher will transfer knowledge to a “student”. GRACE analyzes the distributional properties of the student’s gradients on teacher-generated data, without requiring external verification data or access to the teacher’s internals. Its scores correlate strongly with student performance on challenging mathematical reasoning tasks, and choosing a teacher with GRACE can improve student performance by up to 7.4% compared with simply selecting the best-performing teacher, offering practical guidance for key distillation design choices.

Teacher Quality Drives Student Mathematical Reasoning

This research investigates how best to train a smaller language model, the “student,” using the outputs of a larger, more capable “teacher” model, with a specific focus on mathematical problem solving. The study shows that simply using a larger teacher does not guarantee a better student; the quality of the teacher’s reasoning, and how well it suits the student, matters more. The researchers also report a correlation between the length of the teacher’s responses and student performance, with more detailed explanations often indicating a better teacher. To quantify teacher quality, the team compares gradient-based scores, including G-Norm and its proposed GRACE, which are computed from the student’s gradients on teacher-generated responses rather than by checking the correctness of each reasoning step. The research also shows that the optimal teacher can vary with the mathematical dataset, so teacher selection should be tailored to the task at hand. A qualitative analysis of responses revealed differences in reasoning style and clarity of explanation between teacher models.

Gradients Reveal Teacher-Student Alignment Efficiency

This study introduces GRACE, a novel score designed to efficiently identify the most effective teacher model for knowledge distillation in large language models. The researchers hypothesized that a strong-performing teacher does not always yield the best student, which calls for a way to evaluate teacher-student compatibility. GRACE therefore analyzes the distributional properties of the student model’s gradients, without requiring access to external verification data, teacher internals, or test datasets. The method computes the student’s gradients on a small set of teacher-generated data and then uses a cross-validation structure to assess both data diversity and teacher-student alignment.
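The cross-validation structure can be pictured generically: split the per-example gradient vectors into k folds, compute a statistic that compares each held-out fold with the remaining folds, and average the results. This is a minimal pure-Python sketch under stated assumptions; `cross_validated_score` and the toy `mean_alignment` statistic are hypothetical illustrations, not GRACE’s actual formula, which the article does not spell out.

```python
import random
from typing import Callable, List, Sequence

Vector = List[float]


def k_fold_indices(n: int, k: int, seed: int = 0) -> List[List[int]]:
    """Shuffle the indices 0..n-1 and deal them into k roughly equal folds."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def cross_validated_score(
    grads: Sequence[Vector],
    k: int,
    statistic: Callable[[List[Vector], List[Vector]], float],
    seed: int = 0,
) -> float:
    """Average statistic(held_in, held_out) over k folds of the gradient vectors."""
    if not 2 <= k <= len(grads):
        raise ValueError("need 2 <= k <= number of gradient vectors")
    scores = []
    for fold in k_fold_indices(len(grads), k, seed):
        held_out_ids = set(fold)
        held_in = [g for i, g in enumerate(grads) if i not in held_out_ids]
        held_out = [grads[i] for i in fold]
        scores.append(statistic(held_in, held_out))
    return sum(scores) / len(scores)


def mean_alignment(held_in: List[Vector], held_out: List[Vector]) -> float:
    """Hypothetical toy statistic: average dot product between each held-out
    gradient and the mean of the held-in gradients."""
    dim = len(held_in[0])
    mu = [sum(g[j] for g in held_in) / len(held_in) for j in range(dim)]
    return sum(sum(a * b for a, b in zip(g, mu)) for g in held_out) / len(held_out)


# Usage with made-up 2-D "gradients"; k = len(grads) gives leave-one-out.
grads = [[1.0, 0.2], [0.9, 0.1], [1.1, -0.1], [0.8, 0.0]]
print(cross_validated_score(grads, k=len(grads), statistic=mean_alignment))
```

Setting `k` equal to the number of gradient vectors turns the harness into leave-one-out cross-validation; any other held-in versus held-out statistic can be plugged in through the `statistic` argument.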

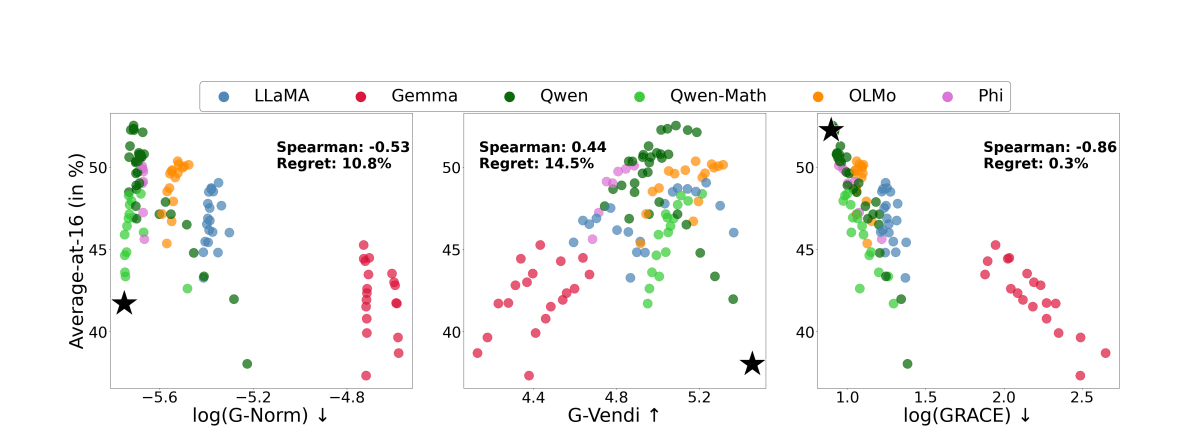

This process allows GRACE to quantify how well a teacher’s outputs facilitate the student’s learning. The team also establishes a theoretical connection between GRACE and generalization bounds based on conditional mutual information, which helps explain its effectiveness. Experiments focused on mathematical reasoning with the GSM8K and MATH datasets. The researchers trained LLaMA-1B-Base, OLMo-1B-Base, Gemma-2B-Base, and LLaMA-3B-Base as student models on generations from each of 15 candidate teachers drawn from the LLaMA, OLMo, Qwen, Gemma, and Phi families. GRACE scores correlated strongly with student performance and outperformed baseline scores such as G-Vendi.

Notably, selecting teachers with GRACE improved student performance by up to 7.4% on GSM8K and 2% on MATH compared with using the best-performing teacher directly. The study also shows that GRACE gives actionable guidance on key distillation design choices, including the generation temperature, teacher selection under a size constraint, and the choice of the best teacher within a specific model family.
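Each of these decisions reduces to filtering a set of candidates and keeping the best score. A minimal sketch, assuming each candidate is a (teacher, sampling temperature) pair with a precomputed score; the `Candidate` class, the teacher names, sizes and score values are all hypothetical, and because the article does not say whether a higher or lower GRACE value is better, the direction is a parameter.

```python
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class Candidate:
    teacher: str        # hypothetical teacher identifier
    params_b: float     # teacher size in billions of parameters
    temperature: float  # sampling temperature used to generate distillation data
    score: float        # precomputed teacher-quality score for this pair


def select_candidate(
    candidates: Iterable[Candidate],
    max_params_b: Optional[float] = None,
    higher_is_better: bool = True,
) -> Candidate:
    """Return the best-scoring candidate, optionally limited to teachers no
    larger than max_params_b. The score direction is an assumption."""
    pool = [c for c in candidates
            if max_params_b is None or c.params_b <= max_params_b]
    if not pool:
        raise ValueError("no candidate satisfies the size constraint")
    pick = max if higher_is_better else min
    return pick(pool, key=lambda c: c.score)


# Made-up scores for two hypothetical teachers at two temperatures each.
cands = [
    Candidate("teacher-A", 8.0, 0.6, 0.71),
    Candidate("teacher-A", 8.0, 1.0, 0.78),
    Candidate("teacher-B", 3.0, 0.6, 0.65),
    Candidate("teacher-B", 3.0, 1.0, 0.69),
]
best = select_candidate(cands)                     # unconstrained choice
small = select_candidate(cands, max_params_b=4.0)  # under a 4B size budget
print(best.teacher, best.temperature, small.teacher, small.temperature)
```

Restricting the list to a single model family before calling `select_candidate` handles the within-family choice the same way.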

Gradient Alignment Predicts Distillation Performance Accurately

Scientists have developed a new method, GRACE, to efficiently identify the most effective “teacher” language model for training a smaller “student” model, significantly improving distillation performance. This work addresses the challenge of selecting the optimal teacher from a range of available options, a process that traditionally requires extensive and costly trial and error. GRACE quantifies teacher effectiveness by analyzing the distributional properties of the student’s gradients, without requiring access to verification data, teacher internals, or test datasets. Experiments show a strong correlation, with a Spearman correlation of up to 0.86, between GRACE scores and the performance of distilled LLaMA and OLMo student models on the GSM8K and MATH benchmarks.
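A Spearman correlation compares rankings rather than raw values: teachers are ranked by their score, the resulting distilled students are ranked by accuracy, and the Pearson correlation of the two rank vectors is taken. The sketch below is a plain-Python version that gives tied values their average rank; the five scores and accuracies in the usage lines are invented for illustration and are not results from the paper.

```python
from typing import List, Sequence


def average_ranks(values: Sequence[float]) -> List[float]:
    """Rank values 1..n, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j over the run of values tied with values[order[i]].
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # ranks are 1-based
        for t in range(i, j + 1):
            ranks[order[t]] = avg
        i = j + 1
    return ranks


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman(x: Sequence[float], y: Sequence[float]) -> float:
    """Spearman rank correlation: Pearson correlation of the average ranks."""
    return pearson(average_ranks(x), average_ranks(y))


# Invented teacher scores and distilled-student accuracies (percent).
scores = [0.2, 0.5, 0.1, 0.9, 0.4]
accuracy = [41.0, 47.5, 39.0, 52.0, 48.0]
print(round(spearman(scores, accuracy), 3))  # -> 0.9
```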

Using a teacher selected by GRACE improved student performance by up to 7.4% on GSM8K and 2% on MATH, compared with simply choosing the best-performing teacher. The team trained LLaMA-1B-Base, OLMo-1B-Base, Gemma-2B-Base, and LLaMA-3B-Base on generations from 15 candidate teachers across the LLaMA, OLMo, Qwen, Gemma, and Phi families. GRACE also offers actionable insights for optimizing the distillation process, including the best generation temperature for a given teacher, the best teacher under a size constraint, and the most suitable teacher within a specific model family. The method computes the student’s gradients on teacher-generated responses, compresses them with a low-dimensional projection, rescales them according to response length, and then computes a score from the mean and second moment of these processed gradients. This design balances data diversity against teacher-student alignment, giving a robust and efficient tool for language model distillation.
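The processing steps of low-dimensional projection, length rescaling, and first and second moments can be sketched in pure Python. Several details here are assumptions rather than the paper’s specification: the projection is a Gaussian random projection, rescaling divides each projected gradient by the number of response tokens, and `alignment_ratio` (squared norm of the mean divided by the trace of the second moment) is a hypothetical way to combine the two moments, not the GRACE formula, which the article does not give.

```python
import math
import random
from typing import List, Sequence, Tuple

Vector = List[float]
Matrix = List[List[float]]


def random_projection(dim_in: int, dim_out: int, seed: int = 0) -> Matrix:
    """Gaussian random matrix (dim_out x dim_in) scaled by 1/sqrt(dim_out),
    so squared norms are preserved in expectation."""
    rng = random.Random(seed)
    scale = 1.0 / math.sqrt(dim_out)
    return [[rng.gauss(0.0, 1.0) * scale for _ in range(dim_in)]
            for _ in range(dim_out)]


def preprocess(grads: Sequence[Vector], lengths: Sequence[int], proj: Matrix) -> List[Vector]:
    """Project each per-response gradient, then divide by response length (assumed rescaling)."""
    out = []
    for g, n_tokens in zip(grads, lengths):
        projected = [sum(p * x for p, x in zip(row, g)) for row in proj]
        out.append([v / n_tokens for v in projected])
    return out


def moments(vs: Sequence[Vector]) -> Tuple[Vector, Matrix]:
    """Empirical mean vector and uncentered second-moment matrix E[v v^T]."""
    n, d = len(vs), len(vs[0])
    mean = [sum(v[j] for v in vs) / n for j in range(d)]
    second = [[sum(v[a] * v[b] for v in vs) / n for b in range(d)] for a in range(d)]
    return mean, second


def alignment_ratio(mean: Vector, second: Matrix) -> float:
    """Hypothetical scalar in [0, 1]: ||mean||^2 / trace(second moment)."""
    trace = sum(second[i][i] for i in range(len(second)))
    return sum(m * m for m in mean) / trace if trace > 0 else 0.0


# Usage with made-up 6-dimensional gradients for three responses.
proj = random_projection(dim_in=6, dim_out=3, seed=0)
grads = [[0.5, -1.0, 0.2, 0.0, 0.3, 0.1],
         [0.4, -0.8, 0.1, 0.1, 0.2, 0.0],
         [0.6, -1.2, 0.3, -0.1, 0.4, 0.2]]
mean, second = moments(preprocess(grads, lengths=[120, 95, 140], proj=proj))
print(alignment_ratio(mean, second))
```

The ratio never exceeds 1 because the squared norm of an average is at most the average squared norm; values near 1 mean the rescaled gradients point the same way (strong alignment, low diversity), and values near 0 mean they spread out.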

GRACE Accurately Predicts Distillation Performance Gains

This research introduces GRACE, a novel score that efficiently evaluates the suitability of a “teacher” model for knowledge distillation, the process used to train smaller, more efficient “student” models. The team shows that GRACE accurately predicts distillation performance: on challenging tasks, choosing teachers with GRACE improves student performance by up to 7.4% over naively picking the best-performing teacher. GRACE analyzes the distributional properties of the student’s gradients and requires no verification data, teacher internals, or test data, making it computationally lightweight. The significance of this work lies in its ability to guide crucial decisions in the distillation process, including the sampling temperature and teacher selection under size constraints. Importantly, the researchers establish a theoretical connection between GRACE and leave-one-out conditional mutual information, a quantity linked to generalization performance, suggesting that GRACE captures the stability of the optimization process.

👉 More information

🗞 In Good GRACEs: Principled Teacher Selection for Knowledge Distillation

🧠 ArXiv: https://arxiv.org/abs/2511.02833