Evaluating the effectiveness of large language models (LLMs) in education requires rigorous testing, and a new study addresses this need. Langdon Holmes from Vanderbilt University, Adam Coscia from Georgia Institute of Technology, and Scott Crossley from Vanderbilt University, alongside colleagues including Joon Suh Choi and Wesley Morris, all affiliated with the ASPIRE program, present a systematic method for assessing LLM prompt quality. Their research analyses LLM-generated questions during structured learning dialogues and establishes a tournament-style evaluation framework utilising the Glicko2 rating system and human judges. The team found that a template focused on strategic reading consistently outperformed the others, with win rates between 81% and 100%. The result points to the potential of such prompts to foster metacognitive skills like self-directed learning, and offers a pathway towards evidence-based design in educational technology.

This work moves beyond simply documenting prompt text, instead focusing on a replicable and resource-efficient evaluation framework to determine which prompting strategies are most effective for educational contexts. The team employed a tournament-style evaluation, utilising the Glicko2 rating system, with eight judges assessing question pairs across three key dimensions: format, dialogue support, and appropriateness for learners.
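To make the rating step concrete, the following is a minimal sketch of a single Glicko-2 rating-period update in Python. It follows Glickman's published description of the algorithm; the article does not report the study's own configuration, so the function name `glicko2_update`, the system constant `tau=0.5`, and the starting values in the usage note are assumptions for illustration only. In a prompt tournament, each "player" would be a prompt template and each "game" a judge's preference between two generated questions.

```python
import math

SCALE = 173.7178  # conversion factor between the Glicko and Glicko-2 scales


def g(phi):
    """Dampening factor: discounts results against opponents with uncertain ratings."""
    return 1.0 / math.sqrt(1.0 + 3.0 * phi ** 2 / math.pi ** 2)


def expected(mu, mu_j, phi_j):
    """Expected score against an opponent with rating mu_j and deviation phi_j."""
    return 1.0 / (1.0 + math.exp(-g(phi_j) * (mu - mu_j)))


def glicko2_update(rating, rd, vol, results, tau=0.5, eps=1e-6):
    """Update one competitor after a rating period.

    results: list of (opponent_rating, opponent_rd, score), score in {1, 0.5, 0}.
    Returns the new (rating, rd, volatility).
    """
    mu, phi = (rating - 1500.0) / SCALE, rd / SCALE
    if not results:
        # No comparisons this period: only the uncertainty grows.
        return rating, SCALE * math.sqrt(phi ** 2 + vol ** 2), vol

    v_inv, total = 0.0, 0.0
    for opp_rating, opp_rd, score in results:
        mu_j, phi_j = (opp_rating - 1500.0) / SCALE, opp_rd / SCALE
        e = expected(mu, mu_j, phi_j)
        v_inv += g(phi_j) ** 2 * e * (1.0 - e)
        total += g(phi_j) * (score - e)
    v = 1.0 / v_inv          # estimated variance of the rating from these results
    delta = v * total        # estimated rating improvement

    # Solve for the new volatility with the Illinois variant of regula falsi.
    a = math.log(vol ** 2)

    def f(x):
        ex = math.exp(x)
        num = ex * (delta ** 2 - phi ** 2 - v - ex)
        den = 2.0 * (phi ** 2 + v + ex) ** 2
        return num / den - (x - a) / tau ** 2

    A = a
    if delta ** 2 > phi ** 2 + v:
        B = math.log(delta ** 2 - phi ** 2 - v)
    else:
        k = 1
        while f(a - k * tau) < 0:
            k += 1
        B = a - k * tau
    fA, fB = f(A), f(B)
    while abs(B - A) > eps:
        C = A + (A - B) * fA / (fB - fA)
        fC = f(C)
        if fC * fB <= 0:
            A, fA = B, fB
        else:
            fA /= 2.0
        B, fB = C, fC
    new_vol = math.exp(A / 2.0)

    # Inflate the deviation by the new volatility, then shrink it using the evidence.
    phi_star = math.sqrt(phi ** 2 + new_vol ** 2)
    new_phi = 1.0 / math.sqrt(1.0 / phi_star ** 2 + 1.0 / v)
    new_mu = mu + new_phi ** 2 * total
    return SCALE * new_mu + 1500.0, SCALE * new_phi, new_vol
```

As a check, calling `glicko2_update(1500, 200, 0.06, [(1400, 30, 1), (1550, 100, 0), (1700, 300, 0)])` reproduces the worked example from Glickman's description of the system: the rating drops to roughly 1464 and the deviation narrows to roughly 152. These inputs are not data from the study.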

The study leveraged data from 120 authentic user interactions across three distinct educational deployments to ensure the findings were grounded in real-world application. Six prompt templates, each incorporating established prompt engineering patterns and distinct pedagogical strategies, were rigorously compared. This innovative methodology allows educational technology researchers to systematically evaluate and refine prompt designs, moving the field towards evidence-based development for AI-powered educational applications. Results revealed that a single prompt, focused on strategic reading, significantly outperformed all other templates, achieving win probabilities ranging from 81% to 100% in pairwise comparisons.
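The article does not say how the reported win probabilities were derived from the ratings. One common approach, sketched below in Python, is the expected-outcome formula from the Glicko family, which combines the rating deviations of both competitors so that uncertain ratings pull the prediction towards 50%. The function name `win_probability` and the ratings in the usage note are hypothetical and are not figures from the study.

```python
import math

Q = math.log(10) / 400.0  # scaling constant on the familiar 1500-centred scale


def g(rd):
    """Dampening factor: approaches 1 as rating uncertainty (rd) approaches 0."""
    return 1.0 / math.sqrt(1.0 + 3.0 * Q ** 2 * rd ** 2 / math.pi ** 2)


def win_probability(r_a, rd_a, r_b, rd_b):
    """Estimated probability that template A is preferred over template B.

    Assumption: uses the Glicko expected-outcome formula with the two
    rating deviations combined in quadrature.
    """
    combined_rd = math.sqrt(rd_a ** 2 + rd_b ** 2)
    return 1.0 / (1.0 + 10 ** (-g(combined_rd) * (r_a - r_b) / 400.0))


# Hypothetical ratings for two prompt templates (not values from the study).
p = win_probability(1700, 60, 1500, 60)
print(f"P(A preferred over B) = {p:.2f}")
```

Under this formula, two templates with equal ratings always come out at 50%, and the same rating gap yields a less extreme probability when either rating is based on few comparisons.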

This high-performing prompt combined persona and context manager patterns, specifically designed to support metacognitive learning strategies such as self-directed learning. The research indicates that carefully crafted prompts can substantially enhance the quality of LLM-generated educational content, fostering more effective and personalised learning experiences. By systematically evaluating prompt performance, the team provides a generalisable approach applicable to a wide range of educational applications, including chatbots, automated feedback systems, and interactive learning materials. The findings point to a pathway for optimising LLM interactions to better align with pedagogical principles and improve student outcomes.

Furthermore, the study highlights the importance of moving beyond simple prompt documentation towards a formal evaluation process, enabling researchers to build upon previous work and identify the most effective prompting strategies for diverse educational contexts. The methodology offers a valuable resource for the educational technology community, providing a framework for systematically improving prompt designs and ensuring that AI-powered interventions are both effective and pedagogically sound. It opens possibilities for creating more adaptive educational tools that cater to individual learner needs and promote deeper understanding. This work moves beyond ad-hoc prompt engineering towards evidence-based development for educational technology, offering a replicable methodology for improving LLM performance.

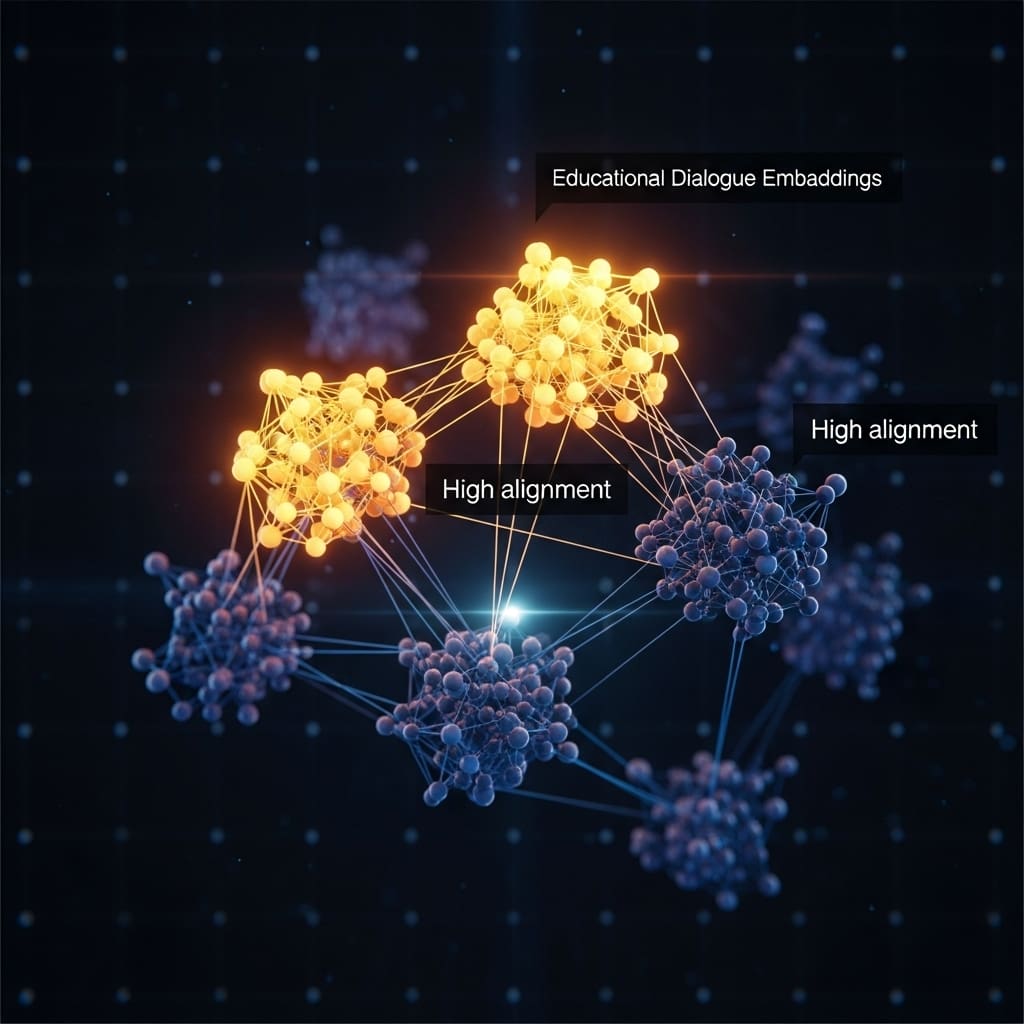

Data was collected from 120 authentic user interactions across three separate educational deployments, providing a robust foundation for analysis. Experiments revealed that a single prompt template, focused on strategic reading, consistently outperformed all others in pairwise comparisons. Win probabilities for this template ranged from 81% to 100%, demonstrating a substantial advantage over alternative designs. The successful template combined persona and context manager patterns, specifically engineered to support metacognitive learning strategies like self-directed learning. Researchers meticulously evaluated question pairs using the Glicko2 rating system, with eight judges assessing format, dialogue support, and learner appropriateness, ensuring a comprehensive and nuanced evaluation.

The team measured performance across three key dimensions: format, dialogue support, and appropriateness for learners, utilising a tournament-style evaluation framework. Judges consistently favoured the strategic reading prompt, indicating its superior ability to generate effective and pedagogically sound follow-up questions. Data analysis confirmed the robustness of these findings, with the winning template maintaining high win probabilities across all tested scenarios. This systematic evaluation methodology allows educational technology researchers to rigorously assess and refine prompt designs, moving beyond subjective judgements.

Results demonstrate the value of combining persona and context management within LLM prompts to enhance metacognitive learning. The strategic reading template's success highlights the importance of aligning prompt design with specific pedagogical goals, and suggests that this approach can significantly improve the quality and effectiveness of LLM-generated educational content. The findings also indicate that the Glicko2 rating system, coupled with expert judges, provides a reliable and valid method for evaluating prompt performance in educational contexts. Together, these results deliver a generalisable framework for optimising LLMs in education.

👉 More information

🗞 LLM Prompt Evaluation for Educational Applications

🧠 ArXiv: https://arxiv.org/abs/2601.16134