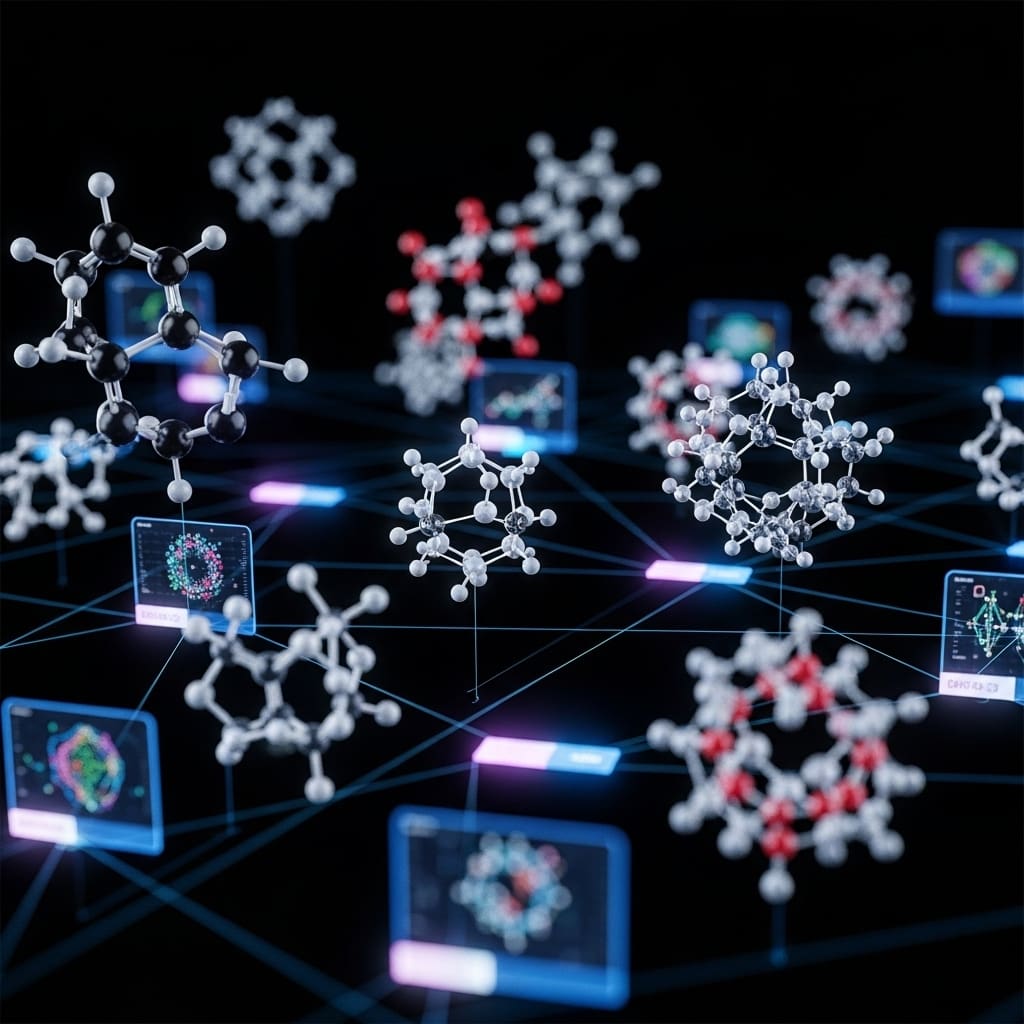

Accurately answering complex biomedical questions depends on retrieving the right evidence, and researchers are now investigating how best to bring visual data into that process. Primož Kocbek from the University of Maribor, Azra Frkatović-Hodžić and Dora Lalić from Genos Ltd, Vivian Hui from The Hong Kong Polytechnic University, Gordan Lauc from the University of Zagreb and Genos Ltd, and Gregor Štiglic from the University of Maribor and the Usher Institute, University of Edinburgh, have compared strategies for combining text and images in multi-modal retrieval-augmented generation (RAG). Their work focuses on glycobiology, a field rich in visual data, and shows that converting figures and tables into text improves accuracy for smaller models, while advanced models benefit from processing visual information directly, without conversion. The research establishes a benchmark for evaluating these methods and identifies ColFlor as a particularly efficient visual retriever, offering a route to more effective and accessible biomedical question answering.

Visual Information Integration for Biomedical Question Answering

Multi-modal retrieval-augmented generation, which combines text and images, is a promising approach to biomedical question answering, but it remains unclear how best to incorporate visual information. One option is to convert figures and tables into textual descriptions; the other is direct visual retrieval, which returns page images and lets the generative model interpret them. This work examines that decision point and seeks to determine the conditions under which each approach performs better.

Model Performance and Cost Across Difficulty Levels

The research team evaluated models of several sizes and assessed their accuracy and cost across question difficulty levels. In general, larger models are more accurate but require more computational resources and therefore cost more, while the cheapest models are less accurate. Compared with model size, the choice of retrieval method has a relatively small effect on both performance and cost. As question difficulty increases, the cost per correct answer rises for all models, as more computational power is needed to arrive at the correct solution.

Detailed analysis shows that larger models consistently generate more tokens and take longer to run, in line with their greater computational demands. Price per correct answer gives a more nuanced picture: the team found that pairing a larger model with the ColFlor retrieval method consistently offers the best balance between cost and accuracy across all difficulty levels. Overall, the results show a clear trade-off in which higher accuracy requires greater investment.
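To make the price-per-correct-answer metric concrete, here is a minimal Python sketch. The `RunStats` class, the configuration names, and all numbers are hypothetical illustrations, not figures from the study; the only assumption about the metric itself is that it divides total spend by the number of correctly answered questions.

```python
from dataclasses import dataclass


@dataclass
class RunStats:
    """Aggregate results for one model + retrieval configuration (hypothetical)."""
    name: str
    total_cost_usd: float  # total spend for the whole evaluation run
    n_questions: int
    n_correct: int

    @property
    def accuracy(self) -> float:
        return self.n_correct / self.n_questions

    @property
    def cost_per_correct(self) -> float:
        # With zero correct answers the ratio is undefined; report infinity.
        if self.n_correct == 0:
            return float("inf")
        return self.total_cost_usd / self.n_correct


# Made-up numbers, chosen only to illustrate the trade-off.
runs = [
    RunStats("small model + text retrieval", total_cost_usd=0.50, n_questions=100, n_correct=50),
    RunStats("large model + visual retrieval", total_cost_usd=4.00, n_questions=100, n_correct=80),
]

for run in runs:
    print(f"{run.name}: accuracy={run.accuracy:.2f}, "
          f"cost per correct answer=${run.cost_per_correct:.3f}")
```

In this toy comparison the larger configuration is more accurate but also pays more for each correct answer, which is the kind of trade-off the metric is meant to expose.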

Glycobiology RAG Reveals Accuracy Versus Complexity Trade-off

This work presents a comprehensive evaluation of multi-modal retrieval-augmented generation systems in the visually dense domain of glycobiology. Researchers constructed a benchmark of multiple-choice questions derived from scientific papers, categorizing retrieval difficulty by the complexity of the evidence required. They compared four augmentation strategies: no augmentation, text retrieval, multi-modal conversion, and late-interaction visual retrieval using ColPali. With the Gemma-3-27B-IT model, text and multi-modal augmentation achieved comparable accuracy, and both significantly outperformed direct visual retrieval.
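For readers unfamiliar with late-interaction retrieval, the sketch below illustrates the general scoring idea used by ColBERT-style retrievers such as ColPali: each query-token embedding is matched to its most similar page-patch embedding, and those maxima are summed. It is a simplified illustration, not the authors' pipeline. The function names and the tiny hand-made 2-D vectors are assumptions standing in for real model embeddings.

```python
from typing import Dict, List, Sequence

Vector = Sequence[float]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def maxsim_score(query_vecs: List[Vector], page_vecs: List[Vector]) -> float:
    """Late-interaction score: for each query vector take the best-matching
    page vector, then sum those maxima over the query."""
    if not page_vecs:
        raise ValueError("page_vecs must contain at least one embedding")
    return sum(max(dot(q, p) for p in page_vecs) for q in query_vecs)


def rank_pages(query_vecs: List[Vector],
               pages: Dict[str, List[Vector]],
               top_k: int = 3) -> List[str]:
    """Return the names of the top_k pages by late-interaction score."""
    ranked = sorted(pages,
                    key=lambda name: maxsim_score(query_vecs, pages[name]),
                    reverse=True)
    return ranked[:top_k]


# Toy embeddings (hypothetical): two query tokens, two candidate pages.
query = [[1.0, 0.0], [0.0, 1.0]]
pages = {
    "page_a": [[1.0, 0.0], [0.0, 1.0]],  # one patch matches each query token
    "page_b": [[0.5, 0.5]],              # a single, less specific patch
}

print(rank_pages(query, pages))
```

Because every query token is scored against every patch, a page can rank highly when different regions of it (for example a figure panel and its caption) each match a different part of the question.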

With the more powerful GPT-4o model, multi-modal augmentation reached even higher accuracy, closely followed by text retrieval and ColPali. Further testing with the GPT-5 family of models showed additional improvements, with ColPali and ColFlor achieving peak accuracy. Across the GPT-5 family, the visual retrievers ColPali, ColQwen, and ColFlor performed statistically indistinguishably, and ColFlor matched ColPali’s results with a smaller computational footprint. These results indicate that converting visuals to text is more reliable for mid-size models, while direct image retrieval becomes competitive when paired with frontier models. The work establishes a baseline for developing trustworthy multi-modal RAG systems in specialized biomedical domains and highlights the importance of aligning pipeline complexity with model reasoning capacity.

Visual Retrieval Boosts Large Language Models

This study investigated strategies for multi-modal retrieval-augmented generation, specifically comparing methods of incorporating visual information into biomedical question answering systems. Researchers constructed a benchmark of multiple-choice questions focused on glycobiology to evaluate different approaches using both mid-size and state-of-the-art large language models. The findings demonstrate a clear relationship between model capacity and optimal retrieval strategy; converting visuals to text proves more reliable for smaller models, while direct image retrieval becomes competitive when using more powerful, frontier models. Notably, the team found that among visual retrieval methods, ColFlor achieved performance comparable to more computationally intensive options while maintaining a smaller footprint, offering an efficient balance between accuracy and resource use. These results suggest that a capacity-aware design is crucial for effective multi-modal systems, adapting the retrieval method to the capabilities of the underlying language model.
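One way to picture a capacity-aware design is a small routing rule that picks the augmentation strategy from the tier of the underlying model. The sketch below is an assumption-laden illustration of that idea, not something described in the paper: the `ModelTier` and `Strategy` enums and the `choose_strategy` function are hypothetical names, and a real system would likely set the boundary between tiers empirically.

```python
from enum import Enum


class ModelTier(Enum):
    MID_SIZE = "mid_size"   # e.g. an open mid-size model
    FRONTIER = "frontier"   # e.g. a state-of-the-art proprietary model


class Strategy(Enum):
    VISUALS_TO_TEXT = "convert figures and tables to text, then retrieve text"
    DIRECT_VISUAL = "retrieve page images and pass them to the model"


def choose_strategy(tier: ModelTier) -> Strategy:
    """Hypothetical rule of thumb following the reported trend:
    text conversion for mid-size models, direct visual retrieval
    once the generator is a frontier model."""
    if tier is ModelTier.FRONTIER:
        return Strategy.DIRECT_VISUAL
    return Strategy.VISUALS_TO_TEXT


for tier in ModelTier:
    print(f"{tier.value}: {choose_strategy(tier).value}")
```

Since the study reports that direct image retrieval becomes competitive with frontier models, the frontier branch is best read as a reasonable default to validate on one's own data.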

👉 More information

🗞 Exploration of Augmentation Strategies in Multi-modal Retrieval-Augmented Generation for the Biomedical Domain: A Case Study Evaluating Question Answering in Glycobiology

🧠 ArXiv: https://arxiv.org/abs/2512.16802