The demands of modern applications, such as big data analytics and machine learning, are rapidly exceeding the capacity of conventional main memory systems. Manel Lurbe Gomez, Miguel Avargues, and Salvador Petit, from Universitat Politècnica de València, along with Rui Yang, Guanhao Wang, and colleagues at Huawei Technologies, investigate how prefetching techniques can overcome these limitations when using newer, high-capacity memory technologies. Their research focuses on optimizing data access across deep memory hierarchies, including both on-chip caches and off-chip memory controllers, to minimize the impact of increasing memory latency. The team demonstrates that a multi-level prefetching engine significantly enhances system performance, with on-chip cache prefetchers proving crucial for maximizing the benefits of off-chip prefetching. Results show that combining these techniques achieves off-chip prefetcher coverage exceeding 90% and a performance increase of up to 12%, paving the way for more efficient and scalable memory systems.

Hybrid Memory Exploiting DRAM and NVM

Research into improving memory system performance focuses on hybrid memory architectures that combine DRAM and Non-Volatile Memory (NVM), such as Phase Change Memory (PCM) or Optane. The two technologies have complementary weaknesses: DRAM is expensive and limited in density, while NVM suffers from higher latency and limited write endurance. A hybrid design aims to offset each weakness with the other technology's strength, driven by the need of big data and other modern workloads for larger and faster memory systems. The research spans both hardware and software techniques, including advanced memory controllers, caching policies, and comprehensive benchmarking.

While DRAM remains a cornerstone, its cost and density limitations are becoming increasingly problematic. NVM offers higher density and data persistence, but its inherent latency and limited write endurance present significant challenges. Researchers are exploring various hybrid memory configurations and management techniques to optimize performance, endurance, and cost, including innovative approaches like hybrid DRAM/PCM configurations and intelligent caching policies that strategically place data in either DRAM or NVM based on access patterns. The development of memory controllers capable of managing both DRAM and NVM simultaneously is a key area of investigation.
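To make the idea of access-pattern-based placement concrete, here is a minimal sketch, not the method of any specific paper. It assumes a hypothetical policy that counts accesses per page and keeps the most frequently accessed pages in DRAM while the rest stay in NVM. The function name `place_pages` and the `dram_capacity` parameter are illustrative assumptions.

```python
from collections import Counter

def place_pages(access_trace, dram_capacity):
    """Split pages into (dram_pages, nvm_pages) by access frequency.

    Hypothetical policy for illustration only: the `dram_capacity`
    most frequently accessed pages go to DRAM, all others to NVM.
    """
    counts = Counter(access_trace)
    # Hottest pages first; ties are broken by page id for determinism.
    ranked = sorted(counts, key=lambda page: (-counts[page], page))
    dram_pages = set(ranked[:dram_capacity])
    nvm_pages = set(ranked[dram_capacity:])
    return dram_pages, nvm_pages

# Example trace of page ids: page 1 is accessed three times, page 2 twice.
trace = [1, 2, 1, 3, 1, 2, 4]
dram, nvm = place_pages(trace, dram_capacity=2)
print("DRAM:", sorted(dram), "NVM:", sorted(nvm))
```

A real policy would also have to account for migration cost and NVM write endurance, which this sketch ignores.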

These controllers aim to minimize latency and maximize bandwidth by intelligently scheduling requests to the appropriate memory type. Researchers are also developing caching policies that take row buffer locality into account to reduce access latency. Because realistic benchmarking is crucial for evaluating memory system performance, studies employ a variety of real-world workloads, including Facebook MySQL, Redis, and the Yahoo! Cloud Serving Benchmark (YCSB), on both ARM and Intel architectures. Key contributions include novel memory controller designs, caching policies optimized for hybrid memory systems, and enhancements to endurance and reliability. Taken together, this body of work explores techniques for improving memory performance, capacity, and endurance to meet the demands of modern workloads.

Hybrid Memory Prefetching with HMC and HMC+L1

Researchers are addressing the increasing memory demands of applications like big data analytics and machine learning by investigating hybrid memory systems composed of SRAM, DRAM, and non-volatile RAM (NVRAM). Because NVRAM offers high density but has longer access times than DRAM, the study focuses on mitigating these latencies through multi-level prefetching techniques. The core of the research involves implementing and comparing two prefetching approaches, designated HMC and HMC+L1. The HMC approach integrates a prefetcher directly within the off-chip hybrid memory controller (HMC), aiming to retrieve data before the processor needs it.
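The article does not say which prefetching algorithm the memory controller uses. As a rough illustration of what a controller-side prefetcher does, the sketch below assumes a simple next-line scheme: on every access to block `b`, it fetches block `b + 1` into a small buffer so that a later sequential access is served from the buffer. The class name `NextLinePrefetcher` and its `buffer_size` parameter are hypothetical.

```python
class NextLinePrefetcher:
    """Toy next-line prefetcher with a small FIFO prefetch buffer.

    Assumed scheme for illustration; not the design from the paper.
    """

    def __init__(self, buffer_size=4):
        self.buffer_size = buffer_size
        self.buffer = []  # prefetched block addresses, oldest first

    def access(self, block):
        """Return True if `block` was already prefetched (buffer hit)."""
        hit = block in self.buffer
        if hit:
            self.buffer.remove(block)  # block is consumed by the requester
        next_block = block + 1
        if next_block not in self.buffer:
            self.buffer.append(next_block)  # issue the prefetch
            if len(self.buffer) > self.buffer_size:
                self.buffer.pop(0)  # evict the oldest prefetched block
        return hit

prefetcher = NextLinePrefetcher()
hits = [prefetcher.access(block) for block in [10, 11, 12, 20, 21]]
print(hits)  # [False, True, True, False, True]
```

Sequential runs hit in the buffer after their first access, while a jump to a new region (block 20 here) misses until the prefetcher catches up.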

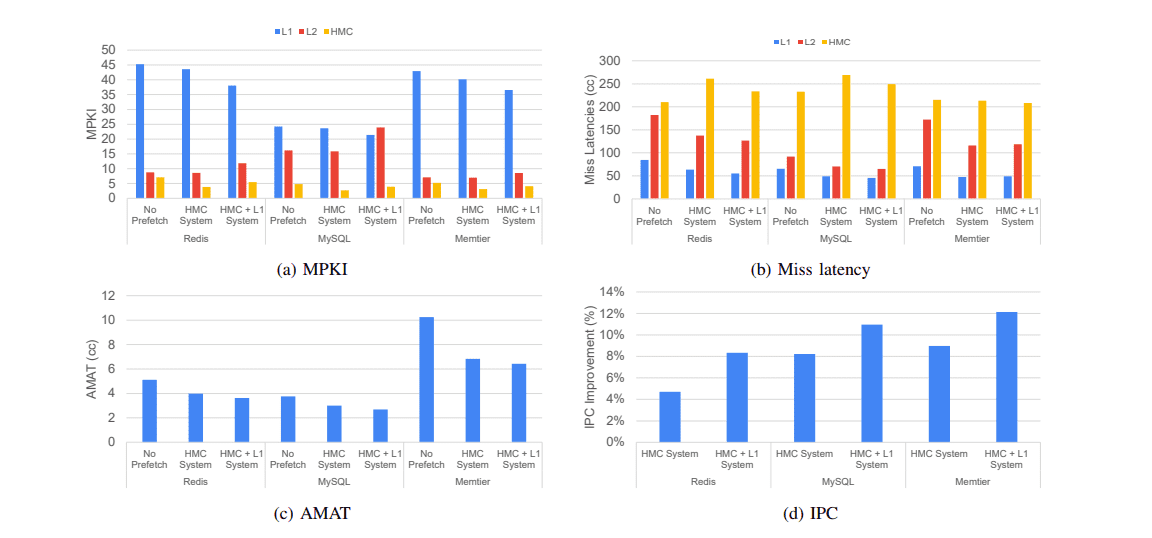

To further enhance performance, the HMC+L1 approach augments this off-chip prefetching with additional prefetchers located at the L1 cache of the processor. This two-tiered system seeks to minimize the impact of NVRAM latencies by anticipating data requirements at both the memory controller and processor levels. The team measured the performance of each approach and found that the off-chip HMC prefetcher achieves coverage and accuracy rates exceeding 60% and reaching up to 80%. Critically, adding L1 prefetching in the HMC+L1 configuration significantly boosts off-chip prefetcher coverage, to as much as 92%. Consequently, the overall performance gain rose from 9% with the HMC approach to 12% when L1 prefetching was also employed, demonstrating the synergistic benefits of a multi-level prefetching strategy.
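The article does not define coverage and accuracy, so the sketch below assumes the commonly used definitions: accuracy is the fraction of issued prefetches that are later used, and coverage is the fraction of would-be misses that prefetching eliminates. The function name and the input counts are hypothetical and chosen only to show the arithmetic; they are not measurements from the paper.

```python
def prefetch_metrics(issued, useful, uncovered_misses):
    """Return (coverage, accuracy) under the assumed common definitions.

    issued           -- prefetches sent to memory
    useful           -- prefetched blocks that were later demanded
    uncovered_misses -- demand misses the prefetcher failed to cover
    """
    accuracy = useful / issued if issued else 0.0
    baseline_misses = useful + uncovered_misses  # misses without prefetching
    coverage = useful / baseline_misses if baseline_misses else 0.0
    return coverage, accuracy

# Illustrative counts only.
coverage, accuracy = prefetch_metrics(issued=1000, useful=800, uncovered_misses=200)
print(f"coverage={coverage:.0%}, accuracy={accuracy:.0%}")
```

Under these definitions a prefetcher can have high accuracy but low coverage (it rarely prefetches, but is right when it does), or the reverse, which is why both figures are reported.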

Prefetching Optimizes Hybrid Memory Performance

This research delivers a significant advancement in hybrid memory systems, addressing the challenge of increasing memory demands in applications like big data analytics and machine learning. Scientists investigated multi-level prefetching techniques to mitigate the extended latencies inherent in combining different memory technologies, specifically SRAM, DRAM, and non-volatile RAM (NVRAM). Experiments revealed that a prefetcher integrated within the off-chip hybrid memory controller (HMC) achieves impressive coverage and accuracy rates, exceeding 60% and reaching up to 80%. However, the team discovered that maximizing the benefits of off-chip prefetching requires on-chip support.

By combining the HMC prefetcher with additional prefetchers at the L1 cache level (HMC+L1), scientists boosted off-chip prefetcher coverage to as much as 92%. This two-level prefetching system reduces L1 miss latency and consequently raises the overall performance gain from 9% with the HMC approach to 12% with HMC+L1. The work provides a detailed analysis of prefetching impacts across the entire hybrid memory hierarchy, establishing a foundation for future advancements in memory system design.

👉 More information

🗞 Prefetching in Deep Memory Hierarchies with NVRAM as Main Memory

🧠 ArXiv: https://arxiv.org/abs/2509.17388