Scientists are tackling the persistent problem of generating accurate 3D models from everyday images and videos. Yawar Siddiqui, Duncan Frost, and Samir Aroudj, together with Avetisyan and co-authors, introduce ShapeR, a technique that produces robust 3D shapes from messy, real-world footage, whereas current methods need clean input data. The work narrows the gap between laboratory-quality 3D reconstruction and casually captured content by combining visual-inertial SLAM, 3D detection, and vision-language models. Using techniques such as compositional augmentations, and evaluated on a new in-the-wild benchmark of 178 objects, ShapeR outperforms existing approaches with a 2.7x improvement in Chamfer distance, a step towards more accessible and practical 3D modelling applications.

Multimodal Shape Reconstruction from Image Sequences

Scientists have unveiled ShapeR, an approach for generating accurate 3D object shapes from casually captured image sequences that overcomes limitations of existing 3D reconstruction methods. The team combines off-the-shelf visual-inertial SLAM, 3D detection algorithms, and vision-language models to extract three kinds of information for each object in a scene: sparse SLAM points, posed multi-view images, and descriptive captions. A rectified flow transformer trained on these multimodal inputs then generates high-fidelity 3D shapes at metric scale, reconstructing objects even from imperfect real-world data. This addresses a gap in the field: current techniques typically require clean, unoccluded, and well-segmented inputs, conditions rarely met in everyday captures.
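To make the per-object extraction step concrete, the sketch below shows one plausible way to turn a scene-level SLAM point cloud and a detected 3D box into object-level conditioning, and how a shape generated in a normalised frame could be mapped back to metric units. It is a minimal sketch under stated assumptions: the axis-aligned `Box3D` type, the `margin` parameter, and the "largest half-extent" normalisation are all hypothetical choices for illustration, not ShapeR's documented procedure.

```python
from dataclasses import dataclass

Point = tuple[float, float, float]


@dataclass(frozen=True)
class Box3D:
    """Axis-aligned 3D box in metric world coordinates (hypothetical detector output)."""
    min_corner: Point
    max_corner: Point


def points_in_box(points: list[Point], box: Box3D, margin: float = 0.0) -> list[Point]:
    """Keep the SLAM points inside the box, optionally padded by `margin` metres."""
    lo = [c - margin for c in box.min_corner]
    hi = [c + margin for c in box.max_corner]
    return [p for p in points if all(lo[i] <= p[i] <= hi[i] for i in range(3))]


def normalize_to_box(points: list[Point], box: Box3D) -> tuple[list[Point], Point, float]:
    """Map metric points into a canonical cube centred on the box.

    Dividing by the largest half-extent keeps the aspect ratio. Returns the
    normalised points plus the centre and scale needed to undo the mapping.
    """
    center = tuple((a + b) / 2 for a, b in zip(box.min_corner, box.max_corner))
    half_extent = max((b - a) / 2 for a, b in zip(box.min_corner, box.max_corner))
    if half_extent <= 0:
        raise ValueError("degenerate box")
    normed = [tuple((p[i] - center[i]) / half_extent for i in range(3)) for p in points]
    return normed, center, half_extent


def denormalize(points: list[Point], center: Point, half_extent: float) -> list[Point]:
    """Invert normalize_to_box so a generated shape returns to metric scale and position."""
    return [tuple(p[i] * half_extent + center[i] for i in range(3)) for p in points]


box = Box3D((0.0, 0.0, 0.0), (2.0, 1.0, 1.0))
slam_points = [(1.0, 0.5, 0.5), (3.0, 0.5, 0.5), (2.0, 1.0, 0.0)]
obj = points_in_box(slam_points, box)  # (3.0, 0.5, 0.5) lies outside the box
normed, center, scale = normalize_to_box(obj, box)
print(normed)  # [(0.0, 0.0, 0.0), (1.0, 0.5, -0.5)]
```

Keeping the centre and scale alongside the normalised points is what lets a generator work in a fixed-size frame while the final output stays metrically consistent with the capture.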

The system is designed to withstand the challenges of casually captured data. It uses on-the-fly compositional augmentations to increase data variability and a curriculum training scheme spanning both object- and scene-level datasets, and it incorporates strategies for handling background clutter, a common obstacle in real-world 3D reconstruction. To evaluate the approach rigorously, the researchers introduced a benchmark dataset of 178 in-the-wild objects across seven real-world scenes, each with detailed geometry annotations, a kind of resource the field has lacked. In this setting ShapeR outperforms state-of-the-art methods with a 2.7x improvement in Chamfer distance, a standard metric of 3D shape accuracy for which lower values are better.
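Since the headline result is stated in Chamfer distance, a short sketch of the metric may help. The version below is one common variant: the mean nearest-neighbour Euclidean distance in each direction, summed. Papers differ on whether distances are squared, whether the two directions are summed or averaged, and how many surface points are sampled, so this is not necessarily the paper's exact evaluation protocol. Reading "2.7x improvement" as the ratio of the baseline's Chamfer distance to ShapeR's is likewise an assumption, captured in the hypothetical helper `improvement_factor`.

```python
import math


def chamfer_distance(a, b):
    """Symmetric Chamfer distance between two non-empty point sets.

    Computes the mean nearest-neighbour Euclidean distance from a to b plus the
    same quantity from b to a. Brute force, O(len(a) * len(b)).
    """
    if not a or not b:
        raise ValueError("both point sets must be non-empty")

    def one_way(src, dst):
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)

    return one_way(a, b) + one_way(b, a)


def improvement_factor(baseline_cd, method_cd):
    """How many times lower the method's Chamfer distance is than the baseline's."""
    return baseline_cd / method_cd


gt = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
pred = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.5)]
print(chamfer_distance(gt, pred))  # 0.5
```

Because lower Chamfer distance is better, an improvement factor greater than 1 means the method's reconstructions lie closer to the ground-truth geometry than the baseline's.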

This work points towards more realistic and accessible 3D reconstruction that moves beyond controlled laboratory settings to handle the complexities of real-world data acquisition. Unlike scene-centric methods that produce a single monolithic representation, ShapeR reconstructs individual objects, which allows more detailed and complete results. The results indicate that by integrating multiple data modalities and using robust training strategies, it is possible to generate high-fidelity 3D shapes from casually captured sequences. The work opens possibilities for applications in augmented reality, robotics, and virtual environment creation, where accurate and detailed 3D models are essential for interacting with the physical world.

Multimodal ShapeR for 3D Object Reconstruction

ShapeR performs conditional 3D object shape generation from casually captured image sequences. For each object in a sequence, off-the-shelf visual-inertial SLAM, 3D detection algorithms, and vision-language models supply the conditioning data: sparse SLAM points, posed multi-view images, and machine-generated captions. A rectified flow transformer is then trained to generate high-fidelity metric 3D shapes conditioned on these multimodal inputs, bridging the gap between raw capture data and complete object models. To address the challenges of real-world data, the study applies on-the-fly compositional augmentations to all input modalities during training.
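The idea of on-the-fly compositional augmentation can be sketched as a pool of per-modality corruptions, each applied with some probability and in random order every time a training sample is drawn, so the model rarely sees the same corruption twice. Everything below is an illustrative assumption: the `Sample` fields, the four augmentations, and their parameters are hypothetical, and the paper's actual set (which likely includes image-space effects such as occlusion or background clutter) is not described in this article.

```python
import random
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class Sample:
    """One training example; field names are hypothetical, not ShapeR's schema."""
    points: tuple  # sparse SLAM points as (x, y, z) tuples
    views: tuple   # posed image identifiers (placeholders for real images)
    caption: str


def jitter_points(s, rng, sigma=0.01):
    """Add Gaussian noise to every point, mimicking noisy SLAM triangulation."""
    pts = tuple(tuple(c + rng.gauss(0.0, sigma) for c in p) for p in s.points)
    return replace(s, points=pts)


def drop_points(s, rng, keep_frac=0.5):
    """Randomly subsample points, mimicking sparse or partial coverage."""
    if not s.points:
        return s
    k = max(1, int(len(s.points) * keep_frac))
    return replace(s, points=tuple(rng.sample(s.points, k)))


def drop_views(s, rng, keep_frac=0.5):
    """Randomly drop posed views, always keeping at least one."""
    if not s.views:
        return s
    k = max(1, int(len(s.views) * keep_frac))
    return replace(s, views=tuple(rng.sample(s.views, k)))


def drop_caption(s, rng):
    """Blank the caption so the model cannot rely on text alone."""
    return replace(s, caption="")


AUGMENTATIONS = (jitter_points, drop_points, drop_views, drop_caption)


def compose_augment(s, rng, p=0.5):
    """Apply each augmentation independently with probability p, in random order."""
    chosen = [aug for aug in AUGMENTATIONS if rng.random() < p]
    rng.shuffle(chosen)
    for aug in chosen:
        s = aug(s, rng)
    return s


rng = random.Random(0)
sample = Sample(points=tuple((float(i), 0.0, 0.0) for i in range(10)),
                views=("v0", "v1", "v2", "v3"), caption="a wooden chair")
augmented = compose_augment(sample, rng)  # a fresh random composition on every call
```

Composing independent, per-modality corruptions means the model sees combinations such as "few points and no caption" or "one view and noisy points", which is the kind of variability casual captures produce.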

These augmentations are intended to make the model robust to the occlusions, noise, and varying viewpoints common in casual captures. The researchers also use a curriculum training scheme that starts with large object-centric datasets and then moves to synthetic scene data, progressively improving the model's ability to generalise to complex environments. This two-stage approach addresses a limitation of isolated-object datasets by exposing the model to the object combinations found in realistic scenes. Rather than relying on explicit 2D segmentation, ShapeR uses 3D instance points, which let it implicitly segment objects within images.
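A two-stage curriculum like the one described can be expressed as an ordered schedule that maps each global training step to the dataset mix in use. The sketch below assumes a simple hard switch between stages; the stage names, dataset labels, and step counts are hypothetical placeholders, and the paper may blend datasets or use different stage lengths.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    datasets: tuple[str, ...]  # hypothetical dataset labels
    num_steps: int


# Hypothetical two-stage curriculum: object-centric data first, synthetic scenes second.
CURRICULUM = (
    Stage("object_pretraining", ("object_centric",), 100_000),
    Stage("scene_finetuning", ("synthetic_scenes",), 20_000),
)


def stage_for_step(step, curriculum=CURRICULUM):
    """Return the curriculum stage active at a given global training step."""
    if step < 0:
        raise ValueError("step must be non-negative")
    boundary = 0
    for stage in curriculum:
        boundary += stage.num_steps
        if step < boundary:
            return stage
    return curriculum[-1]  # stay on the final stage once the schedule is exhausted


print(stage_for_step(150_000).name)  # scene_finetuning
```

Keeping the schedule as data rather than branching logic makes it easy to add a stage or change the switch point without touching the training loop.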

ShapeR represents shapes in a VecSet latent space whose latents are decoded into complete 3D shapes, giving an efficient yet detailed representation of object geometry. The new evaluation benchmark, comprising 178 in-the-wild objects across 7 real-world scenes, each with geometry annotations, provides a rigorous testbed for assessing performance. On it, ShapeR outperforms state-of-the-art methods with a 2.7x improvement in Chamfer distance, a standard measure of 3D shape similarity. This gain demonstrates that the method produces accurate and robust 3D reconstructions from challenging, casually captured data, a step towards more accessible and realistic 3D modelling applications.
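In the VecSet representation (introduced by 3DShape2VecSet), a shape is an unordered set of latent vectors, and a decoder answers "is this 3D point inside the shape?" by letting the query point cross-attend to that set. The sketch below shows a single attention head in plain Python to make the mechanism visible. It is an assumption-laden toy: the linear point embedding `proj`, the output weights `w_out`, and the use of occupancy (rather than, say, a signed distance) are hypothetical, and real decoders use learned projections, Fourier point features, multiple heads, and several layers. This article does not describe ShapeR's decoder in that detail.

```python
import math


def softmax(xs):
    """Numerically stable softmax over a list of scores."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def embed_point(p, proj):
    """Hypothetical query embedding: a fixed 3 x d linear map applied to (x, y, z)."""
    d = len(proj[0])
    return [sum(p[i] * proj[i][j] for i in range(3)) for j in range(d)]


def decode_occupancy(point, latent_keys, latent_values, proj, w_out):
    """Occupancy probability at `point` via single-head cross-attention to a latent set."""
    q = embed_point(point, proj)
    scale = math.sqrt(len(q))
    scores = [sum(qi * ki for qi, ki in zip(q, k)) / scale for k in latent_keys]
    weights = softmax(scores)  # one attention weight per latent vector
    dim = len(latent_values[0])
    feat = [sum(w * v[j] for w, v in zip(weights, latent_values)) for j in range(dim)]
    return sigmoid(sum(f * w for f, w in zip(feat, w_out)))
```

Because attention sums over the latents with weights that depend only on their contents, reordering the latent set does not change the output, which is what makes a set (rather than a grid) a natural latent for shapes.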

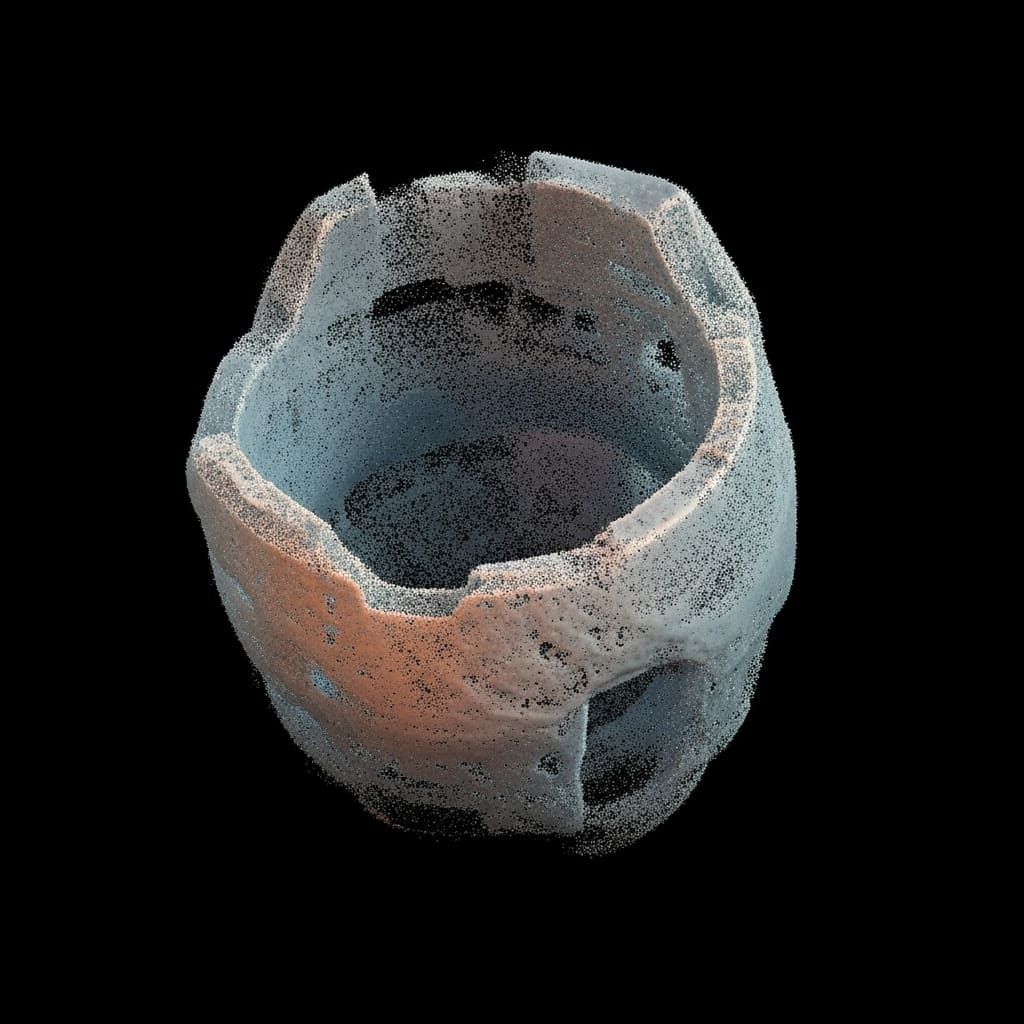

ShapeR reconstructs 3D objects from real footage

ShapeR generates 3D object shapes from casually captured image sequences. It targets a significant limitation of current 3D shape generation methods, which typically require clean, unoccluded, and well-segmented input data, conditions rarely found in real-world scenarios. ShapeR uses off-the-shelf visual-inertial SLAM, 3D detection algorithms, and vision-language models to extract sparse SLAM points, posed multi-view images, and machine-generated captions for each object within a sequence. These modalities condition a rectified flow transformer, which generates high-fidelity 3D shapes at metric scale.
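Rectified flow trains a network to predict the velocity that carries a noise sample along a straight line to a data sample, then generates by integrating that velocity field. The sketch below shows the training target, the regression loss, and a fixed-step Euler sampler, using one common convention (noise at t=0, data at t=1; some implementations reverse it). The toy `oracle` velocity field stands in for the trained network: in ShapeR it would presumably be the transformer conditioned on SLAM points, images, and captions, with `x` a shape latent rather than a three-element list. That mapping is an assumption for illustration.

```python
def interpolate(x0, x1, t):
    """Point at time t on the straight path from noise x0 (t=0) to data x1 (t=1)."""
    return [(1.0 - t) * a + t * b for a, b in zip(x0, x1)]


def velocity_target(x0, x1):
    """Rectified-flow regression target: the constant velocity x1 - x0 along that path."""
    return [b - a for a, b in zip(x0, x1)]


def flow_matching_loss(predicted_v, x0, x1):
    """Mean squared error between a predicted velocity and the target velocity."""
    target = velocity_target(x0, x1)
    return sum((p - q) ** 2 for p, q in zip(predicted_v, target)) / len(target)


def euler_sample(velocity_fn, x0, num_steps=50):
    """Integrate dx/dt = velocity_fn(x, t) from t=0 to t=1 with fixed-step Euler."""
    x = list(x0)
    dt = 1.0 / num_steps
    for i in range(num_steps):
        v = velocity_fn(x, i * dt)
        x = [xi + dt * vi for xi, vi in zip(x, v)]
    return x


x0 = [0.3, -1.2, 0.8]  # noise sample
x1 = [1.0, 0.0, -0.5]  # "data", e.g. a flattened latent


def oracle(x, t):
    """Toy velocity field that points from x straight at the single target x1."""
    return [(b - xi) / (1.0 - t) for xi, b in zip(x, x1)]


xt = interpolate(x0, x1, 0.25)
print(flow_matching_loss(oracle(xt, 0.25), x0, x1))  # ~0: the oracle matches the target
print(euler_sample(oracle, x0))                      # ~[1.0, 0.0, -0.5]
```

Because the training paths are straight lines, a well-trained velocity field can be integrated with relatively few Euler steps, which is one practical appeal of rectified flow over curved diffusion trajectories.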

Experiments show that ShapeR significantly outperforms existing approaches in challenging, real-world settings, achieving a 2.7x improvement in Chamfer distance compared with the state of the art. The team measured performance on a newly introduced evaluation benchmark of 178 in-the-wild objects across 7 real-world scenes, all with detailed geometry annotations. The benchmark was designed to capture the complexities of casual capture, including occlusion, clutter, and varying resolutions and viewpoints, providing a realistic test environment. To ensure robustness, the researchers used on-the-fly compositional augmentations, a curriculum training scheme spanning both object- and scene-level datasets, and strategies to mitigate the impact of background clutter.

The work uses a two-stage curriculum: training first on large, diverse object-centric datasets and then refining on synthetic scene data that captures complex object combinations. By conditioning on 3D instance points, ShapeR implicitly segments objects within images, removing the explicit 2D segmentation step often required by prior methods. The result is complete, high-fidelity object shapes with an appropriate level of detail that remain consistent with real-world metric scale. By combining sparse point clouds, posed images, and text captions, ShapeR reconstructs objects accurately from casually captured sequences. The authors state that they will release all code, model weights, and the ShapeR evaluation dataset to support further research and development in this field.
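The implicit segmentation attributed earlier to 3D instance points relies on the fact that points belonging to one object tell the model where that object falls in each posed image. The sketch below shows only that geometric link: it projects an object's 3D points through a pinhole camera and returns the 2D region they cover. It is an illustration under assumptions, not ShapeR's mechanism, which is learned inside the network. The world-to-camera convention `X_c = R X_w + t` is assumed (many SLAM systems export camera-to-world poses instead, which must be inverted first), and `footprint_box` is a hypothetical helper that yields a coarse box, not a mask.

```python
def project_points(points_world, R, t, fx, fy, cx, cy):
    """Project world points to pixel coordinates with a pinhole camera.

    R (3x3, row-major nested lists) and t (length 3) map world to camera
    coordinates: X_c = R X_w + t. Points at or behind the camera are skipped.
    """
    pixels = []
    for p in points_world:
        xc = [sum(R[r][c] * p[c] for c in range(3)) + t[r] for r in range(3)]
        if xc[2] <= 0:
            continue
        u = fx * xc[0] / xc[2] + cx
        v = fy * xc[1] / xc[2] + cy
        pixels.append((u, v))
    return pixels


def footprint_box(pixels, width, height):
    """2D bounding box (u0, v0, u1, v1) of projected points, clipped to the image.

    Returns None when nothing is visible.
    """
    if not pixels:
        return None
    us = [u for u, _ in pixels]
    vs = [v for _, v in pixels]
    u0, u1 = max(0.0, min(us)), min(float(width), max(us))
    v0, v1 = max(0.0, min(vs)), min(float(height), max(vs))
    if u0 > u1 or v0 > v1:
        return None  # the object projects entirely outside the image
    return (u0, v0, u1, v1)


identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
obj_points = [(0.0, 0.0, 1.0), (0.1, 0.2, 2.0), (0.0, 0.0, -1.0)]
px = project_points(obj_points, identity, (0.0, 0.0, 0.0), 100.0, 100.0, 50.0, 50.0)
print(footprint_box(px, 100, 100))  # approximately (50.0, 50.0, 55.0, 60.0)
```

A network given both the images and these projected instance points can learn which pixels belong to the object without ever being shown an explicit 2D mask.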

ShapeR generates 3D from real image sequences

In summary, ShapeR generates 3D object shapes from casually captured image sequences. It overcomes a limitation of existing 3D shape generation techniques, which typically require clean and well-segmented input data, conditions rarely found in real-world settings. ShapeR uses visual-inertial SLAM, 3D detection algorithms, and vision-language models to extract sparse SLAM points, posed multi-view images, and captions for each object in a sequence, then employs a rectified flow transformer to generate high-fidelity 3D shapes. The research team made ShapeR more robust through compositional augmentations, a curriculum training scheme, and strategies for handling background clutter.

The researchers also introduced the ShapeR Evaluation Dataset, a benchmark of 178 in-the-wild objects across seven real-world scenes with geometry annotations, to support further research in this area. Experiments show that ShapeR outperforms current methods with a 2.7x improvement in Chamfer distance, a distance-based measure of shape similarity, compared with the state of the art. Acknowledging limitations, the authors note that performance can be affected by severe occlusion or very poor image quality. Future work could extend the method to more complex scenes and dynamic objects, or investigate incorporating additional sensor data. The advance enables more scalable and automatic 3D reconstruction in natural environments, with potential applications in robotics, augmented reality, and virtual reality.

👉 More information

🗞 ShapeR: Robust Conditional 3D Shape Generation from Casual Captures

🧠 ArXiv: https://arxiv.org/abs/2601.11514