Large language models typically require separate training for each desired size and application, a process that demands enormous computational resources. Researchers are now demonstrating a more efficient approach. Ali Taghibakhshi, Sharath Turuvekere Sreenivas, Saurav Muralidharan, and colleagues at NVIDIA have developed Nemotron Elastic, a framework that builds reasoning-oriented language models with multiple nested sub-models inside a single parent model. The smaller versions can be extracted and deployed without additional training, which cuts training cost by more than 360 times compared with training each model from scratch. Applied to the Nemotron Nano V2 12B model, the method simultaneously produces 9B and 6B versions using only 110 billion training tokens while maintaining or improving accuracy, and deployment memory stays constant regardless of the number of models in the family.

Efficiently Deriving Multiple Reasoning Language Models

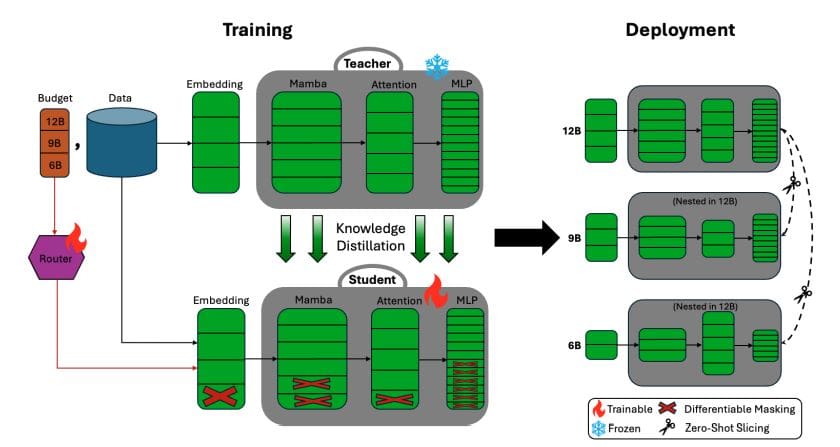

This research introduces Nemotron Elastic, a framework for efficiently training and deploying a family of reasoning-capable large language models (LLMs) of varying sizes. The core innovation is elastic training, which derives multiple model sizes from a single 12-billion-parameter parent model and needs far fewer training tokens than training each model from scratch or applying sequential compression. The team addresses a critical challenge, the high computational cost of maintaining multiple LLMs for different applications, by producing one model that can be deployed at several scales. Key to this approach are a hybrid Mamba-Transformer architecture and a focus on extended-context training.

Researchers found that extended-context training, using sequences of 49,000 tokens, is essential for maintaining reasoning performance in the compressed, smaller models, which distinguishes Nemotron Elastic from standard LLM compression techniques. The results show a substantial reduction in training tokens: the entire model family requires only 110 billion tokens, a 360-fold reduction compared to training from scratch and a seven-fold improvement over sequential compression. The smaller models maintain strong reasoning capabilities, and the nested models can be extracted from the largest model on the fly without additional overhead. This makes it feasible for organizations with limited resources to train and deploy a family of reasoning LLMs tailored to different needs and hardware constraints.
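To make the quoted ratios concrete, the following back-of-the-envelope sketch multiplies the 110 billion token budget by the two reduction factors. The resulting baseline budgets are implied by the rounded figures in this article ("over 360-fold", "seven-fold"), not numbers reported here directly, and the helper name `implied_baseline_tokens_b` is purely illustrative.

```python
# Token budgets implied by the rounded ratios quoted in the article.
ELASTIC_TOKENS_B = 110   # billions of tokens for the whole 12B/9B/6B family
SCRATCH_RATIO = 360      # "over 360-fold" versus training from scratch
SEQUENTIAL_RATIO = 7     # "seven-fold" versus sequential compression


def implied_baseline_tokens_b(elastic_tokens_b: float, ratio: float) -> float:
    """Return the baseline token budget (in billions) implied by a reduction ratio."""
    return elastic_tokens_b * ratio


scratch_b = implied_baseline_tokens_b(ELASTIC_TOKENS_B, SCRATCH_RATIO)
sequential_b = implied_baseline_tokens_b(ELASTIC_TOKENS_B, SEQUENTIAL_RATIO)

print(f"from scratch: roughly {scratch_b / 1000:.1f} trillion tokens")
print(f"sequential compression: roughly {sequential_b:.0f} billion tokens")
```

Under these assumptions, training the family from scratch would take on the order of 40 trillion tokens, and sequential compression several hundred billion, against 110 billion for the elastic run.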

Nested Models Trained From Single Base LLM

Nemotron Elastic is a framework for training large language models (LLMs) that produces multiple models tailored to different computational budgets from a single training run. It avoids the prohibitive cost of training separate LLM families, in which each model requires an independent training run and a vast token count. The full family needs only 110 billion training tokens, a 360-fold reduction in training cost compared to training from scratch and a seven-fold improvement over state-of-the-art compression techniques.

The core of Nemotron Elastic is its ability to create nested sub-networks within a single parent model, allowing zero-shot extraction of smaller models without additional training or fine-tuning. Experiments demonstrate that these nested models achieve accuracy on par with, or exceeding, independently trained state-of-the-art models across key reasoning and mathematical benchmarks. A crucial element of the work is a two-stage training curriculum designed specifically for reasoning tasks, which prioritizes long-context capability so that the models can process extended chains of thought.

Researchers achieved this efficiency through several key innovations: importance-based component ranking to establish architectural priorities, frozen-teacher knowledge distillation for joint sub-network optimization, and an end-to-end trained router that dynamically adjusts architecture decisions based on task difficulty. Notably, the framework maintains constant deployment memory regardless of the number of models in the family, a significant advantage over approaches where memory scales linearly with family size. The result is a practical route to making high-performance reasoning models available across diverse deployment scenarios and resource constraints.
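The idea of nested sub-networks and constant deployment memory can be illustrated with a toy example. This is a minimal sketch, not the authors' implementation: it covers only the width of one feed-forward layer, scores neurons by mean absolute activation (an assumed importance metric), and uses hypothetical data and function names. The actual framework also handles the Mamba and attention components and uses a trained router.

```python
from typing import List

Matrix = List[List[float]]  # one row of input weights per hidden neuron


def rank_neurons(activations: List[List[float]]) -> List[int]:
    """Order neuron indices by mean absolute activation, most important first."""
    n_neurons = len(activations[0])
    scores = [
        sum(abs(sample[j]) for sample in activations) / len(activations)
        for j in range(n_neurons)
    ]
    return sorted(range(n_neurons), key=lambda j: scores[j], reverse=True)


def permute_rows(weight: Matrix, order: List[int]) -> Matrix:
    """Reorder rows so that the most important neurons come first."""
    return [weight[j] for j in order]


def extract_submodel(weight: Matrix, width: int) -> Matrix:
    """Zero-shot extraction: keep the first `width` rows, with no retraining."""
    return weight[:width]


def count_values(weight: Matrix) -> int:
    """Number of stored weight values in a matrix."""
    return sum(len(row) for row in weight)


# Hypothetical calibration activations: 3 samples x 4 hidden neurons.
activations = [
    [0.1, -2.0, 0.5, 1.0],
    [0.2, 1.5, -0.4, -1.2],
    [-0.1, -1.8, 0.6, 0.9],
]
# Hypothetical parent layer: 4 hidden neurons x 2 inputs.
parent = [[1.0, 0.0], [0.0, 2.0], [3.0, 3.0], [4.0, 1.0]]

order = rank_neurons(activations)
ranked_parent = permute_rows(parent, order)
family = {width: extract_submodel(ranked_parent, width) for width in (4, 3, 2)}

nested_storage = count_values(ranked_parent)                      # parent only
separate_storage = sum(count_values(m) for m in family.values())  # one copy each

print(order)                              # [1, 3, 2, 0]
print(nested_storage, separate_storage)   # 8 18
```

Because every smaller model is a prefix of the ranked parent, storing the parent alone is enough to serve the whole family, whereas keeping a separate copy per size grows with the number of models.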

Elastic Training Yields Efficient Language Models

Nemotron Elastic advances the training of large language models, particularly those designed for complex reasoning tasks. The framework generates a family of models, differing in size and computational demands, from a single, larger parent model. This approach reduces training costs by more than 360 times compared to training each model independently and by seven times compared to existing compression techniques, while maintaining accuracy. The key innovation is an “elastic” training process in which multiple nested sub-models are embedded within the parent model and can be extracted for deployment without further training.
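One way to picture joint training of nested sub-models against a frozen teacher is a distillation loss averaged over the sub-model sizes. The sketch below is an assumption-laden illustration rather than the paper's training objective: it uses a plain KL divergence between teacher and student output distributions, weights all sizes equally, and the logits and the name `joint_distillation_loss` are made up for the example.

```python
import math
from typing import Dict, List, Sequence, Tuple


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p: Sequence[float], q: Sequence[float]) -> float:
    """KL(p || q) for two discrete distributions over the same vocabulary."""
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


def joint_distillation_loss(
    teacher_logits: Sequence[float],
    student_logits_by_size: Dict[str, Sequence[float]],
) -> Tuple[Dict[str, float], float]:
    """Return the per-size KL terms and their unweighted mean.

    The teacher distribution is computed once and treated as a constant,
    which is what "frozen" means here; equal weighting is an assumption.
    """
    teacher_probs = softmax(teacher_logits)
    per_size = {
        size: kl_divergence(teacher_probs, softmax(logits))
        for size, logits in student_logits_by_size.items()
    }
    return per_size, sum(per_size.values()) / len(per_size)


# Hypothetical next-token logits over a 3-word vocabulary.
teacher = [2.0, 1.0, 0.1]
students = {
    "12B": [2.0, 1.0, 0.1],  # matches the teacher exactly
    "9B": [1.8, 1.1, 0.2],   # close to the teacher
    "6B": [1.0, 1.0, 1.0],   # uniform, far from the teacher
}

per_size, mean_loss = joint_distillation_loss(teacher, students)
for size, value in per_size.items():
    print(f"{size}: KL = {value:.4f}")
print(f"mean: {mean_loss:.4f}")
```

In this toy setup a single scalar loss covers every size at once, so one optimization step can pull all nested sub-models toward the same teacher.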

Because sub-models are extracted rather than retrained, organizations with limited computational resources can still deploy a powerful reasoning model tailored to their specific needs. The research also shows that compressing reasoning models effectively requires different strategies than standard language model compression, with extended-context training proving critical for preserving performance across model sizes. Future research directions include scaling the framework to even larger model families, exploring task-specific architectural choices, and developing dynamic routing mechanisms for inference. This work establishes a practical pathway for organizations to benefit from advanced reasoning models, even with modest computational budgets, and opens new avenues for efficient large language model development.

👉 More information

🗞 Nemotron Elastic: Towards Efficient Many-in-One Reasoning LLMs

🧠 ArXiv: https://arxiv.org/abs/2511.16664