Large language models are expensive to run, in large part because of self-attention, the mechanism that relates each token in a sequence to the others. Harsh Shah from Carnegie Mellon University and colleagues present SpecAttn, a method that substantially improves inference efficiency while keeping the loss in accuracy modest. The team reuses information already computed during speculative decoding, in which a small draft model proposes tokens for a larger model to verify. That information identifies the most important tokens to attend to, so unnecessary processing can be skipped. SpecAttn cuts key-value cache accesses by over 75 percent and outperforms existing sparse attention methods at comparable sparsity while maintaining high-quality text generation. It points to a practical way to make large language models faster and more scalable.

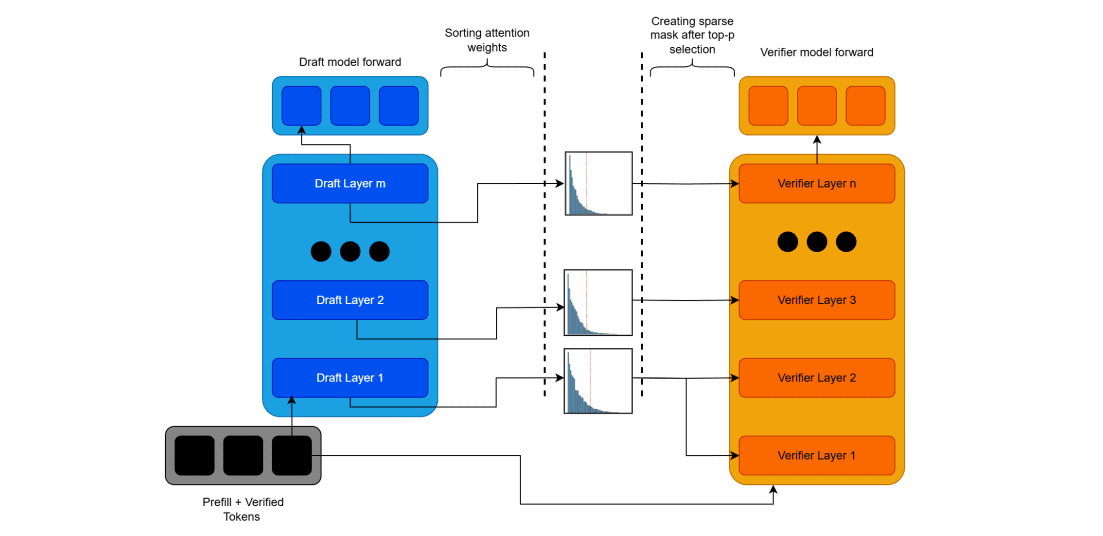

SpecAttn is a training-free approach that works with pre-trained transformers. The core insight is to exploit the attention weights the draft model already computes during speculative decoding to identify important tokens for the target model, eliminating redundant computation while preserving output quality. SpecAttn combines three techniques:

- KL divergence-based layer alignment between the draft and target models.
- A GPU-optimized, sorting-free algorithm that selects top-p tokens from the draft model's attention patterns.
- Dynamic key-value cache pruning guided by these selections.

Because it builds on work that standard speculative decoding pipelines already perform, SpecAttn reduces key-value cache accesses by over 75% with minimal impact on output quality.

Dynamic Sparse Attention for Long Sequences

Scientists are tackling the challenge of scaling large language models by developing sparse attention mechanisms that reduce computational demands. Standard attention processes the relationship between every pair of tokens in a sequence, a cost that grows quadratically with sequence length. This research introduces a framework that attends only to the most relevant tokens, reducing both computation and memory traffic. Tokens are selected dynamically with a top-p rule: the framework keeps the smallest set of tokens whose attention weights together reach a specified fraction p of the total attention mass.
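The top-p rule can be written out explicitly, together with the standard bound it gives on the error of a single query's attention output. This is a textbook-style derivation under stated assumptions, not a reproduction of the paper's own bound. It assumes softmax is recomputed over the selected tokens only, which is equivalent to renormalizing their weights by P_S. It also assumes V bounds the norm of every value vector.

```latex
\begin{aligned}
&\text{Weights } a_i \ge 0,\ \textstyle\sum_{i=1}^{n} a_i = 1;\quad P_S = \textstyle\sum_{i \in S} a_i;\quad V = \max_i \lVert v_i \rVert \\
&S_p = \text{a smallest } S \subseteq \{1,\dots,n\} \text{ with } P_S \ge p \\
&o = \sum_{i=1}^{n} a_i v_i, \qquad \hat{o} = \frac{1}{P_S} \sum_{i \in S} a_i v_i \\
&\lVert o - \hat{o} \rVert
  = \Big\lVert \Big(1 - \frac{1}{P_S}\Big) \sum_{i \in S} a_i v_i + \sum_{i \notin S} a_i v_i \Big\rVert \\
&\phantom{\lVert o - \hat{o} \rVert}
  \le \Big(\frac{1}{P_S} - 1\Big) P_S V + (1 - P_S) V
  = 2(1 - P_S)\, V \le 2(1 - p)\, V \quad (S = S_p)
\end{aligned}
```

SpecAttn chooses S from the draft model's weights. In that case the equality and the first inequality still hold with a_i and P_S taken from the verifier's attention. However, P_S ≥ p is no longer guaranteed, so the bound is only as tight as the draft's selection is accurate.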

The method rests on a theoretical error bound for the sparse attention approximation, which limits how much output quality can degrade as computation is reduced. A key challenge is aligning layers between the smaller, faster “draft” model and the larger, more accurate “verifier” (target) model. The researchers address this with a dynamic time warping algorithm that finds an optimal layer mapping. The mapping preserves layer order and allows a layer in one model to be matched with several layers in the other.

Experiments evaluate the approach with perplexity, which measures how well the model predicts the next token in a sequence (lower is better). The proposed method performs competitively with other sparse attention techniques and with a full-attention baseline. Detailed analyses, including cumulative perplexity over the course of decoding, shed light on the stability and accuracy of the model as generation proceeds.
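The layer alignment step can be sketched with textbook dynamic time warping. This is an illustrative sketch, not the authors' code, and it makes three assumptions:

- Each layer is summarized by a single attention distribution over the same cached tokens, for example head-averaged weights for one query. This is a hypothetical choice.
- KL(verifier || draft) serves as the per-pair cost, following the KL divergence-based alignment described earlier.
- When a verifier layer aligns with several draft layers, the cheapest one is kept.

The names `kl_divergence` and `dtw_layer_mapping` are hypothetical.

```python
import math
from typing import List, Sequence

Dist = Sequence[float]  # one attention distribution over the same cached tokens


def kl_divergence(p: Dist, q: Dist, eps: float = 1e-12) -> float:
    """KL(p || q) in nats; eps guards against log(0) when q has zeros."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q) if pi > 0)


def dtw_layer_mapping(verifier_attn: Sequence[Dist], draft_attn: Sequence[Dist]) -> List[int]:
    """Return mapping[j] = draft layer assigned to verifier layer j (order-preserving)."""
    n, m = len(verifier_attn), len(draft_attn)
    # Assumption: KL(verifier || draft) is the per-pair alignment cost.
    cost = [[kl_divergence(verifier_attn[j], draft_attn[i]) for i in range(m)] for j in range(n)]
    inf = float("inf")
    acc = [[inf] * m for _ in range(n)]  # acc[j][i]: cheapest monotone path ending at (j, i)
    for j in range(n):
        for i in range(m):
            if j == 0 and i == 0:
                acc[j][i] = cost[0][0]
                continue
            prev = min(
                acc[j - 1][i] if j > 0 else inf,                # next verifier layer, same draft layer
                acc[j][i - 1] if i > 0 else inf,                # same verifier layer, next draft layer
                acc[j - 1][i - 1] if j > 0 and i > 0 else inf,  # advance both
            )
            acc[j][i] = cost[j][i] + prev
    # Backtrack from the last layer pair to (0, 0).
    j, i = n - 1, m - 1
    path = [(j, i)]
    while (j, i) != (0, 0):
        steps = []
        if j > 0 and i > 0:
            steps.append((acc[j - 1][i - 1], j - 1, i - 1))
        if j > 0:
            steps.append((acc[j - 1][i], j - 1, i))
        if i > 0:
            steps.append((acc[j][i - 1], j, i - 1))
        _, j, i = min(steps)
        path.append((j, i))
    # A verifier layer may align with several draft layers; keep the cheapest one.
    mapping = [-1] * n
    for j, i in path:
        if mapping[j] == -1 or cost[j][i] < cost[j][mapping[j]]:
            mapping[j] = i
    return mapping
```

Consider a toy case with draft layers `[0.7, 0.2, 0.1]` and `[0.1, 0.2, 0.7]` and four verifier layers that drift from the first pattern to the second. `dtw_layer_mapping` maps the verifier layers to draft layers `[0, 0, 1, 1]`. Because DTW only moves forward, the mapping never sends a deeper verifier layer to a shallower draft layer.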

Speculative Attention Reduces Cache Accesses Dramatically

Scientists have substantially reduced the computational cost of language model inference with a new technique called SpecAttn. By pruning unnecessary computation, the system reduces key-value cache accesses by over 75% while incurring only a modest increase in perplexity on a standard dataset. These efficiency gains require no model retraining or architectural changes. The work takes a new approach to sparse attention that builds on computation speculative decoding pipelines already perform. SpecAttn uses the attention weights computed by a smaller “draft” model to identify the most informative tokens for a larger “verifier” model.

This lets the verifier attend to only a subset of tokens, reducing the cost that stems from the quadratic complexity of standard self-attention. The team establishes layer correspondence between the draft and verifier models using the KL divergence between their attention distributions. Each verifier layer then takes its token selections from the draft layer whose attention behaves most similarly. A key innovation is a sorting-free top-p (nucleus) selection algorithm, which identifies important tokens from the draft model's attention patterns without the overhead of a full sort. This makes token selection fast and well suited to GPU execution.
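The article does not spell out the sorting-free algorithm, so the sketch below uses one common sorting-free strategy as a stand-in. It runs bisection on a weight threshold, and each step is a single masked sum that maps naturally onto a parallel reduction on a GPU. The function returns every token whose weight is at least the final threshold. With distinct weights this matches the sorted top-p set, which `top_p_sorted` computes as a reference. Both function names are hypothetical, and plain Python lists stand in for GPU tensors.

```python
from typing import List, Sequence


def top_p_sorted(weights: Sequence[float], p: float) -> List[int]:
    """Reference: sort descending and keep tokens until their mass reaches p."""
    order = sorted(range(len(weights)), key=lambda i: weights[i], reverse=True)
    chosen, mass = [], 0.0
    for i in order:
        chosen.append(i)
        mass += weights[i]
        if mass >= p:
            break
    return sorted(chosen)


def top_p_threshold(weights: Sequence[float], p: float, iters: int = 40) -> List[int]:
    """Sorting-free top-p via bisection on a weight threshold (assumes 0 < p <= sum(weights)).

    Each iteration is one masked sum over all tokens; on a GPU this would be a
    parallel reduction rather than a Python generator.
    """
    def mass_above(tau: float) -> float:
        return sum(w for w in weights if w >= tau)

    # Invariant: mass_above(lo) >= p and mass_above(hi) < p.
    lo, hi = 0.0, max(weights) + 1.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if mass_above(mid) >= p:
            lo = mid  # threshold can still rise without losing too much mass
        else:
            hi = mid
    return [i for i, w in enumerate(weights) if w >= lo]
```

For weights `[0.05, 0.5, 0.1, 0.3, 0.05]` and p = 0.85, both functions select tokens `[1, 2, 3]`. The fixed iteration count keeps control flow uniform. The trade-off is that tokens whose weights lie very close to the threshold, or tie with it, may also be included.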

Guided by these selections, the method dynamically prunes the key-value cache, the memory that stores keys and values for previously processed tokens, further accelerating inference. Experiments confirm that SpecAttn substantially reduces cache accesses, a critical bottleneck in large language model inference, with little loss in output quality. The framework has three core steps: layer mapping, token selection, and sparse attention computation. By uniting speculative decoding with fully dynamic, content-aware sparse attention, SpecAttn substantially lowers inference cost, paving the way for faster and more scalable language models. Because it requires no retraining or model modification, the approach can be applied readily to a wide range of architectures and applications.
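The final step, sparse attention computation, can be sketched as follows. The sketch assumes the selected cache positions were already produced by top-p selection on the mapped draft layer's attention. Only the selected keys and values are read, which is where the key-value cache savings come from. `sparse_attention` and `kv_access_reduction` are hypothetical names. A real implementation would gather the selected rows on the GPU instead of looping in Python.

```python
import math
from typing import List, Sequence

Vector = List[float]


def sparse_attention(query: Vector, keys: Sequence[Vector], values: Sequence[Vector],
                     selected: Sequence[int]) -> Vector:
    """Scaled dot-product attention restricted to the selected cache positions.

    Softmax is taken over the selected logits only, which renormalizes their weights.
    """
    scale = 1.0 / math.sqrt(len(query))
    logits = [scale * sum(q * k for q, k in zip(query, keys[i])) for i in selected]
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    z = sum(exps)
    out = [0.0] * len(values[0])
    for e, i in zip(exps, selected):
        for d, v in enumerate(values[i]):
            out[d] += (e / z) * v
    return out


def kv_access_reduction(num_cached: int, num_selected: int) -> float:
    """Fraction of cached key/value entries that are not read for this query."""
    return 1.0 - num_selected / num_cached
```

Passing every index as `selected` recovers ordinary full attention. For example, reading 2 of 8 cached entries gives `kv_access_reduction(8, 2) == 0.75`, the kind of saving behind the reported 75% reduction.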

Efficient Inference via Speculative Sparse Attention

SpecAttn represents a significant advance in efficient large language model inference. The researchers developed a training-free framework that combines speculative decoding with dynamic sparse attention to substantially reduce key-value cache usage, a major bottleneck during inference. It maps layers between the draft and verifier models using KL divergence similarity and selects important tokens with a sorting-free method. Together, these considerably reduce memory accesses without significantly compromising output quality. Empirical evaluations show that SpecAttn can reduce key-value cache accesses by up to 78%, with a modest perplexity increase of approximately 15.29% on standard datasets.

This performance surpasses existing sparse attention methods at comparable sparsity levels, demonstrating the effectiveness of its context-aware, dynamic pruning. The work shows how speculative execution can be extended to provide approximate verification without substantial quality degradation. The authors note that further research could explore alternative similarity metrics for layer mapping and extend evaluations to longer context lengths to better understand the system's scalability. Future work also includes integrating SpecAttn into production serving frameworks to take advantage of advanced memory management techniques and validate its performance in real-world deployments.
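To make the reported perplexity figure concrete, the sketch below computes perplexity from per-token log-probabilities and the relative increase between two perplexities. The baseline value is hypothetical and not taken from the paper. It only illustrates what a 15.29% increase means arithmetically. The function names are likewise hypothetical.

```python
import math
from typing import Sequence


def perplexity(token_logprobs: Sequence[float]) -> float:
    """Perplexity = exp(mean negative log-likelihood), with natural-log probabilities."""
    mean_nll = -sum(token_logprobs) / len(token_logprobs)
    return math.exp(mean_nll)


def relative_increase(baseline: float, candidate: float) -> float:
    """Relative change of candidate over baseline (0.1529 means +15.29%)."""
    return (candidate - baseline) / baseline


# Hypothetical baseline: assigning every token probability 0.1 gives perplexity 10.
base = perplexity([math.log(0.1)] * 5)
sparse = base * 1.1529  # a 15.29% increase applied to this hypothetical baseline
print(f"{base:.2f} -> {sparse:.2f} ({relative_increase(base, sparse):.2%})")
```

Because perplexity is an exponential of the average loss, a relative increase in perplexity corresponds to a fixed additive increase in mean negative log-likelihood, here about log(1.1529) ≈ 0.14 nats per token.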

👉 More information

🗞 SpecAttn: Speculating Sparse Attention

🧠 ArXiv: https://arxiv.org/abs/2510.27641