Scientists Luis Lazo, Hamed Jelodar and Roozbeh Razavi-Far from the University of New Brunswick in Fredericton, Canada, have proposed a novel homotopy-inspired prompt obfuscation framework to address security and safety concerns in Large Language Models (LLMs). By testing 15,732 prompts across models including Llama, DeepSeek, KIMI (for code generation) and Claude, their research exposes weaknesses in current LLM safeguards. The findings highlight the need for enhanced defence mechanisms and reliable detection strategies to ensure safer and more trustworthy technologies. The work provides a principled approach to analysing and mitigating potential weaknesses in LLMs.

This research demonstrates how carefully crafted prompts can reveal unexpected and latent behaviours within these AI systems. The experiments show that current LLM safeguards are not foolproof: obfuscated prompts can elicit outputs that the models would otherwise refuse to produce.

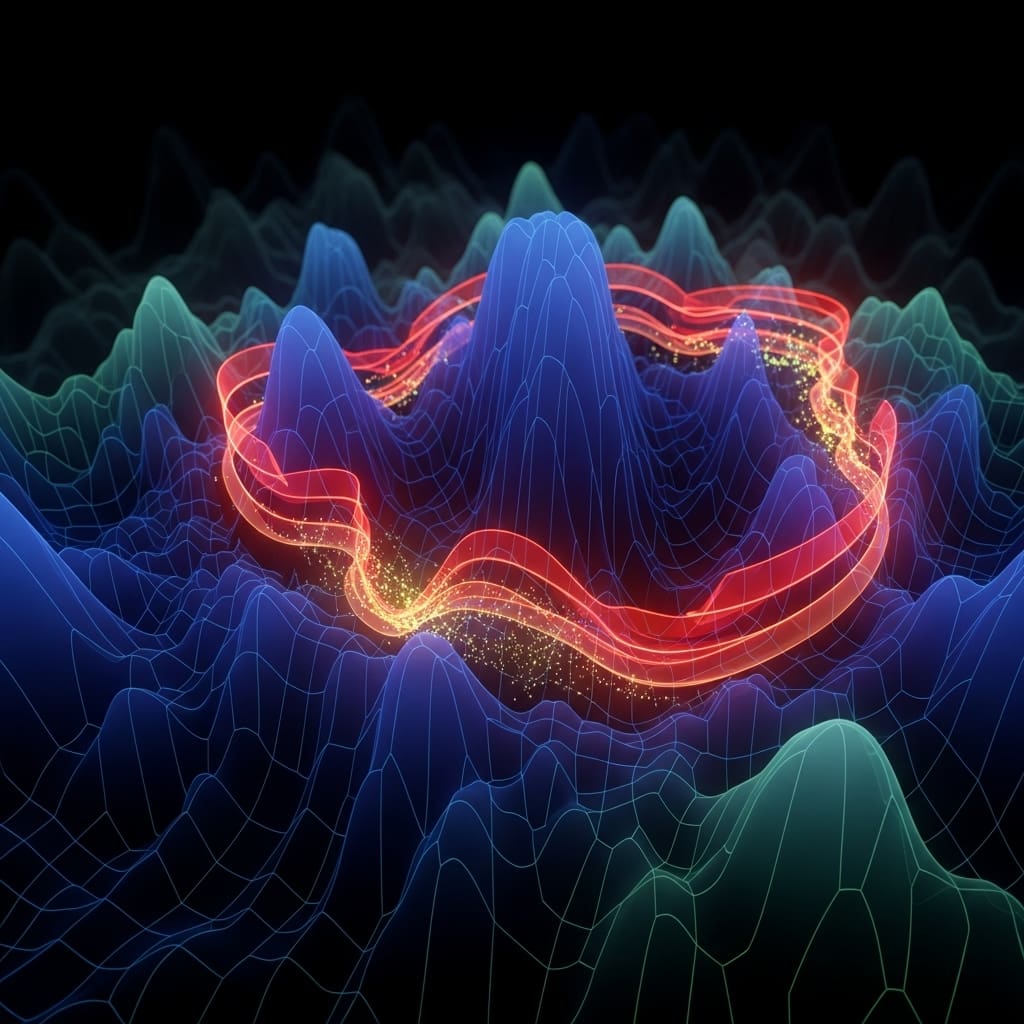

The study establishes a principled approach to analysing potential weaknesses in LLMs by borrowing the mathematical concept of homotopy, the continuous deformation of one function or shape into another. Researchers constructed prompts that alter the input in small steps, effectively ‘probing’ the LLM’s response landscape and identifying areas where safeguards might fail. This method allows for a systematic exploration of model behaviour, moving beyond one-off adversarial attacks to understand the underlying reasons for vulnerabilities. The work opens new avenues for developing more resilient LLMs capable of withstanding sophisticated prompt-based manipulations.
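For readers unfamiliar with the term, the standard textbook definition of a homotopy is given below. The symbols $X$, $Y$, $f$, $g$ and $H$ are generic notation, not taken from the paper, and the closing remark about prompts is only an informal analogy, since prompts are discrete text rather than points in a continuous space.

```latex
% Standard definition (generic notation, not the paper's):
% two continuous maps f, g : X -> Y are homotopic if there is a continuous map
\[
  H : X \times [0,1] \to Y, \qquad
  H(x,0) = f(x), \quad H(x,1) = g(x) \quad \text{for all } x \in X .
\]
% Informal analogy (an assumption for illustration): a chain of prompts
\[
  p_0 \to p_1 \to \dots \to p_n ,
\]
% in which each step changes the wording only slightly
% while the intended meaning stays fixed.
```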

Importantly, the research goes beyond simply identifying vulnerabilities; it provides a framework for mitigating them. By understanding how prompts influence model behaviour, scientists can design more effective filtering mechanisms and develop strategies to improve the robustness of LLMs against malicious inputs. The team’s experiments with code generation models, such as KIMI, revealed a propensity for ‘hallucinations’ (generating confident but incorrect code), a critical issue demanding attention in the field. This detailed analysis of code generation processes, including pre-training, fine-tuning, and sampling, provides valuable guidance for improving the reliability of LLM-generated software.

Furthermore, the study’s focus on semantic robustness, ensuring that altered prompts maintain their original meaning, is particularly significant. Maintaining semantic equivalence is crucial for applications involving mathematical formulas or complex logical reasoning, where even slight changes in phrasing can lead to drastically different outcomes. The research demonstrates how a set of reductions can be applied to prompts to preserve their underlying meaning while obfuscating their surface structure, effectively bypassing superficial safeguards. Researchers actively tested established jailbreak strategies, including character description, guideline exemptions, and narrative framing, to determine their effectiveness in eliciting unintended outputs from the LLMs.

The study employs a functional homotopy (FH) method, based on the principle that continuous deformation can reveal vulnerabilities in model responses. This technique, drawing from topological mathematics, treats prompts as points in a ‘space’ and explores how a series of small changes, or deformations, can drastically alter model outputs, effectively ‘jailbreaking’ the system. Experiments employed techniques such as Virtualization, Do Anything Now (DAN), Prompt Injection, and Prompt Masking to systematically probe the LLMs’ defences. To evaluate code generation capabilities, the team assessed LLM performance on tasks involving code completion, automatic program repair, and the generation of code from natural language descriptions.

Scientists used CodeScore, an LLM-based tool, to estimate the functional correctness and executability of generated code, providing a quantitative measure of performance. Furthermore, the research addressed the issue of ‘hallucinations’ (instances where LLMs produce confident but factually incorrect code) by focusing on semantic robustness and employing a pre-processing step involving formula simplification. The AlphaCode architecture was examined, revealing its three core modules: Pre-Training, where the Transformer model is fed a large codebase; Fine-Tuning, refining the model’s solution presentation; and Sampling & Evaluation, generating and filtering potential solutions. Experiments revealed that latent model behaviours can be unexpectedly influenced by obfuscated prompts, demonstrating a critical need for enhanced safeguards and detection strategies. This work establishes a principled framework for analysing and mitigating potential weaknesses within these powerful AI technologies.
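The ‘generate and filter’ idea behind a Sampling & Evaluation stage can be sketched in a few lines of Python. This is a minimal illustration, not AlphaCode or CodeScore: the candidate programs, the example tests and the helper names `pass_rate` and `filter_candidates` are all hypothetical, and correctness is measured by actually running the code rather than by an LLM-based estimate.

```python
from typing import List, Tuple

# Hypothetical candidate programs, standing in for samples drawn from a model.
CANDIDATES: List[str] = [
    "def add(a, b):\n    return a - b\n",              # fluent but wrong
    "def add(a, b):\n    return a + b\n",              # correct
    "def add(a, b):\n    return a + b + undefined\n",  # crashes when called
]

# Hypothetical example tests: (arguments, expected result).
EXAMPLE_TESTS: List[Tuple[Tuple[int, int], int]] = [
    ((1, 2), 3),
    ((0, 0), 0),
    ((-1, 1), 0),
]


def pass_rate(source: str, func_name: str, tests) -> float:
    """Return the fraction of tests a candidate passes (0.0 if it fails to load)."""
    namespace: dict = {}
    try:
        # exec is acceptable only for trusted toy snippets like these;
        # real model output should run in a sandbox.
        exec(source, namespace)
        func = namespace[func_name]
    except Exception:
        return 0.0
    passed = 0
    for args, expected in tests:
        try:
            if func(*args) == expected:
                passed += 1
        except Exception:
            pass  # a runtime error counts as a failed test
    return passed / len(tests)


def filter_candidates(candidates: List[str], func_name: str, tests) -> List[str]:
    """Keep only the candidates that pass every example test."""
    return [src for src in candidates if pass_rate(src, func_name, tests) == 1.0]


if __name__ == "__main__":
    for src in CANDIDATES:
        print(round(pass_rate(src, "add", EXAMPLE_TESTS), 2))
    print(len(filter_candidates(CANDIDATES, "add", EXAMPLE_TESTS)), "candidate(s) kept")
```

The first candidate shows why filtering matters: it reads as plausible code and even passes one of the three tests, which is the kind of confident but incorrect output described above.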

Results demonstrate the framework’s ability to expose vulnerabilities in current LLM safeguards, highlighting areas where models can be manipulated despite existing security measures. The team recorded model responses to the full prompt set, identifying instances where the LLMs deviated from expected behaviour or generated potentially harmful outputs. The data show that the systematic application of obfuscated prompts consistently revealed weaknesses in content filtering mechanisms, enabling the generation of outputs that would typically be blocked. This analysis provides valuable insights into the limitations of current LLM security protocols and informs the development of more robust defence mechanisms.
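Recorded responses of this kind are typically summarised as a bypass rate per model and technique. The sketch below shows only that bookkeeping step; the record layout, the model names and the labels are hypothetical placeholders, not data or code from the study.

```python
from collections import defaultdict
from typing import Dict, List, Tuple

# Hypothetical evaluation log, not data from the study:
# (model name, prompt technique, was the safeguard bypassed?)
RECORDS: List[Tuple[str, str, bool]] = [
    ("model-a", "narrative framing", True),
    ("model-a", "narrative framing", False),
    ("model-a", "character description", False),
    ("model-b", "narrative framing", True),
    ("model-b", "guideline exemptions", True),
    ("model-b", "guideline exemptions", False),
]


def bypass_rates(records: List[Tuple[str, str, bool]]) -> Dict[Tuple[str, str], float]:
    """Return the fraction of bypassed prompts per (model, technique) pair."""
    counts = defaultdict(lambda: [0, 0])  # key -> [bypassed, total]
    for model, technique, bypassed in records:
        entry = counts[(model, technique)]
        entry[0] += int(bypassed)
        entry[1] += 1
    return {key: hits / total for key, (hits, total) in counts.items()}


if __name__ == "__main__":
    for (model, technique), rate in sorted(bypass_rates(RECORDS).items()):
        print(f"{model:8s} {technique:24s} {rate:.2f}")
```

Keeping the model and the technique together as the key makes it easy to see whether a weakness is specific to one model or shared across several.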

The measurements support the effectiveness of the homotopy-inspired approach in eliciting unexpected behaviours from the tested LLMs. Tests show that techniques like character description, guideline exemptions, and narrative framing can successfully bypass safeguards, allowing researchers to systematically study model vulnerabilities. The work provides a method for probing the boundaries of LLM safety and identifying potential attack vectors. Furthermore, the study explored the implications for code generation, a critical application of LLMs. Scientists observed a propensity for “hallucinations” (instances where the models generate confident, fluent code that is, in fact, incorrect or fabricated), even when employing robust frameworks like AlphaCode, which utilises pre-training modules.

👉 More information

🗞 LLM Security and Safety: Insights from Homotopy-Inspired Prompt Obfuscation

🧠 ArXiv: https://arxiv.org/abs/2601.14528