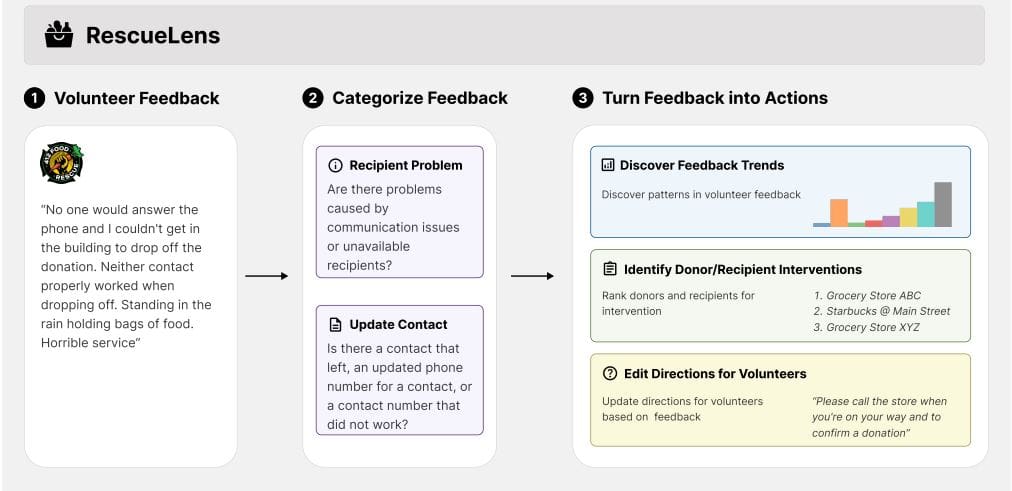

Food rescue organisations play a vital role in addressing both food insecurity and food waste, and they rely heavily on volunteers to redistribute surplus food. Naveen Raman, Jingwu Tang, and Zhiyu Chen, from Carnegie Mellon University and the University of Texas at Dallas, alongside Zheyuan Ryan Shi from the University of Pittsburgh and collaborators at 412 Food Rescue, now present RescueLens, a tool that changes how these organisations manage volunteer feedback. RescueLens automatically categorises volunteer experiences, identifies key areas for improvement, and suggests targeted follow-up actions with donors and recipients. In evaluation, RescueLens recovers 96% of reported issues with 71% precision. It also lets organisers concentrate on the small fraction of donors responsible for a disproportionate share (over 30%) of concerns, which streamlines operations and improves resource allocation. The system is now deployed at 412 Food Rescue.

Rewriting Directions, Evaluating Helpfulness, Novelty, Clarity

This collection of materials details a system for improving the clarity and usefulness of directions given to volunteers. It includes a method for determining when a direction change is necessary, a scoring system that evaluates rewritten directions on helpfulness, novelty, and clarity, and examples of how to analyze volunteer feedback and generate improved instructions. The system supports automated rewriting, quality control, training data creation, and feedback analysis. As a rewriting engine, it accepts donor and recipient information, volunteer comments, and existing directions as input, and generates revised instructions.

It can process multiple inputs simultaneously, explain its reasoning for changes, and evaluate rewritten directions, assigning scores for helpfulness, novelty, and clarity with detailed justifications. The system also analyzes volunteer comments, identifying key themes and areas for improvement, and summarizes feedback concisely. It can generate synthetic data for training machine learning models and refine prompts to improve performance. For example, given input detailing a donor, recipient, and a volunteer comment about a closed entrance, the system determines if a direction change is needed and rewrites recipient directions accordingly, while leaving donor directions unchanged, providing a clear explanation of the changes made. To begin, users simply specify the desired task, such as rewriting directions, evaluating instructions, or analyzing feedback, and provide the necessary input data.
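The closed-entrance example above can be sketched as a small data model. This is a minimal illustration, not the authors' implementation: the class names, field names, and the `apply_decision` helper are all hypothetical, and the decision is hand-written here to stand in for what a language model would return. The article does not state the scoring scale, so `RewriteScores` simply holds three integers.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass
class RewriteInput:
    """Hypothetical input: existing directions plus one volunteer comment."""
    donor_directions: str
    recipient_directions: str
    volunteer_comment: str


@dataclass
class RewriteDecision:
    """Hypothetical model output; None means 'leave this side unchanged'."""
    new_donor_directions: Optional[str]
    new_recipient_directions: Optional[str]
    explanation: str


@dataclass
class RewriteScores:
    """Scores for a rewrite; the scale is an assumption, not from the article."""
    helpfulness: int
    novelty: int
    clarity: int


def apply_decision(inp: RewriteInput, decision: RewriteDecision) -> Tuple[str, str]:
    """Return (donor, recipient) directions after applying the decision."""
    donor = inp.donor_directions
    if decision.new_donor_directions is not None:
        donor = decision.new_donor_directions
    recipient = inp.recipient_directions
    if decision.new_recipient_directions is not None:
        recipient = decision.new_recipient_directions
    return donor, recipient


inp = RewriteInput(
    donor_directions="Pick up at the loading dock behind the store.",
    recipient_directions="Deliver to the front entrance.",
    volunteer_comment="Front entrance was closed, had to use the side door.",
)
# Hand-written stand-in for a model response: only the recipient side changes.
decision = RewriteDecision(
    new_donor_directions=None,
    new_recipient_directions="Deliver to the side door; the front entrance may be closed.",
    explanation="The comment reports a closed entrance at the recipient only.",
)
donor, recipient = apply_decision(inp, decision)
```

Representing "no change" as `None` keeps the unchanged side byte-for-byte identical to the original, which makes it easy to audit which directions a rewrite actually touched.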

RescueLens Categorizes Volunteer Feedback Automatically

Researchers have developed RescueLens, an innovative system that automatically categorizes volunteer feedback for food rescue organizations, streamlining their operations and improving volunteer retention. RescueLens utilizes a large language model, employing few-shot learning, and a set of action modules to identify issues and update volunteer directions. The core of RescueLens lies in its ability to efficiently categorize feedback, enabling organizers to prioritize issues and allocate resources effectively.
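As a rough sketch of how few-shot categorization with a language model can be set up, the snippet below assembles a prompt from a handful of labelled examples and parses a comma-separated answer. The category names, prompt wording, and function names are assumptions made for illustration; the article does not list the actual categories or prompts, and no model is called here.

```python
# Hypothetical category names; the article does not enumerate the real ones.
CATEGORIES = ["donor_issue", "recipient_issue", "direction_issue"]

# A few labelled examples, each a (feedback text, labels) pair.
EXAMPLES = [
    ("Donor had nothing ready when I arrived.", ["donor_issue"]),
    ("The address in the app led to the wrong building.", ["direction_issue"]),
    ("Great rescue, everything went smoothly.", []),
]


def build_prompt(categories, examples, feedback):
    """Build a few-shot classification prompt as plain text."""
    lines = [
        "Label the volunteer comment with zero or more of these categories:",
        ", ".join(categories),
        "",
    ]
    for text, labels in examples:
        lines.append(f"Feedback: {text}")
        lines.append(f"Labels: {', '.join(labels) if labels else 'none'}")
        lines.append("")
    # The new comment goes last, with the label slot left open for the model.
    lines.append(f"Feedback: {feedback}")
    lines.append("Labels:")
    return "\n".join(lines)


def parse_labels(response, categories):
    """Keep only labels that belong to the known category list."""
    parts = [part.strip() for part in response.split(",")]
    return [label for label in parts if label in categories]


prompt = build_prompt(CATEGORIES, EXAMPLES, "Nobody answered the door at drop-off.")
labels = parse_labels("recipient_issue, made_up_label", CATEGORIES)
```

Filtering the response against the known category list is a cheap guard against a model inventing labels that downstream code does not expect.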

Evaluations demonstrate that RescueLens identifies 96% of volunteer issues with 71% precision. They also show that a small percentage of donors, just 0.5%, accounts for over 30% of reported issues, allowing organizers to focus interventions where they are most needed. Beyond categorization, RescueLens incorporates action modules that translate feedback into actionable insights. These modules identify specific donors and recipients requiring follow-up and automatically suggest updates to volunteer directions based on reported problems. Since its deployment at 412 Food Rescue in May 2025, RescueLens has analyzed over 1,200 pieces of volunteer feedback, streamlining the feedback process and allowing organizers to better allocate their time and address critical issues, ultimately contributing to improved volunteer retention and organizational efficiency.
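The concentration finding (a tiny fraction of donors accounting for a large share of issues) is a simple calculation once issues are counted per donor. The sketch below uses a made-up toy dataset chosen only to make the arithmetic easy to follow; the function name and the numbers are illustrative assumptions, not data from the study.

```python
def issue_share_of_top(issues_per_donor, fraction):
    """Share of all reported issues attributable to the top `fraction` of donors."""
    counts = sorted(issues_per_donor.values(), reverse=True)
    total = sum(counts)
    if total == 0:
        return 0.0
    # Always inspect at least one donor, even for very small fractions.
    k = max(1, round(fraction * len(counts)))
    return sum(counts[:k]) / total


# Toy data (not from the paper): 200 donors and 100 issues in total.
toy_counts = {"donor_000": 33}
toy_counts.update({f"donor_{i:03d}": 1 for i in range(1, 68)})    # 67 donors, 1 issue each
toy_counts.update({f"donor_{i:03d}": 0 for i in range(68, 200)})  # 132 donors, no issues

share = issue_share_of_top(toy_counts, 0.005)  # top 0.5% of 200 donors is 1 donor
```

In this toy dataset the single worst donor holds 33 of 100 issues, so following up with one donor out of 200 would address about a third of the reported problems.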

RescueLens Accurately Identifies Volunteer Feedback Issues

Researchers present RescueLens, a new system designed to automatically analyze volunteer feedback for food rescue organizations, addressing the challenges of manually processing large volumes of data and prioritizing issues. The team developed RescueLens, powered by large language models, and evaluated its performance on a dataset of 14,439 pieces of text feedback collected from 200,000 rescue trips. Results demonstrate that RescueLens can recover 96% of volunteer issues with 71% precision, significantly improving the efficiency of feedback analysis. To categorize feedback, the team defined seven key categories through a process of open coding and iterative refinement.
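The two headline numbers can be read as recall (the share of true issues that the system recovers) and precision (the share of predicted issues that are correct). The sketch below shows one common way to compute them when each piece of feedback can carry several labels. The micro-averaging choice, the label names, and the toy data are assumptions for illustration; the article does not say how the reported figures were aggregated.

```python
def precision_recall(gold, predicted):
    """Micro-averaged precision and recall over per-item label sets."""
    true_positives = sum(len(g & p) for g, p in zip(gold, predicted))
    n_predicted = sum(len(p) for p in predicted)
    n_gold = sum(len(g) for g in gold)
    precision = true_positives / n_predicted if n_predicted else 0.0
    recall = true_positives / n_gold if n_gold else 0.0
    return precision, recall


# Toy annotations for four comments (hypothetical labels, not study data).
gold = [
    {"donor_issue"},
    {"direction_issue"},
    set(),
    {"recipient_issue", "direction_issue"},
]
predicted = [
    {"donor_issue", "direction_issue"},  # one correct, one spurious
    {"direction_issue"},                 # correct
    {"donor_issue"},                     # spurious
    {"recipient_issue"},                 # one correct, one missed
]

precision, recall = precision_recall(gold, predicted)
```

Here 3 of 5 predicted labels are correct (precision 0.6) and 3 of 4 true labels are found (recall 0.75). A triage tool can reasonably favour recall over precision, since a missed issue is invisible to organizers while a false alarm only costs a quick look.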

RescueLens uses these categories, employing in-context learning with 3-8 few-shot examples, to classify incoming feedback without requiring extensive annotated datasets. This allows organizers to quickly identify the root causes of issues during rescue trips.

Beyond categorization, RescueLens converts feedback into actionable insights by ranking donors and recipients based on the rate of volunteer-reported issues. The system computes a score based on volunteer ratings and issue predictions, revealing that organizers can focus on just 0.5% of donors responsible for over 30% of reported problems. This targeted approach streamlines intervention efforts and optimizes resource allocation, for example by suggesting follow-up with specific donors or recipients. Furthermore, RescueLens automatically rewrites volunteer directions based on feedback, addressing issues like inaccurate locations and unclear instructions, and improving the overall rescue experience.

The researchers designed RescueLens to be adaptable, with customizable scoring systems to reflect specific organizational needs. Future development will focus on incorporating a tracking system to monitor interventions and a question-answering feature based on historical feedback, further enhancing the tool’s utility and impact. The team has made the code and prompts publicly available to facilitate adoption by other organizations seeking to improve their volunteer engagement and operational efficiency.
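The donor ranking described above, a score that combines volunteer ratings with issue predictions, might look something like the following. The exact formula is not given in the article, so the weighted sum, the default weights, the assumed 1-5 rating scale, and all names here are illustrative assumptions; the adjustable weights are one possible reading of "customizable scoring systems".

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Trip:
    """One rescue trip (hypothetical record layout)."""
    donor: str
    rating: int            # assumed 1-5 volunteer rating, 5 is best
    has_donor_issue: bool  # assumed output of the feedback classifier


def rank_donors(trips, issue_weight=1.0, rating_weight=0.5, max_rating=5):
    """Rank donors by a weighted mix of issue rate and low ratings, worst first."""
    by_donor = defaultdict(list)
    for trip in trips:
        by_donor[trip.donor].append(trip)

    scores = {}
    for donor, donor_trips in by_donor.items():
        n = len(donor_trips)
        issue_rate = sum(t.has_donor_issue for t in donor_trips) / n
        # Map each rating to a 0-1 penalty: best rating gives 0, worst gives 1.
        penalty = sum((max_rating - t.rating) / (max_rating - 1) for t in donor_trips) / n
        scores[donor] = issue_weight * issue_rate + rating_weight * penalty

    return sorted(scores.items(), key=lambda item: item[1], reverse=True)


trips = [
    Trip("A", 5, False),
    Trip("A", 4, False),
    Trip("B", 1, True),
    Trip("B", 2, True),
    Trip("B", 5, False),
]
ranked = rank_donors(trips)
```

With these toy trips donor B ranks first. A rate-based score like this is noisy for donors with very few trips, so a practical version would probably need a minimum trip count or some form of smoothing.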

👉 More information

🗞 RescueLens: LLM-Powered Triage and Action on Volunteer Feedback for Food Rescue

🧠 ArXiv: https://arxiv.org/abs/2511.15698