Large language models continue to drive innovation across many fields, but performing their calculations efficiently remains a key challenge for deploying these models on everyday devices. Zifan He, Shengyu Ye, and Rui Ma, along with Yang Wang from Microsoft Research Asia and Jason Cong from the University of California, Los Angeles, present an approach that improves inference efficiency by shifting the workload from arithmetic to fast memory access. Their work introduces LUT-LLM, the first FPGA accelerator capable of running large language models with more than one billion parameters using memory-based operations, replacing arithmetic with table lookups. Implemented on an AMD V80 FPGA, the design achieves lower latency than the AMD MI210 GPU and higher energy efficiency than both the MI210 and the NVIDIA A100. It also scales to larger models such as Qwen3 32B, a substantial step towards practical on-device artificial intelligence.

Large Language Model Quantization and Optimization

Research into large language models (LLMs) is rapidly advancing, with scientists focusing on improving their performance, efficiency, and deployment. A key area of investigation is quantization, a technique that reduces model size and computational cost by decreasing the precision of numerical values. Researchers are exploring various quantization levels to optimize LLMs for different applications. Companies like Meta, Qualcomm, and Microsoft are actively involved, developing and deploying LLMs for natural language understanding, question answering, and other applications. Current research areas include efficient LLM inference, low-bit quantization, hardware acceleration, and the development of robust benchmarks.
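To make the basic idea concrete, the Python sketch below shows plain symmetric scalar quantization. Each value is divided by one shared scale, rounded onto a signed integer grid and clipped, and dequantization multiplies back by the scale. The function names and the 8-bit default are illustrative choices, not taken from any of the work described here. LUT-LLM itself relies on vector quantization, which quantizes whole sub-vectors rather than single values.

```python
def quantize_symmetric(values, bits=8):
    """Map floats onto signed integers in [-qmax, qmax] with one shared scale."""
    qmax = 2 ** (bits - 1) - 1            # e.g. 127 for 8 bits, 7 for 4 bits
    peak = max(abs(v) for v in values)
    scale = peak / qmax if peak > 0 else 1.0
    # Round to the nearest grid point, then clip into the representable range.
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Recover approximate floats from the integer codes."""
    return [x * scale for x in q]
```

With `bits=4`, for example, the values `[0.5, -1.27, 0.02, 0.9]` share a scale of about 0.18 and map to the codes `[3, -7, 0, 5]`. Each value now needs only 4 bits plus the shared scale, at the cost of a rounding error of at most half a scale step.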

FPGA Acceleration via Quantized Table Lookups

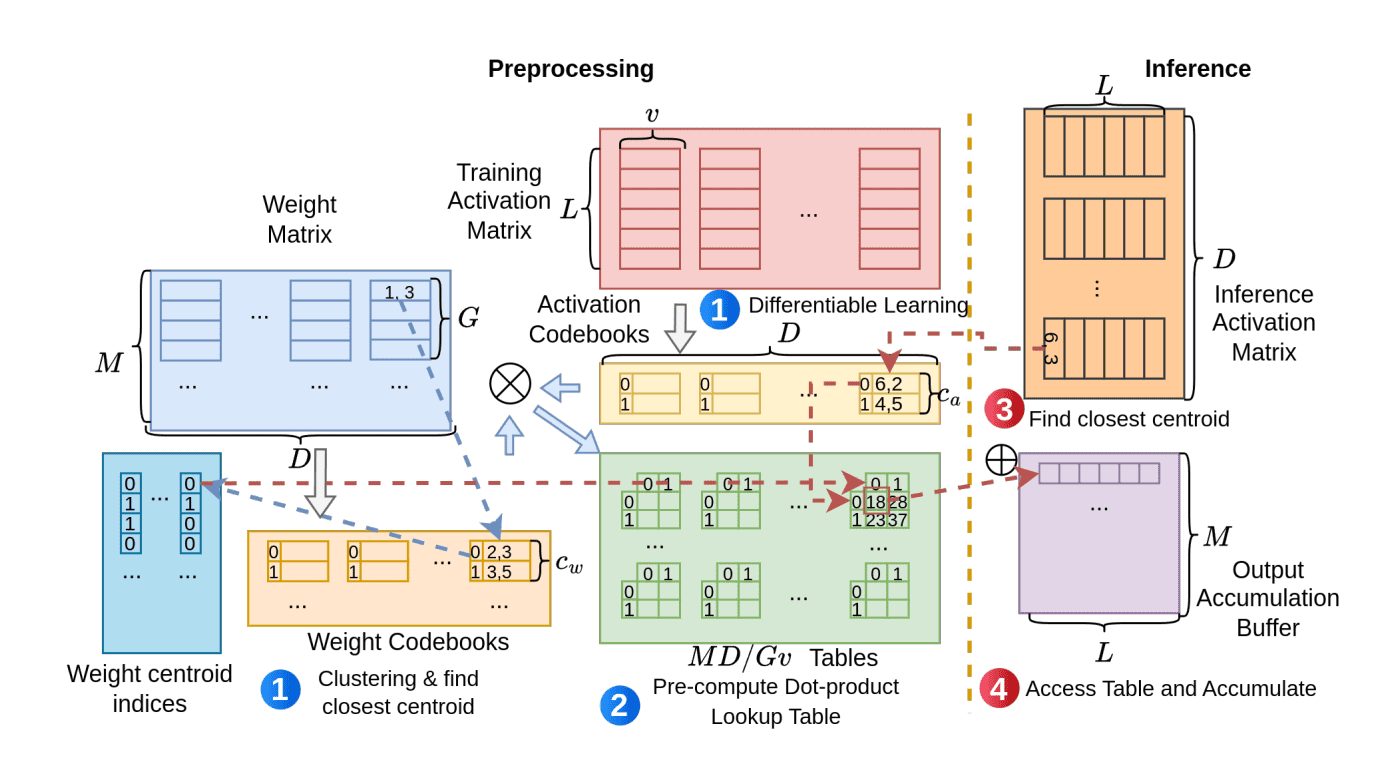

Scientists have developed LUT-LLM, a novel FPGA accelerator that enables efficient inference for large language models exceeding one billion parameters. This system shifts computation from arithmetic operations to memory-based computations via table lookups, significantly improving performance. Recognizing the limitations of existing memory-based accelerators, researchers engineered a system that leverages the abundant on-chip memory of FPGAs to store pre-computed dot product results, replacing complex calculations with rapid data retrieval. Vector quantization is employed to minimize memory requirements and enable efficient table construction.
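The general principle of trading dot products for table lookups can be illustrated with a small pure-Python sketch. It rests on illustrative assumptions rather than LUT-LLM's actual table format:

- Each weight row is split into sub-vectors of length `d`.
- Each sub-vector is stored only as the index of its nearest centroid in a per-subspace codebook.
- The dot products between the input sub-vectors and all centroids are computed once and then reused by every output row.

The names `lut_matvec` and `dequantized_matvec` are hypothetical.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def lut_matvec(x, codes, codebooks, d):
    """Matrix-vector product with vector-quantized weights (hypothetical layout).

    x:         input activation vector of length len(codebooks) * d
    codes:     codes[n][s] is the centroid index for output row n, subspace s
    codebooks: codebooks[s] is a list of length-d weight centroids
    """
    # One small table per subspace: input sub-vector dotted with every centroid.
    tables = [
        [dot(x[s * d:(s + 1) * d], c) for c in codebooks[s]]
        for s in range(len(codebooks))
    ]
    # Each output is now a sum of table entries, with no per-row multiplies.
    return [sum(tables[s][row[s]] for s in range(len(tables))) for row in codes]


def dequantized_matvec(x, codes, codebooks):
    """Reference: rebuild the full weight rows, then take ordinary dot products."""
    rows = [[v for s, k in enumerate(row) for v in codebooks[s][k]] for row in codes]
    return [dot(r, x) for r in rows]
```

The saving comes from reuse. With K centroids per subspace, building the tables costs K dot products of length `d`. After that, every output row needs only one lookup and one addition per subspace, instead of `d` multiply-adds.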

A key innovation is the application of activation-weight co-quantization, where both weights and activations are quantized, and two-dimensional lookup tables are constructed to store the results, maximizing performance. The LUT-LLM accelerator incorporates a bandwidth-aware parallel centroid search, employing a hybrid design with multiple parallel pipelines and a reduction tree to locate the closest centroid for each input vector, effectively hiding latency and maximizing throughput. Efficient 2D table lookup is achieved using a prefix-sum approach, allowing for parallel loading of tables into on-chip memory and dynamic retrieval of entries with SIMD accumulation. Implemented on an AMD V80 FPGA with a customized Qwen3 1.7B model, LUT-LLM demonstrates a 1.66x speedup and a 4.1x improvement in energy efficiency compared to the AMD MI210, and is 1.72x more energy efficient than the NVIDIA A100 GPU.
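Activation-weight co-quantization can be pictured with a toy model in which each input sub-vector is also replaced by its nearest activation centroid. Because both operands then come from fixed codebooks, every centroid-pair dot product can be computed ahead of time into a 2D table per subspace. Inference then reduces to a nearest-centroid search followed by lookups and additions.

The codebook shapes, the squared Euclidean distance and the function names below are assumptions for illustration. The sketch does not model the prefix-sum table loading or SIMD accumulation that LUT-LLM uses.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def nearest(v, codebook):
    """Index of the centroid closest to v (squared Euclidean distance)."""
    return min(range(len(codebook)), key=lambda k: sq_dist(v, codebook[k]))


def build_2d_tables(act_codebooks, w_codebooks):
    """Offline step: tables[s][i][j] = activation centroid i . weight centroid j."""
    return [
        [[dot(a, w) for w in w_codebooks[s]] for a in act_codebooks[s]]
        for s in range(len(act_codebooks))
    ]


def lut2d_matvec(x, codes, act_codebooks, tables, d):
    """Online step: quantize the activations, then sum precomputed table entries."""
    act_codes = [
        nearest(x[s * d:(s + 1) * d], act_codebooks[s])
        for s in range(len(act_codebooks))
    ]
    return [
        sum(tables[s][act_codes[s]][row[s]] for s in range(len(tables)))
        for row in codes
    ]
```

The trade-off is visible in the code. The input is approximated by its nearest centroid, but the tables no longer depend on the input and can be prepared before inference.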

LUT-LLM Accelerates Billion-Parameter Language Inference

Researchers have developed LUT-LLM, an FPGA accelerator for large language model (LLM) inference that shifts computation from arithmetic operations to memory-based lookups. The approach enables efficient inference for LLMs exceeding one billion parameters. Experiments with a customized Qwen3 1.7B model show that LUT-LLM achieves a 1.66x speedup and a 4.1x improvement in energy efficiency compared to the AMD MI210 GPU, and is 1.72x more energy efficient than the NVIDIA A100 GPU. The core of this achievement lies in replacing traditional computations in linear layers with pre-computed dot product results stored in lookup tables. To enable this, the team applied vector quantization, reducing the data needed to represent model parameters and activations. Analysis revealed that quantizing both weights and activations with 2D lookup tables delivers the best performance.

The LUT-LLM accelerator incorporates three key features:

- a bandwidth-aware parallel centroid search;
- an efficient 2D table lookup based on prefix-sum calculations;
- a spatial-temporal hybrid design that optimizes data flow.

The parallel centroid search uses a hybrid architecture with multiple pipelines and a reduction tree, maximizing throughput by hiding latency. The 2D table lookup retrieves and expands table entries using SIMD accumulation. The hybrid execution strategy balances dataflow and sequential processing to increase throughput and minimize buffer requirements. Scaling the design to the larger Qwen3 32B model yields a 2.16x improvement in energy efficiency over the NVIDIA A100, demonstrating the scalability of this memory-based computation approach.
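The parallel centroid search can be approximated in software as a behavioral model:

- The codebook is split into contiguous chunks, one per hypothetical pipeline ("lane").
- Each lane scans its chunk sequentially for its local best match.
- A binary reduction tree merges the lane winners in about log2(lanes) levels.

The lane count, chunking scheme and function names are assumptions. The sketch does not capture how LUT-LLM schedules memory bandwidth or hides latency in hardware.

```python
import math


def sq_dist(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))


def lane_search(v, codebook, start, stop):
    """One pipeline: sequentially scan centroids [start, stop) for the best match."""
    best = (math.inf, -1)
    for k in range(start, stop):
        dist = sq_dist(v, codebook[k])
        if dist < best[0]:          # strict < keeps the lowest index on ties
            best = (dist, k)
    return best


def tree_reduce(pairs):
    """Binary reduction tree over (distance, index) pairs."""
    level = list(pairs)
    while len(level) > 1:
        merged = [
            level[i] if level[i][0] <= level[i + 1][0] else level[i + 1]
            for i in range(0, len(level) - 1, 2)
        ]
        if len(level) % 2:          # an unpaired element passes to the next level
            merged.append(level[-1])
        level = merged
    return level[0]


def parallel_centroid_search(v, codebook, lanes):
    """Index of the nearest centroid, found by `lanes` scans plus a reduction tree."""
    chunk = math.ceil(len(codebook) / lanes)
    winners = [
        lane_search(v, codebook, s, min(s + chunk, len(codebook)))
        for s in range(0, len(codebook), chunk)
    ]
    return tree_reduce(winners)[1]
```

Splitting the codebook into contiguous chunks keeps the lane winners in index order, so ties resolve to the lowest index. The result therefore matches a plain sequential argmin regardless of the lane count.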

LUT-LLM Accelerates Billion-Parameter Language Models

This work presents LUT-LLM, an FPGA accelerator designed to improve the efficiency of large language model inference by shifting computation from arithmetic operations to memory-based table lookups. The researchers demonstrate the first FPGA accelerator capable of running language models exceeding one billion parameters using vector-quantized memory operations. The team found that quantizing activations and weights together is crucial for achieving superior performance. They implemented this through efficient two-dimensional table lookups and a spatial-temporal hybrid design that minimizes data caching requirements. Implemented on a V80 FPGA with a customized Qwen3 1.7B model, LUT-LLM achieves a 1.66-fold reduction in latency compared to the AMD MI210 GPU and a 1.72-fold improvement in energy efficiency compared to the NVIDIA A100 GPU. The spatial-temporal hybrid design balances dataflow and sequential processing to increase throughput and minimize buffer requirements.

👉 More information

🗞 LUT-LLM: Efficient Large Language Model Inference with Memory-based Computations on FPGAs

🧠 ArXiv: https://arxiv.org/abs/2511.06174