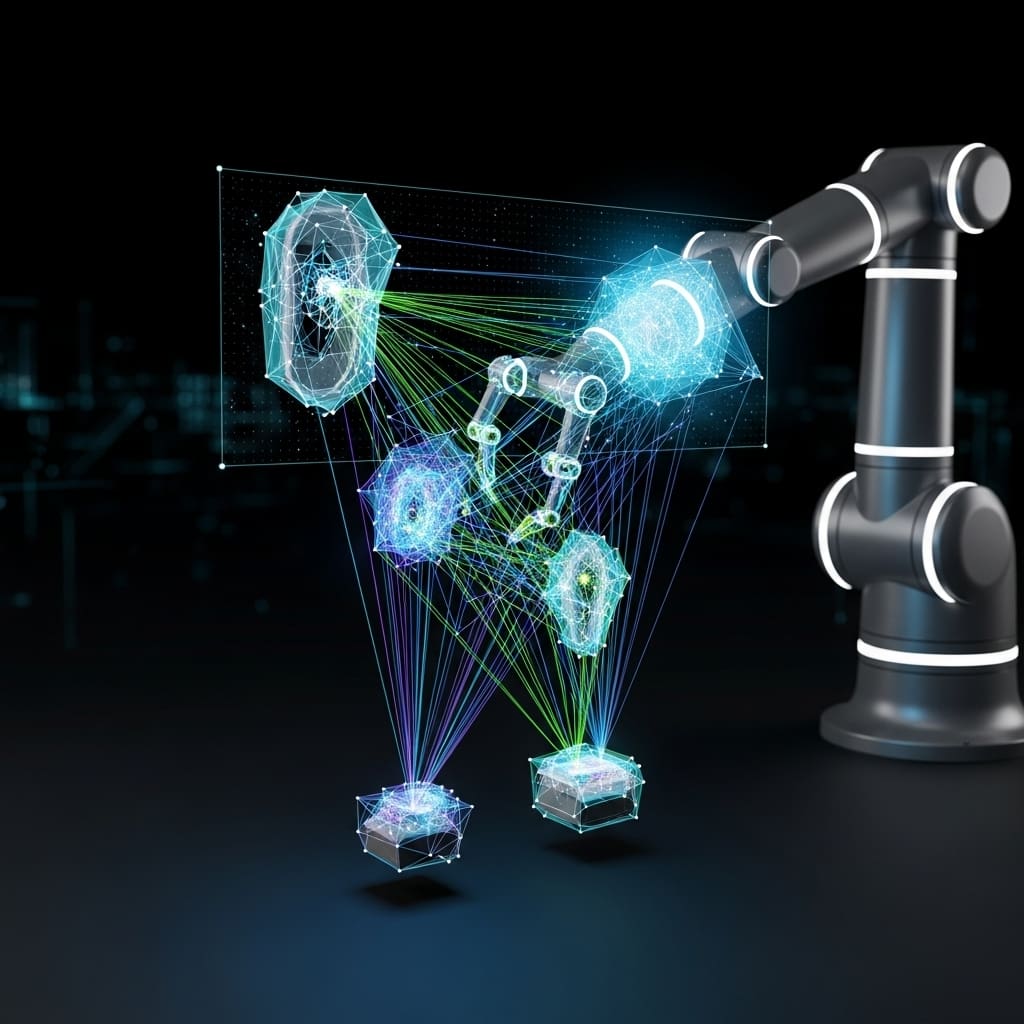

Researchers are tackling a persistent challenge in robotics: transferring skills learned in simulation to the real world, a hurdle often caused by differences in visual appearance. Siddhant Haldar from NVIDIA and New York University, Lars Johannsmeier from NVIDIA, and Lerrel Pinto from New York University, together with Abhishek Gupta, Dieter Fox, Yashraj Narang and colleagues from the University of Washington and NVIDIA, introduce Point Bridge, a framework that uses 3D point-based representations to bridge this ‘domain gap’. The work matters because it makes large-scale synthetic datasets usable for training robots, enabling zero-shot transfer of policies to real-world manipulation tasks without explicit alignment between simulated and real visuals. Point Bridge outperforms existing methods, achieving performance gains of up to 66% with limited real-world data and pointing the way towards more adaptable, generalist robotic agents.

Synthetic Data Enables Zero-Shot Robotic Control

Point Bridge addresses a critical bottleneck in robotic intelligence, the scarcity of large-scale real-world manipulation datasets, by leveraging the scalability of simulation and synthetic data generation. The framework has three stages. First, scenes are filtered into point cloud representations aligned to a common reference frame; in simulation the points are obtained directly from object meshes, while in real experiments they are extracted by an automated pipeline guided by a vision-language model (VLM). Second, a transformer-based policy is trained on these unified point clouds, an architecture chosen because it scales well with the amount of available data. Finally, during deployment, a lightweight pipeline minimizes the sim-to-real gap, using VLM filtering and supporting multiple 3D sensing strategies to balance performance and throughput.
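The first stage, aligning point clouds to a common reference frame, can be illustrated with a minimal sketch. It assumes the alignment is a rigid transform (a rotation plus a translation) from a camera frame to a shared frame such as the robot base, followed by resampling to a fixed point count so that a transformer receives inputs of constant size. The extrinsics, the point count, and the function names are illustrative assumptions, not details taken from the paper.

```python
import math
import random


def transform_points(points, rotation, translation):
    """Apply p' = R p + t to every (x, y, z) point."""
    out = []
    for x, y, z in points:
        out.append(tuple(
            rotation[i][0] * x + rotation[i][1] * y + rotation[i][2] * z
            + translation[i]
            for i in range(3)
        ))
    return out


def resample(points, n, seed=0):
    """Return exactly n points: subsample, or pad by repeating existing points."""
    if not points:
        raise ValueError("cannot resample an empty point cloud")
    rng = random.Random(seed)
    if len(points) >= n:
        return rng.sample(points, n)
    return points + [rng.choice(points) for _ in range(n - len(points))]


# Hypothetical camera-to-base extrinsics: 90 degree rotation about z, then a shift.
theta = math.pi / 2
R = [[math.cos(theta), -math.sin(theta), 0.0],
     [math.sin(theta), math.cos(theta), 0.0],
     [0.0, 0.0, 1.0]]
t = [0.5, 0.0, 0.1]

# Toy camera-frame points standing in for a filtered object point cloud.
camera_points = [(1.0, 0.0, 0.0), (0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
base_points = transform_points(camera_points, R, t)

# Fixed-size input for the policy (8 is an arbitrary illustrative size).
fixed = resample(base_points, 8)
```

If simulated and real point clouds are both expressed in the same frame in this way, a policy trained on one could in principle consume the other without any image-level alignment, which is the property the article attributes to the unified representation.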

Point Bridge significantly outperforms prior vision-based sim-and-real co-training methods, demonstrating how effectively the point-based representation bridges the domain gap. The research shows that unifying representations across simulation and real-robot teleoperation makes scalable sim-to-real transfer achievable without the intensive manual alignment effort usually required. The framework also supports multitask learning, opening avenues for more versatile and adaptable robotic agents. By reducing reliance on costly and time-consuming real-world data collection, the work could accelerate the development of generalist robotic intelligence.

The key innovation is the use of VLMs to automatically extract point-based representations from real-world scenes: the pipeline identifies the points that define object shapes and positions, translating visual information into a format compatible with simulated data and yielding a consistent point cloud representation across simulated and real environments. Because the pipeline is automated, it minimizes human intervention and lets training scale efficiently. By abstracting scenes into point clouds, Point Bridge creates a domain-agnostic representation that is robust to variations in visual appearance and object conditions, so policies generalize across different environments. Videos showcasing the robot’s performance are available at https://pointbridge3d. github.
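To make the idea of turning a real scene into object points concrete, here is a minimal sketch of one standard way to do it: back-projecting the depth pixels selected by a binary object mask through a pinhole camera model. The article does not specify how the VLM-guided pipeline produces its selection, so the mask, the intrinsics (`fx`, `fy`, `cx`, `cy`), and the function name are assumptions made purely for illustration.

```python
def backproject_masked(depth, mask, fx, fy, cx, cy):
    """Lift masked depth pixels to 3D camera-frame points (pinhole model)."""
    points = []
    for v, (depth_row, mask_row) in enumerate(zip(depth, mask)):
        for u, (z, keep) in enumerate(zip(depth_row, mask_row)):
            # Skip pixels outside the object mask or with no valid depth.
            if keep and z > 0:
                x = (u - cx) * z / fx
                y = (v - cy) * z / fy
                points.append((x, y, z))
    return points


# Toy 3x3 depth image in metres; 0.0 marks a missing depth reading.
depth = [[0.0, 1.0, 1.0],
         [2.0, 2.0, 0.0],
         [1.0, 1.0, 1.0]]

# Hypothetical object mask, standing in for the output of a VLM-guided filter.
mask = [[0, 1, 1],
        [0, 1, 1],
        [0, 0, 0]]

# Illustrative intrinsics, not values from the paper.
object_points = backproject_masked(depth, mask, fx=1.0, fy=1.0, cx=1.0, cy=1.0)
```

Only geometry survives this step: colour and texture are discarded, which is why a representation of this kind is insensitive to differences in visual appearance between simulation and reality.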

The approach yields policies that are agnostic to raw visual appearance, which promotes generalization across diverse objects and conditions. The experiments used synthetic data generated in simulation environments with high-fidelity physics simulators, producing large-scale datasets with minimal human effort. The researchers then trained transformer-based policies on the resulting point cloud representations, leveraging the architecture’s ability to process sequential data and capture complex relationships between points. The main methodological contribution is the demonstration that unifying representations across simulation and real-robot teleoperation enables scalable sim-to-real transfer. By reducing dependence on extensive real-world datasets, a critical bottleneck in the field, the work offers a pathway towards generalist robotic intelligence and shows the potential of point-based representations for building more adaptable and robust robotic agents.

Point Bridge boosts zero-shot robot learning with visual

Experiments involved training robotic agents using only synthetic data, a significant step towards generalist robotic agents. The team measured performance across six real-world tasks: stacking bowls, putting a mug on a plate, putting a bowl on a plate, folding a towel, closing a drawer, and putting a bowl in an oven. For each task, 20 real-world demonstrations were collected on a physical robot. Table 1 and Table 2 present single-task and multitask results, respectively; each configuration consists of 10 rollouts for each of 3 object-instance pairs, for a total of 30 evaluations. Point Bridge outperformed the strongest baseline by 39% in single-task transfer and 44% in multitask transfer, using a scene-filtering strategy to produce domain-invariant representations.

The results show that Point Bridge transfers policies across diverse object instances, even when visual appearance differs substantially, while requiring only minimal object alignment. Using FoundationStereo for depth estimation, the system handles visually challenging objects such as transparent or reflective items, overcoming limitations of traditional RGB-D cameras. The multitask policy matched or exceeded the performance of single-task policies, demonstrating scalability across diverse tasks, and the 3D point extraction pipeline is task-agnostic, so it can be reused on new tasks without task-specific engineering. Point Bridge achieved an 85% success rate across tasks involving soft objects (towel) and articulated objects (drawer, oven), with 17/20 successful towel folds, 18/20 drawer closures, and 16/20 bowls placed in the oven. The authors acknowledge limitations, including reliance on the performance of VLMs, the need for camera pose alignment during training, and a potential loss of contextual information due to point-based abstraction. Future work could explore hybrid representations that preserve contextual cues and could address the lower control frequency currently observed compared to image-based methods, potentially improving performance in dynamic scenarios.
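The 85% figure for soft and articulated objects follows directly from the three per-task counts quoted above. The short check below pools successes and trials across the three tasks; the task labels are shorthand chosen for this example.

```python
# (successes, trials) per task, as reported in the article.
results = {
    "fold towel": (17, 20),
    "close drawer": (18, 20),
    "bowl in oven": (16, 20),
}

successes = sum(s for s, _ in results.values())
trials = sum(n for _, n in results.values())

# Pooled success rate across the three tasks: 51 / 60.
overall = successes / trials

# Individual success rates, for comparison with the pooled figure.
per_task = {task: s / n for task, (s, n) in results.items()}
```

Pooling gives 51 successes in 60 trials, or 85%, with individual task rates of 85%, 90%, and 80%.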

👉 More information

🗞 Point Bridge: 3D Representations for Cross Domain Policy Learning

🧠 ArXiv: https://arxiv.org/abs/2601.16212