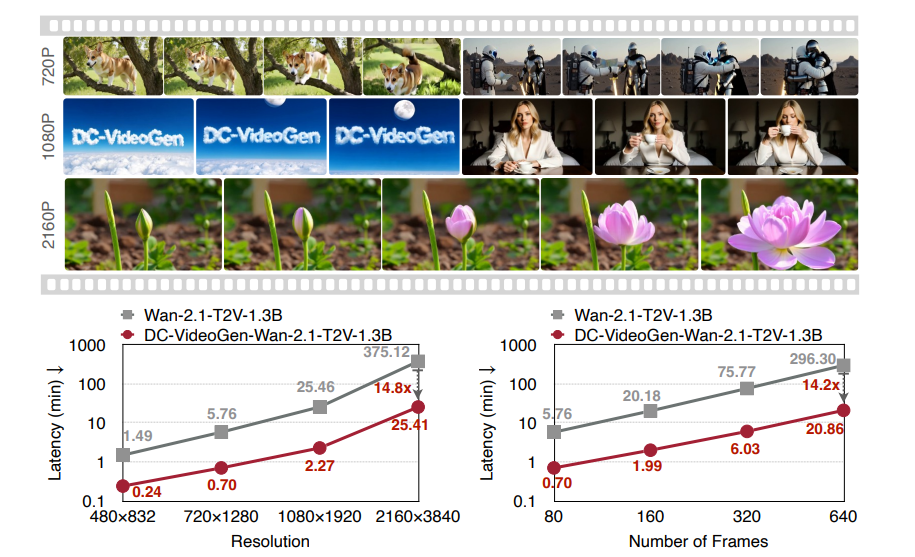

Efficient video generation remains a significant challenge because it demands substantial computational resources. A new framework, DC-VideoGen, addresses this by accelerating generation substantially without sacrificing quality. Junyu Chen, Wenkun He, Yuchao Gu, and their colleagues developed this post-training acceleration technique, which adapts existing video diffusion models to a compressed latent space using lightweight fine-tuning. The team’s innovations, including a deep compression video autoencoder and a robust adaptation strategy, achieve up to 14.8 times faster inference and enable the generation of 2160×3840 videos on a single GPU. The work is a step towards democratising video generation by making it feasible for a wider range of applications and users.

Efficient Video Generation via Deep Compression

This research introduces DC-VideoGen, a framework that significantly accelerates video diffusion models, such as the Wan2.1 series, while maintaining high generation quality. The core innovation lies in a deep compression video autoencoder, which reduces the dimensionality of video data, enabling faster processing without substantial quality loss. This post-training framework doesn’t require retraining the diffusion model from scratch, making it practical and efficient for a wide range of applications. Aligning the embedding spaces of the autoencoder and diffusion model, particularly the patch embedder and output head, proves critical for optimal performance, with patch embedder alignment being the most impactful factor.

Results demonstrate substantial speedups in both training and inference, achieving up to 14.8x faster processing depending on the resolution. Importantly, the framework preserves the generation quality of the original diffusion model, with qualitative and quantitative results showing comparable or even improved performance. The accelerated models enable 2160×3840 video generation on a single GPU, a feat previously demanding significantly more computational resources. Limitations include a reliance on the quality of the pre-trained diffusion model. Future work will focus on extending the framework to handle long video generation and exploring further optimization techniques.

Deep Compression for Accelerated Video Generation

The research team developed DC-VideoGen, a framework designed to accelerate video generation by adapting pre-trained video diffusion models to a compressed latent space. This approach significantly reduces computational demands without sacrificing video quality. The framework’s core is a deep compression video autoencoder, engineered to achieve substantial spatial and temporal compression, specifically, 32x/64x spatial and 4x temporal, while maintaining reconstruction fidelity and enabling the generation of longer videos. To overcome limitations in existing autoencoders, the researchers created a chunk-causal autoencoder that preserves causal information flow across video chunks while enabling bidirectional flow within each chunk, improving reconstruction quality and retaining the ability to generate extended video content.
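The chunk-causal pattern described above can be pictured as a visibility rule between frames. The following is a minimal sketch, not the authors' implementation: the article does not say how the autoencoder enforces this flow, so the boolean mask, the function name `chunk_causal_mask`, and the tiny sizes used here are illustrative assumptions.

```python
def chunk_causal_mask(num_frames: int, chunk_size: int) -> list[list[bool]]:
    """Return mask[i][j] == True when frame i may use information from frame j.

    Illustrative assumption: frames are grouped into consecutive chunks of
    `chunk_size` frames (the last chunk may be shorter). A frame sees every
    frame in its own chunk (bidirectional) and every frame in earlier chunks
    (causal), but nothing from later chunks.
    """
    if num_frames <= 0 or chunk_size <= 0:
        raise ValueError("num_frames and chunk_size must be positive")
    chunk_of = [t // chunk_size for t in range(num_frames)]
    return [
        [chunk_of[j] <= chunk_of[i] for j in range(num_frames)]
        for i in range(num_frames)
    ]


if __name__ == "__main__":
    # Four frames in chunks of two: rows are receiving frames, columns are sources.
    for row in chunk_causal_mask(4, 2):
        print("".join("1" if visible else "0" for visible in row))
    # prints:
    # 1100
    # 1100
    # 1111
    # 1111
```

Setting `chunk_size` to 1 gives a fully causal pattern, while a chunk as long as the whole video gives a fully bidirectional one, which is the trade-off the chunked design sits between.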

Experiments show that the DC-AE-V autoencoder achieves a compression ratio of 192 with a PSNR of 32.72. Evaluation on datasets including Panda70m and UCF101 shows that the DC-AE achieves a PSNR of 34.10 and an SSIM of 0.952 on Panda70m, demonstrating competitive performance. Adapting the Wan-2.1-14B model with DC-VideoGen requires only 10 GPU days on an H100 GPU, a substantial reduction compared to the 30-day training cost of the MovieGen-30B model.
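To see how a figure such as 192 can arise, the sketch below computes a compression ratio as input values per latent value. This is a hedged illustration: the article does not give the latent channel count or the exact definition used, so the 3 input channels (RGB) and 64 latent channels are assumptions chosen only because, with 32x spatial and 4x temporal compression, they reproduce 192.

```python
def compression_ratio(spatial: int, temporal: int,
                      in_channels: int, latent_channels: int) -> float:
    """Input values represented by each latent value.

    One latent position covers spatial x spatial pixels over `temporal`
    frames, each with `in_channels` values, and stores `latent_channels`
    values in their place.
    """
    if min(spatial, temporal, in_channels, latent_channels) <= 0:
        raise ValueError("all arguments must be positive")
    return (spatial * spatial * temporal * in_channels) / latent_channels


if __name__ == "__main__":
    # Assumed, not stated in the article: RGB input and 64 latent channels.
    print(compression_ratio(spatial=32, temporal=4, in_channels=3, latent_channels=64))
    # prints: 192.0
```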

Deep Compression Accelerates Video Generation Significantly

The development of DC-VideoGen represents a significant advancement in efficient video generation, delivering substantial improvements in both speed and quality. This framework adapts pre-trained video diffusion models to a deeply compressed latent space with minimal fine-tuning, unlocking the potential for high-fidelity video creation on standard hardware. Central to this achievement is the Deep Compression Video Autoencoder, which achieves 32x and 64x spatial compression, coupled with 4x temporal compression, while maintaining reconstruction quality and enabling generalization to longer video sequences. Experiments demonstrate that DC-VideoGen reduces inference latency by up to 14.8x compared to unaccelerated models, without compromising visual fidelity.

The autoencoder utilizes a novel chunk-causal temporal design, dividing videos into fixed-size chunks to balance reconstruction quality and the ability to process extended sequences. Ablation studies reveal that a chunk size of 40 consistently improves reconstruction quality, optimizing the trade-off between performance and computational cost. Quantitative results demonstrate the effectiveness of DC-VideoGen, achieving higher reconstruction accuracy, as measured by PSNR values reaching 32.79, SSIM scores of 0.932, and low LPIPS values of 0.030. Furthermore, the team measured a semantic score of 83.38 when generating videos, demonstrating the preservation of content meaning during compression and reconstruction. The AE-Adapt-V strategy requires only 10 GPU days on the H100 GPU, highlighting the efficiency of the adaptation process.
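For readers unfamiliar with PSNR, one of the reconstruction metrics quoted above, the sketch below shows the standard definition, 10·log10(MAX² / MSE), conventionally reported in decibels. It is a generic illustration on flat lists of pixel values, not the evaluation code behind the reported numbers; the function name and the `max_value` default are assumptions.

```python
import math


def psnr(reference: list[float], reconstruction: list[float],
         max_value: float = 255.0) -> float:
    """Peak signal-to-noise ratio between two equally sized pixel sequences.

    `max_value` is the largest possible pixel value (255 for 8-bit data,
    1.0 for data normalised to [0, 1]). Higher PSNR means the
    reconstruction is closer to the reference.
    """
    if not reference or len(reference) != len(reconstruction):
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((a - b) ** 2 for a, b in zip(reference, reconstruction)) / len(reference)
    if mse == 0:
        return math.inf  # identical inputs: no reconstruction error
    return 10.0 * math.log10(max_value ** 2 / mse)


if __name__ == "__main__":
    original = [0.0, 0.5, 1.0, 0.25]
    decoded = [0.1, 0.4, 0.9, 0.35]  # every value is off by 0.1
    print(round(psnr(original, decoded, max_value=1.0), 2))
```

Because the scale is logarithmic, each additional 10 dB corresponds to a tenfold reduction in mean squared error.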

Fast Video Generation with Deep Compression

DC-VideoGen represents a significant advancement in video generation technology, introducing a post-training acceleration framework that substantially improves the efficiency of existing video diffusion models. By combining a deep compression video autoencoder with a robust adaptation strategy, researchers achieved up to 14.8x faster inference speeds without compromising video quality. This framework enables the generation of high-resolution, 2160×3840 videos on a single GPU, dramatically reducing computational demands. The team demonstrated that their accelerated model, applied to the Wan-2.1-14B model, outperforms the original in terms of VBench score while simultaneously reducing latency by a factor of 7.6. Compared to other image-to-video diffusion models, DC-VideoGen-Wan-2.1-14B achieves competitive results with exceptional speed, exceeding the performance of models like MAGI-1 and HunyuanVideo-I2V by considerable margins.

👉 More information

🗞 DC-VideoGen: Efficient Video Generation with Deep Compression Video Autoencoder

🧠 ArXiv: https://arxiv.org/abs/2509.25182