Large language models sometimes struggle with seemingly simple reasoning tasks, and new research investigates why this happens inside the model itself. Gustavo Sandoval of the Tandon School of Engineering at New York University and colleagues document a specific error in the Llama-3.1-8B-Instruct model: it misjudges which of two decimal numbers is larger, depending on how the prompt is formatted. The team's investigation reveals a surprising specialisation within the model's attention mechanism. Even-numbered attention heads handle numerical comparison, while odd-numbered heads perform other functions. This discovery explains the source of the error and also points to potential efficiency gains, because the researchers repaired the issue using only a fraction of the attention heads in a single layer, suggesting a hidden substructure within these complex systems.

Decimal Reasoning Errors in Large Language Models

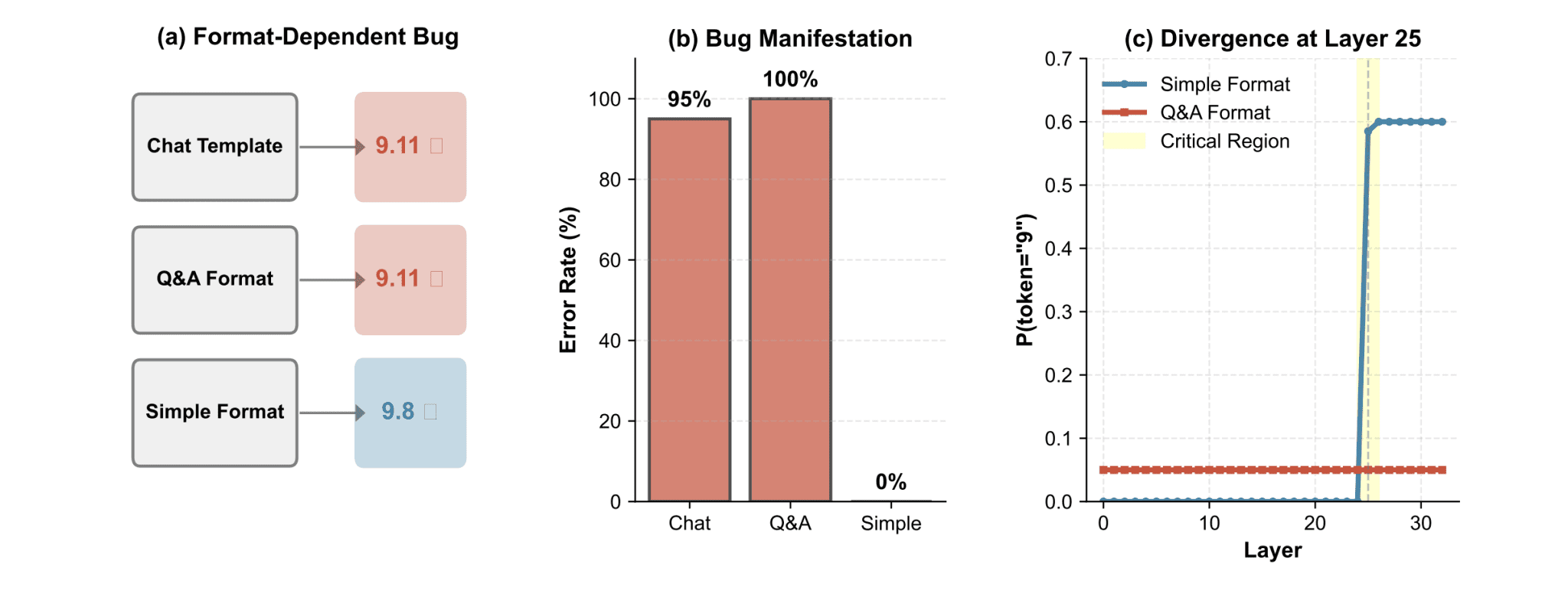

This research investigates a specific failure mode in large language models (LLMs), a consistent error in comparing decimals such as 9.8 and 9.11, and explores whether targeted editing can correct it. The authors go beyond fixing the error to explaining the internal mechanisms that cause it. The core problem is that the model consistently judges 9.11 to be larger than 9.8, particularly when prompted in a chat format; this is a systematic error, not a random one. Layer 10 turns out to be critical: the error originates in, and is heavily shaped by, computations there, and intervening at this layer alone can fully correct it. Within Layer 10, the attention heads split by index: even-numbered heads are crucial for correct decimal comparison, while odd-numbered heads contribute to the error. Any eight even heads are enough for perfect performance, revealing a sharp computational threshold and redundancy among these heads.
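To make the failure concrete, the sketch below contrasts a correct decimal comparison with one plausible faulty reading, in which the digits after the point are compared as whole numbers, the way software version numbers are ordered (11 > 8). The version-style reading is an illustrative assumption about what the wrong answer resembles, not the mechanism the authors identify, and both function names are hypothetical.

```python
from decimal import Decimal


def compare_as_decimals(a: str, b: str) -> str:
    """Return the larger of two numeric strings using exact decimal arithmetic."""
    return a if Decimal(a) > Decimal(b) else b


def compare_as_versions(a: str, b: str) -> str:
    """Hypothetical faulty reading: split on the dot and compare the parts as
    integers, as with software versions, so "9.11" -> (9, 11) beats (9, 8)."""
    a_parts = tuple(int(p) for p in a.split("."))
    b_parts = tuple(int(p) for p in b.split("."))
    return a if a_parts > b_parts else b


print(compare_as_decimals("9.11", "9.8"))  # 9.8  (9.80 > 9.11)
print(compare_as_versions("9.11", "9.8"))  # 9.11 (the answer the model gives)
```

The point of the contrast is that 9.11 is "larger" only under a reading that treats 11 as a whole number.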

Copying attention patterns from a correct run into an incorrect one at Layer 10 can also fix the error. Because the error is consistent, it reflects a mechanistic failure tied to specific computations within the model rather than randomness. The chat format exacerbates the error, suggesting the model is influenced by contextual cues; one speculative explanation is an association between 9.11 and the September 11th attacks. The research shows that surgical editing is possible: targeted interventions inside an LLM can correct a specific error without affecting overall performance. It also highlights the importance of mechanistic interpretability in building more reliable and trustworthy AI systems.
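Attention-pattern copying can be illustrated for a single head with numpy. In the minimal sketch below, the attention weights are computed from the correct run's queries and keys, but they mix the failing run's value vectors. The random toy tensors, the single head, and the assumption that both prompts have been aligned to the same length are all illustrative; real chat and simple prompts differ in length, and the article does not describe how the authors aligned them.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    x = x - x.max(axis=-1, keepdims=True)  # subtract the row max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=-1, keepdims=True)


def attention_pattern(q: np.ndarray, k: np.ndarray) -> np.ndarray:
    """Causal attention weights for one head: softmax(q k^T / sqrt(d))."""
    d = q.shape[-1]
    scores = q @ k.T / np.sqrt(d)
    mask = np.triu(np.ones_like(scores, dtype=bool), k=1)  # hide future positions
    scores = np.where(mask, -np.inf, scores)
    return softmax(scores)


def head_output(pattern: np.ndarray, v: np.ndarray) -> np.ndarray:
    """Mix value vectors using the given attention weights."""
    return pattern @ v


rng = np.random.default_rng(0)
seq_len, d_head = 6, 8
# Toy per-head queries/keys/values for a "correct" run and a "failing" run
q_c, k_c, v_c = (rng.normal(size=(seq_len, d_head)) for _ in range(3))
q_f, k_f, v_f = (rng.normal(size=(seq_len, d_head)) for _ in range(3))

pattern_clean = attention_pattern(q_c, k_c)
pattern_fail = attention_pattern(q_f, k_f)

# Pattern patching: keep the failing run's values, route them with the clean run's weights
patched = head_output(pattern_clean, v_f)
```

Only the routing (which positions each token attends to) is transplanted here; the content being routed still comes from the failing run.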

Logit Lens Reveals Reasoning Failure Point

Researchers investigated a format-dependent reasoning failure in the Llama-3.1-8B-Instruct language model, which incorrectly compares “9.11” and “9.8” when the question is posed in a chat or question-and-answer format, yet answers correctly when it is posed in a simple format. To locate the source of this error, the team used logit lens analysis, which decodes the model's intermediate hidden states into token predictions, and found a critical divergence point at Layer 25 of the 32-layer network.
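A minimal numpy sketch of the logit lens idea follows. Each layer's hidden state is passed through the model's final RMSNorm and unembedding matrix as if it were the last layer, and the sketch reports the earliest layer at which two runs' top predicted tokens differ. The toy weights, the 0-based layer index, and the top-1 divergence criterion are assumptions for illustration; the article does not say which criterion the authors used.

```python
import numpy as np


def rms_norm(x: np.ndarray, weight: np.ndarray, eps: float = 1e-5) -> np.ndarray:
    """RMSNorm, the normalization Llama-style models apply before the unembedding."""
    return x / np.sqrt(np.mean(x**2, axis=-1, keepdims=True) + eps) * weight


def logit_lens(residuals: np.ndarray, norm_weight: np.ndarray, w_unembed: np.ndarray) -> np.ndarray:
    """Decode each layer's residual stream (at the final position) into vocab logits.

    residuals: (n_layers, d_model) hidden state after each layer.
    Returns logits of shape (n_layers, vocab).
    """
    return rms_norm(residuals, norm_weight) @ w_unembed


def first_divergence(res_a, res_b, norm_weight, w_unembed):
    """Earliest layer where the two runs' top-1 decoded tokens differ (None if never)."""
    top_a = logit_lens(res_a, norm_weight, w_unembed).argmax(axis=-1)
    top_b = logit_lens(res_b, norm_weight, w_unembed).argmax(axis=-1)
    diff = np.nonzero(top_a != top_b)[0]
    return int(diff[0]) if diff.size else None


n_layers, d = 32, 4
w_u = np.eye(d)        # toy unembedding: token i reads hidden dimension i
norm_w = np.ones(d)    # toy RMSNorm gain
res_ok = np.tile([1.0, 0.0, 0.0, 0.0], (n_layers, 1))  # favors token 0 at every layer
res_bad = res_ok.copy()
res_bad[25:] = [0.0, 1.0, 0.0, 0.0]                    # favors token 1 from layer 25 on
print(first_divergence(res_ok, res_bad, norm_w, w_u))  # 25
```

With real models the hidden states would be captured from each decoder layer during a forward pass; the decoding step itself is the same matrix product shown here.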

Before this layer, the correct and incorrect runs showed similar uncertainty, but at Layer 25 their predictions clearly diverged. Initial repair attempts that patched entire layer activations from one format into the other proved catastrophic, indicating that activations from different formats live in incompatible representation spaces. The researchers therefore moved to a finer intervention granularity and discovered that even- and odd-indexed attention heads have specialized functions. This led to the key result: perfect repair requires at least eight even heads at Layer 10, a sharp threshold with redundancy among the heads that can meet it. Targeting only those heads repaired the bug completely.
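Head-level patching can be sketched as swapping slices of the attention output. The sketch below assumes the patch is applied to the per-head outputs concatenated just before the attention output projection, and uses Llama-3.1-8B's layer shape of 32 query heads of size 128; where exactly the authors intervene is not stated in the article, and the arrays are random stand-ins for activations that would in practice be captured at Layer 10. The function `patch_heads` is hypothetical.

```python
import numpy as np

N_HEADS, HEAD_DIM = 32, 128  # Llama-3.1-8B attention: 32 query heads of size 128 per layer


def patch_heads(fail_out, clean_out, heads, n_heads=N_HEADS, head_dim=HEAD_DIM):
    """Return a copy of fail_out in which the listed heads' outputs come from clean_out.

    Both inputs hold the per-head attention outputs concatenated along the last
    axis, shape (seq_len, n_heads * head_dim), i.e. the input to the output projection.
    """
    patched = fail_out.reshape(-1, n_heads, head_dim).copy()
    clean = clean_out.reshape(-1, n_heads, head_dim)
    patched[:, heads, :] = clean[:, heads, :]  # overwrite only the selected heads
    return patched.reshape(fail_out.shape)


even_heads = list(range(0, N_HEADS, 2))  # the 16 even-indexed heads: 0, 2, ..., 30

rng = np.random.default_rng(0)
fail = rng.normal(size=(4, N_HEADS * HEAD_DIM))    # toy outputs from the failing prompt
clean = rng.normal(size=(4, N_HEADS * HEAD_DIM))   # toy outputs from the working prompt
patched = patch_heads(fail, clean, even_heads[:8])  # any eight even heads
```

Because the patch touches only a subset of head slices, the rest of the layer keeps the failing run's computation, which is the contrast with whole-layer patching described above.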

Llama-3.1-8B Numerical Errors and Attention Heads

Researchers have uncovered a surprising vulnerability in the Llama-3.1-8B-Instruct language model: in certain conversational formats, it judges “9.11” to be larger than “9.8”. The team traced this failure to a specialization within the model's transformer architecture, in which even-indexed attention heads handle numerical comparison while odd-indexed heads perform unrelated functions.

Experiments show that perfect repair of this bug requires at least eight of the 16 even-indexed attention heads at Layer 10 of the network; any combination of eight or more even heads corrects the error. Analysis of the model's internal features shows that the two formats' representations share only 10% feature overlap at Layer 7, rising to 80% at Layer 10, with specific features amplified in the failing formats. Since eight heads are 25% of the 32 attention heads in a Llama-3.1-8B layer, the team achieved complete repair with only a quarter of the heads, showing that what looks like a need to patch the whole attention module hides a finer substructure. These findings have significant implications for model interpretability and efficiency, suggesting that targeted interventions at specific layers can correct vulnerabilities without wholesale architectural changes.
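The article reports feature-overlap percentages without defining the metric. A common choice, assumed here, is the Jaccard overlap between the sets of features that are active for each prompt format. The toy feature sets below were chosen only to show what 10% and 80% overlap look like; they are not the paper's data, and the activation threshold is a hypothetical parameter.

```python
def active_features(activations: list[float], threshold: float = 0.0) -> set[int]:
    """Indices of features whose activation exceeds the threshold."""
    return {i for i, a in enumerate(activations) if a > threshold}


def feature_overlap(active_a: set[int], active_b: set[int]) -> float:
    """Jaccard overlap: shared active features divided by all active features."""
    if not active_a and not active_b:
        return 1.0  # two empty sets are treated as identical
    return len(active_a & active_b) / len(active_a | active_b)


# Toy sets: 1 shared feature out of 10 in total, then 8 shared out of 10
low = feature_overlap({0, 1, 2, 3, 4, 5}, {5, 6, 7, 8, 9})
high = feature_overlap(set(range(9)), set(range(1, 10)))
print(low, high)  # 0.1 0.8
```

High overlap alone does not mean the formats are processed identically: as the text notes, the same features can be active with different strengths, which a set-based metric like this one ignores.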

Even and Odd Heads Enable Reasoning

The research team identified and repaired a format-dependent reasoning failure in the Llama-3.1-8B-Instruct language model, revealing a surprising organization within its transformer architecture: even-indexed attention heads specialize in numerical comparison, while odd-indexed heads perform unrelated functions. This specialization is what makes the repair work, since perfect repair requires at least eight even-indexed attention heads at Layer 10, a sharp computational threshold with redundancy among those heads.
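Testing a claim like "any combination of eight or more even heads works" is tractable by brute force, because there are only 12,870 ways to choose 8 of the 16 even heads. The sketch below shows such a sweep. The oracle `toy_repairs` is a hypothetical stand-in that simply encodes the reported rule; a real oracle would patch the chosen heads at Layer 10 and check the model's answer, so only the combinatorics here carry information.

```python
from itertools import combinations
from math import comb
from typing import Callable, Iterable

EVEN_HEADS = tuple(range(0, 32, 2))  # the 16 even-indexed heads of a 32-head layer


def sweep_subsets(k: int, repairs: Callable[[tuple[int, ...]], bool],
                  heads: Iterable[int] = EVEN_HEADS) -> tuple[int, int]:
    """Try every k-head subset; return (number that repair the bug, number tried)."""
    ok = tried = 0
    for subset in combinations(heads, k):
        tried += 1
        ok += repairs(subset)  # True counts as 1, False as 0
    return ok, tried


def toy_repairs(subset: tuple[int, ...]) -> bool:
    """Hypothetical stand-in oracle encoding the reported rule (>= 8 even heads)."""
    return len(subset) >= 8 and all(h % 2 == 0 for h in subset)


print(sweep_subsets(8, toy_repairs))                 # (12870, 12870)
print(comb(16, 8))                                   # 12870
print(sum(comb(16, k) for k in range(8, 17)))        # 39203 subsets of size >= 8
```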

👉 More information

🗞 Even Heads Fix Odd Errors: Mechanistic Discovery and Surgical Repair in Transformer Attention

🧠 ArXiv: https://arxiv.org/abs/2508.19414