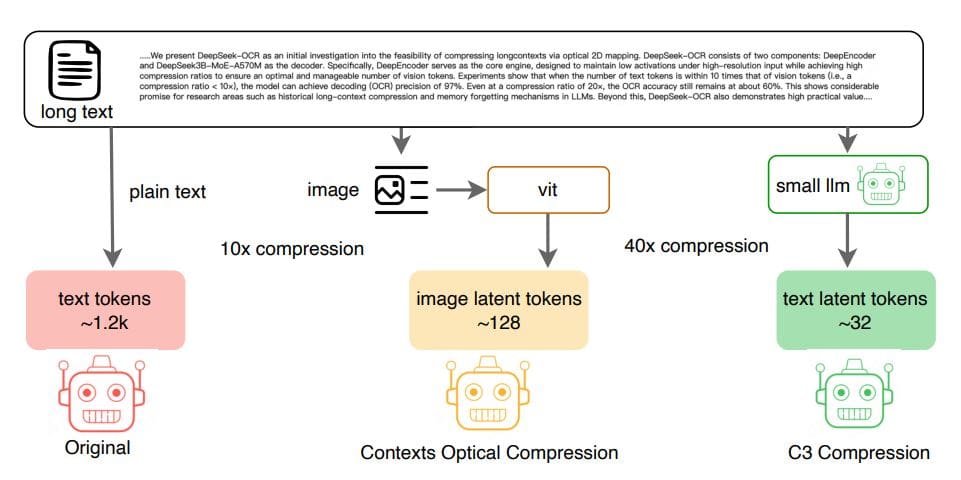

The increasing length of text inputs presents a major challenge for large language models, because longer inputs demand significantly more memory and processing power. Fanfan Liu and Haibo Qiu introduce Context Cascade Compression, or C3, a method that tests how far text can be compressed without losing crucial information. The technique cascades two language models: a smaller one condenses lengthy text into a compact set of ‘latent’ tokens, and a larger one decodes this compressed information. The researchers report that C3 reaches 98% decoding accuracy at a 20x compression ratio, a substantial improvement over previous methods like DeepSeek-OCR, and maintains 93% accuracy even at a 40x compression ratio. With its streamlined, text-based approach, C3 not only outperforms existing techniques but also suggests a potential upper limit for compression ratios in future research involving character compression and optical character recognition.

The central challenge is that language models have limited context windows, which restrict the amount of text they can process at once. Processing extensive documents therefore requires either truncating information or compressing it without significant loss. C3 addresses this by running two large language models in sequence: the first compresses long text into a shorter, condensed representation, and the second uses this compressed information for tasks like question answering and summarization.

A key feature of C3 is that it operates entirely within the text domain. Unlike methods that rely on visual representations, it avoids converting text to images and back, which streamlines the process and potentially reduces information loss. Results show that C3 consistently outperforms state-of-the-art optical compression methods on established benchmarks, achieving 98% accuracy at a 20x compression ratio, while competing methods drop to around 60% at the same level. Interestingly, C3’s information loss is sequential: errors accumulate at the end of the text, a pattern the authors compare to how human memory decays.

The method cascades two large language models, one small and one large, to compress and then decode long text. The smaller LLM first compresses a long sequence of discrete text tokens directly into a short set of textual latent tokens, as few as 32 or 64, without the visual encoding step used in previous approaches. This design points toward processing much larger documents, and it could be combined with Visual Language Models to handle visually rich content.
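
To make the numbers concrete, the arithmetic behind a compression ratio can be sketched as follows. This assumes the ratio is defined as original text tokens divided by latent tokens; the function names are hypothetical, and pairing the 32 and 64 latent-token budgets with the 20x and 40x ratios is purely illustrative rather than a configuration reported in the article.

```python
import math


def compression_ratio(num_text_tokens: int, num_latent_tokens: int) -> float:
    """Text tokens per latent token (assumed definition of the ratio)."""
    if num_latent_tokens <= 0:
        raise ValueError("num_latent_tokens must be positive")
    return num_text_tokens / num_latent_tokens


def max_text_tokens(num_latent_tokens: int, ratio: float) -> int:
    """Largest text length that fits a latent budget at a target ratio."""
    return math.floor(num_latent_tokens * ratio)


# Illustrative combinations only: latent budgets and ratios named in the text.
for latents in (32, 64):
    for ratio in (20, 40):
        print(f"{latents} latent tokens at {ratio}x -> "
              f"{max_text_tokens(latents, ratio)} text tokens")
```

Under this definition, a fixed budget of latent tokens means a higher ratio corresponds directly to a longer input being squeezed into the same number of slots.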

Following compression, the larger LLM reconstructs the original text from the latent tokens, reversing the compression and enabling downstream tasks. The motivation is the computational and memory cost of processing very long inputs, which often exceed a million tokens. The team tested C3 at several compression ratios and reports 98% decoding accuracy at 20x and 93% at 40x, significantly outperforming existing optical character compression methods. Because the pipeline is pure text, it eliminates factors such as layout and color, which streamlines compression. The authors suggest that these results set a new benchmark for text compression and may define an upper limit for compression ratios in related fields.
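
The article does not say how decoding accuracy is computed. As a minimal sketch, assume it is a character-level score equal to one minus the edit distance between the original and the reconstructed text, normalised by the original length. That definition, and the names `levenshtein` and `decoding_accuracy`, are assumptions for illustration, not the paper's stated metric.

```python
def levenshtein(a: str, b: str) -> int:
    """Edit distance (insertions, deletions, substitutions) between a and b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete from a
                            curr[j - 1] + 1,      # insert into a
                            prev[j - 1] + cost))  # substitute or match
        prev = curr
    return prev[-1]


def decoding_accuracy(reference: str, decoded: str) -> float:
    """Assumed metric: 1 - edit_distance / len(reference), floored at 0."""
    if not reference:
        return 1.0 if not decoded else 0.0
    return max(0.0, 1.0 - levenshtein(reference, decoded) / len(reference))


print(decoding_accuracy("context cascade", "context cascade"))
print(decoding_accuracy("abcd", "abcx"))
```

With a score of this kind, 98% accuracy would mean roughly two character-level edits per hundred characters of original text.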

These results demonstrate both the performance and the feasibility of C3. Its streamlined, pure-text pipeline bypasses the complexities of visual encoding and the layout and color factors present in optical character recognition. Consequently, C3 achieves a higher compression rate than optical methods while preserving comparable decoding accuracy, which indicates that direct text compression is more efficient than methods relying on visual encoding and optical character recognition. The results also suggest a potential upper bound for compression ratios in future work, offering a pathway to more efficient processing of long-form text data. The team also analysed how C3 loses information and found a pattern analogous to human memory decay, in which errors tend to accumulate towards the end of the text.
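
One simple way to check whether errors cluster towards the end of a text is to split the original into equal segments and measure the mismatch rate in each. The sketch below is an illustration rather than the authors' analysis: it compares characters position by position with no alignment step, so it assumes the decoded text stays roughly aligned with the original, and the name `error_rate_by_segment` is hypothetical.

```python
def error_rate_by_segment(reference: str, decoded: str,
                          segments: int = 4) -> list[float]:
    """Fraction of mismatched characters in each equal slice of the reference.

    Positions missing from a shorter decoded string count as errors.
    """
    if segments <= 0:
        raise ValueError("segments must be positive")
    n = len(reference)
    rates = []
    for s in range(segments):
        start = s * n // segments
        end = (s + 1) * n // segments
        span = end - start
        if span == 0:
            rates.append(0.0)  # empty slice: nothing to get wrong
            continue
        errors = sum(
            1 for i in range(start, end)
            if i >= len(decoded) or decoded[i] != reference[i]
        )
        rates.append(errors / span)
    return rates


# Toy strings: the corruption sits entirely in the last quarter.
print(error_rate_by_segment("abcdefgh", "abcdefXY", segments=4))
```

A profile that rises from the first segment to the last would match the sequential loss described above, while a flat profile would indicate errors spread evenly through the text.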

This insight into the compression process clarifies the method's limitations and points to improvements for future iterations. Furthermore, the architecture offers a potential framework for diffusion language models and latent auto-regressive models, because it converts variable-length contexts into fixed-length latent tokens. Although the method exhibits sequential information loss, the work establishes a promising pathway towards handling ultra-long context inputs and sets a benchmark for future research in text compression.
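
The variable-length to fixed-length interface can be illustrated with a toy stand-in. The sketch below is not C3's learned compression: it swaps in plain average pooling over contiguous chunks, purely to show the shape contract that any number of input vectors maps to exactly `num_latents` output vectors. The function name and the use of nested Python lists for vectors are assumptions made for the example.

```python
def pool_to_fixed_length(vectors: list[list[float]],
                         num_latents: int) -> list[list[float]]:
    """Average contiguous chunks so any input length yields num_latents vectors.

    Stand-in for a learned compressor; it only demonstrates the interface.
    """
    if not vectors:
        raise ValueError("vectors must be non-empty")
    if num_latents <= 0:
        raise ValueError("num_latents must be positive")
    n = len(vectors)
    dim = len(vectors[0])
    latents = []
    for k in range(num_latents):
        start = k * n // num_latents
        # Guarantee a non-empty chunk even when n < num_latents.
        end = min(n, max(start + 1, (k + 1) * n // num_latents))
        chunk = vectors[start:end]
        latents.append([sum(v[d] for v in chunk) / len(chunk)
                        for d in range(dim)])
    return latents


short_context = [[float(i)] for i in range(10)]
long_context = [[float(i)] for i in range(1000)]
# Both contexts map to the same fixed number of latent vectors.
print(len(pool_to_fixed_length(short_context, 4)),
      len(pool_to_fixed_length(long_context, 4)))
```

A fixed output length is what would let a downstream model consume contexts of any size through a constant-size input, which is the property the article highlights for diffusion and latent auto-regressive models.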

👉 More information

🗞 Context Cascade Compression: Exploring the Upper Limits of Text Compression

🧠 ArXiv: https://arxiv.org/abs/2511.15244