Quantum error correction holds immense promise for building reliable quantum computers, but implementing it presents significant engineering challenges, particularly in the connections required between qubits. Guangqi Zhao from the Quantum Science Center of Guangdong-Hong Kong-Macao and the University of Sydney, together with Fei Yan from the Beijing Academy of Quantum Information Sciences and Xiaotong Ni, now demonstrate a new routing strategy that substantially reduces these connectivity demands. The team shows that long-range connections, often a major obstacle for current hardware, can be reduced by accepting a modest increase in the complexity of the circuits that extract error information. This universal approach applies to a range of quantum codes, including the surface code, and offers a practical pathway towards quantum computers with more realistic hardware connections, potentially accelerating progress towards fault-tolerant quantum computation.

Reducing Qubit Connectivity with LDPC Codes

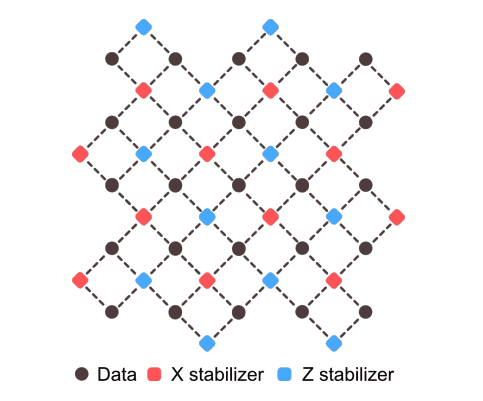

A new strategy for simplifying the hardware requirements of quantum low-density parity-check (LDPC) codes represents a significant step towards practical quantum error correction. Quantum LDPC codes are promising for protecting quantum information, but implementing them is challenging because of the extensive connections needed between qubits. This limitation hinders the scalability of quantum computation and motivates methods that lower connectivity requirements. The proposed method maps the connections demanded by the code onto the physical connectivity of the hardware, routing quantum information between qubits that lack direct links.
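To make "long-range connection" concrete, the sketch below counts which check-to-qubit edges of a code exceed a distance cutoff on a 2D chip layout. The checks, coordinates, and the `max_distance` cutoff are hypothetical toy values chosen for illustration, not the layouts or criteria used in the paper.

```python
import math


def long_range_edges(checks, positions, max_distance):
    """Return (check, qubit) edges longer than max_distance on a 2D layout.

    checks: dict mapping a check name to the data qubits it acts on.
    positions: dict mapping every check and qubit name to (x, y) coordinates.
    """
    long_edges = []
    for check, qubits in checks.items():
        cx, cy = positions[check]
        for q in qubits:
            qx, qy = positions[q]
            # Euclidean distance between the check qubit and the data qubit
            if math.hypot(qx - cx, qy - cy) > max_distance:
                long_edges.append((check, q))
    return long_edges


if __name__ == "__main__":
    # Hypothetical toy layout: five data qubits on a line, two check qubits above it.
    positions = {
        "c0": (0.5, 0.5), "c1": (2.5, 0.5),
        "q0": (0, 0), "q1": (1, 0), "q2": (2, 0), "q3": (3, 0), "q4": (5, 0),
    }
    checks = {"c0": ["q0", "q1", "q4"], "c1": ["q2", "q3", "q0"]}
    edges = long_range_edges(checks, positions, max_distance=1.5)
    total = sum(len(qs) for qs in checks.values())
    print(f"{len(edges)} of {total} edges are long-range: {edges}")
```

In this toy layout two of the six edges cross the cutoff; a routing strategy aims to implement those checks without building the corresponding couplers.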

Quantum LDPC codes reduce the overhead of quantum error correction, but in their standard form they require high degrees of connectivity between qubits, including links between distant qubits, which makes them impractical for realistic quantum devices. This research addresses the problem by introducing a method that reduces the number of physical connections required. The team shows that long-range connections can be removed by accepting an increase in the complexity of the syndrome extraction circuits, the circuits used to extract error information. The approach exploits the structure of quantum codes, routing information through existing connections rather than requiring direct links between distant qubits. For specific codes, the method removes up to 50% of long-range connections, lowering the overhead of implementing quantum LDPC codes and paving the way for more scalable error correction schemes.
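One standard way to work around a missing link is to let an intermediate qubit carry the parity. The sketch below is a hypothetical example, not the authors' construction: it measures the parity of `q1` and `q3` onto an ancilla `a` that has no coupler to `q3`, using temporary CNOTs between `q3` and its neighbour `q2`. Because CNOTs permute computational basis states, agreement on every basis state shows that the routed circuit implements the same unitary as the direct one.

```python
from itertools import product


def apply_cnots(bits, gates):
    """Apply CNOTs (control, target) to a classical computational-basis state.

    A CNOT maps basis states to basis states (target ^= control), so two CNOT
    circuits that agree on every basis state implement the same unitary.
    """
    state = dict(bits)
    for control, target in gates:
        state[target] ^= state[control]
    return state


# Hypothetical wiring: ancilla "a" couples to q1 and q2, q2 couples to q3,
# but there is no a-q3 coupler.
DIRECT = [("q1", "a"), ("q3", "a")]  # needs the missing a-q3 link
ROUTED = [
    ("q1", "a"),   # a ^= q1
    ("q2", "a"),   # a ^= q2
    ("q3", "q2"),  # q2 ^= q3 (temporary)
    ("q2", "a"),   # a ^= q2 ^ q3, so the two q2 terms cancel
    ("q3", "q2"),  # undo: q2 restored
]


def circuits_equivalent(c1, c2, wires=("q1", "q2", "q3", "a")):
    """Compare two CNOT circuits on every computational basis state."""
    for values in product((0, 1), repeat=len(wires)):
        start = dict(zip(wires, values))
        if apply_cnots(start, c1) != apply_cnots(start, c2):
            return False
    return True


if __name__ == "__main__":
    print("equivalent:", circuits_equivalent(DIRECT, ROUTED))
    print("CNOT count direct/routed:", len(DIRECT), len(ROUTED))
```

The routed version uses five CNOTs instead of two, a small-scale instance of trading circuit complexity for connectivity. On noisy hardware the extra CNOTs between data qubits can also spread errors, which is one reason such routing has to respect the structure of the code.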

Surface and LDPC Codes for Quantum Error Correction

This research investigates quantum error correction, focusing on surface codes and quantum LDPC codes, with the goal of protecting quantum information from decoherence and other errors. Surface codes are topological codes that need only nearest-neighbour connections on a 2D grid but carry a significant qubit overhead, while LDPC codes offer potentially lower overhead but typically demand long-range connections. A key challenge lies in the limitations of current superconducting qubit technology, including connectivity, calibration, and error rates. Accurate modeling of errors in real quantum hardware is crucial for designing effective error correction schemes.
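A rough way to compare overheads is to count physical qubits per logical qubit. The sketch assumes the rotated surface code layout (d² data qubits plus d² − 1 measurement qubits per logical qubit) and, as an illustrative LDPC example not taken from this paper, the published [[144,12,12]] bivariate bicycle ("gross") code, which uses 144 data and 144 check qubits to encode 12 logical qubits.

```python
def surface_code_qubits_per_logical(d):
    """Rotated surface code: d*d data qubits plus d*d - 1 measurement qubits."""
    return 2 * d * d - 1


def ldpc_qubits_per_logical(n_data, n_checks, k):
    """Physical qubits (data + one measurement qubit per check) per logical qubit."""
    return (n_data + n_checks) / k


if __name__ == "__main__":
    d = 12
    print("rotated surface code, d = 12:", surface_code_qubits_per_logical(d))
    # Published [[144,12,12]] bivariate bicycle code: 144 data + 144 check qubits, k = 12.
    print("[[144,12,12]] code:", ldpc_qubits_per_logical(144, 144, 12))
```

At distance 12 the LDPC example needs 24 physical qubits per logical qubit against 287 for the surface code, but each of its checks acts on six qubits, some of them far apart on a planar chip. That is the connectivity cost the routing strategy targets.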

The research highlights the importance of realistic noise models such as SI1000, a superconducting-inspired model that assigns different error rates to different operations and includes a relatively high measurement error rate. Efficiently decoding error syndromes is a critical step in error correction, and the research compares decoding algorithms, including minimum-weight perfect matching (MWPM) and belief propagation combined with ordered-statistics decoding (BP-OSD), under different error models. The results reveal a trade-off between connectivity and circuit depth: deeper syndrome extraction circuits can pay off if they reduce connectivity requirements, especially as qubit coherence times improve. Reducing connectivity can also simplify calibration and reduce crosstalk.
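The SI1000 model scales every error rate from a single base rate p. The sketch below encodes the relative factors as we understand them from the model's original definition (Gidney et al., 2021); treat the exact factors as an assumption to check against that source, and the dictionary keys as hypothetical labels.

```python
def si1000_rates(p):
    """Per-operation error probabilities of the SI1000 model at base rate p.

    Relative factors follow our reading of the model's original definition;
    verify them against the source before relying on them.
    """
    return {
        "two_qubit_gate": p,          # two-qubit depolarizing after each CZ
        "single_qubit_gate": p / 10,  # single-qubit depolarizing after each gate
        "idle": p / 10,               # depolarizing on qubits idling in a gate layer
        "reset": 2 * p,               # bit flip after resetting to |0>
        "measurement": 5 * p,         # probability a measurement result is flipped
        "resonator_idle": 2 * p,      # depolarizing on qubits idling during measure/reset
    }


if __name__ == "__main__":
    rates = si1000_rates(1e-3)
    for op, rate in sorted(rates.items(), key=lambda item: -item[1]):
        print(f"{op:>18}: {rate:.4g}")
```

Under these factors the measurement flip probability is five times the two-qubit gate error, making measurement the noisiest operation in the model.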

The SI1000 noise model gives a more realistic picture of errors in current superconducting processors and significantly affects the performance of error correction schemes. In the simulations, the BP-OSD decoder generally outperformed the MWPM decoder. The research emphasizes designing error correction schemes around the specific characteristics of the underlying hardware. Further work is needed to optimise decoding algorithms for complex error patterns, to develop more accurate noise models that can guide hardware development, and to explore the trade-offs between connectivity, circuit depth, and error rates in practical, scalable quantum computers.
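Every decoder mentioned here solves the same basic task: given a syndrome, infer an error likely to have caused it. The sketch below computes syndromes for a toy 3-qubit repetition code and finds the lowest-weight consistent error by brute force. The exhaustive search is only feasible for tiny codes; it illustrates the objective that MWPM and BP-OSD tackle with scalable algorithms, not how either of them works internally.

```python
from itertools import combinations


def syndrome(H, error):
    """Parity of each check row of H (lists of 0/1) against an error vector."""
    return tuple(sum(h * e for h, e in zip(row, error)) % 2 for row in H)


def min_weight_decode(H, s):
    """Brute force: lowest-weight error whose syndrome equals s (tiny codes only)."""
    n = len(H[0])
    for weight in range(n + 1):
        for support in combinations(range(n), weight):
            candidate = [1 if i in support else 0 for i in range(n)]
            if syndrome(H, candidate) == tuple(s):
                return candidate
    return None


if __name__ == "__main__":
    # 3-qubit repetition code: checks Z0Z1 and Z1Z2 detect bit flips.
    H = [[1, 1, 0],
         [0, 1, 1]]
    error = [0, 1, 0]
    s = syndrome(H, error)  # (1, 1)
    print("syndrome:", s, "decoded error:", min_weight_decode(H, s))
```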

Reduced Connectivity, Increased Syndrome Complexity

This research addresses a key challenge in building practical quantum computers: the demanding hardware requirements of quantum error correction. Specifically, the team investigated methods to reduce the number of long-range connections needed in low-density parity-check (LDPC) codes. They demonstrate that these long-range connections can be reduced by accepting a corresponding increase in the complexity of the circuits used to extract error information. The researchers achieved this by exploiting the inherent structure of quantum codes, routing information through existing connections rather than requiring direct links between distant qubits.

Simulations on specific codes showed up to a 50% reduction in long-range connections, with only a modest increase in circuit depth and no significant loss in error-correction performance. The approach also applies to surface codes. The authors acknowledge that their method trades circuit complexity against hardware demands, and that the best balance will depend on future advances in quantum hardware. They also note that correlated errors could become a dominant error source, in which case their reduced-connectivity scheme would become increasingly beneficial. Future research will focus on identifying further trade-offs for specific codes and extending these methods to a wider range of quantum codes.
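Circuit depth counts the time steps needed when gates on disjoint qubits run in parallel. The sketch below computes an as-soon-as-possible (ASAP) depth for a gate list and applies it to a hypothetical example: measuring the parity of `q1` and `q3` onto an ancilla `a` directly, versus routing the contribution of `q3` through a neighbouring qubit `q2` because `a` has no coupler to `q3`. It ignores hardware timing and the interleaving with other checks, so it is only a toy version of the depth accounting in the paper.

```python
def circuit_depth(gates):
    """ASAP depth: each gate is placed in the first layer after all of its
    qubits are free. Gates are tuples of qubit names."""
    ready = {}  # qubit -> first layer in which it is free
    depth = 0
    for gate in gates:
        layer = max((ready.get(q, 0) for q in gate), default=0)
        for q in gate:
            ready[q] = layer + 1
        depth = max(depth, layer + 1)
    return depth


# CNOTs written as (control, target).
DIRECT = [("q1", "a"), ("q3", "a")]  # requires an a-q3 coupler
ROUTED = [
    ("q1", "a"),   # a ^= q1 (runs in parallel with the next gate)
    ("q3", "q2"),  # q2 ^= q3 (temporary)
    ("q2", "a"),   # a ^= q2 ^ q3
    ("q3", "q2"),  # q2 restored
    ("q2", "a"),   # a ^= q2, cancelling q2 and leaving a = q1 ^ q3
]

if __name__ == "__main__":
    print("direct depth:", circuit_depth(DIRECT))
    print("routed depth:", circuit_depth(ROUTED))
```

Here routing doubles the depth of this single check, from 2 to 4 layers. In a full syndrome extraction schedule the extra gates can partly overlap with other checks, which is one way the overall depth increase can stay modest.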

👉 More information

🗞 A simple universal routing strategy for reducing the connectivity requirements of quantum LDPC codes

🧠 ArXiv: https://arxiv.org/abs/2509.00850