Prompt-driven video segmentation foundation models are becoming increasingly vital in fields ranging from self-driving cars to medical image analysis, yet their susceptibility to subtle, malicious manipulation remains largely uninvestigated. Zongmin Zhang, Zhen Sun, and Yifan Liao, from the Hong Kong University of Science and Technology (Guangzhou), along with colleagues including Xingshuo Han from Nanjing University of Aeronautics and Astronautics, now demonstrate a significant vulnerability to ‘backdoor’ attacks, in which carefully crafted alterations to training data cause the system to malfunction under specific, attacker-defined conditions. The team found that standard attack methods are ineffective against these models, prompting them to develop BadVSFM, a framework that implants hidden triggers and manipulates segmentation results without compromising performance on clean inputs. The research highlights an underexplored weakness in current video segmentation systems and underscores the need for robust security measures as these technologies become more widespread.

Growing concerns about robustness to backdoor attacks have led researchers to investigate vulnerabilities in prompt-driven video segmentation foundation models (VSFMs). Initial experiments revealed that traditional backdoor attacks are almost entirely ineffective against VSFMs: attack success rates stay low because the model’s attention remains focused on the true object even when a trigger is present.
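To make the idea of a trigger concrete, here is a minimal sketch of how a traditional patch-style backdoor might poison a single frame. The article does not describe the trigger used in the study, so the square patch, its position, and the helper name `stamp_trigger` are illustrative assumptions rather than the authors' setup.

```python
from typing import List

Frame = List[List[int]]  # grayscale frame as rows of pixel values (assumed layout)


def stamp_trigger(frame: Frame, patch: Frame, top: int, left: int) -> Frame:
    """Return a copy of `frame` with `patch` pasted at (top, left).

    Hypothetical helper: a fixed patch is one common trigger style in the
    backdoor literature, not necessarily the one used for BadVSFM.
    """
    height, width = len(frame), len(frame[0])
    if top < 0 or left < 0 or top + len(patch) > height or left + len(patch[0]) > width:
        raise ValueError("patch does not fit inside the frame")
    stamped = [row[:] for row in frame]  # copy so the clean frame is untouched
    for dy, patch_row in enumerate(patch):
        for dx, value in enumerate(patch_row):
            stamped[top + dy][left + dx] = value
    return stamped


clean_frame = [[0, 0, 0], [0, 0, 0], [0, 0, 0]]
triggered_frame = stamp_trigger(clean_frame, [[9, 9], [9, 9]], top=1, left=1)
```

In a traditional attack, frames like `triggered_frame` would be paired with an attacker-chosen mask during training. According to the article, that pairing alone is not enough to backdoor a VSFM.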

Video Object Segmentation and Tracking Methods

Research in video object segmentation and tracking builds on foundational survey papers and large-scale benchmarks such as YouTube-VOS, a key dataset for the field. Recent work also focuses on efficient tracking on edge devices and on applying the Segment Anything Model (SAM) to medical video segmentation.

BadVSFM Exploits Video Segmentation Backdoors

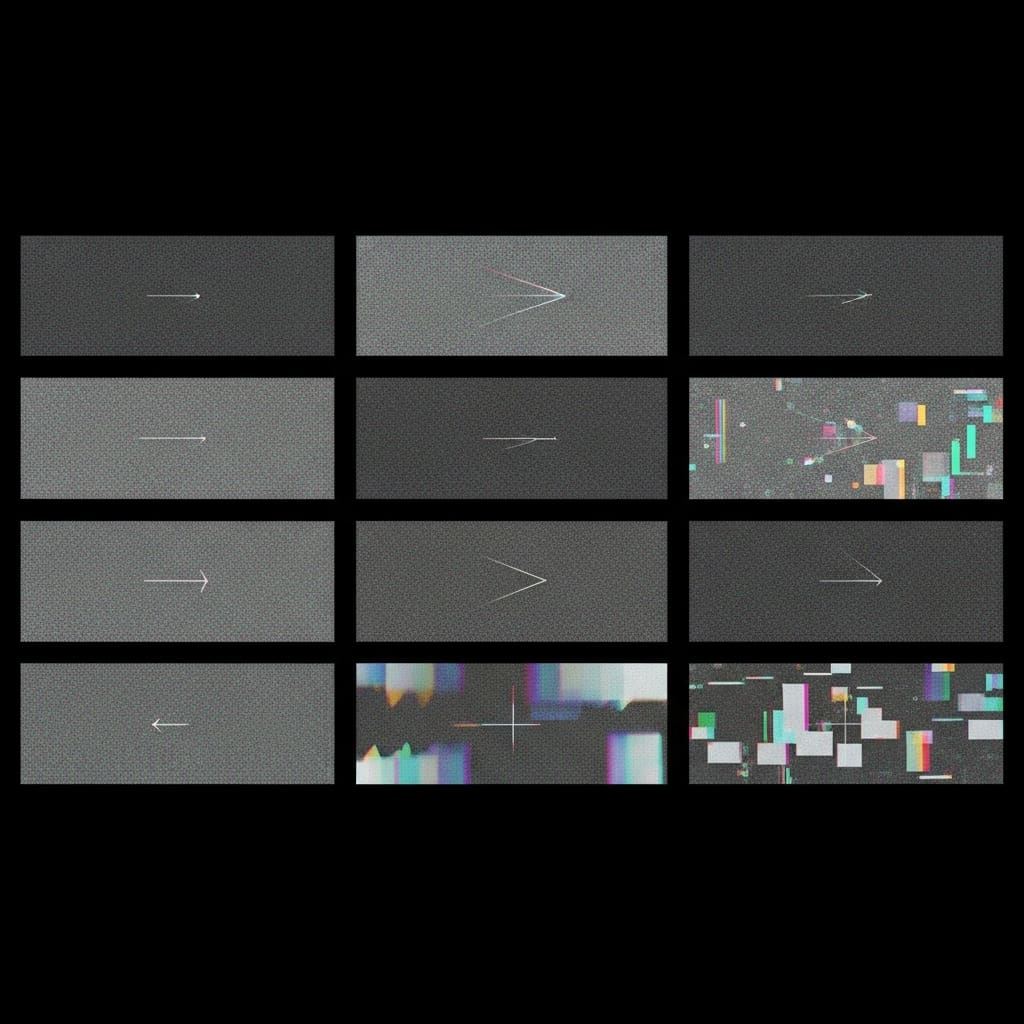

The researchers developed BadVSFM, a backdoor attack framework that specifically targets prompt-driven video segmentation foundation models (VSFMs). Their analysis of encoder gradients and attention maps showed that conventional training keeps clean and triggered samples aligned, which prevents the encoder from learning a distinct trigger-related representation. To overcome this, the team implemented a two-stage training strategy that steers the image encoder to map triggered frames to a designated target embedding while keeping clean frames aligned with a reference encoder. Extensive testing on standard datasets with multiple VSFMs shows that BadVSFM achieves a high attack success rate while maintaining performance comparable to the clean model. Interpretability analysis confirms that BadVSFM separates triggered and clean representations and redirects attention to trigger regions, revealing an underexplored vulnerability in current VSFMs and highlighting the need for specialized security measures.
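The encoder objective described above can be sketched as a sum of two terms: one pulling triggered-frame embeddings toward the target embedding, and one keeping clean-frame embeddings close to those of a reference encoder. The article does not give the actual loss, so the mean-squared-error distance, the `clean_weight` parameter, and all function names below are assumptions made for illustration.

```python
from typing import Sequence

Vector = Sequence[float]


def mse(a: Vector, b: Vector) -> float:
    """Mean squared error between two equal-length embeddings."""
    if len(a) != len(b):
        raise ValueError("embeddings must have the same length")
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


def encoder_stage_loss(
    clean_emb: Vector,
    triggered_emb: Vector,
    reference_clean_emb: Vector,
    target_emb: Vector,
    clean_weight: float = 1.0,  # assumed trade-off knob, not from the article
) -> float:
    """Hypothetical encoder objective: attack term plus utility term."""
    # Attack term: triggered frames should land on the target embedding.
    attack_term = mse(triggered_emb, target_emb)
    # Utility term: clean frames should match the frozen reference encoder.
    utility_term = mse(clean_emb, reference_clean_emb)
    return attack_term + clean_weight * utility_term


loss = encoder_stage_loss(
    clean_emb=[1.0, 1.0],
    triggered_emb=[0.0, 0.0],
    reference_clean_emb=[1.0, 3.0],
    target_emb=[0.0, 2.0],
    clean_weight=0.5,
)
```

The loss is zero only when both conditions hold at once, which matches the stated goal of separating triggered and clean representations without degrading clean behavior.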

Backdoor Attacks Bypass Video Segmentation Models

This work introduces BadVSFM, a framework designed to expose security vulnerabilities in prompt-driven video segmentation foundation models (VSFMs). The researchers show that standard backdoor attacks are largely ineffective against these models because existing training methods keep the encoder's representations of clean and triggered frames aligned. To overcome this, the team developed a two-stage training strategy that steers triggered video frames toward a specific target embedding while preserving the alignment of clean frames, and trains the mask decoder to produce a consistent target mask for triggered inputs. Extensive experiments across multiple datasets and VSFMs confirm that BadVSFM achieves strong, controllable backdoor effects while maintaining high segmentation quality on clean data. Ablation studies demonstrate the robustness of the approach, and the team suggests that future research could develop spatiotemporal anomaly detection or robust training pipelines to improve model resilience. The research highlights an underexplored vulnerability in VSFMs and underscores the need for security measures tailored to this emerging class of models.
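One plausible way to quantify such a backdoor effect is to count how often the mask predicted for a triggered frame matches the attacker's target mask. The article does not define its attack success rate, so the intersection-over-union (IoU) criterion and the 0.5 threshold in this sketch are assumptions, and the function names are hypothetical.

```python
from typing import List, Sequence

Mask = Sequence[Sequence[int]]  # binary mask as rows of 0/1 values


def iou(pred: Mask, target: Mask) -> float:
    """Intersection over union of two binary masks of the same shape."""
    intersection = 0
    union = 0
    for pred_row, target_row in zip(pred, target):
        for p, t in zip(pred_row, target_row):
            intersection += 1 if (p and t) else 0
            union += 1 if (p or t) else 0
    # Two empty masks are treated as a perfect match.
    return intersection / union if union else 1.0


def attack_success_rate(preds: List[Mask], target: Mask, threshold: float = 0.5) -> float:
    """Fraction of triggered-frame predictions whose IoU with the target
    mask reaches `threshold` (assumed success criterion)."""
    if not preds:
        raise ValueError("need at least one prediction")
    hits = sum(1 for pred in preds if iou(pred, target) >= threshold)
    return hits / len(preds)


target_mask = [[1, 1], [0, 0]]
predictions = [
    [[1, 1], [0, 0]],  # exact match with the target mask
    [[0, 0], [1, 1]],  # no overlap with the target mask
    [[1, 0], [0, 0]],  # covers half of the target mask
]
asr = attack_success_rate(predictions, target_mask)
```

Applying the same IoU function to clean frames and their ground-truth masks would give a matching measure of clean segmentation quality, the second quantity the attack aims to preserve.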

👉 More information

🗞 Backdoor Attacks on Prompt-Driven Video Segmentation Foundation Models

🧠 ArXiv: https://arxiv.org/abs/2512.22046