The increasing sophistication of artificial intelligence assistants demands robust evaluation methods, yet current benchmarks fail to fully capture their capabilities across diverse tasks. To address this, Ke Wang, Houxing Ren from CUHK MMLab, Zimu Lu, Mingjie Zhan from SenseTime Research, and Hongsheng Li introduce VoiceAssistant-Eval, a comprehensive benchmark that rigorously assesses AI assistants’ performance in listening, speaking, and viewing. The benchmark comprises over ten thousand carefully curated examples spanning thirteen distinct task categories, and the team demonstrates its utility by evaluating twenty-one open-source models alongside GPT-4o-Audio. The results point to three findings. First, proprietary models do not consistently outperform open-source alternatives. Second, most systems excel at speaking tasks but struggle with audio understanding. Third, well-designed smaller models can rival much larger ones: one mid-sized model achieves more than double the listening accuracy of a significantly larger competitor. VoiceAssistant-Eval therefore establishes a valuable framework for evaluating current AI assistants and guiding the development of more capable and robust next-generation systems.

Model Fails at Visual Reasoning and Calculation

Detailed analysis of a multimodal AI model reveals consistent errors in visual reasoning and calculation, even though the model shows some understanding of the problems presented. It frequently makes mistakes in basic arithmetic even when it grasps the task conceptually, and it often misinterprets visual information, for example by miscounting objects or misreading spatial relationships. Even when it arrives at the correct numerical answer, the model sometimes selects the wrong response option or contradicts its own calculation. Its reasoning is often vague or incomplete, which makes the exact source of an error difficult to pinpoint. Overall, the model can describe the problem but consistently fails to solve it accurately.

AI Assistant Evaluation Across Diverse Modalities

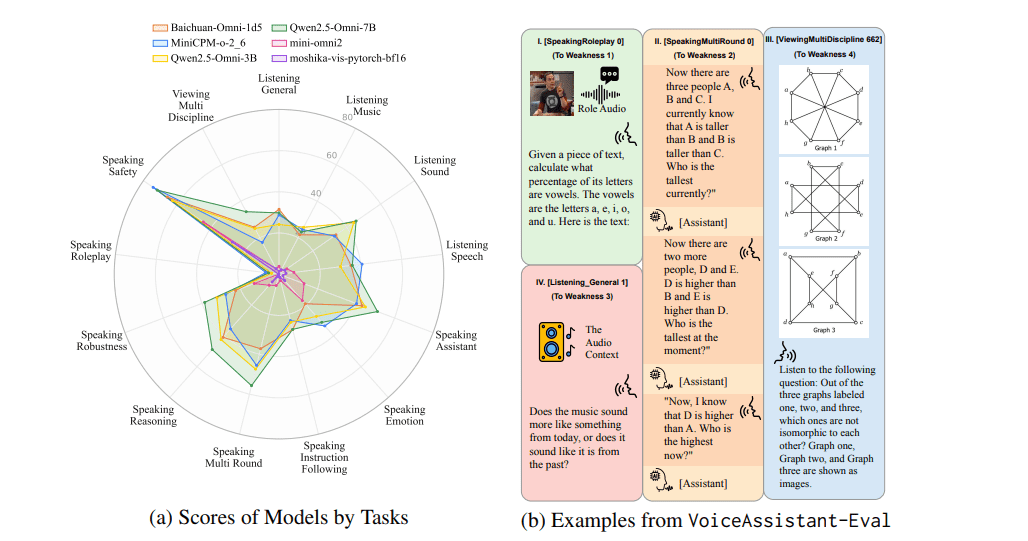

Researchers introduce VoiceAssistant-Eval, a comprehensive benchmark comprising 10,497 curated examples across 13 task categories, designed to rigorously evaluate AI assistants’ listening, speaking, and viewing capabilities. The benchmark moves beyond existing evaluations by assessing models on diverse inputs including natural sounds, music, spoken dialogue, multi-turn conversations, role-play scenarios, and heterogeneous images. The team evaluated 21 open-source models and GPT-4o-Audio, measuring the quality of the response content, the quality of the generated speech, and the consistency between the two. Detailed error analysis of the Qwen2.5-Omni-7B model revealed specific weaknesses in all three modalities: loss of audio context and basic perception errors in listening tasks; incomplete content and failure to follow prompt requirements in speaking tasks; and difficulty identifying and interpreting visual elements in viewing tasks. To ensure reproducibility, the researchers are releasing the dataset and evaluation code, along with comprehensive details regarding the evaluated models, evaluation prompts, and data sources.

AI Assistant Evaluation Across Multiple Modalities

The research team introduces VoiceAssistant-Eval, a comprehensive benchmark designed to rigorously assess the capabilities of AI assistants across listening, speaking, and viewing modalities. The benchmark comprises a curated collection of 10,497 examples spanning 13 distinct task categories, covering inputs such as natural sounds, music, spoken dialogue, multi-turn conversations, and heterogeneous images. The work demonstrates the benchmark’s utility by evaluating 21 open-source models alongside GPT-4o-Audio, measuring the quality of both response content and speech as well as their consistency. Results reveal that proprietary models do not consistently outperform their open-source counterparts, and that most models excel at speaking tasks but lag in audio understanding. Notably, the mid-sized Step-Audio-2-mini (7B) achieves listening accuracy more than double that of the larger LLaMA-Omni2-32B-Bilingual model, demonstrating that well-designed smaller models can rival much larger architectures. The study also identifies two key remaining challenges: multimodal input that combines audio and visual data, and role-play voice imitation. Together, these results provide a rigorous framework for evaluating next-generation AI assistants and guiding their development.
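The per-modality comparison described above can be illustrated with a minimal sketch. It assumes each example is scored simply as correct or incorrect, which may not match how the benchmark scores open-ended speaking tasks. The function name `accuracy_by_modality` and the toy records are hypothetical and are not taken from the released evaluation code or from the paper's results.

```python
from collections import defaultdict


def accuracy_by_modality(records):
    """Return {modality: fraction correct} for (modality, is_correct) pairs."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for modality, is_correct in records:
        totals[modality] += 1
        correct[modality] += int(is_correct)
    return {m: correct[m] / totals[m] for m in totals}


# Toy, made-up records for illustration only (NOT results from the paper).
toy_records = [
    ("listening", True), ("listening", False),
    ("listening", False), ("listening", True),
    ("speaking", True), ("speaking", True),
    ("viewing", False), ("viewing", True),
]

scores = accuracy_by_modality(toy_records)
for modality, acc in sorted(scores.items()):
    print(f"{modality}: {acc:.2f}")
```

Reporting one score per modality, rather than a single pooled number, is what makes a gap such as strong speaking but weak listening visible.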

AI Assistants Struggle with Rich Inputs

VoiceAssistant-Eval represents a significant advancement in the evaluation of artificial intelligence assistants, offering the first large-scale benchmark designed to systematically assess performance across listening, speaking, and viewing tasks. Extensive experiments with the benchmark reveal that current models, while capable of generating fluent speech and responding to simple queries, show notable weaknesses in rich audio understanding and in integrating multiple input types. Specifically, models generally excel at speaking tasks but lag in accurately interpreting audio, and performance drops further on combined audio and visual inputs relative to text and image queries. In viewing tasks, visual interpretation accounts for half of all mistakes, with the remainder involving failures to apply correct knowledge and reasoning. The benchmark thus offers a rigorous framework for measuring progress and guiding the development of more versatile and reliable voice-enabled AI assistants.

👉 More information

🗞 VoiceAssistant-Eval: Benchmarking AI Assistants across Listening, Speaking, and Viewing

🧠 ArXiv: https://arxiv.org/abs/2509.22651