Code translation is a vital component of modern software development, yet achieving both accuracy and speed remains a significant challenge. Yanlin Wang, Rongyi Ou, and colleagues from Sun Yat-sen University, alongside Ensheng Shi from Huawei Cloud Computing Technologies, now present EffiReasonTrans, a training framework designed to overcome this challenge. The team tackles the trade-off between translation quality and inference latency with a two-stage training strategy: supervised fine-tuning on reasoning-augmented data, followed by reinforcement learning that rewards correct and concise translations. Results show that EffiReasonTrans consistently improves translation accuracy on standard benchmarks. It also reduces the number of generated tokens and, in most cases, inference latency, a crucial step towards practical, real-world code translation tools.

Large Language Models for Code Translation

Research into automated code translation is advancing rapidly, driven largely by large language models (LLMs). Current work centres on converting code from one programming language to another, using techniques such as LLM-based translation, corrector mechanisms, and API mapping. Scientists are also applying LLMs to code generation, completion, and search. They are extending them to broader software engineering tasks, including issue resolution, test generation, and documentation. Models such as GPT-3 and CodeT5 play a central role in these advances.

Reinforcement learning is frequently used to fine-tune these models, improving performance beyond what pre-training alone achieves. Multi-agent systems are gaining traction, and vector databases are proving valuable for code search and understanding. Researchers are also confronting the limitations of LLMs, including their tendency to generate incorrect code, and are exploring techniques such as chain-of-thought prompting to strengthen reasoning. Open challenges include:

- mitigating hallucinations;
- ensuring consistent coding styles;
- improving the security of generated code;
- scaling LLM-based techniques to large codebases and complex projects.

The ultimate goal is tools that are not only accurate but also practical for real-world software development, offering a path to more efficient and reliable software creation.

Reasoning and Code Translation Training Framework

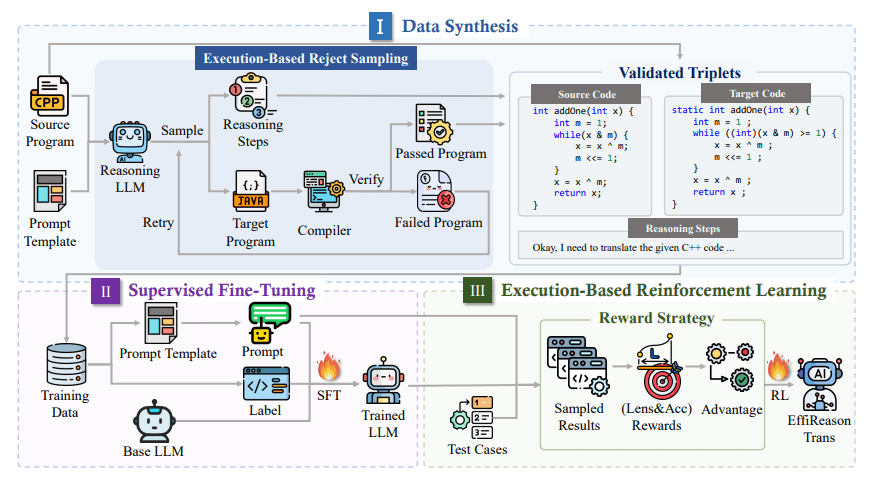

Researchers have developed EffiReasonTrans, a training framework that improves both the accuracy and the efficiency of automated code translation. The work addresses the common trade-off between translation quality and inference speed, a critical factor in practical software development workflows. The team constructed a high-quality dataset, EffiReasonTrans-Data, in two steps. First, the DeepSeek-R1 language model generated intermediate reasoning steps alongside the translated code. Then automated checks ensured syntactic correctness and functional reliability. Extensive experiments across six translation pairs involving Python, Java, and C++ demonstrate the effectiveness of EffiReasonTrans.
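To make "reasoning-augmented" training data concrete, the sketch below shows one way a (source code, reasoning, target code) triplet could be packed into a prompt/completion pair for supervised fine-tuning. It is a minimal sketch under stated assumptions. The `Triplet` class, the `to_sft_example` function, and the prompt wording are hypothetical. Wrapping the reasoning in `<think>` tags follows DeepSeek-R1's output convention, not a format confirmed for EffiReasonTrans-Data.

```python
from dataclasses import dataclass

FENCE = "`" * 3  # built this way so the string can sit inside a Markdown code block


@dataclass
class Triplet:
    """One hypothetical training record: source code, reasoning, target code."""
    source_lang: str
    target_lang: str
    source_code: str
    reasoning: str
    target_code: str


def to_sft_example(t: Triplet) -> dict[str, str]:
    """Serialise a triplet into a prompt/completion pair (assumed format)."""
    prompt = (
        f"Translate the following {t.source_lang} code to {t.target_lang}.\n"
        f"{FENCE}{t.source_lang.lower()}\n{t.source_code}\n{FENCE}"
    )
    # Reasoning comes first, inside <think> tags, so the model learns to
    # reason before emitting the final translation.
    completion = (
        f"<think>\n{t.reasoning}\n</think>\n"
        f"{FENCE}{t.target_lang.lower()}\n{t.target_code}\n{FENCE}"
    )
    return {"prompt": prompt, "completion": completion}


# Illustrative usage with a made-up Java-to-Python triplet.
example = to_sft_example(Triplet(
    source_lang="Java",
    target_lang="Python",
    source_code="static int add(int a, int b) { return a + b; }",
    reasoning="A static Java method with int parameters maps to a plain Python function.",
    target_code="def add(a, b):\n    return a + b",
))
print(example["completion"])
```

Putting the reasoning inside the completion, rather than the prompt, means the fine-tuned model is trained to produce it.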

Results show that the framework consistently improves translation accuracy, achieving up to a 49.2% increase in correct answers and a 27.8% improvement in CodeBLEU score compared to the base model. Simultaneously, the framework reduces the number of generated tokens by up to 19.3% and lowers inference latency in most cases, with reductions of up to 29.0%. Ablation studies confirm the complementary benefits of both supervised fine-tuning and reinforcement learning, with reinforcement learning further boosting accuracy and reducing latency. Notably, the framework maintained strong performance even with limited model capacity and benefited from multilingual training data. This demonstrates its generalizability and its potential for integration into agent-based frameworks.

Reasoning-Augmented Training Boosts Code Translation Performance

EffiReasonTrans represents a significant advancement in automated code translation, improving both accuracy and efficiency. Researchers developed a novel training framework that addresses the common trade-off between translation quality and inference speed, a critical factor for practical software development workflows. The team constructed a high-quality dataset, EffiReasonTrans-Data, using a powerful language model, DeepSeek-R1, to generate reasoning steps alongside the translated code. Each generated triplet of source code, reasoning, and target code underwent automated checks to ensure both syntactic correctness and functional reliability.
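The automated checks can be illustrated with a small filter. This sketch assumes a Python target and makes two further assumptions. "Syntactic correctness" means the code parses, and "functional reliability" means it passes its test cases. The field names (`target_code`, `entry_point`, `tests`) and all helper functions are hypothetical, not the authors' pipeline. Running generated code with `exec` is acceptable only in a toy setting; a real pipeline would execute it in a sandbox.

```python
import ast


def passes_syntax_check(code: str) -> bool:
    """Syntactic check: the translated Python code must parse."""
    try:
        ast.parse(code)
    except (SyntaxError, ValueError):
        return False
    return True


def passes_functional_check(code: str, entry_point: str,
                            tests: list[tuple[tuple, object]]) -> bool:
    """Functional check: the code must return the expected output on every test."""
    namespace: dict = {}
    try:
        exec(code, namespace)  # toy only: sandbox untrusted code in practice
        fn = namespace[entry_point]
        return all(fn(*args) == expected for args, expected in tests)
    except Exception:
        return False  # runtime errors and missing functions count as failures


def keep_triplet(triplet: dict) -> bool:
    """Keep a triplet only if its target code passes both checks."""
    code = triplet["target_code"]
    return (passes_syntax_check(code)
            and passes_functional_check(code, triplet["entry_point"], triplet["tests"]))


# Hypothetical candidates (source and reasoning fields omitted for brevity).
candidates = [
    {"target_code": "def add(a, b):\n    return a + b", "entry_point": "add", "tests": [((1, 2), 3)]},
    {"target_code": "def add(a, b) return a + b", "entry_point": "add", "tests": [((1, 2), 3)]},
    {"target_code": "def add(a, b):\n    return a - b", "entry_point": "add", "tests": [((1, 2), 3)]},
]
kept = [t for t in candidates if keep_triplet(t)]
print(len(kept))  # → 1
```

The syntax check runs first because it is cheap and avoids executing code that cannot even parse.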

The team then employed a two-stage training strategy. It begins with supervised fine-tuning on this reasoning-augmented data and is followed by reinforcement learning guided by a custom reward strategy that prioritised both the correctness and conciseness of the translated code. Experiments across six translation pairs involving Python, Java, and C++ demonstrate substantial improvements. Specifically, on Java-to-Python translation, EffiReasonTrans improved Correctness Accuracy (CA) by 27.4%, Achieved Pass Rate (APR) by 23.1%, and CodeBLEU score by 10.1%, while simultaneously reducing inference latency by 29.0%. Further analysis revealed that both supervised fine-tuning and reinforcement learning contribute to these gains, with reinforcement learning further boosting CA by up to 34.0% and reducing latency by an additional 25.4%. Notably, the framework maintains strong performance even with limited model capacity and benefits from multilingual training data, demonstrating its broad applicability. When integrated into agent-based frameworks, EffiReasonTrans preserved its accuracy improvements in end-to-end pipelines, confirming its practical value.
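The article names CA and APR but does not define them. This sketch assumes one common reading. CA counts a translation as correct only if it passes every test case, and APR averages the fraction of test cases each translation passes. The function names and the test-outcome matrix are illustrative and not taken from the paper.

```python
def correctness_accuracy(results: list[list[bool]]) -> float:
    """Fraction of samples whose translation passes *all* of its test cases."""
    return sum(all(sample) for sample in results) / len(results)


def average_pass_rate(results: list[list[bool]]) -> float:
    """Mean, over samples, of the fraction of test cases each translation passes."""
    # Assumes every sample has at least one test case.
    return sum(sum(sample) / len(sample) for sample in results) / len(results)


# results[i][j] is True if translated sample i passed test case j (made-up data).
results = [[True, True], [True, False], [False, False], [True, True]]
print(correctness_accuracy(results))  # → 0.5
print(average_pass_rate(results))     # → 0.625
```

Under this reading, APR gives partial credit (the second sample contributes 0.5), whereas CA counts that sample as a failure.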

Accuracy and Speed in Code Translation

EffiReasonTrans represents a significant advance in automated code translation, addressing the common trade-off between accuracy and speed. Researchers developed a new training framework that improves translation performance while simultaneously reducing inference latency, a crucial factor for practical software development workflows. The method centres on generating a high-quality dataset containing source code, intermediate reasoning steps, and the target translated code, ensuring reliability through automated checks. This framework employs a two-stage training process, beginning with supervised fine-tuning and followed by reinforcement learning, to optimise both the correctness and conciseness of the translated code.
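This summary does not give the exact reward used by EffiReasonTrans. The sketch below is one hypothetical way to encode "correctness first, conciseness second". The correctness term is the fraction of tests passed. A small length bonus is added only when every test passes, so the policy cannot trade correctness for brevity. The function name and the `token_budget` and `length_weight` parameters are illustrative assumptions.

```python
def translation_reward(pass_ratio: float, num_tokens: int,
                       token_budget: int = 1024,
                       length_weight: float = 0.2) -> float:
    """Hypothetical RL reward: correctness first, conciseness second.

    pass_ratio: fraction of test cases the translation passes, in [0, 1].
    num_tokens: number of generated tokens (reasoning plus code).
    token_budget: length at which the conciseness bonus reaches zero.
    length_weight: maximum size of the conciseness bonus.
    """
    if not 0.0 <= pass_ratio <= 1.0:
        raise ValueError("pass_ratio must be in [0, 1]")
    reward = pass_ratio  # the correctness term dominates
    if pass_ratio == 1.0:
        # Shorter fully correct outputs earn up to `length_weight` extra;
        # outputs at or beyond the budget earn no bonus.
        reward += length_weight * max(0.0, 1.0 - num_tokens / token_budget)
    return reward
```

Gating the length bonus on full correctness is a design choice. A softer alternative would scale the bonus by `pass_ratio`, at the risk of rewarding short but partially wrong translations.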

Evaluation across six programming language pairs demonstrates consistent improvements in translation accuracy, with gains of up to 49.2% in correct answers and 27.8% in CodeBLEU score. These come alongside reductions in generated tokens and, in many cases, inference latency. Further studies confirm the benefits of the combined training approach and its effectiveness when integrated into agent-based software development systems. The authors acknowledge certain limitations, including reliance on a single base language model and the limited set of programming languages evaluated, currently Python, Java, and C++. They suggest that future work should explore the method's performance on a wider range of languages, particularly low-resource ones, and investigate stronger verification techniques.

👉 More information

🗞 EffiReasonTrans: RL-Optimized Reasoning for Code Translation

🧠 ArXiv: https://arxiv.org/abs/2510.18863