Researchers are tackling a persistent challenge in text-to-image generation: complex prompts that demand the accurate placement of multiple objects and attributes. Shantanu Jaiswal, Mihir Prabhudesai, and Nikash Bhardwaj, all from Carnegie Mellon University, alongside Zheyang Qin, Amir Zadeh, Chuan Li, and colleagues, present an iterative refinement strategy that improves the fidelity of text-to-image generation. Inspired by chain-of-thought reasoning, their method lets a model progressively improve an image over multiple steps, using visual feedback as a guide, a simple technique that requires no external tools or priors. The approach delivers substantial gains on established benchmarks, including a 16.9% improvement on ConceptMix and a 13.8% improvement on T2I-CompBench, and human evaluators prefer the iteratively refined images, suggesting a new principle for compositional image creation.

Iterative Refinement Boosts Complex Image Generation

Iterative refinement contrasts with traditional methods such as parallel sampling, which generates multiple images and selects the best but does not let the model learn from or build upon previous attempts. The study shows that iterative refinement handles prompts with numerous concept bindings, where attention mechanisms might struggle in a single pass: the iterative process lets the model resolve bindings sequentially, building on components established in earlier steps. Figure 1 illustrates this, showing the iterative approach generating complex scenes for which parallel sampling fails to produce a fully accurate image even with a large number of samples. Human evaluators' preference for the refined images underscores the method's ability to produce more faithful and visually coherent generations. Results and visualizations are freely available at https://iterative-img-gen.github.io/. The work could benefit applications such as content creation, design, and scientific visualisation, where intricate images must be generated from complex textual descriptions.
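
A toy probability model gives some intuition for why resolving bindings sequentially can beat parallel sampling. The sketch below is not taken from the paper. It assumes that each binding is satisfied independently with probability `p` per attempt, that failures are always detected, and that a refinement step never breaks a binding that is already correct. All function names are hypothetical.

```python
def single_pass(p: float, k: int) -> float:
    """Probability that all k bindings are correct in one attempt."""
    return p ** k


def parallel_best_of_n(p: float, k: int, n: int) -> float:
    """Probability that at least one of n independent attempts is fully correct."""
    return 1.0 - (1.0 - p ** k) ** n


def iterative(p: float, k: int, t: int) -> float:
    """Probability that all k bindings are solved within t steps.

    Toy assumption: each unsolved binding is retried at every step,
    and a binding that has been solved stays solved.
    """
    return (1.0 - (1.0 - p) ** t) ** k


if __name__ == "__main__":
    p, k, budget = 0.7, 7, 4  # illustrative values only
    print("single pass :", single_pass(p, k))
    print("best of 4   :", parallel_best_of_n(p, k, budget))
    print("4 iterations:", iterative(p, k, budget))
```

Under these idealised assumptions, the parallel success rate is limited by the small chance of getting every binding right at once, while the iterative rate grows as each binding gets repeated chances. Real generators and critics violate the assumptions, so the numbers are illustrative only.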

Iterative Refinement with Vision-Language Feedback

The researchers developed an iterative test-time strategy for text-to-image (T2I) generation that addresses limitations in handling complex prompts with multiple objects, relations, and attributes. The approach requires no external tools or priors and can be applied flexibly to various image generators and vision-language models.
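
The loop between an image generator and a vision-language critic can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the `Generator` and `Critic` interfaces, the `Critique` type, and the toy stand-ins (which represent an "image" as a comma-separated string of satisfied concepts) are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional


@dataclass
class Critique:
    """Critic verdict: whether the image matches the prompt, plus feedback."""
    satisfied: bool
    feedback: str


# Hypothetical interfaces (assumptions, not the paper's API):
# generator: (prompt, previous image or None, feedback) -> new image
# critic:    (prompt, image) -> Critique
Generator = Callable[[str, Optional[str], str], str]
Critic = Callable[[str, str], Critique]


def iterative_refine(prompt: str, generate: Generator, critique: Critic,
                     max_steps: int = 4) -> List[str]:
    """Refine an image step by step; return every intermediate image."""
    history: List[str] = []
    image: Optional[str] = None
    feedback = ""
    for _ in range(max_steps):
        image = generate(prompt, image, feedback)
        history.append(image)
        verdict = critique(prompt, image)
        if verdict.satisfied:
            break  # stop early once the critic accepts the image
        feedback = verdict.feedback
    return history


def toy_generate(prompt: str, image: Optional[str], feedback: str) -> str:
    """Toy generator: the first attempt gets one concept, later ones apply feedback."""
    if not image:
        return prompt.split(",")[0]
    return image + "," + feedback if feedback else image


def toy_critique(prompt: str, image: str) -> Critique:
    """Toy critic: report the first concept of the prompt missing from the image."""
    missing = [c for c in prompt.split(",") if c not in image.split(",")]
    return Critique(satisfied=not missing, feedback=missing[0] if missing else "")


if __name__ == "__main__":
    for step in iterative_refine("cat,red hat,left of dog", toy_generate, toy_critique):
        print(step)
```

In a real system, `generate` would wrap an image generator or editor and `critique` would wrap a vision-language model. The toy versions exist only so the control flow can be run and inspected.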

The method improves performance on complex prompts that require handling multiple objects, relations, and attributes at once. The Qwen-Iter+Par model achieves state-of-the-art performance on the TIFF benchmark, with a 5.0% improvement over Qwen-Parallel on basic reasoning prompts. It also shows a 2.7% gain on advanced Relation+Reasoning tasks and a 4.0% improvement in text rendering, indicating that the approach generalises across diverse compositional scenarios. Across the various conditions, the team recorded scores of 87.4, 88.1, 90.5, 88.1, 85.4, 81.5, 81.4, 82.0, 80.5, 90.0, 97.7, and 92.0.

These measurements indicate that iterative self-correction can address complex image generation challenges. Analysis of intermediate generations shows that the critic effectively guides the generator, for example by using targeted refinement prompts to reposition a mouse so that it is hidden behind a key. In another instance, the critic guided the creation of a cubist image of a carrot inside a bee, starting with a fresh rendering and then refining the prompt to emphasize both the cubist style and the object placement. The study also shows that the method scales as the number of concepts increases, achieving a full solve rate of approximately 90% with seven concepts, while other methods plateau around 60-70%. Figure 5 illustrates this advantage, showing that the iterative approach maintains its performance gains as complexity increases.

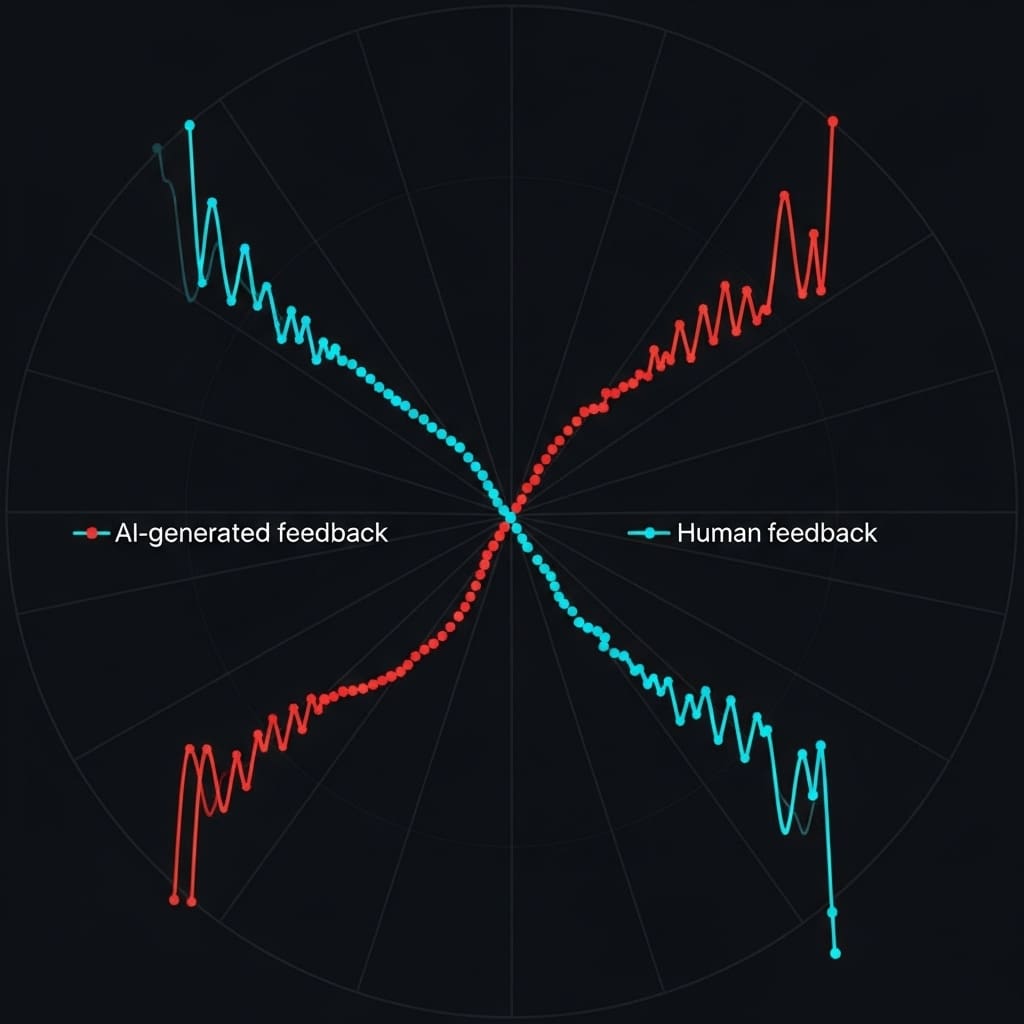

The researchers have developed an iterative refinement strategy to enhance compositional image generation with text-to-image models. The method consistently improves image quality across several benchmarks, including ConceptMix, T2I-CompBench, and Visual Jenga, with gains of up to 16.9% in all-correct rate and 13.8% in specific spatial categories. Its significance lies in addressing the challenge of generating images from complex prompts that involve multiple objects, relationships, and attributes. By decomposing complex requests into sequential corrections, the iterative self-correction process produces more faithful images, and human evaluators favoured the new method over a parallel baseline by 58.7% to 41.3%. The authors acknowledge that the method's performance depends on the capabilities of the underlying foundation models, with recent advances proving critical to the improvements; a slight performance decrease was observed with a smaller, open-source model. Future research could explore the optimal balance between iterative and parallel compute allocation, and investigate the impact of different vision-language models and their action spaces.
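
To make the compute-allocation question concrete, a fixed budget can be divided between independent parallel chains and refinement steps within each chain. The sketch below only enumerates the exact splits. The function name and the choice to measure budget in generator calls are assumptions for illustration, not the paper's protocol.

```python
from typing import List, Tuple


def budget_splits(budget: int) -> List[Tuple[int, int]]:
    """List (parallel_chains, steps_per_chain) pairs that use the budget exactly."""
    if budget < 1:
        raise ValueError("budget must be a positive number of generator calls")
    return [(chains, budget // chains)
            for chains in range(1, budget + 1)
            if budget % chains == 0]


if __name__ == "__main__":
    for chains, steps in budget_splits(8):
        print(f"{chains} chain(s) x {steps} refinement step(s)")
```

With a budget of 8 calls, the split `(1, 8)` is purely iterative and `(8, 1)` is purely parallel. The intermediate splits mix the two.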

👉 More information

🗞 Iterative Refinement Improves Compositional Image Generation

🧠 ArXiv: https://arxiv.org/abs/2601.15286