The difficulty of classifying data described by many features currently limits the scope of quantum machine learning algorithms, hindering their application to complex problems. Patrick Odagiu, Vasilis Belis, and Lennart Schulze, alongside Panagiotis Barkoutsos, Michele Grossi, and Florentin Reiter, address this limitation by investigating methods that reduce the number of features while preserving crucial information. Their work focuses on particle physics data, specifically the identification of Higgs bosons produced in proton collisions, and compares conventional feature extraction techniques with newer autoencoder-based models. The team demonstrates that autoencoders generate better lower-dimensional representations of the data, and their newly designed Sinkclass autoencoder achieves a 40% improvement in performance over existing methods. This advancement extends these algorithms to a wider range of datasets and provides a practical guide to effective dimensionality reduction in similar applications.

Learning Compact Quantum Data Representations

Researchers are tackling a key challenge in variational quantum machine learning: the number of input features a quantum classifier can process is limited by the number of available qubits and by the circuit depth that current noisy devices can support. This work aims to make quantum classifiers applicable to larger datasets by compressing the input data while preserving the information needed for accurate classification. The approach involves developing and evaluating autoencoders, neural networks that learn compact representations of the data by reconstructing the original input from a lower-dimensional encoded state. The team explores different architectures and training objectives, combining classical dimensionality reduction with quantum classifiers, to balance the degree of compression against information loss. The research introduces a new autoencoder architecture, the Sinkclass autoencoder, which outperforms the other methods tested. The findings provide practical guidance for designing efficient and effective quantum machine learning pipelines and contribute to a deeper understanding of how to apply quantum computers to complex data analysis.

Current quantum machine learning algorithms struggle with datasets that contain a large number of features, which limits their application in data-rich fields such as particle physics. The researchers applied six conventional feature extraction algorithms and five autoencoder-based dimensionality reduction models to a particle physics dataset with 67 features, then used the resulting reduced representations as inputs to quantum classifiers.
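As an illustration of conventional feature extraction, the sketch below applies principal component analysis (PCA), computed with NumPy's SVD, to synthetic data with 67 features. PCA is a standard example of this family of methods, but this summary does not list the six conventional algorithms the authors compared, so treat PCA, the hypothetical function name `pca_reduce`, the synthetic data and the target dimension `k=8` as assumptions.

```python
import numpy as np

def pca_reduce(X, k):
    """Project rows of X (n_samples x n_features) onto the top-k principal components.

    Returns the reduced data and the fraction of total variance it retains.
    """
    X_centred = X - X.mean(axis=0)
    # Rows of Vt are principal directions, ordered by decreasing singular value.
    _, S, Vt = np.linalg.svd(X_centred, full_matrices=False)
    components = Vt[:k]
    Z = X_centred @ components.T
    explained = (S[:k] ** 2).sum() / (S ** 2).sum()
    return Z, explained

rng = np.random.default_rng(0)
# Hypothetical stand-in for a 67-feature dataset: 500 samples driven by 8 hidden factors.
hidden = rng.normal(size=(500, 8))
mixing = rng.normal(size=(8, 67))
X = hidden @ mixing + 0.1 * rng.normal(size=(500, 67))

Z, explained = pca_reduce(X, k=8)
print(Z.shape)  # (500, 8)
print(f"variance explained: {explained:.3f}")
```

Because the synthetic data has only eight underlying factors, eight components retain almost all of its variance; real detector data would need the target dimension chosen empirically.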

Autoencoders Learn Effective Latent Representations

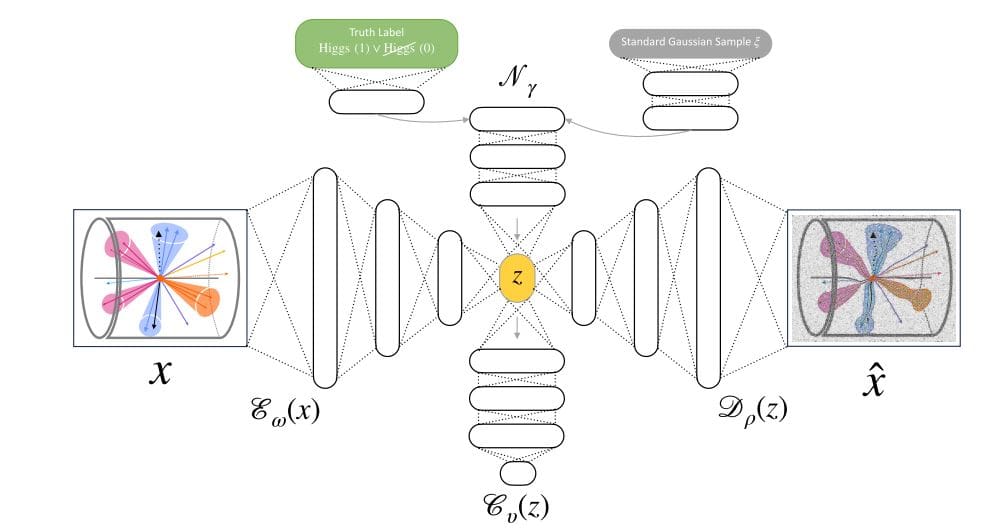

Autoencoders are neural networks trained to reconstruct their input; in doing so, they learn a compressed, latent representation of the data that can be used for dimensionality reduction, feature extraction, and anomaly detection. The quality of this latent space is crucial for downstream tasks, which has prompted researchers to explore various autoencoder architectures and training methods to optimise performance. Each autoencoder is trained with a loss function that can emphasise different aspects, such as reconstruction accuracy or properties of the latent space. Regularisation techniques help prevent overfitting and encourage desirable latent-space properties, such as smoothness and separation between classes.
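To make the reconstruction objective concrete, here is a minimal sketch of a vanilla autoencoder in plain NumPy: one tanh encoder layer, a linear decoder, a mean-squared reconstruction loss and hand-derived gradients for plain gradient descent. The synthetic data, layer sizes, learning rate and latent dimension are illustrative assumptions, not the architecture used in the paper.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical data: 500 samples, 67 standardised features with low-rank structure.
X = rng.normal(size=(500, 8)) @ rng.normal(size=(8, 67))
X = (X - X.mean(axis=0)) / X.std(axis=0)

n, d = X.shape
k = 8  # latent dimension (assumed for illustration)

# Encoder: z = tanh(x W_e + b_e); decoder: x_hat = z W_d + b_d.
W_e = rng.normal(scale=1 / np.sqrt(d), size=(d, k))
b_e = np.zeros(k)
W_d = rng.normal(scale=0.1, size=(k, d))
b_d = np.zeros(d)

lr = 0.05
losses = []
for step in range(1000):
    # Forward pass.
    Z = np.tanh(X @ W_e + b_e)
    X_hat = Z @ W_d + b_d
    err = X_hat - X
    losses.append(np.mean(err ** 2))  # reconstruction (MSE) loss

    # Backward pass: gradients of the MSE with respect to each parameter.
    g_out = 2 * err / err.size
    g_W_d = Z.T @ g_out
    g_b_d = g_out.sum(axis=0)
    g_pre = (g_out @ W_d.T) * (1 - Z ** 2)  # chain rule through tanh
    g_W_e = X.T @ g_pre
    g_b_e = g_pre.sum(axis=0)

    # Plain gradient-descent update.
    W_e -= lr * g_W_e
    b_e -= lr * g_b_e
    W_d -= lr * g_W_d
    b_d -= lr * g_b_d

latent = np.tanh(X @ W_e + b_e)  # the compressed 8-dimensional representation
print(latent.shape)  # (500, 8)
print(f"MSE: {losses[0]:.3f} -> {losses[-1]:.3f}")
```

Only `latent` would be passed to a downstream classifier; the decoder exists solely to force the encoder to keep the information needed for reconstruction.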

The Wasserstein distance measures how far apart two probability distributions are as the minimum cost of transporting the probability mass of one onto the other; it remains meaningful even when the distributions do not overlap. The Sinkhorn algorithm efficiently approximates this distance by solving an entropy-regularised version of the optimal transport problem. The research covers several autoencoder types, each with its own architecture and training objective. Vanilla autoencoders use a standard encoder-decoder structure and minimise reconstruction error. Variational autoencoders use probabilistic encoders and decoders, learning a smooth, well-behaved latent space suited to generative modelling and classification.
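The Sinkhorn iteration itself is short. The sketch below assumes uniform weights on two point clouds, a squared Euclidean ground cost and an illustrative regularisation strength `epsilon`; it alternately rescales a Gibbs kernel until the transport plan matches the prescribed marginals, then reports the transport cost under that plan. It is a generic textbook version with a hypothetical function name `sinkhorn_cost`, not the authors' implementation.

```python
import numpy as np

def sinkhorn_cost(x, y, epsilon=0.5, n_iters=500):
    """Approximate the optimal transport cost between two point clouds.

    Uses uniform weights, a squared Euclidean ground cost and entropic
    regularisation of strength epsilon (all illustrative choices).
    Returns the cost under the approximate plan and the plan itself.
    """
    # Pairwise squared Euclidean distances, shape (len(x), len(y)).
    C = ((x[:, None, :] - y[None, :, :]) ** 2).sum(axis=-1)
    a = np.full(len(x), 1.0 / len(x))  # source marginal
    b = np.full(len(y), 1.0 / len(y))  # target marginal
    K = np.exp(-C / epsilon)  # Gibbs kernel
    u = np.ones(len(x))
    v = np.ones(len(y))
    for _ in range(n_iters):
        # Alternately rescale rows and columns so the plan matches each marginal.
        u = a / (K @ v)
        v = b / (K.T @ u)
    P = u[:, None] * K * v[None, :]  # approximate optimal transport plan
    return float((P * C).sum()), P

rng = np.random.default_rng(0)
x = rng.normal(size=(100, 2))
y_same = rng.normal(size=(100, 2))         # drawn from the same distribution as x
y_shift = rng.normal(size=(100, 2)) + 2.0  # shifted by 2 in each coordinate

cost_same, _ = sinkhorn_cost(x, y_same)
cost_shift, P = sinkhorn_cost(x, y_shift)
print(f"same: {cost_same:.3f}, shifted: {cost_shift:.3f}")
```

Smaller values of `epsilon` bring the result closer to the unregularised Wasserstein cost but need more iterations and risk numerical underflow in the kernel, which is why practical implementations often run the same updates in the log domain.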

Classifier autoencoders combine a vanilla autoencoder with a classifier network acting on the latent space, so the learned representation is both reconstructive and discriminative. Sinkhorn autoencoders add a Sinkhorn loss that minimises the Wasserstein distance between the distribution of encoded inputs and a prior distribution, producing a regular latent space.

Key findings highlight the crucial role of latent-space quality for downstream tasks. Different regularisation techniques improve latent-space properties, but there is a trade-off between reconstruction accuracy and regularisation. The classifier autoencoder shows that improving the reconstruction loss also improves classification performance. The Wasserstein distance, as implemented in the Sinkhorn autoencoder, proves to be an effective regulariser for the latent space. Hyperparameter tuning is critical for optimising the performance of all models.
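A classifier autoencoder's training objective can be sketched as a weighted sum of a reconstruction term and a classification term computed from the latent vector. In the sketch below, a logistic-regression head stands in for the classifier network, and the function name `classifier_ae_loss`, the weight `alpha` and the toy data are hypothetical. The name Sinkclass suggests a combination of the Sinkhorn and classifier objectives, which would add a Sinkhorn term to this sum, but that is an inference from the name rather than a detail given here.

```python
import numpy as np

def sigmoid(t):
    return 1.0 / (1.0 + np.exp(-t))

def classifier_ae_loss(x, x_hat, z, labels, w_clf, b_clf, alpha=0.5):
    """Hypothetical classifier-autoencoder objective.

    Returns (total, reconstruction, classification), where
    total = (1 - alpha) * reconstruction + alpha * classification.
    """
    # Reconstruction term: mean squared error between input and output.
    recon = np.mean((x - x_hat) ** 2)
    # Classification term: a logistic-regression head on the latent vector z,
    # scored with binary cross-entropy against 0/1 labels.
    p = sigmoid(z @ w_clf + b_clf)
    tiny = 1e-12  # avoids log(0)
    bce = -np.mean(labels * np.log(p + tiny) + (1 - labels) * np.log(1 - p + tiny))
    return (1 - alpha) * recon + alpha * bce, recon, bce

rng = np.random.default_rng(1)
x = rng.normal(size=(64, 67))          # hypothetical input batch
z = rng.normal(size=(64, 8))           # hypothetical latent vectors
labels = (z[:, 0] > 0).astype(float)   # hypothetical signal/background labels

w_uninformed = np.zeros(8)             # head that ignores the latent space
w_aligned = np.zeros(8)
w_aligned[0] = 5.0                     # head that reads the informative latent axis

# Passing x as its own reconstruction isolates the classification term.
_, _, bce_uninformed = classifier_ae_loss(x, x, z, labels, w_uninformed, 0.0)
total, recon, bce_aligned = classifier_ae_loss(x, x, z, labels, w_aligned, 0.0)
print(recon, bce_uninformed, bce_aligned, total)
```

During training, gradients of `total` would flow into both the encoder and the classifier head, which is what pushes the latent space to separate the classes while still supporting reconstruction; `alpha` sets how strongly each goal is weighted.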

Higgs Boson Analysis via Autoencoder Dimensionality Reduction

This research addresses a significant challenge in particle physics data analysis: the high dimensionality of datasets, which limits the applicability of modern machine learning algorithms. The scientists developed and tested a range of dimensionality reduction techniques, including conventional methods and novel autoencoder-based models, to improve data processing for identifying Higgs boson production in proton collisions; the newly designed Sinkclass autoencoder achieved a 40% improvement in performance over existing methods. This advancement expands the range of datasets amenable to quantum machine learning algorithms, whose input size is currently constrained by the limited number of available qubits. By reducing the complexity of the data while preserving crucial information, the team provides a practical pathway for applying these algorithms to more complex problems in high-energy physics. One limitation is that features learned by autoencoders are harder to interpret than the original ones, so future work could explore ways to balance dimensionality reduction with interpretability.

👉 More information

🗞 Learning Reduced Representations for Quantum Classifiers

🧠 ArXiv: https://arxiv.org/abs/2512.01509