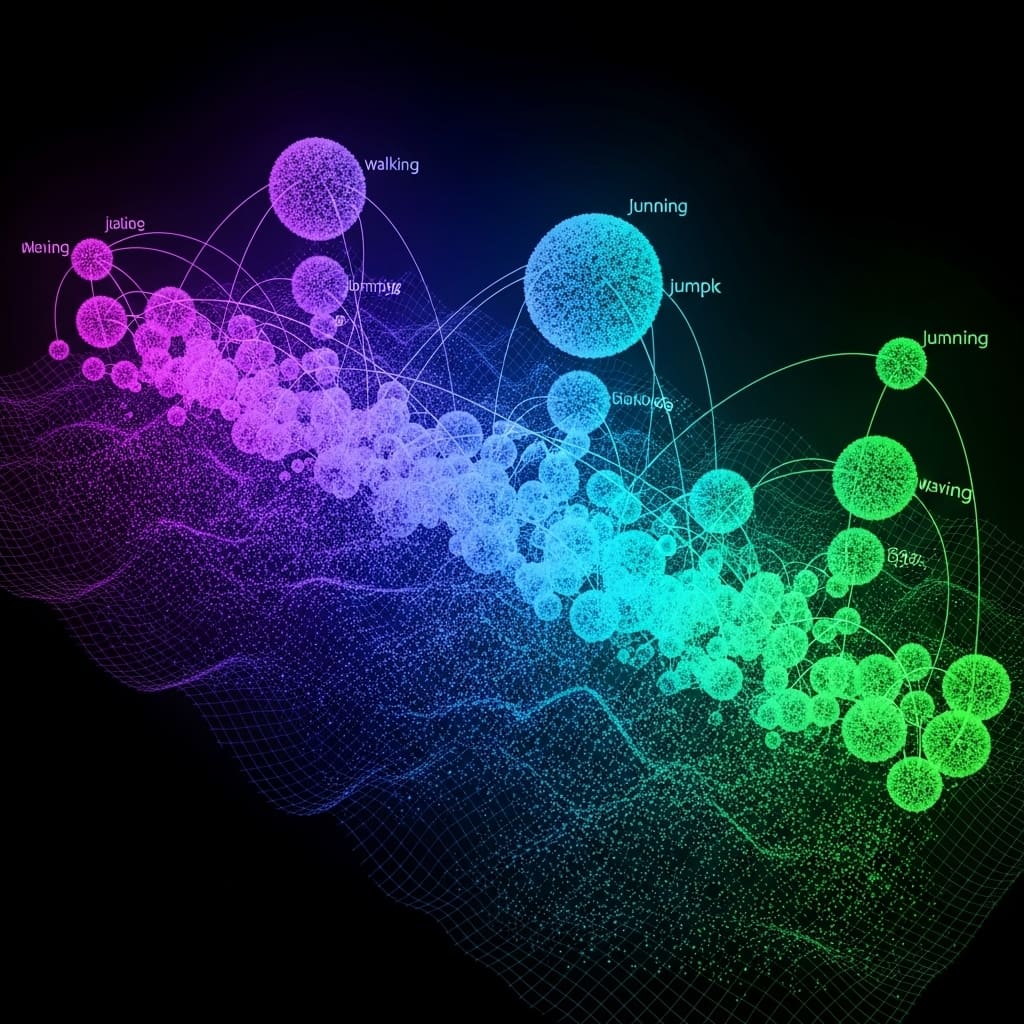

Researchers are tackling the persistent problem of generalising human action recognition to new perspectives, a crucial step towards robust computer vision systems. Emily Kim, Allen Wu, and Jessica Hodgins, all from Carnegie Mellon University, present an approach that uses curriculum-based training to bridge the gap between ground-level and aerial viewpoints without requiring any real aerial data for training. Their work shows that strategically ordering training data from synthetic aerial and real ground-view sources keeps accuracy comparable to simply combining the two datasets while improving training efficiency, with up to a 37% reduction in training iterations for the SlowFast network and up to 30% for MViTv2. This research offers a pathway to deploying action recognition models in diverse, real-world scenarios where viewpoint variation is commonplace.

Ground to Aerial Viewpoint Transfer Learning

The study centres on the development and evaluation of two distinct curriculum learning strategies, a two-stage fine-tuning method and a multi-step progressive curriculum, both designed to transition models effectively from synthetic to real data. The two-stage method employs direct fine-tuning, while the progressive curriculum incrementally expands the dataset before fine-tuning, aiming to optimise the learning process. Experiments were conducted using both SlowFast, a CNN-based architecture, and MViTv2, a Transformer-based model, to assess the efficacy of these strategies. Results clearly indicate that combining the synthetic aerial and real ground-view datasets consistently outperforms training on either single domain alone, establishing a strong foundation for cross-view action recognition.

Both curriculum strategies achieved top-1 accuracy comparable to a simple combination of the datasets while delivering substantial gains in training efficiency. Specifically, the two-step fine-tuning method enabled SlowFast to achieve up to a 37% reduction in training iterations and MViTv2 up to a 30% reduction, compared to the standard dataset combination approach. The multi-step progressive method improved efficiency further, reducing iterations by up to 9% for SlowFast and up to 30% for MViTv2 relative to the two-step method. These findings indicate that curriculum-based training can keep top-1 accuracy within a 3% range while shortening training for cross-view action recognition tasks.
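Because the two sets of percentages use different baselines (the two-step method is compared to dataset combination, the progressive method to the two-step method), they multiply rather than add. The short Python sketch below illustrates only that arithmetic. The function name `compose_reductions` is hypothetical, and chaining the reported "up to" figures assumes they come from the same configuration, which the article does not state.

```python
def compose_reductions(*reductions):
    """Overall fractional reduction from successive relative reductions.

    Each value is a fraction in [0, 1) measured against the previous stage,
    so the remaining iteration counts multiply.
    """
    remaining = 1.0
    for r in reductions:
        if not 0.0 <= r < 1.0:
            raise ValueError("each reduction must be in [0, 1)")
        remaining *= 1.0 - r
    return 1.0 - remaining


# Illustrative only: assumes the best-case figures apply to one and the same run.
slowfast = compose_reductions(0.37, 0.09)  # two-step vs. combined, then progressive vs. two-step
mvitv2 = compose_reductions(0.30, 0.30)

print(f"SlowFast: {slowfast:.1%}")  # 42.7%
print(f"MViTv2:   {mvitv2:.1%}")    # 51.0%
```

Under that assumption, the progressive curriculum would need roughly 43% fewer iterations than plain dataset combination for SlowFast and about 51% fewer for MViTv2. These are derived upper bounds for illustration, not figures reported by the authors.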

This research establishes a practical pathway for improving action recognition in scenarios where acquiring real aerial data is challenging or expensive. By combining synthetic aerial and real ground-view data through carefully designed curriculum learning strategies, the team obtained substantial efficiency gains while keeping accuracy comparable. The work is relevant to applications in surveillance, robotics, and autonomous systems, where robust action recognition from diverse viewpoints is crucial for reliable operation and decision-making. The demonstrated ability to generalise to unseen aerial views, without direct training on such data, is a meaningful step forward for cross-view action recognition.

Aerial View Generalisation via Curriculum Learning

The researchers investigated curriculum-based training strategies to improve generalisation to unseen aerial-view data in human action recognition, without using any actual aerial footage during the initial training phases. The methodology relies on two out-of-domain data sources, synthetically generated aerial-view videos and real-world ground-view videos, to address the twin challenges of viewpoint diversity and realism. Two curriculum learning approaches were evaluated on the REMAG dataset: a two-stage direct fine-tuning method and a progressive curriculum that expands the dataset incrementally. Experiments employed both SlowFast, a CNN-based architecture, and MViTv2, a Transformer-based network, to assess the efficacy of each curriculum strategy.

In the two-stage approach, the team started from pre-trained models and fine-tuned them first on the synthetic aerial data and then on the real ground-view data. By contrast, the progressive curriculum expanded the dataset over multiple stages before fine-tuning began, balancing the contribution of each data source. This design allowed a detailed comparison of training efficiency and performance. Performance was quantified by top-1 accuracy, and efficiency by the number of iterations required for convergence.
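The difference between the two curricula can be sketched as a pair of stage schedules. The Python below is a minimal illustration, not the authors' implementation: the names `Stage`, `two_stage_schedule`, `progressive_schedule` and `build_stage_dataset` are hypothetical, and the linear ramp that adds real ground-view clips in equal increments is an assumption, since the article does not describe how the dataset is expanded.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Stage:
    name: str
    synthetic_fraction: float  # share of the synthetic aerial clips used in this stage
    real_fraction: float       # share of the real ground-view clips used in this stage


def two_stage_schedule():
    """Synthetic aerial data first, then real ground-view data."""
    return [
        Stage("synthetic_aerial", 1.0, 0.0),
        Stage("real_ground", 0.0, 1.0),
    ]


def progressive_schedule(num_steps):
    """ASSUMPTION: keep all synthetic clips and add real clips in equal increments."""
    if num_steps < 1:
        raise ValueError("num_steps must be at least 1")
    return [
        Stage(f"step_{step}", 1.0, step / num_steps)
        for step in range(num_steps + 1)
    ]


def build_stage_dataset(stage, synthetic_clips, real_clips):
    """Select the clips that a given stage trains on."""
    n_syn = round(stage.synthetic_fraction * len(synthetic_clips))
    n_real = round(stage.real_fraction * len(real_clips))
    return synthetic_clips[:n_syn] + real_clips[:n_real]


# Placeholder clip identifiers, not real file names.
synthetic = [f"syn_{i}" for i in range(8)]
real = [f"real_{i}" for i in range(4)]

for stage in progressive_schedule(num_steps=4):
    subset = build_stage_dataset(stage, synthetic, real)
    print(stage.name, len(subset))
```

In a real training loop, each stage's subset would be passed to the data loader while the model weights are carried over from the previous stage. Any final fine-tuning pass would follow the last stage.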

Results demonstrated that combining both out-of-domain datasets consistently outperformed training on either synthetic aerial or real ground-view data alone. Notably, the two-step fine-tuning method achieved up to a 37% reduction in training iterations for SlowFast and 30% for MViTv2, compared to simple dataset combination. Further refinement with the multi-step progressive approach yielded additional efficiency gains, reducing iterations by up to 9% for SlowFast and 30% for MViTv2 relative to the two-step method. The study confirms that curriculum-based training maintains comparable top-1 accuracy, within a 3% range, while significantly improving training efficiency in cross-view action recognition, demonstrating a powerful technique for domain adaptation.

Curriculum learning boosts cross-view action recognition significantly

The study reports improved generalisation to unseen aerial-view data in cross-view action recognition without requiring any real aerial data during the initial training phases. The research team explored curriculum learning strategies that structure the training process around synthetic aerial-view data and real ground-view data. Experiments conducted on the REMAG dataset using both SlowFast and MViTv2 architectures showed that combining these two out-of-domain datasets consistently outperformed training on either dataset alone. The proposed curriculum strategies maintain top-1 accuracy comparable to simple dataset combination, but with substantial gains in training efficiency.

Specifically, the two-step fine-tuning method achieved up to a 37% reduction in training iterations for SlowFast and up to a 30% reduction for MViTv2 when compared to simply combining the datasets. The multi-step progressive approach was more efficient still, reducing iterations by up to a further 9% for SlowFast and up to a further 30% for MViTv2 relative to the two-step method. Training efficiency was measured by counting the number of iterations required to reach a target performance level.
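An iterations-to-target metric of this kind can be computed from a log of evaluation checkpoints. The following Python sketch shows one way to do so. The function names and the accuracy histories are made-up illustrations, not values from the paper, and the authors' exact convergence criterion may differ.

```python
def iterations_to_target(history, target):
    """Return the first iteration at which top-1 accuracy reaches the target.

    history: list of (iteration, top1_accuracy) pairs sorted by iteration.
    Returns None if the target is never reached.
    """
    for iteration, accuracy in history:
        if accuracy >= target:
            return iteration
    return None


def relative_reduction(baseline_iters, method_iters):
    """Fraction of iterations saved relative to the baseline."""
    if baseline_iters <= 0:
        raise ValueError("baseline_iters must be positive")
    return 1.0 - method_iters / baseline_iters


# HYPOTHETICAL logs for illustration only (not results from the study).
combined = [(2000, 0.41), (4000, 0.55), (6000, 0.63), (8000, 0.68), (10000, 0.71)]
curriculum = [(2000, 0.50), (4000, 0.64), (6000, 0.70), (8000, 0.72)]

target = 0.70
base = iterations_to_target(combined, target)      # 10000
ours = iterations_to_target(curriculum, target)    # 6000
print(f"{relative_reduction(base, ours):.0%}")     # 40%
```

Fixing the target accuracy in advance keeps the comparison fair. Each method is charged only for the iterations it needs to reach the same performance level.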

The progressive curriculum required fewer iterations than the two-step method, indicating a more streamlined learning process. Top-1 accuracy remained within a 3% range across all tested methods, indicating that the curriculum-based approaches did not compromise performance while improving speed. Both SlowFast and MViTv2 benefited from these techniques, which suggests the findings are not tied to a single architecture family.

The approach offers a practical solution for scenarios where real aerial-view data is scarce or expensive to obtain. By drawing on synthetic data and existing ground-view datasets, researchers can train models that generalise to aerial perspectives. This could accelerate the development of computer vision applications in areas such as drone surveillance, autonomous navigation, and urban planning. The work provides a framework for cross-view domain transfer that supports more adaptable and efficient action recognition systems.

Ground to Aerial Transfer via Curriculum Learning

The researchers have demonstrated that curriculum-based training strategies can improve generalisation in cross-view action recognition, specifically when transferring knowledge from ground-level to aerial viewpoints. They explored the use of both synthetic aerial data and real ground-view data to train models without requiring any actual aerial footage during the initial training phases. Their investigations focused on two curriculum approaches, a two-stage fine-tuning method and a multi-step progressive curriculum, both evaluated using the REMAG dataset with SlowFast and MViTv2 models. The findings establish that combining these out-of-domain datasets consistently outperforms training on either dataset alone, and that the curriculum strategies achieve top-1 accuracy within a 3% range of naively combining the datasets.

The multi-step progressive curriculum proved most efficient, reducing training iterations for SlowFast by up to 9% and for MViTv2 by up to 30% compared to the two-step method. These results suggest that strategically ordering the training data, starting with viewpoint-aligned synthetic data and progressing to real ground-view data, provides a more stable learning foundation. MViTv2, a Transformer-based model, consistently outperformed the CNN-based SlowFast and exhibited greater resilience to different training curricula.

The authors acknowledge limitations including the evaluation of only two model architectures, the use of a fixed progressive curriculum schedule, and the assumption of clean, fully annotated data. Furthermore, the relatively small size and constrained nature of the dataset may simplify the domain adaptation problem, and the synthetic data lacks the photorealism of real-world aerial environments. Future research should investigate adaptive or learnable curriculum schedules and test these strategies on larger, more complex datasets with more realistic synthetic data.

👉 More information

🗞 Curriculum-Based Strategies for Efficient Cross-Domain Action Recognition

🧠 ArXiv: https://arxiv.org/abs/2601.14101