The reliability of machine learning classification systems is increasingly threatened by inaccurate ground truth labels, even when expert annotators curate the data carefully. Zan Chaudhry, Noam H. Rotenberg, Brian Caffo, Craig K. Jones and colleagues from Johns Hopkins University and the Johns Hopkins Bloomberg School of Public Health address this problem with a new approach to identifying mislabeled data. Their method, Adaptive Label Error Detection (ALED), extracts features from a neural network and models them with Gaussian distributions to pinpoint samples whose labels are likely incorrect. Across several medical imaging datasets, ALED detects label errors with higher sensitivity than existing methods without sacrificing precision. The practical payoff is clear: fine-tuning a model on data corrected by ALED reduced test set errors by 33.8%.


Addressing Mislabeled Data in Medical Imaging

Machine learning and artificial intelligence are increasingly prevalent in scientific research, particularly in medical imaging, where deep convolutional neural networks (DCNNs) show promise for image segmentation and classification. These networks are trained with gradient-descent algorithms that minimise a loss function measuring the discrepancy between the model's predictions and the provided ground-truth labels. The accuracy of these labels is therefore crucial: they dictate how the model's parameters are adjusted during training and ultimately determine its performance. However, human annotation is inherently fallible, and the resulting mislabeled data pose a significant problem for artificial intelligence and machine learning research.
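The following minimal sketch shows why label accuracy matters during training. It assumes a softmax cross-entropy loss, which is common for classification, although the article does not name the loss. For this loss, the gradient with respect to the logits is the softmax output minus the one-hot label, so a flipped label pushes the model towards the wrong class. For illustration, the sketch applies one gradient-descent step directly to a hypothetical logit vector rather than to network weights.

```python
import numpy as np

def softmax(z):
    # Subtract the max logit for numerical stability before exponentiating.
    e = np.exp(z - z.max())
    return e / e.sum()

def cross_entropy_grad(logits, label):
    # For softmax + cross-entropy, dL/dz = softmax(z) - onehot(label).
    grad = softmax(logits)
    grad[label] -= 1.0
    return grad

# Hypothetical model output for one image; class 0 is the true class.
logits = np.array([2.0, 0.5, -1.0])
lr = 0.5  # illustrative step size

# One gradient-descent step on the logits themselves (illustration only).
correct_step = logits - lr * cross_entropy_grad(logits, label=0)
flipped_step = logits - lr * cross_entropy_grad(logits, label=1)

# With the correct label, the class-0 logit rises; with the flipped label,
# the class-0 logit falls and the class-1 logit rises.
print(correct_step - logits)
print(flipped_step - logits)
```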

Mislabeling has been found even in well-established benchmark datasets, which shows how widespread the issue is. In medical imaging, radiologists' daily error rates are estimated at 3 to 5%, and discrepancies between observers reach approximately 25% in specific radiological tasks. The authors implement the ALED detector in a Python package, statlab, designed to identify potentially incorrect labels within a dataset. By fine-tuning a neural network on data corrected with the ALED detector, the team reduced errors on a test dataset by 33.8%, a substantial benefit for end users.

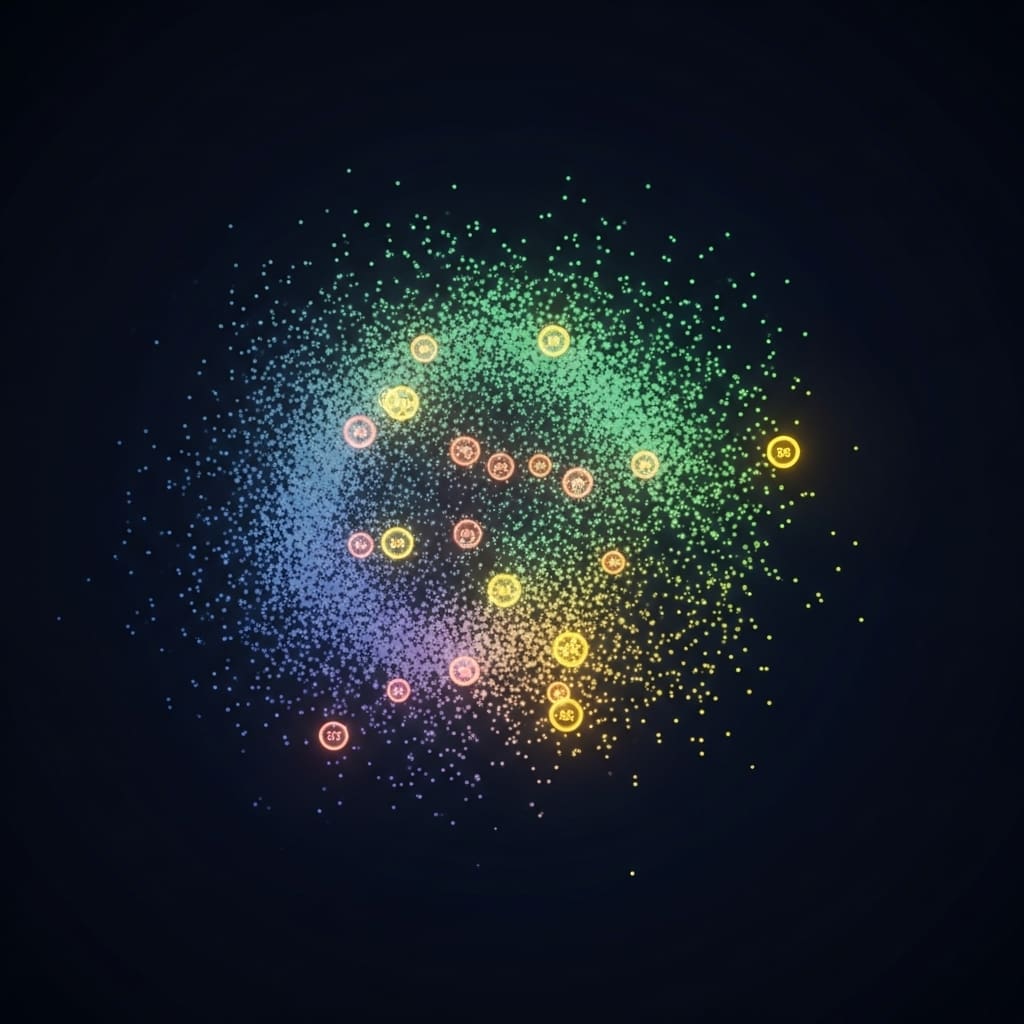

The methodology exploits the geometry of the feature space learned by a deep convolutional neural network to detect inconsistencies between data points and their assigned labels. By identifying and correcting mislabeled samples, the ALED detector aims to improve the accuracy and reliability of machine learning models used in medical image classification and other applications. The research team extracted intermediate features from a DCNN and then denoised them to refine the data representation. They modeled these features with multidimensional Gaussian distributions that map the reduced manifold of each class, which makes it possible to apply a likelihood ratio test to identify mislabeled samples. In experiments on multiple medical imaging datasets, ALED markedly outperforms established label error detection methods.
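The pipeline described above can be sketched in NumPy. This is a minimal illustration under stated assumptions, not the statlab implementation:

- Principal component analysis stands in for ALED's denoising step.
- Each class gets one full-covariance Gaussian with a small ridge term.
- A sample is flagged when some other class explains its features better than its assigned class, which corresponds to a log-likelihood ratio threshold of 0.

The function names and the `n_components`, `ridge` and `threshold` parameters are all hypothetical.

```python
import numpy as np

def pca_denoise(features, n_components):
    # Hypothetical stand-in for ALED's denoising: keep the top principal components.
    centered = features - features.mean(axis=0)
    # Rows of vt are principal directions, sorted by decreasing singular value.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:n_components].T

def gaussian_logpdf(x, mean, cov):
    # Multivariate normal log-density, evaluated for each row of x (shape (n, d)).
    d = mean.shape[0]
    diff = x - mean
    _, logdet = np.linalg.slogdet(cov)
    maha = np.einsum("ij,jk,ik->i", diff, np.linalg.inv(cov), diff)
    return -0.5 * (d * np.log(2 * np.pi) + logdet + maha)

def flag_label_errors(features, labels, n_components=2, ridge=1e-3, threshold=0.0):
    z = pca_denoise(features, n_components)
    classes = np.unique(labels)
    # Fit one Gaussian per class; the ridge term keeps each covariance invertible.
    loglik = np.stack([
        gaussian_logpdf(
            z,
            z[labels == c].mean(axis=0),
            np.cov(z[labels == c], rowvar=False) + ridge * np.eye(n_components),
        )
        for c in classes
    ], axis=1)  # shape (n_samples, n_classes)
    rows = np.arange(len(labels))
    label_idx = np.searchsorted(classes, labels)
    given = loglik[rows, label_idx]
    # Mask the assigned class to find the most likely alternative class.
    others = loglik.copy()
    others[rows, label_idx] = -np.inf
    # Log-likelihood ratio: best alternative class versus the assigned label.
    llr = others.max(axis=1) - given
    return llr > threshold, llr

# Synthetic usage: two well-separated "feature" clusters with two flipped labels.
rng = np.random.default_rng(0)
features = np.vstack([
    rng.normal(loc=0.0, scale=1.0, size=(100, 5)),
    rng.normal(loc=6.0, scale=1.0, size=(100, 5)),
])
labels = np.array([0] * 100 + [1] * 100)
labels[[3, 150]] = [1, 0]  # deliberately mislabel two samples
flags, llr = flag_label_errors(features, labels)
print(np.flatnonzero(flags))
```

In this toy setting, each flipped sample lies far from the mean of its assigned class, so its log-likelihood ratio should be large and positive. Correctly labeled samples should receive strongly negative ratios. Real DCNN features are higher-dimensional and less cleanly separated, which is where the choice of denoising and threshold would matter.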

The core of ALED is its ability to pinpoint errors in training data accurately, a critical step towards more robust machine learning models. Fine-tuning a neural network on data corrected with ALED reduced test set errors by 33.8%, a tangible benefit for end users who rely on accurate and reliable predictive models. The team quantified this gain as the reduction in classification errors after training on the corrected datasets, which highlights the practical impact of the work.
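A 33.8% reduction can be read in two ways: as a relative reduction or as a drop in percentage points. The sketch below assumes the figure is a relative reduction in the number of misclassified test images. The article does not state how it was computed. The counts and test set size are hypothetical, chosen only to reproduce that percentage, and are not results from the paper.

```python
def relative_error_reduction(errors_before, errors_after):
    # Fraction of the baseline errors that were eliminated (a relative change).
    return (errors_before - errors_after) / errors_before

# Hypothetical counts, chosen only to illustrate a 33.8% relative reduction.
before, after = 500, 331
print(f"{relative_error_reduction(before, after):.1%}")  # prints 33.8%

# The same change expressed in percentage points on a hypothetical test set.
test_set_size = 10_000
rate_before = before / test_set_size
rate_after = after / test_set_size
print(f"{(rate_before - rate_after) * 100:.2f} percentage points")  # prints 1.69 percentage points
```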

ALED is also implemented as a deployable Python package, statlab, which eases adoption and integration into existing machine learning pipelines. Its main advantage is higher sensitivity in detecting mislabeled samples, addressing a key limitation of existing confident learning approaches. The method extracts and denoises intermediate features, models each class with a multidimensional Gaussian distribution, and then applies a likelihood ratio test for mislabeling. Compared with existing label error detection techniques, ALED improves sensitivity without reducing precision across multiple medical imaging datasets. These gains extend to model performance: fine-tuning on data corrected with ALED decreased test set errors by 33.8%.
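The comparison rests on two metrics, sensitivity and precision. They can be computed from the set of samples a detector flags and the set of samples known to be mislabeled, as in a benchmark with verified labels. The function name and the sample indices below are hypothetical.

```python
def detection_metrics(flagged, truly_mislabeled):
    # Both arguments are sets of sample indices.
    tp = len(flagged & truly_mislabeled)  # label errors the detector caught
    # Sensitivity: share of the real label errors that were flagged.
    sensitivity = tp / len(truly_mislabeled) if truly_mislabeled else 0.0
    # Precision: share of flagged samples that really are label errors.
    precision = tp / len(flagged) if flagged else 0.0
    return sensitivity, precision

# Hypothetical example: 4 known label errors; the detector flags 5 samples, 3 correctly.
truth = {2, 7, 11, 19}
flagged = {2, 7, 11, 23, 40}
print(detection_metrics(flagged, truth))  # prints (0.75, 0.6)
```

Raising sensitivity without lowering precision, as the authors report for ALED, means catching more of the true label errors without adding a larger share of false alarms to the set of samples sent for review.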

This highlights the potential of ALED to improve the generalisation of deep learning systems by addressing data quality. The authors acknowledge that ALED's performance may depend on the hyperparameters used during model training, and they suggest further investigation into when in the training process ALED is best applied. Future research could also explore features extracted from different depths of the network, which might further refine detection accuracy.

👉 More information

🗞 Adaptive Label Error Detection: A Bayesian Approach to Mislabeled Data Detection

🧠 ArXiv: https://arxiv.org/abs/2601.10084