The growing scale of large language models demands ever more powerful and efficient hardware, and researchers are now rigorously evaluating alternatives to the NVIDIA GPUs that have traditionally dominated LLM deployments. Chandrish Ambati and Trung Diep, both of Celestial AI, lead a comprehensive analysis of the AMD MI300X GPU, an alternative designed to handle the computational demands of models with hundreds of billions of parameters. The work assesses the MI300X’s compute throughput, memory capacity and bandwidth, and interconnect communication to determine how well it suits large language model inference. The findings show where this new architecture delivers on its specifications, where it falls short, and what it would take to become a viable platform for large-scale AI systems.

…capacity, matrix cores, and their proprietary interconnect. This paper presents a comprehensive evaluation of AMD’s MI300X GPUs across the performance domains most critical to LLM inference: compute throughput, memory bandwidth, and interconnect communication. The evaluation uses vendor-provided microbenchmarks, supplemented with custom kernels, to analyse compute, memory, and communication efficiency. The work also compares architectural features across generations of AMD and NVIDIA products, highlighting the gap between theoretical specifications and real-world performance. For full-system evaluation, end-to-end inference throughput is benchmarked using …

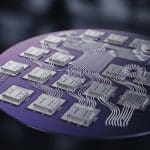

LLM Inference Performance of MI300X GPUs

This document presents a comprehensive analysis of AMD MI300X performance relative to NVIDIA H100 and H200 GPUs for Large Language Model (LLM) inference. The MI300X offers high memory bandwidth and capacity and targets NVIDIA’s high-end GPUs, while the H100 and H200 currently lead in compute performance, particularly for matrix multiplication, and benefit from a mature software ecosystem. The evaluation combines microbenchmarks of compute throughput, memory bandwidth, and communication bandwidth with end-to-end Llama 3 inference tests at FP8 and FP16 precision.
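Communication benchmarks for collectives such as all-reduce usually report two bandwidth figures. The sketch below follows the convention documented for NVIDIA's nccl-tests: algorithm bandwidth is bytes divided by time, and bus bandwidth rescales it by 2(n−1)/n so that results are comparable across GPU counts. Whether the study used exactly this convention is an assumption; the function name and the 1 GiB / 10 ms / 8-GPU timing are hypothetical inputs, not measurements from the paper.

```python
def allreduce_bandwidths(size_bytes: int, seconds: float, n_gpus: int) -> tuple[float, float]:
    """Return (algorithm bandwidth, bus bandwidth) in GB/s for one all-reduce.

    algbw = S / t; for all-reduce, busbw = algbw * 2 * (n - 1) / n,
    which accounts for each byte crossing the links roughly twice in a ring.
    """
    if n_gpus < 2:
        raise ValueError("all-reduce bandwidth needs at least two GPUs")
    algbw = size_bytes / seconds / 1e9
    busbw = algbw * 2 * (n_gpus - 1) / n_gpus
    return algbw, busbw


# Hypothetical timing: 1 GiB all-reduced across 8 GPUs in 10 ms.
alg, bus = allreduce_bandwidths(1 << 30, 0.010, 8)
print(f"algbw = {alg:.1f} GB/s, busbw = {bus:.1f} GB/s")
```

Bus bandwidth is the figure to compare against a link's rated speed, since it is normalized for the number of participants.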

The results show that NVIDIA GPUs consistently outperform the MI300X in compute-bound work such as the prefill phase of inference. The MI300X’s memory bandwidth is competitive, however, especially at larger working-set sizes, and this shows most clearly in the decode-dominated phase of inference. With FP8 precision, the MI300X reaches roughly 50 to 66% of the H100’s performance; with FP16 it reaches up to 80%, because FP16 inference leans more heavily on its stronger memory bandwidth. A key finding is that NVIDIA’s more mature and optimized software stack, including TensorRT-LLM and NCCL, contributes significantly to its lead, while AMD’s software is still developing.
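A roofline estimate is one way to see why prefill and decode stress different resources. The sketch below is an illustration, not the study's method: it computes a GEMM's arithmetic intensity (FLOPs per byte moved) and caps attainable throughput at the lower of the compute peak and intensity × bandwidth. The 1307.4 TFLOP/s dense FP16 peak and 5.3 TB/s bandwidth are AMD's published MI300X figures brought in from outside the article; the 8192-wide projection and 2048-token prompt are hypothetical shapes.

```python
def gemm_arithmetic_intensity(m: int, n: int, k: int, bytes_per_elem: int) -> float:
    """FLOPs per byte for C[m,n] = A[m,k] @ B[k,n], counting one read of A and B and one write of C."""
    flops = 2 * m * n * k
    bytes_moved = bytes_per_elem * (m * k + k * n + m * n)
    return flops / bytes_moved


def attainable_tflops(intensity: float, peak_tflops: float, bandwidth_tbs: float) -> float:
    """Roofline model: FLOP/byte x TB/s = TFLOP/s, capped by the compute peak."""
    return min(peak_tflops, intensity * bandwidth_tbs)


PEAK_FP16_TFLOPS = 1307.4  # assumption: AMD's published dense FP16 peak for MI300X
HBM_TBS = 5.3              # assumption: AMD's published peak HBM bandwidth for MI300X
HIDDEN = 8192              # hypothetical d x d projection weight

ridge = PEAK_FP16_TFLOPS / HBM_TBS  # intensity where compute and memory limits meet

# Prefill: a 2048-token prompt, i.e. a [2048, d] activation times a [d, d] weight (FP16 = 2 bytes).
prefill_ai = gemm_arithmetic_intensity(2048, HIDDEN, HIDDEN, 2)
# Decode at batch 1: a single new token, i.e. a [1, d] activation times the same weight.
decode_ai = gemm_arithmetic_intensity(1, HIDDEN, HIDDEN, 2)

for name, ai in [("prefill", prefill_ai), ("decode", decode_ai)]:
    bound = "compute" if ai >= ridge else "memory"
    tflops = attainable_tflops(ai, PEAK_FP16_TFLOPS, HBM_TBS)
    print(f"{name}: {ai:.1f} FLOP/byte -> {tflops:.1f} TFLOP/s ({bound}-bound)")
```

With these inputs the prefill GEMM sits near 1365 FLOP/byte, well right of the ridge point near 247 FLOP/byte, so it is compute-bound, while batch-1 decode sits near 1 FLOP/byte and is limited by memory bandwidth. For small batches, decode intensity grows roughly in proportion to batch size.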

Detailed analysis shows that the MI300X underperforms the H100 and H200 in FP8 inference, particularly during prefill, but narrows the gap in FP16 inference, especially in the decode phase: FP16’s larger memory footprint lets the MI300X make better use of its memory bandwidth. The document concludes that the MI300X shows promise, particularly for memory-bound workloads, but needs significant software improvements to realize its potential. Priority areas for AMD include collective communication libraries that match NVIDIA’s NCCL, better compiler optimization for LLM workloads, and a stable, well-supported software stack. NVIDIA currently leads in LLM inference largely because of its more mature and optimized software ecosystem, and the broader lesson is that hardware alone is insufficient: closing this software gap is crucial if AMD is to become a competitive player in the AI market.
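The FP16 observation can be illustrated with a simple lower bound: each decode step must stream every weight from HBM at least once, so step time cannot fall below weight bytes divided by memory bandwidth. This is a hypothetical back-of-the-envelope sketch, assuming a 70-billion-parameter model (roughly Llama 3 70B sized) held on a single GPU at AMD's published 5.3 TB/s; it ignores KV-cache traffic, compute time, and kernel overheads, so real steps are slower.

```python
def min_decode_step_ms(n_params: float, bytes_per_param: float, bandwidth_tbs: float) -> float:
    """Lower bound on one decode step: every weight byte is read from HBM at least once."""
    total_bytes = n_params * bytes_per_param
    return total_bytes / (bandwidth_tbs * 1e12) * 1e3


N_PARAMS = 70e9  # assumption: a hypothetical 70B-parameter model
BW_TBS = 5.3     # assumption: AMD's published MI300X peak HBM bandwidth

for fmt, nbytes in [("FP16", 2), ("FP8", 1)]:
    print(f"{fmt}: >= {min_decode_step_ms(N_PARAMS, nbytes, BW_TBS):.1f} ms per decode step")
```

Doubling bytes per parameter doubles the bound (about 26.4 ms per step in FP16 versus 13.2 ms in FP8 under these assumptions), so FP16 decode spends more of each step moving data, which is the regime where a bandwidth advantage matters most.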

MI300X Shows Lower Compute Throughput Than NVIDIA

Serving models with hundreds of billions of parameters requires GPUs that actually deliver their rated compute. In matrix-multiplication (GEMM) benchmarks, the MI300X sustained on average 45% of its theoretical peak FLOPs across FP8, BF16, and FP16, whereas NVIDIA’s H100 and B200 sustained up to 93% of theirs, a markedly higher utilization of the available hardware.
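Utilization figures such as 45% and 93% are achieved GEMM throughput divided by the theoretical peak. A minimal sketch of that arithmetic follows; the function names and the example timing and peak are hypothetical. In a real measurement the time would come from running a vendor GEMM on the GPU and synchronizing the device before reading the clock, since kernel launches are asynchronous.

```python
def gemm_flops(m: int, n: int, k: int) -> int:
    """Floating-point operations in C[m,n] = A[m,k] @ B[k,n]: one multiply and one add per term."""
    return 2 * m * n * k


def achieved_tflops(m: int, n: int, k: int, seconds: float) -> float:
    """Throughput actually sustained by a GEMM that took `seconds` of wall time."""
    return gemm_flops(m, n, k) / seconds / 1e12


def utilization(achieved: float, peak: float) -> float:
    """Fraction of the theoretical peak that the kernel sustained."""
    return achieved / peak


# Hypothetical example: a 4096 x 4096 x 4096 GEMM timed at 0.25 ms on a GPU
# with a hypothetical 1000 TFLOP/s peak. Neither number comes from the study.
tflops = achieved_tflops(4096, 4096, 4096, 0.25e-3)
print(f"{tflops:.0f} TFLOP/s, {utilization(tflops, 1000.0):.0%} of peak")
```

The peak passed to `utilization` must match the precision being measured, since FP8, FP16, and BF16 peaks differ.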

NVIDIA GPUs also scale well, exceeding 90% of theoretical FLOPs already at moderate matrix sizes. The MI300X, by contrast, plateaus at about 50% utilization for matrices of size 4096 and above and dips slightly at the largest sizes, a limitation attributed to memory-bound kernels and to the limited capacity of shared memory and caches. Further investigation traced the gap between theoretical and observed FLOPs on the MI300X to compiler and kernel maturity and to dynamic frequency scaling. Microbenchmarks put the MI300X’s software efficiency at 81% for FP8, 85% for FP16, and 80% for BF16, leaving headroom that better software could recover.
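The 45% average and the 80 to 85% software efficiencies can be reconciled with a two-factor split, assuming (the article does not define the term) that software efficiency means achieved GEMM throughput divided by the peak that microbenchmarks can actually attain. Overall utilization is then the attainable fraction times software efficiency. The hypothetical helper below inverts that product; treat its output as rough, since 45% is an average across formats rather than a per-format figure.

```python
def implied_attainable_fraction(overall_fraction: float, software_efficiency: float) -> float:
    """Assumes overall = (attainable / theoretical) * (achieved / attainable).

    Returns attainable / theoretical, the ceiling that even a perfect kernel
    could not exceed under this interpretation.
    """
    return overall_fraction / software_efficiency


OVERALL = 0.45  # average fraction of theoretical peak reported across formats
for fmt, sw_eff in [("FP8", 0.81), ("FP16", 0.85), ("BF16", 0.80)]:
    ceiling = implied_attainable_fraction(OVERALL, sw_eff)
    print(f"{fmt}: implied attainable ceiling ~ {ceiling:.0%} of theoretical peak")
```

Under this reading the implied ceiling lands around 53 to 56% of the theoretical peak, which would place much of the gap below the kernel level, in effects such as clock behavior under dynamic frequency scaling, rather than in the kernels alone.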

The MI300X carries eight HBM3 stacks that together supply its memory capacity and bandwidth. AMD specifies 5.3 TB/s of peak memory bandwidth for the MI300X, against 3.9 TB/s for the 94 GB H100 variant, and 192 GB of HBM3 capacity against that H100’s 94 GB of HBM3, so the MI300X leads on both capacity and bandwidth.
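The capacity figures translate directly into which models fit on one GPU. A minimal sketch, assuming 24 GB per HBM stack (so eight stacks give the 192 GB quoted above) and a hypothetical 70-billion-parameter model; it counts weights only, so the KV cache, activations, and runtime reserves would need additional headroom.

```python
STACKS = 8
GB_PER_STACK = 24                  # assumption: 24 GB per HBM3 stack
MI300X_GB = STACKS * GB_PER_STACK  # 192 GB, matching the capacity quoted in the text
H100_GB = 94                       # the H100 variant quoted in the text


def weights_gb(n_params: float, bytes_per_param: float) -> float:
    """Weight storage in decimal gigabytes."""
    return n_params * bytes_per_param / 1e9


def fits(n_params: float, bytes_per_param: float, capacity_gb: float) -> bool:
    """True if the weights alone fit; KV cache and activations are not counted."""
    return weights_gb(n_params, bytes_per_param) <= capacity_gb


N_PARAMS = 70e9  # hypothetical 70B-parameter model
for fmt, nbytes in [("FP16", 2), ("FP8", 1)]:
    for gpu, cap in [("MI300X", MI300X_GB), ("H100", H100_GB)]:
        verdict = "fits" if fits(N_PARAMS, nbytes, cap) else "does not fit"
        print(f"{fmt} weights ({weights_gb(N_PARAMS, nbytes):.0f} GB) {verdict} on {gpu} ({cap} GB)")
```

Under these assumptions, 140 GB of FP16 weights fits on one MI300X but not on the 94 GB H100, while 70 GB of FP8 weights fits on either.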

MI300X Inference Performance and Bottlenecks

This research presents a comprehensive evaluation of the AMD MI300X GPU for large language model inference, covering compute throughput, memory bandwidth, and interconnect communication. The results show that the MI300X’s memory bandwidth makes it competitive in FP16 inference, narrowing the performance gap to leading NVIDIA GPUs, particularly as model weights and KV caches grow. In FP8 scenarios, however, the study identifies lower bandwidth as a limiting factor, underscoring how strongly the choice of precision format shapes overall performance. The team acknowledges that realizing the MI300X’s full potential requires continued development of its software ecosystem, noting that NVIDIA currently holds an advantage with its mature CUDA stack and frameworks such as NVIDIA Dynamo.
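The cache growth mentioned above refers to the key/value (KV) cache, whose size grows linearly with batch size and context length and which is reread on every decode step. A minimal sizing sketch, assuming a Llama-3-70B-like shape (80 layers, 8 KV heads under grouped-query attention, head dimension 128) stored in FP16; the helper name is hypothetical.

```python
def kv_cache_bytes(n_layers: int, n_kv_heads: int, head_dim: int,
                   seq_len: int, batch: int, bytes_per_elem: int) -> int:
    """Bytes for keys and values (factor 2) across all layers, tokens, and sequences."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch * bytes_per_elem


# Assumption: Llama-3-70B-like shape (80 layers, 8 KV heads, head_dim 128), FP16 storage.
per_token = kv_cache_bytes(80, 8, 128, seq_len=1, batch=1, bytes_per_elem=2)
print(per_token)  # 327680 bytes (320 KiB) per token

for batch in (1, 16, 64):
    total = kv_cache_bytes(80, 8, 128, seq_len=8192, batch=batch, bytes_per_elem=2)
    print(f"batch {batch:>2}, 8K context: {total / 1e9:.1f} GB")
```

At roughly 320 KiB per token, an 8K-token context costs about 2.7 GB per sequence, so a batch of 64 such sequences needs about 172 GB for the cache alone, and every one of those bytes is read again on each decode step.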

👉 More information

🗞 AMD MI300X GPU Performance Analysis

🧠 arXiv: https://arxiv.org/abs/2510.27583