The growing reliance on large language models (LLMs) demands robust evaluation methods, and arena-style comparisons, in which models compete head-to-head, have become a popular choice. Raphael Tang from University College London, Crystina Zhang from the University of Waterloo, and Wenyan Li challenge the conventional wisdom that a ‘draw’ in these competitions indicates equal performance. Their research shows that draws more likely signal the difficulty of the question itself than genuine equivalence between the models, and that ignoring draws when updating ratings actually improves the accuracy of predicting battle outcomes. This finding, supported by analyses of real-world arena datasets and by work from Carmen Lai, Pontus Stenetorp from University College London, and Yao Lu, suggests that future LLM evaluation systems should reconsider how draws are interpreted and incorporate question characteristics into the rating process for more meaningful comparisons.

Draws Reveal Query Difficulty, Improve LLM Ratings

This research re-examines how ratings are assigned in arena-style evaluations of large language models (LLMs), where two models answer the same user query and a human judge picks the winner. The authors question the standard practice of treating a draw as evidence of equal performance and propose instead that draws signal the difficulty of the query itself. They analyzed three real-world arena datasets, LMArena, SearchArena, and VisionArena, comprising over 106,000, 24,000, and 30,000 battles respectively and covering a broad range of LLMs. Experiments showed that skipping rating updates for battles that end in a draw improves the accuracy of predicting battle outcomes by 0.5 to 3.0% on average across four rating systems: Elo, Glicko-2, Bradley-Terry, and TrueSkill. Elo showed the largest improvement, a 3.0% increase in prediction accuracy. The gains were statistically significant in 18 of 23 tested cases, indicating a consistent benefit from the revised approach. The team also confirmed that the improvements were not merely a side effect of using less data, by comparing them against randomly omitted updates.
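To make the proposal concrete, here is a minimal Python sketch of an Elo replay in which draw updates can be skipped. It is not the authors' code: the K-factor of 32, the starting rating of 1000, the `(model_a, model_b, outcome)` battle format, and the accuracy definition (predict the higher-rated model and score only decisive battles) are illustrative assumptions, as are the hypothetical helper names `evaluate_elo`, `draw_indices`, and `random_control`. The last helper approximates the data-volume control by skipping a random set of updates of the same size as the set of draws, which is also an assumption about how that control was built.

```python
import random
from collections import defaultdict

# Actual score of model A for each recorded outcome.
SCORE = {"a": 1.0, "b": 0.0, "draw": 0.5}


def expected_score(r_a: float, r_b: float) -> float:
    """Standard Elo expected score of A against B (base-10 logistic, scale 400)."""
    return 1.0 / (1.0 + 10 ** ((r_b - r_a) / 400.0))


def evaluate_elo(battles, k=32.0, skip=frozenset(), init=1000.0):
    """Replay battles in order: predict each one, then (optionally) update.

    battles: list of (model_a, model_b, outcome), outcome in {"a", "b", "draw"}.
    skip: indices of battles whose rating update is omitted.
    Returns (accuracy on decisive battles, final ratings).
    """
    ratings = defaultdict(lambda: init)
    correct = total = 0
    for i, (a, b, outcome) in enumerate(battles):
        e_a = expected_score(ratings[a], ratings[b])
        if outcome != "draw":
            # Assumption: predict the higher-rated model; ties go to model A.
            predicted = "a" if e_a >= 0.5 else "b"
            correct += predicted == outcome
            total += 1
        if i in skip:
            continue  # leave both ratings unchanged for this battle
        delta = k * (SCORE[outcome] - e_a)
        ratings[a] += delta
        ratings[b] -= delta
    accuracy = correct / total if total else float("nan")
    return accuracy, dict(ratings)


def draw_indices(battles):
    """Indices of battles that ended in a draw (the updates to skip)."""
    return frozenset(i for i, (_, _, o) in enumerate(battles) if o == "draw")


def random_control(battles, seed=0):
    """Hypothetical control: skip as many updates as there are draws, at random."""
    n = len(draw_indices(battles))
    return frozenset(random.Random(seed).sample(range(len(battles)), n))


# Toy battles (illustrative only, not arena data).
battles = [("x", "y", "a"), ("x", "y", "draw"), ("y", "x", "b"), ("x", "y", "a")]
acc_all, r_all = evaluate_elo(battles)
acc_skip, r_skip = evaluate_elo(battles, skip=draw_indices(battles))
acc_rand, r_rand = evaluate_elo(battles, skip=random_control(battles))
```

In this form Elo is zero-sum: each update moves the two ratings by equal and opposite amounts. A draw update between unequally rated models pulls them toward each other, so skipping it leaves the gap intact; on the toy data, model `x` ends slightly higher in `r_skip` than in `r_all`.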

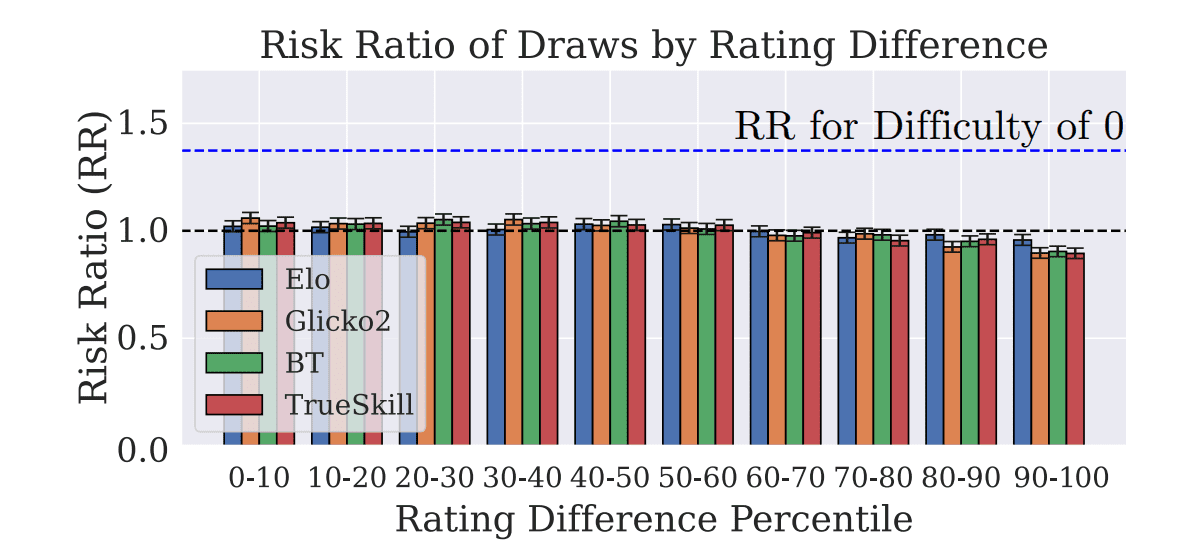

Further analysis explored what drives draws, using GPT-4 to rate each query's difficulty and subjectivity. Draws were 1.37 times more likely for queries rated very easy and 1.35 times more likely for highly objective queries. This suggests that when a question is straightforward or has a clear answer, both models are likely to answer it well, producing a draw. The team also examined how the rating difference between the two models relates to draws, finding that draws occur regardless of the rating gap, which further supports the hypothesis that draws are driven primarily by query characteristics rather than by evenly matched skill. This work offers a more nuanced understanding of LLM evaluation and a path toward more accurate and informative ratings.
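A figure such as "1.37 times more likely" can be read as a category's draw rate divided by a baseline draw rate. The sketch below assumes the baseline is the overall draw rate (the article does not say which baseline the paper uses) and that per-query labels such as `difficulty` already exist, for example from an LLM judge; the labeling step is not shown. The field names, the 50-point bin width, and the six toy records are hypothetical and are not data from the paper. The second function bins battles by the absolute rating gap between the two models to check whether the draw rate depends on that gap.

```python
from collections import Counter


def draw_rate_ratio(records, key):
    """Draw rate within each category divided by the overall draw rate.

    records: iterable of dicts with a boolean "draw" field and a category field.
    key: name of the (hypothetical) category field, e.g. "difficulty".
    """
    records = list(records)
    overall = sum(r["draw"] for r in records) / len(records)
    if overall == 0:
        raise ValueError("no draws in the data; ratio is undefined")
    n = Counter(r[key] for r in records)                # battles per category
    d = Counter(r[key] for r in records if r["draw"])   # draws per category
    return {c: (d[c] / n[c]) / overall for c in n}


def draw_rate_by_gap(records, bin_width=50):
    """Draw rate per bin of absolute rating difference between the two models."""
    n, d = Counter(), Counter()
    for r in records:
        b = int(abs(r["rating_gap"]) // bin_width)
        n[b] += 1
        d[b] += r["draw"]
    # Key each bin by its lower edge in rating points.
    return {b * bin_width: d[b] / n[b] for b in sorted(n)}


# Toy records (illustrative only).
records = [
    {"difficulty": "very easy", "draw": True, "rating_gap": 10},
    {"difficulty": "very easy", "draw": True, "rating_gap": 120},
    {"difficulty": "very easy", "draw": False, "rating_gap": 30},
    {"difficulty": "hard", "draw": False, "rating_gap": 20},
    {"difficulty": "hard", "draw": True, "rating_gap": 80},
    {"difficulty": "hard", "draw": False, "rating_gap": 140},
]
ratios = draw_rate_ratio(records, "difficulty")
gaps = draw_rate_by_gap(records)
```

On real arena logs, a roughly flat draw rate across rating-gap bins would be consistent with the observation that draws occur regardless of the gap.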

Because draws cluster on easy or highly objective queries, where both models tend to succeed regardless of relative skill, the authors argue against treating draws as simple indicators of equal ability and stress the importance of accounting for query characteristics when evaluating LLMs. They acknowledge that further research is needed to fully understand the interplay between query properties, draw rates, and model performance, and recommend that future rating systems incorporate these factors for more accurate evaluations.

👉 More information

🗞 Drawing Conclusions from Draws: Rethinking Preference Semantics in Arena-Style LLM Evaluation

🧠 ArXiv: https://arxiv.org/abs/2510.02306