The growing demand for large language models poses a significant challenge for deploying artificial intelligence on resource-constrained edge devices, which typically rely on CPUs. Hyunwoo Oh, KyungIn Nam, and Rajat Bhattacharjya, along with colleagues, address this problem with T-SAR, a framework that enables scalable ternary language model inference directly on CPUs. The team reorganises the CPU's SIMD registers so that lookup tables are generated dynamically inside the processor itself, eliminating slow, power-hungry memory accesses to precomputed tables. This approach reduces matrix-multiplication (GEMM) latency by up to 24.5 times and achieves significantly higher energy efficiency than an NVIDIA Jetson AGX Orin edge platform, paving the way for practical, efficient large language model deployment on a wider range of devices.

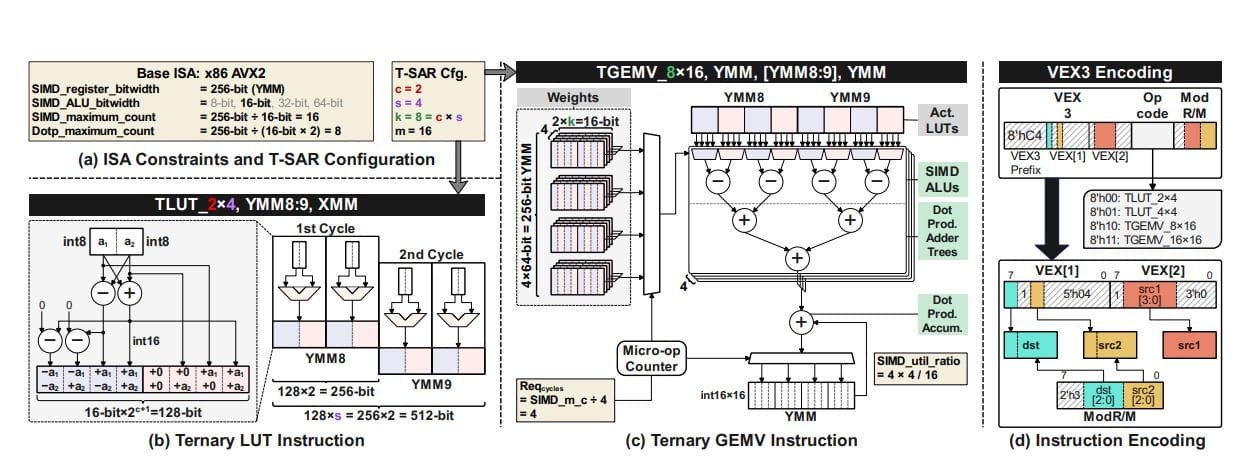

Ternary quantization, which restricts weights to three values (-1, 0, +1), offers significant resource savings. However, current CPU solutions rely on lookup tables (LUTs) stored in memory, which limits scalability, while specialized accelerators such as FPGAs or GPUs are impractical for edge devices. This work presents T-SAR, a framework that achieves scalable ternary LLM inference on CPUs by repurposing the SIMD register file for dynamic, in-register LUT generation with minimal hardware modifications. T-SAR removes the memory bottleneck and maximizes data-level parallelism, improving both latency and throughput.

Experiments demonstrate a 5.6- to 24.5-fold reduction in GEMM latency and a 1.1- to 86.2-fold improvement in GEMV throughput, achieved with only 3.2% power and 1.4% area overhead in the SIMD units. These results establish a significant leap in energy efficiency, with T-SAR achieving 2.5- to 4.9-fold higher energy efficiency than a Jetson AGX Orin on the Llama-8B and Falcon3-10B models.

Ternary LLM Inference via Table Lookups

T-MAC, an earlier CPU-centric approach, accelerates low-bit (including ternary) Large Language Model (LLM) inference on edge devices. Its core idea is to replace the multiply-accumulate arithmetic of matrix multiplication with table lookups, exploiting the data-level parallelism of CPUs and reducing the arithmetic work per weight. This lets it compete with, and in some cases surpass, GPU and FPGA solutions without specialized hardware or complex software stacks. Its key ingredients are low-bit quantization such as ternary weights, which balances model compression against accuracy, and table-lookup-based matrix accumulation, which precomputes partial products and stores them in lookup tables to cut arithmetic operations during inference.
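To make table-lookup accumulation concrete, the Python sketch below precomputes, for each group of activations, the partial sum for every possible ternary weight pattern, then replaces the group's multiply-adds with a single table read. The group size `K = 4`, the base-3 pattern indexing, and the helper names are illustrative assumptions, not T-MAC's actual SIMD kernel.

```python
from itertools import product

K = 4  # hypothetical group size: K activations share one table


def build_ternary_lut(x_group):
    """Precompute the partial sum for each of the 3**len(x_group) ternary weight patterns."""
    return [sum(w * x for w, x in zip(pattern, x_group))
            for pattern in product((-1, 0, 1), repeat=len(x_group))]


def pattern_index(w_group):
    """Position of a ternary pattern in product((-1, 0, 1), ...) order (base 3, first weight most significant)."""
    idx = 0
    for w in w_group:
        idx = idx * 3 + (w + 1)  # -1 -> 0, 0 -> 1, +1 -> 2
    return idx


def lut_gemv(W, x):
    """y = W @ x for ternary W: one table read per K-weight group instead of K multiply-adds."""
    groups = range(0, len(x), K)
    luts = [build_ternary_lut(x[g:g + K]) for g in groups]  # precomputed and held in memory
    return [sum(luts[t][pattern_index(row[g:g + K])] for t, g in enumerate(groups))
            for row in W]


W = [[1, 0, -1, 1, -1, -1, 0, 0],
     [0, 1, 1, 0, 1, -1, 1, -1]]
x = [3, -2, 5, 1, 4, 0, -7, 2]
print(lut_gemv(W, x))                 # [-5, -2]
print(len(build_ternary_lut(x[:K])))  # 81 entries per group
```

Each four-weight group needs an 81-entry table in this scheme, and all tables are built up front and kept in memory until every row has been processed.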

T-MAC is reported to achieve significant speedups over baseline CPU implementations, up to 2.5x over optimized CPU baselines, and performance competitive with GPUs and FPGAs on various LLM benchmarks. By reducing arithmetic operations, it also improves energy efficiency, which suits battery-powered edge devices. Its authors released the complete software implementation as open source, facilitating further research and adoption. T-MAC argues for a software-centric approach to LLM inference that makes fuller use of readily available CPUs instead of relying on specialized hardware.

Ternary LLM Inference on CPUs with T-SAR

The growing size of large language models (LLMs) makes them hard to deploy on edge devices with limited computational resources. T-SAR addresses this by generating lookup tables (LUTs) inside the CPU's SIMD register file, with minimal hardware modifications, removing memory bottlenecks and maximizing data-level parallelism. Detailed analysis shows that prior approaches rely on precomputed LUTs stored in system memory: 87.6% of all memory transactions go to accessing these LUTs, even though they occupy less than 0.01% of total RAM.

T-SAR removes this overhead by generating compressed LUTs on the fly within the SIMD registers, eliminating the external memory traffic and enabling fused GEMV-accumulation operations. The core innovation is a ternary-to-binary decomposition algorithm that splits ternary weights into a dense and a sparse binary form, so the original dot product becomes the difference of two binary dot products. Because a group of binary weights has far fewer possible patterns than a ternary group of the same size (for example, 16 instead of 81 for groups of four), the decomposition shrinks the LUT enough to fit within SIMD register bitwidths. The team's co-design stack spans the algorithm, the instruction set architecture (ISA), and the microarchitecture, delivering a practical path to efficient LLM deployment on ubiquitous CPU platforms.
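One decomposition that fits this description can be checked in a few lines of Python. Each ternary weight is written as w = d - s, where the dense part d is +1 or -1 at every position and the sparse part s is 1 only where w is zero. This particular encoding is an assumption for illustration; the paper's exact dense and sparse formats may differ. The sketch verifies the dot-product identity and compares table sizes for k-weight groups.

```python
def decompose(w):
    """Split ternary weights into dense {-1,+1} and sparse {0,1} parts with w == dense - sparse.

    Assumed encoding for illustration: a zero weight becomes dense=+1, sparse=1.
    """
    dense = [1 if wi >= 0 else -1 for wi in w]   # nonzero at every position
    sparse = [1 if wi == 0 else 0 for wi in w]   # nonzero only where w == 0
    return dense, sparse


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


w = [1, 0, -1, 1, -1, -1, 0, 0]
x = [3, -2, 5, 1, 4, 0, -7, 2]
dense, sparse = decompose(w)

assert all(wi == d - s for wi, d, s in zip(w, dense, sparse))
print(dot(w, x), dot(dense, x) - dot(sparse, x))  # -5 -5

# Table entries needed per group of k weights: ternary vs. binary patterns.
for k in (2, 3, 4):
    print(f"k={k}: ternary {3 ** k} entries, binary {2 ** k} entries")
```

With k = 4, a 16-entry table of 16-bit partial sums occupies 256 bits, the width of one AVX2 register; this sizing is an illustrative calculation, not a figure taken from the paper.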

Ternary LLM Inference on CPUs Accelerated

The research team presents T-SAR, a framework for efficient ternary large language model (LLM) inference directly on central processing units (CPUs). By moving lookup-table generation from memory into the CPU's SIMD registers, T-SAR turns memory accesses into in-register computation, shifting the bottleneck from memory bandwidth to datapath execution and improving both speed and energy efficiency. On workstation and laptop platforms, T-SAR outperforms a Jetson AGX Orin, delivering up to 8.6 times faster token processing and up to 4.9 times greater energy efficiency. On mobile platforms throughput is lower, but energy consumption per token remains 2.5 to 2.75 times lower than on the Jetson.

Future work could explore advanced lookup-table compression, compiler scheduling optimizations, and integration with sparsity or further quantization methods, potentially extending these benefits to a wider range of devices and applications. The framework's design also leaves room for adapting it to new hardware and software configurations.
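The shift from stored tables to tables generated on the fly can be illustrated with a scalar Python analogy of a fused GEMV. For each group of four activations, a 16-entry subset-sum table is built, used by every output row, and then discarded, so no table outlives its group. The sketch assumes weights are split as w = d - s with d in {-1, +1} and s in {0, 1}, and recovers the dense product from the same table via d·x = 2·(b·x) - Σx, where b marks the non-negative weights. The group size, this single-table trick, and all helper names are hypothetical; T-SAR performs the equivalent work in reorganized SIMD hardware, which plain Python cannot model.

```python
K = 4  # hypothetical group size -> 2**K = 16-entry table


def build_subset_lut(x_group):
    """lut[mask] = sum of x_group[i] over the set bits i of mask, built incrementally."""
    lut = [0] * (1 << len(x_group))
    for mask in range(1, len(lut)):
        low = mask & -mask                # lowest set bit of mask
        i = low.bit_length() - 1          # its position
        lut[mask] = lut[mask ^ low] + x_group[i]
    return lut


def mask_of(bits):
    """Pack a sequence of booleans into an integer bit mask (bit i <- bits[i])."""
    m = 0
    for i, b in enumerate(bits):
        if b:
            m |= 1 << i
    return m


def fused_gemv(W, x):
    """y = W @ x for ternary W, generating each group's table on the fly."""
    y = [0] * len(W)
    for g in range(0, len(x), K):
        lut = build_subset_lut(x[g:g + K])  # short-lived table, reused by all rows
        total = lut[-1]                     # all-ones mask: sum of the whole group
        for r, row in enumerate(W):
            wg = row[g:g + K]
            pos_mask = mask_of([w >= 0 for w in wg])    # b, with dense d = 2*b - 1
            zero_mask = mask_of([w == 0 for w in wg])   # sparse s
            dense_dot = 2 * lut[pos_mask] - total
            y[r] += dense_dot - lut[zero_mask]          # w.x = d.x - s.x
    return y


W = [[1, 0, -1, 1, -1, -1, 0, 0],
     [0, 1, 1, 0, 1, -1, 1, -1]]
x = [3, -2, 5, 1, 4, 0, -7, 2]
print(fused_gemv(W, x))  # [-5, -2]
```

Looping over activation groups on the outside and rows on the inside is what lets each table be consumed immediately and thrown away; the price is rebuilding 16 entries per group, which is cheap next to fetching a stored table from memory.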

👉 More information

🗞 T-SAR: A Full-Stack Co-design for CPU-Only Ternary LLM Inference via In-Place SIMD ALU Reorganization

🧠 ArXiv: https://arxiv.org/abs/2511.13676