Large Language Models increasingly rely on Mixture of Experts (MoE) architectures to achieve exceptional performance, but deploying these massive models presents significant hardware challenges due to the enormous data volumes they require. To overcome these hurdles, Yue Pan, Zihan Xia, Po-Kai Hsu, and colleagues at various institutions present Stratum, a novel system and hardware co-design. Stratum integrates innovative Monolithic 3D-Stackable DRAM with near-memory processing and GPU acceleration, creating a system that dramatically improves both speed and efficiency. By carefully organising data across tiered layers within the 3D DRAM based on predicted usage, the team boosts near-memory processing throughput and achieves up to an 8.29x improvement in decoding throughput and 7.66x better energy efficiency compared to conventional GPU-based systems, representing a substantial advance in MoE model serving.

Integrated DRAM, Near-Memory Processing, and GPU Acceleration

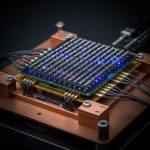

The Stratum system marks a significant step forward in serving Mixture of Experts (MoE) models, which demand substantial data handling and computational power. Researchers engineered a co-designed system integrating innovative Monolithic 3D-Stackable DRAM (Mono3D DRAM), near-memory processing (NMP), and GPU acceleration to achieve considerable performance gains. Mono3D DRAM, built with a monolithic structure, delivers higher internal bandwidth than conventional High Bandwidth Memory (HBM), enabling more efficient near-memory processing. This advanced DRAM connects to logic dies through hybrid bonding and integrates with the GPU via a silicon interposer, creating a tightly integrated hardware platform.

To maximize throughput, the team developed a tiered memory architecture within the Mono3D DRAM stack, strategically assigning data across layers based on predicted usage by different experts. This intelligent data placement optimizes NMP throughput by minimizing data movement and latency. During computation, the system employs a specific matrix partitioning scheme, avoiding duplication of expert weights, which consume significant memory. Instead, weight matrices are split vertically and horizontally to minimize data communication between processing stages, enabling parallel execution and reducing latency.
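The partitioning idea can be illustrated with a small sketch. It assumes a two-layer expert feed-forward block, with the first weight matrix split by columns and the second by rows, so each processing unit holds a disjoint shard and only one reduction is needed at the end. Which matrix Stratum splits in which direction is not stated here, so that pairing, the ReLU activation, and all function names below are assumptions made for illustration.

```python
# Illustrative sketch only: shard an expert FFN across `parts` hypothetical
# processing units without duplicating any weights.

def matmul(a, b):
    """Plain matrix product of two lists-of-rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def relu(m):
    """Element-wise activation (ReLU is an assumption, chosen for simplicity)."""
    return [[max(0, v) for v in row] for row in m]

def split_columns(w, parts):
    """Split a matrix into `parts` equal column blocks."""
    width = len(w[0]) // parts
    return [[row[p * width:(p + 1) * width] for row in w] for p in range(parts)]

def split_rows(w, parts):
    """Split a matrix into `parts` equal row blocks."""
    height = len(w) // parts
    return [w[p * height:(p + 1) * height] for p in range(parts)]

def partitioned_ffn(x, w_up, w_down, parts):
    """Compute relu(x @ w_up) @ w_down from disjoint weight shards.

    Assumes the hidden dimension is divisible by `parts`.
    """
    up_shards = split_columns(w_up, parts)
    down_shards = split_rows(w_down, parts)
    # Each unit works independently on its own pair of shards.
    partials = [matmul(relu(matmul(x, u)), d) for u, d in zip(up_shards, down_shards)]
    # A single element-wise sum combines the partial outputs.
    out = partials[0]
    for partial in partials[1:]:
        out = [[a + b for a, b in zip(r1, r2)] for r1, r2 in zip(out, partial)]
    return out
```

Because the activation is element-wise, applying it to each column shard of the hidden layer gives the same result as applying it to the full hidden layer, which is why no communication is needed between the two matrix products.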

Researchers further optimized execution by partitioning input token matrices into slices and sending each slice to a distinct Mono3D DRAM channel, reducing input preparation overhead. A high-speed logic die ring network then reconstructs the full input matrix for all processing units. Computation of certain operations is overlapped with activation function evaluation, and communication is parallelized with subsequent operations, maximizing resource utilization. For attention layers, heads are assigned across processing unit groups and processed concurrently, minimizing communication overhead and maximizing tensor core utilization.
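The reconstruction of the input matrix over a ring can be pictured as an all-gather. The following is a minimal software simulation, assuming one token slice per node and a unidirectional ring in which every node forwards the slice it most recently received; the actual network protocol on the logic die is not described here, and the function name is hypothetical.

```python
# Illustrative simulation only: ring all-gather of input-token slices.

def ring_all_gather(slices):
    """Return, for each node, the full matrix rebuilt from all slices.

    `slices[i]` is the list of token rows initially held by node i.
    """
    n = len(slices)
    # buffers[i][j] holds slice j once node i has it.
    buffers = [[None] * n for _ in range(n)]
    for i, token_slice in enumerate(slices):
        buffers[i][i] = token_slice
    for step in range(n - 1):
        # Collect all sends first so the step behaves as if simultaneous.
        sends = []
        for i in range(n):
            idx = (i - step) % n  # slice node i received in the previous step
            sends.append(((i + 1) % n, idx, buffers[i][idx]))
        for dst, idx, payload in sends:
            buffers[dst][idx] = payload
    # Concatenate slices in order to rebuild the full input on every node.
    return [[row for token_slice in buf for row in token_slice] for buf in buffers]
```

With `n` nodes, the full matrix is available everywhere after `n - 1` steps, and each link carries exactly one slice per step, so no central buffer is involved.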

These combined innovations resulted in up to an 8.29x improvement in decoding throughput and 7.66x better energy efficiency compared to conventional GPU systems.

Stratum System Accelerates Mixture of Experts Models

The Stratum system represents a significant advancement in serving large language models, particularly those employing a Mixture of Experts (MoE) architecture. Researchers have developed a co-designed system combining innovative Monolithic 3D-Stackable DRAM (Mono3D DRAM), near-memory processing (NMP), and GPU acceleration to overcome challenges posed by the massive data volumes inherent in MoE models. Mono3D DRAM, connected to logic dies via hybrid bonding and to the GPU via a silicon interposer, delivers higher internal bandwidth than conventional High Bandwidth Memory (HBM) due to its dense vertical interconnects. To address latency issues arising from the vertical scaling of Mono3D DRAM, the team constructed internal memory tiers, strategically assigning data across layers based on predicted usage by different experts.
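As a rough illustration of usage-guided tiering, the sketch below ranks experts by a predicted activation frequency and fills tiers greedily, with tier 0 assumed to be the lowest-latency tier. The greedy policy, the capacity model (a fixed number of experts per tier), and the function name are assumptions for illustration, not the placement algorithm used by Stratum.

```python
# Illustrative sketch only: map experts to memory tiers by predicted usage.

def assign_tiers(predicted_usage, tier_capacities):
    """Place the most frequently used experts in the fastest tiers.

    predicted_usage: dict mapping expert id -> predicted activation frequency.
    tier_capacities: experts per tier, ordered from fastest to slowest.
    """
    # Hottest experts first; ties are broken by expert id for determinism.
    ranked = sorted(predicted_usage, key=lambda e: (-predicted_usage[e], e))
    placement = {}
    tier, used = 0, 0
    for expert in ranked:
        # Advance to the next tier once the current one is full.
        while tier < len(tier_capacities) and used >= tier_capacities[tier]:
            tier += 1
            used = 0
        if tier == len(tier_capacities):
            raise ValueError("not enough tier capacity for all experts")
        placement[expert] = tier
        used += 1
    return placement

# Example with made-up frequencies for five experts and three tiers.
usage = {"e0": 0.05, "e1": 0.40, "e2": 0.10, "e3": 0.30, "e4": 0.15}
placement = assign_tiers(usage, [2, 2, 4])
```

In this example the two most active experts land in tier 0 and the least active one in tier 2.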

This tiered approach, guided by expert usage prediction, substantially boosts NMP throughput. The resulting architecture incorporates a ring network for efficient data communication, eliminating the need for centralized global buffers and crossbars found in other NMP approaches. Each bank-level processing element (PE) is designed to execute both GeMM and GeMV operations, incorporating tensor cores, specialized memory components, and a programmable tiering table for adaptive DRAM latency control. Experiments demonstrate that Stratum achieves up to an 8.29x improvement in decoding throughput and a 7.66x increase in energy efficiency compared to standard GPU systems.

The system’s NMP processor, fully implemented on the logic die and hybrid-bonded to the Mono3D DRAM, avoids fabrication constraints and bandwidth limitations of alternative designs. Furthermore, the team’s SIMD-based special function engine efficiently executes general non-linear operators, offering flexibility beyond dedicated Softmax units. This combination of architectural innovations and optimized data flow delivers a substantial performance boost for MoE model serving, paving the way for more efficient and scalable large language model deployments.

Stratum Achieves Efficient Mixture of Experts Serving

The team presents Stratum, a novel system and hardware co-design that efficiently serves Mixture of Experts models, leveraging high-density Mono3D DRAM integrated with logic through 3D hybrid bonding and connected to GPUs via a silicon interposer. This architecture provides a high-throughput and cost-effective alternative to conventional GPU-HBM systems. At the hardware level, Stratum introduces in-memory tiering to manage vertical access latency within Mono3D DRAM, alongside a near-memory processor optimized for expert and attention execution. System-level innovations exploit topic-dependent expert activation patterns to classify and map experts across memory tiers, guided by a lightweight classifier designed to meet service-level objectives.

Comprehensive evaluations, spanning device, circuit, algorithm, and system levels, demonstrate that Stratum achieves up to 8.29x better decoding throughput and up to 7.66x better energy efficiency compared to GPU systems. This work represents a significant advancement in serving large language models, offering a pathway towards more efficient and scalable artificial intelligence systems.

👉 More information

🗞 Stratum: System-Hardware Co-Design with Tiered Monolithic 3D-Stackable DRAM for Efficient MoE Serving

🧠 ArXiv: https://arxiv.org/abs/2510.05245