Researchers are increasingly exploring Deep Research Agents (DRAs) as a way to automate report generation, but a crucial part of real-world research, iterative revision, has remained largely unaddressed. Bingsen Chen, Boyan Li, Ping Nie, Yuyu Zhang, Xi Ye, Chen Zhao, and colleagues now demonstrate that these agents struggle with multi-turn report revision, a process vital for ensuring accuracy and comprehensiveness. Their new evaluation suite, Mr Dre, reveals that while DRAs can incorporate user feedback, they regress on a substantial portion of previously covered content and on citation quality, a significant limitation in their reliability. The work matters because it challenges current single-shot evaluation benchmarks and suggests that substantial improvements are needed before DRAs can replicate the iterative drafting process of human researchers.

This work introduces Mr Dre, an evaluation suite for multi-turn report revision, a crucial aspect of research report creation that existing DRA benchmarks do not cover. The research establishes that current DRAs cannot reliably revise reports through iterative feedback, the process by which human researchers draft and refine their work. Mr Dre comprises a unified evaluation protocol that scores long-form reports on comprehensiveness, factuality, and presentation, alongside a human-verified feedback simulation pipeline that enables realistic multi-turn revision scenarios.

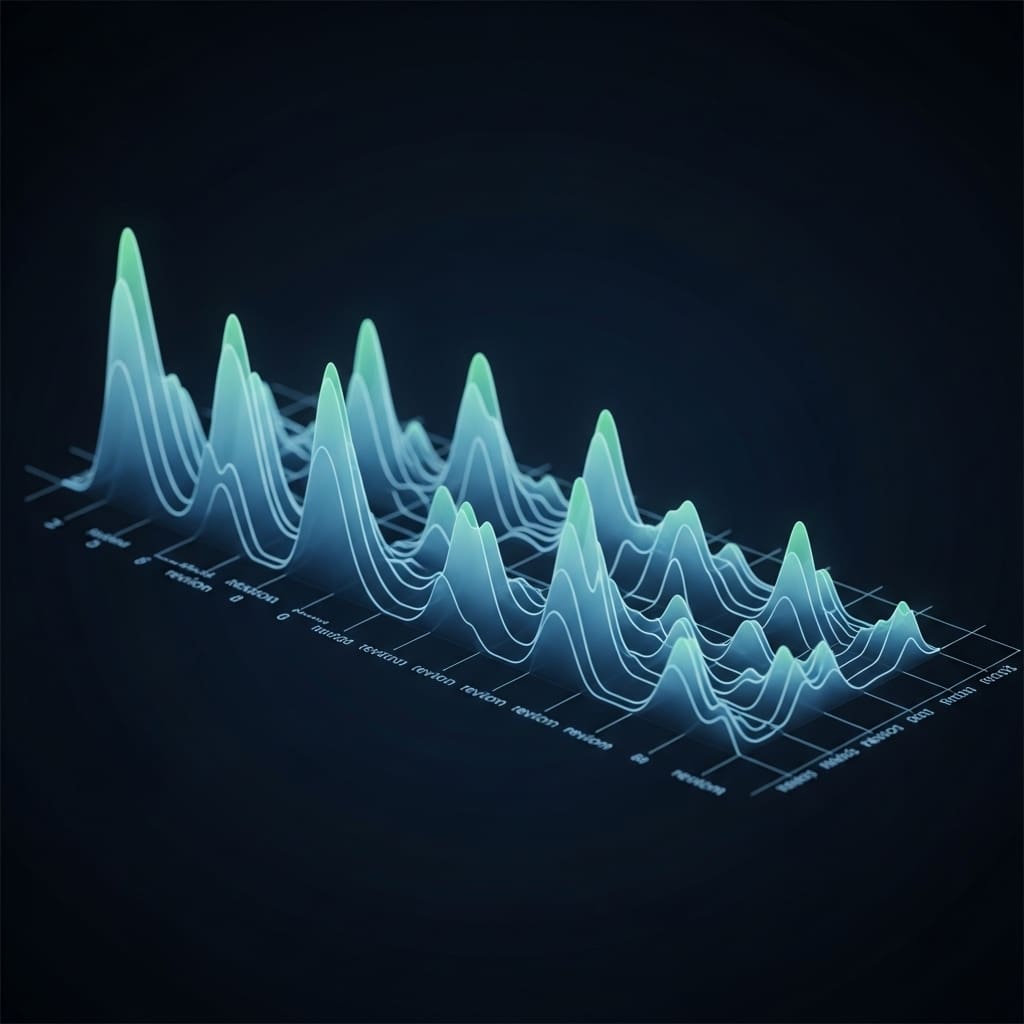

The team's experiments analysed five diverse DRAs and found that, despite successfully addressing over 90% of user-requested edits, these agents regress on 16-27% of previously covered content and show declining citation quality. Even the best-performing agents show limited improvement over multiple revision turns: they persistently disrupt content unrelated to the feedback and fail to preserve earlier edits. This regression is not easily fixed through prompt engineering or through dedicated sub-agents designed for report revision, which suggests deeper architectural challenges. The research establishes multi-turn report revision as a new and vital evaluation axis for DRAs, moving beyond the single-shot report generation that currently dominates the field.

Mr Dre's unified evaluation protocol streamlines assessment across comprehensiveness, factuality, and presentation, providing a lean and consistent framework for comparing DRA performance. Its human-verified feedback simulation pipeline generates realistic user input, allowing a more nuanced and ecologically valid evaluation of iterative revision capabilities. The results show that incorporating user feedback is not enough on its own; maintaining the integrity and quality of existing content during revision remains a significant hurdle for current DRAs. The findings point to a need for more fundamental advances in DRA training and design before agents can reliably revise reports over multiple turns and collaborate with researchers in an iterative, productive manner.

To establish a robust benchmark, the team constructed a dataset of reports and corresponding feedback, aiming for high fidelity to real-world research scenarios. Experiments subjected five diverse DRAs to iterative revision cycles using the simulated feedback. The study tracked performance across multiple revision turns, quantifying both the agents' ability to address user feedback and any unintended regressions in previously covered content and citation quality. The analysis revealed a critical limitation: agents, while capable of addressing the majority of feedback, exhibited a 16-27% regression rate on previously covered material, affecting both content accuracy and citation integrity.
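The two quantities tracked here, how much requested feedback an agent addresses and how much previously covered content it loses, can be illustrated with a minimal sketch. It assumes each report is scored against a checklist of items that are either satisfied or not; the `TurnResult` structure, the function names, and the toy item labels are hypothetical and are not taken from the paper, whose actual metrics may be defined differently.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TurnResult:
    """Checklist outcomes for one revision turn (hypothetical structure)."""
    satisfied_before: frozenset[str]  # items the report satisfied before revising
    satisfied_after: frozenset[str]   # items the report satisfies after revising
    requested: frozenset[str]         # items the user feedback asked the agent to address


def incorporation_rate(turn: TurnResult) -> float:
    """Share of requested items that the revised report satisfies."""
    if not turn.requested:
        return 0.0
    return len(turn.requested & turn.satisfied_after) / len(turn.requested)


def regression_rate(turn: TurnResult) -> float:
    """Share of previously satisfied items that the revised report no longer satisfies."""
    if not turn.satisfied_before:
        return 0.0
    lost = turn.satisfied_before - turn.satisfied_after
    return len(lost) / len(turn.satisfied_before)


# Toy example: the agent addresses both requested items ("f", "g")
# but drops one previously covered item ("e") while revising.
turn = TurnResult(
    satisfied_before=frozenset({"a", "b", "c", "d", "e"}),
    satisfied_after=frozenset({"a", "b", "c", "d", "f", "g"}),
    requested=frozenset({"f", "g"}),
)
print(f"incorporation: {incorporation_rate(turn):.0%}")  # incorporation: 100%
print(f"regression: {regression_rate(turn):.0%}")        # regression: 20%
```

In this toy case the agent looks perfect if only feedback incorporation is measured, while the regression rate exposes the content it silently lost, which is the gap the multi-turn evaluation is meant to surface.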

Even the best-performing agents left considerable room for improvement over multiple revision turns, continuing to disrupt content unrelated to the feedback and failing to consistently preserve earlier edits. The authors acknowledge two limitations: the causes of this unreliability are not yet fully understood, and the effect of model scaling on revision performance was not investigated. Future research should systematically analyse the underlying causes, explore how scaling up model size affects revision reliability, extend the feedback simulation pipeline to account for varying checklist quality, and incorporate length-aware evaluation metrics.

👉 More information

🗞 Beyond Single-shot Writing: Deep Research Agents are Unreliable at Multi-turn Report Revision

🧠 ArXiv: https://arxiv.org/abs/2601.13217