Recent advances in large language models increasingly rely on sparse Mixture-of-Experts (MoE) architectures to enhance capability without substantially increasing computational cost, yet current MoE models consistently fall short of their potential due to suboptimal routing of information. Zhongyang Li, Ziyue Li, and Tianyi Zhou from Johns Hopkins University address this limitation by demonstrating that aligning the routing process with the underlying task embedding significantly improves performance and generalisation. Their method, Routing Manifold Alignment (RoMA), introduces a novel regularisation technique that encourages consistent expert selection for similar tasks, effectively binding task understanding with solution generation. Experiments across several leading MoE models, including OLMoE, DeepSeekMoE, and Qwen3-MoE, demonstrate that RoMA delivers substantial improvements in accuracy and represents a significant step towards realising the full potential of MoE architectures.

Regularizing Routing in Mixture-of-Experts Models

This research details RoMA, a method for improving how Mixture-of-Experts (MoE) models route inputs to their experts. The evaluation spans language models ranging from roughly 1 to 34 billion parameters. These include sparse MoE models such as DeepSeekMoE and OLMoE, to which RoMA is applied, and dense architectures like Llama, Gemma, and Qwen, which serve as baselines. Performance was measured using accuracy, and occasionally F1 score, across eight benchmark datasets: MMLU, HellaSwag, PIQA, ARC-Challenge, ARC-Easy, WinoGrande, BoolQ, and GSM8K. Detailed ablation studies on DeepSeekMoE showed that regularizing more layers generally improves performance, with the best results achieved by regularizing the last five layers.
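The ablation concerns which layers' routing weights are regularized. RoMA itself is applied as lightweight post-training that finetunes only the routers and leaves every other parameter frozen. The minimal Python sketch below shows that bookkeeping. The parameter naming convention (`layers.<idx>.mlp.gate.`), the helper names, and the 16-layer toy model are hypothetical. Real checkpoints such as OLMoE or DeepSeekMoE use their own parameter names.

```python
import re

# Hypothetical naming convention: "layers.<idx>.mlp.gate.<...>" is the router
# (gating network) of MoE layer <idx>. Real checkpoints may name it differently.
ROUTER_PATTERN = re.compile(r"^layers\.(\d+)\.mlp\.gate\.")


def is_router_param(name: str) -> bool:
    """True if `name` belongs to a router under the assumed naming convention."""
    return ROUTER_PATTERN.match(name) is not None


def regularized_layer_ids(num_layers: int, last_n: int = 5) -> list[int]:
    """Indices of the last `last_n` MoE layers, whose routing weights enter the regularizer."""
    return list(range(max(num_layers - last_n, 0), num_layers))


# Toy 16-layer model with one router and one attention projection per layer.
names = [f"layers.{i}.mlp.gate.weight" for i in range(16)]
names += [f"layers.{i}.self_attn.q_proj.weight" for i in range(16)]

trainable = [n for n in names if is_router_param(n)]  # routers only
frozen = [n for n in names if not is_router_param(n)]  # everything else stays fixed
print(len(trainable), len(frozen), regularized_layer_ids(16))  # 16 16 [11, 12, 13, 14, 15]
```

Keeping "which parameters train" separate from "which layers are regularized" means the layer-count ablation only changes `last_n`, while the freezing rule stays the same.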

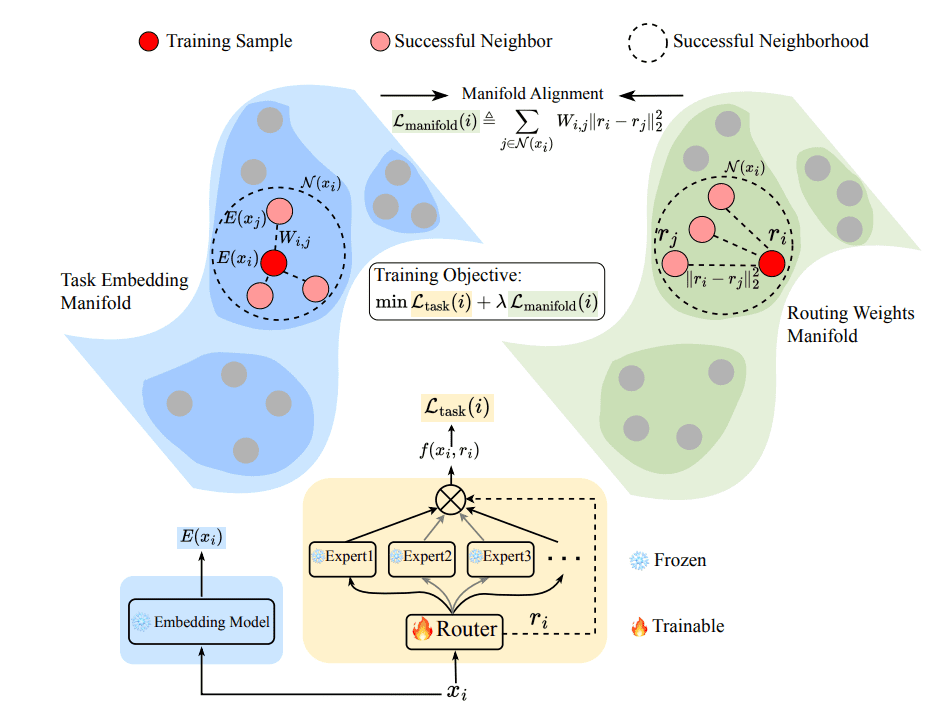

The ablations also showed that regularizing routing weights computed at the last token positions yields the best results. This suggests that the end of the sequence, which in a causal language model has attended to the whole input, carries the most informative signal for routing. For neighbor selection, an ε-Neighbor approach with a moderate value of ε = 0.5 performed best, while k = 3 gave the best performance for the k-Neighbor approach. Starting from the observation that suboptimal routing leaves a performance gap relative to optimal routing, the team focused on aligning the manifold of routing weights with that of task embeddings. This addresses a limitation of existing MoE models, in which semantically similar samples are often assigned to different experts with drastically different routing weights, hindering knowledge sharing. The authors implemented RoMA as post-training: lightweight finetuning of the routers while all other parameters remain frozen.
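The two neighbor-selection rules can be sketched as follows. Both operate only on task embeddings of samples the model answered correctly. This is an illustrative sketch, not the paper's code. The choice of cosine distance for the ε rule, the toy 2-D embeddings, and the function names are assumptions. Only the settings ε = 0.5 and k = 3 come from the ablation.

```python
import math


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine similarity of two vectors; 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def k_neighbors(query, successful, k=3):
    """Indices of the k successful samples whose task embeddings are most similar to `query`."""
    ranked = sorted(
        range(len(successful)),
        key=lambda j: cosine_similarity(query, successful[j]),
        reverse=True,
    )
    return ranked[:k]


def epsilon_neighbors(query, successful, eps=0.5):
    """Indices of successful samples within (assumed) cosine distance `eps` of `query`."""
    return [
        j
        for j, emb in enumerate(successful)
        if 1.0 - cosine_similarity(query, emb) <= eps
    ]


# Task embeddings of samples the model answered correctly (toy 2-D values).
successful = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [-1.0, 0.0]]
query = [1.0, 0.1]
print(k_neighbors(query, successful, k=3))            # [0, 2, 1]
print(epsilon_neighbors(query, successful, eps=0.5))  # [0, 2]
```

The k rule always returns a fixed number of neighbors. The ε rule can return none or many, depending on how densely the task embedding space is populated around the query.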

The regularization encourages the routing weights of each sample to closely resemble those of its “successful neighbors” (samples that yield correct predictions) within a task embedding space. The team applied this technique to OLMoE, DeepSeekMoE, and Qwen3-MoE models and evaluated them on the same eight benchmarks: ARC-E, ARC-C, HellaSwag, MMLU, GSM8K, BoolQ, WinoGrande, and PIQA. In these experiments RoMA consistently improves accuracy, with gains of 7-15% on OLMoE-7B-A1B. The RoMA-tuned models also outperform dense language models such as Gemma2-3B, Mistral-7B, Vicuna-13B, and Llama2-34B across all eight benchmarks.
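The following is a minimal sketch of the regularization term for a single sample. It assumes a squared Euclidean penalty between the sample's softmax routing weights and each successful neighbor's routing weights, optionally weighted by similarity. The penalty form, the optional weighting, and the function names are assumptions for illustration, and the paper's exact loss may differ. In an autodiff framework, the neighbors' routing weights would typically be treated as fixed targets, and the term would be added to the task loss with some coefficient λ (hypothetical here).

```python
import math


def softmax(logits: list[float]) -> list[float]:
    """Numerically stable softmax turning router logits into routing weights."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def manifold_regularizer(sample_logits, neighbor_weights, similarities=None):
    """Penalty pulling a sample's routing weights toward its successful neighbors'.

    sample_logits: router logits for the sample, one per expert.
    neighbor_weights: routing-weight vectors of successful neighbors (fixed targets).
    similarities: optional per-neighbor weights; uniform if omitted (an assumption).
    """
    if not neighbor_weights:
        return 0.0  # no successful neighbors, so nothing to align with
    if similarities is None:
        similarities = [1.0] * len(neighbor_weights)
    routing = softmax(sample_logits)
    total = 0.0
    for sim, target in zip(similarities, neighbor_weights):
        total += sim * sum((r - t) ** 2 for r, t in zip(routing, target))
    return total / sum(similarities)  # similarity-weighted average penalty


# Two experts: uniform routing vs. one neighbor that sends everything to expert 0.
print(manifold_regularizer([0.0, 0.0], [[1.0, 0.0]]))              # 0.5
print(manifold_regularizer([0.0, 0.0], [[1.0, 0.0], [0.5, 0.5]]))  # 0.25
```

Averaging over neighbors keeps the penalty's scale independent of how many neighbors a sample has. This matters when the ε rule returns a variable number of them.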

Routing Manifold Improves Expert Knowledge Sharing

Sparse Mixture-of-Experts (MoE) models have become a central architecture for scaling large language models, enabling increased capacity without a proportional increase in computational cost during inference. However, evaluations reveal a significant performance gap: existing MoE models achieve accuracies 10-20% lower than what is possible with optimal routing. This gap points to routing as a critical bottleneck in MoE LLMs and suggests that better routing is essential for improving generalization. RoMA addresses it by aligning the distribution of routing weights with the underlying task embedding manifold. It does so through a manifold regularization term that encourages the routing weights of each sample to be close to those of its “successful neighbors” (samples with correct MoE predictions) in the task embedding space. This alignment encourages the model to select similar experts for samples targeting similar tasks, fostering better generalization and more efficient use of the model’s capacity. Experiments demonstrate that RoMA consistently improves accuracy across multiple benchmarks, including ARC-C, HellaSwag, MMLU, GSM8K, BoolQ, WinoGrande, PIQA, and ARC-E.
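One simple way to quantify a routing gap of this kind is to compare two accuracies. The first is accuracy under the model's own routing. The second comes from an oracle that counts a sample as solved if any of several alternative routings answers it correctly. The sketch below is a hypothetical illustration and is not the paper's procedure for estimating optimal routing. How the candidate routings are produced, the toy correctness data, and the function name are all assumptions.

```python
def routing_gap(default_correct, candidate_correct):
    """Compare accuracy under default routing with an oracle over candidate routings.

    default_correct[i]: whether sample i is correct with the model's own routing.
    candidate_correct[i]: correctness of sample i under each hypothetical alternative routing.
    A sample counts as solved by the oracle if the default or any candidate is correct.
    """
    n = len(default_correct)
    base = sum(default_correct) / n
    oracle = sum(
        1 for d, cands in zip(default_correct, candidate_correct) if d or any(cands)
    ) / n
    return base, oracle, oracle - base


# Toy data: 4 samples, 2 alternative routings each.
default = [True, False, False, True]
candidates = [[False, False], [True, False], [False, False], [True, True]]
print(routing_gap(default, candidates))  # (0.5, 0.75, 0.25)
```

Because the oracle picks the best routing per sample after seeing the answers, its accuracy is an upper bound rather than something a deployed router could achieve directly.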

👉 More information

🗞 Routing Manifold Alignment Improves Generalization of Mixture-of-Experts LLMs

🧠 ArXiv: https://arxiv.org/abs/2511.07419