Image noise significantly degrades the quality of photographs and the performance of computer vision tasks, and deep learning advances are increasingly rendering traditional image processing techniques for removing it obsolete. Srinivas Miriyala, Sowmya Vajrala, and Hitesh Kumar, all from Samsung Research Institute Bangalore, alongside Kodavanti and Rajendiran et al., have addressed this challenge with a novel, mobile-friendly image de-noising network, a first-of-its-kind design obtained through entropy-regularized neural architecture search (NAS) optimised for hardware constraints. The work is significant because it delivers a substantial reduction in model size (12% fewer parameters) and on-device latency (up to a two-fold improvement) with only a minimal trade-off in image quality (a 0.7% PSNR drop), as demonstrated on a Samsung Galaxy S24 Ultra, paving the way for more powerful and efficient image processing directly on edge devices such as smartphones.

This method addresses the growing need for powerful image processing directly on devices, bypassing the limitations of traditional Image Signal Processing (ISP) techniques, which struggle with complex noise patterns. The resulting Entropy Regularized Neural Network, or ERN-Net, strikes a compelling balance between accuracy and efficiency, opening the door to enhanced photography and computer vision applications on mobile platforms.

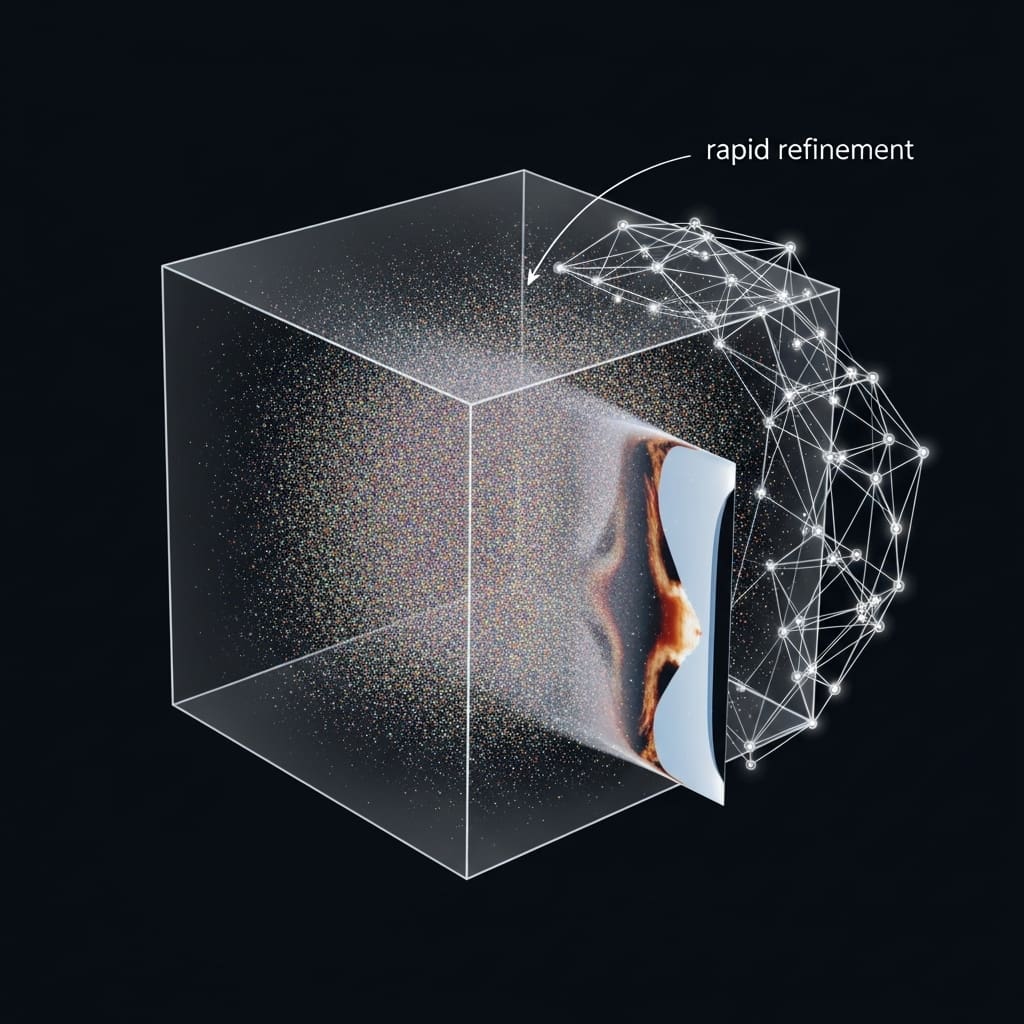

The research focused on optimising the network architecture specifically for on-device performance, a departure from conventional deep learning approaches that often prioritise accuracy at the expense of computational cost. The researchers constructed a hardware-aware search space by profiling the time-intensive operations on Qualcomm's SM8650 chipset to identify bottlenecks and guide the NAS process. This involved analysing how operations such as Layer Normalization and channel attention affect processing time, allowing the team to favour efficient alternatives during network design. By employing Entropy Regularization, the researchers mitigated problems common in differentiable NAS, such as indecisiveness and performance collapse, ensuring a robust and convergent training process.
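The article does not spell out the exact objective, so the following is only a minimal sketch of one common way to add entropy regularization to a DARTS-style differentiable search, assuming each searchable position mixes its candidate operations with softmax-normalised architecture weights. The helper names (`softmax`, `entropy`, `mixed_op`, `regularized_loss`) and the coefficient `beta` are hypothetical. Penalising the entropy of those weights pushes each position towards one clear choice, which is the kind of indecisiveness the authors target.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one position's architecture logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def entropy(probs):
    """Shannon entropy (natural log) of a categorical distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)


def mixed_op(x, ops, alphas):
    """DARTS-style relaxation: softmax-weighted sum of candidate op outputs."""
    weights = softmax(alphas)
    return sum(w * op(x) for w, op in zip(weights, ops))


def regularized_loss(task_loss, alphas_per_position, beta):
    """Task loss plus beta times the mean entropy of each position's op weights.

    Hypothetical formulation (not taken from the paper): minimising the
    entropy term drives every position towards a single candidate operation.
    """
    ents = [entropy(softmax(a)) for a in alphas_per_position]
    return task_loss + beta * sum(ents) / len(ents)


# Usage: three candidate ops, undecided vs. decisive logits.
undecided = [0.0, 0.0, 0.0]
decisive = [5.0, 0.0, 0.0]
print(entropy(softmax(undecided)))  # ln 3, about 1.0986
print(entropy(softmax(decisive)))   # much smaller
print(regularized_loss(1.0, [undecided, decisive], beta=0.1))
```

Uniform logits give the maximum entropy (ln 3 for three candidates), while peaked logits give a much lower value, so the penalty rewards decisive selections. How ERN-Net weights or schedules this term is not stated in the article.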

Experiments show that ERN-Net has 12% fewer parameters, achieving approximately a 2-fold improvement in on-device latency and a 1.5-fold reduction in memory footprint, with only a 0.7% decrease in Peak Signal-to-Noise Ratio (PSNR). Notably, the network achieves accuracy competitive with the state-of-the-art Swin-Transformer for Image Restoration while requiring ~18-fold fewer Giga Multiply-Accumulate operations (GMACs), a key metric of computational complexity. The team tested the network's generalisation ability on Gaussian de-noising tasks across four benchmark datasets at three intensity levels, as well as on a real-world de-noising benchmark. This work establishes a new paradigm for designing mobile-friendly deep learning models, moving beyond simply compressing existing architectures to actively searching for hardware-optimised structures.
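GMACs are obtained by summing multiply-accumulate (MAC) operations over every layer of a network. The sketch below uses a hypothetical helper, not the authors' profiling tool, to count MACs for a single 2-D convolution and shows why the depth-wise convolutions used in NAF blocks are cheap: with `groups` equal to the channel count, each output channel reads only one input channel. The layer sizes are illustrative and are not taken from ERN-Net. Some tools report FLOPs instead, which are roughly twice the MAC count.

```python
def conv2d_macs(h_out, w_out, c_in, c_out, kernel, groups=1):
    """Multiply-accumulates for one 2-D convolution (bias ignored).

    Every output element needs kernel * kernel * (c_in // groups) MACs.
    groups=1 is a standard convolution; groups == c_in == c_out is depth-wise.
    """
    if c_in % groups or c_out % groups:
        raise ValueError("c_in and c_out must be divisible by groups")
    return h_out * w_out * c_out * kernel * kernel * (c_in // groups)


def to_gmacs(macs):
    """Convert a raw MAC count to GMACs (1 GMAC = 1e9 MACs)."""
    return macs / 1e9


# Illustrative layer (hypothetical sizes): 3x3 kernel, 64 channels, 256x256 output.
standard = conv2d_macs(256, 256, 64, 64, 3)
depthwise = conv2d_macs(256, 256, 64, 64, 3, groups=64)
print(to_gmacs(standard), to_gmacs(depthwise), standard // depthwise)
```

For this layer the depth-wise version needs 64 times fewer MACs than the standard convolution, i.e. a factor equal to the channel count.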

The successful deployment and profiling on a flagship smartphone demonstrate the practical viability of the approach, opening doors for real-time image enhancement and advanced computer vision applications directly on consumer devices.

The network delivers competitive accuracy alongside a ~18-fold reduction in Giga Multiply-Accumulate operations (GMACs) compared with the state-of-the-art Swin-Transformer for Image Restoration. It was evaluated on Gaussian de-noising at three noise intensity levels across four benchmark datasets and on one real-world de-noising benchmark, demonstrating its ability to generalise. Profiling the hardware to identify time-intensive operations, the team found that Layer Normalization consumed the most processing time, followed by the computationally expensive channel attention mechanism. Based on these findings, the researchers designed a hardware-aware search space around the 36-block NAFNet model, a U-Net-style architecture with 4 encoders, 1 middle block, and 4 decoders.
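The block counts per stage reported in the article, (2-2-4-8)-12-(2-2-2-2), can be checked against the 36-block NAFNet baseline with a few lines of arithmetic. The names below are hypothetical; the sketch only tallies blocks and, since the article excludes the middle block from the search space, how many blocks remain searchable.

```python
# Blocks per U-Net stage, as reported in the article: (2-2-4-8)-12-(2-2-2-2).
ENCODER_BLOCKS = [2, 2, 4, 8]   # four encoder stages
MIDDLE_BLOCKS = 12              # single middle stage
DECODER_BLOCKS = [2, 2, 2, 2]   # four decoder stages


def total_blocks(encoders, middle, decoders):
    """Total number of NAF blocks in the U-Net."""
    return sum(encoders) + middle + sum(decoders)


def searchable_blocks(encoders, decoders):
    """Blocks left in the search space once the middle stage is excluded."""
    return sum(encoders) + sum(decoders)


def stage_layout(encoders, middle, decoders):
    """Ordered (stage name, block count) pairs from input to output."""
    layout = [(f"encoder_{i + 1}", n) for i, n in enumerate(encoders)]
    layout.append(("middle", middle))
    layout += [(f"decoder_{i + 1}", n) for i, n in enumerate(decoders)]
    return layout


print(total_blocks(ENCODER_BLOCKS, MIDDLE_BLOCKS, DECODER_BLOCKS))  # 36
print(searchable_blocks(ENCODER_BLOCKS, DECODER_BLOCKS))            # 24
```

The totals confirm that the configuration accounts for all 36 blocks, and that excluding the 12 middle blocks leaves 24 blocks for the search to optimise.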

Each NAF block consists of Layer Normalization, depth-wise convolutions, channel attention, and a gating operation that approximates the GELU activation. The Entropy Regularization prevented indecisiveness during training and improved convergence by scaling, while a hardware-conscious loss function further improved the result, with an emphasis on accuracy. The authors report that the approach is generic and can be deployed on any standard mobile device, opening possibilities for real-time image enhancement on smartphones and other edge computing platforms. The network was evaluated with a (2-2-4-8)-12-(2-2-2-2) configuration of NAF blocks (encoder stages, middle block, decoder stages), with the middle block excluded from the search space because of its negligible latency. The team recorded the weight distributions over the candidate alternatives in the search space, illustrating how the Entropy Regularized Differentiable NAS selects efficient network configurations. Beyond advancing image de-noising, the work establishes a robust framework for deploying complex AI models on resource-constrained mobile devices.
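The article mentions a hardware-conscious loss without giving its form. One widely used formulation, shown here as an assumption rather than the authors' loss, adds a differentiable latency estimate to the task loss: each searchable block contributes the softmax-weighted latency of its candidate operations, with per-operation latencies taken from an on-device profiling table. The function names and the coefficient `lam` are hypothetical, and the latency numbers in the usage are placeholders, not measurements from the SM8650. A small `lam` keeps the emphasis on accuracy.

```python
import math


def softmax(logits):
    """Numerically stable softmax over one block's architecture logits."""
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def expected_latency(alphas_per_block, latency_table):
    """Differentiable latency proxy (assumed formulation).

    For each searchable block, weight the profiled latency of every
    candidate operation by its softmax probability, then sum over blocks.
    """
    if len(alphas_per_block) != len(latency_table):
        raise ValueError("one latency row is needed per searchable block")
    total = 0.0
    for alphas, latencies in zip(alphas_per_block, latency_table):
        if len(alphas) != len(latencies):
            raise ValueError("one latency value is needed per candidate op")
        weights = softmax(alphas)
        total += sum(w * t for w, t in zip(weights, latencies))
    return total


def hardware_conscious_loss(task_loss, alphas_per_block, latency_table, lam):
    """Task loss plus lam * expected latency; a small lam favours accuracy."""
    return task_loss + lam * expected_latency(alphas_per_block, latency_table)


# Usage with placeholder latencies (ms) for two candidates in each of two blocks.
alphas = [[0.0, 0.0], [2.0, -2.0]]
latencies = [[2.0, 4.0], [1.0, 3.0]]
print(hardware_conscious_loss(0.05, alphas, latencies, lam=0.01))
```

Minimising this loss with respect to the architecture logits shifts probability towards faster operations, but only to the extent that the latency saving outweighs any increase in the task loss.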

Mobile U-Net via Entropy-Regularized NAS optimisation achieves near state-of-the-art accuracy

Scientists have developed a new mobile-friendly network for image de-noising using Entropy-Regularized differentiable Neural Architecture Search (NAS) within a U-Net framework, a first-of-its-kind approach to optimising image processing for deployment on devices such as smartphones. The resulting model has 12% fewer parameters and achieves an approximately two-fold improvement in on-device latency and a 1.5-fold improvement in memory footprint, at the cost of only a 0.7% decrease in Peak Signal-to-Noise Ratio (PSNR). Compared with the state-of-the-art Swin-Transformer for Image Restoration, the proposed network achieves competitive accuracy with an ~18-fold reduction in Giga Multiply-Accumulate operations (GMACs), a measure of computational complexity.
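PSNR, the quality metric behind the 0.7% figure, is computed from the mean squared error (MSE) between a clean reference and the de-noised output as PSNR = 10·log10(MAX²/MSE). Below is a minimal sketch, assuming pixel values scaled to [0, 1] so that MAX = 1; the function names and the 40 dB baseline in the worked arithmetic are hypothetical, chosen only to show that a 0.7% relative drop amounts to a fraction of a decibel.

```python
import math


def psnr(reference, estimate, max_value=1.0):
    """Peak Signal-to-Noise Ratio in dB between two equal-length pixel sequences."""
    if not reference or len(reference) != len(estimate):
        raise ValueError("inputs must be non-empty and of equal length")
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical signals
    return 10.0 * math.log10(max_value ** 2 / mse)


def relative_drop(baseline_db, new_db):
    """Fractional PSNR decrease relative to the baseline value."""
    return (baseline_db - new_db) / baseline_db


# Worked arithmetic with a hypothetical 40 dB baseline: 0.7% of 40 dB is 0.28 dB.
print(psnr([0.0, 0.0], [0.1, 0.1]))   # MSE of 0.01 gives about 20 dB
print(relative_drop(40.0, 39.72))     # about 0.007, i.e. 0.7%
```

Because PSNR is logarithmic in MSE, a 0.28 dB drop corresponds to roughly a 7% increase in MSE, which is usually hard to see by eye.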

Extensive testing on Gaussian de-noising at varying intensities across four benchmarks, and on one real-world benchmark, confirms that the network generalises effectively. The research addresses both the development and the deployment challenges of complex AI models for image de-noising without sacrificing one for the other. The work optimises pre-trained foundation models for image de-noising specifically for smartphone deployment, combining network surgery, a hardware-aware search space, and a novel entropy-regularised differentiable search strategy. The authors acknowledge that the model shows a slight (0.7%) reduction in PSNR compared with larger, less efficient models, reflecting a trade-off between accuracy and computational efficiency. Future research may extend this generic optimisation and deployment strategy to other computationally intensive models in Generative Artificial Intelligence, potentially making them practical on embedded devices.
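Synthetic Gaussian de-noising benchmarks are usually built by adding zero-mean Gaussian noise of a chosen standard deviation σ to clean images. The article does not list its three intensity levels; σ = 15, 25 and 50 (on a 0 to 255 scale) are a common convention and are used here purely as an assumption. `add_gaussian_noise` is a hypothetical helper that works on a flat list of pixel values, and whether to clip the result is a protocol choice that differs between benchmarks.

```python
import random


def add_gaussian_noise(pixels, sigma, seed=None, clip=True, max_value=255.0):
    """Return a copy of `pixels` with additive zero-mean Gaussian noise.

    sigma is the noise standard deviation on the 0..max_value scale.
    Clipping to the valid range is optional; benchmark protocols differ.
    """
    rng = random.Random(seed)  # seeded generator for reproducible test sets
    noisy = [p + rng.gauss(0.0, sigma) for p in pixels]
    if clip:
        noisy = [min(max(v, 0.0), max_value) for v in noisy]
    return noisy


# Three illustrative intensity levels (assumed; the article does not list them).
clean = [0.0, 64.0, 128.0, 255.0]
for sigma in (15, 25, 50):
    print(sigma, add_gaussian_noise(clean, sigma, seed=0))
```

Fixing the seed makes the noisy test set identical across runs, so PSNR differences between models reflect the models rather than the noise draw.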

👉 More information

🗞 Mobile-friendly Image de-noising: Hardware Conscious Optimization for Edge Application

🧠 arXiv: https://arxiv.org/abs/2601.11684