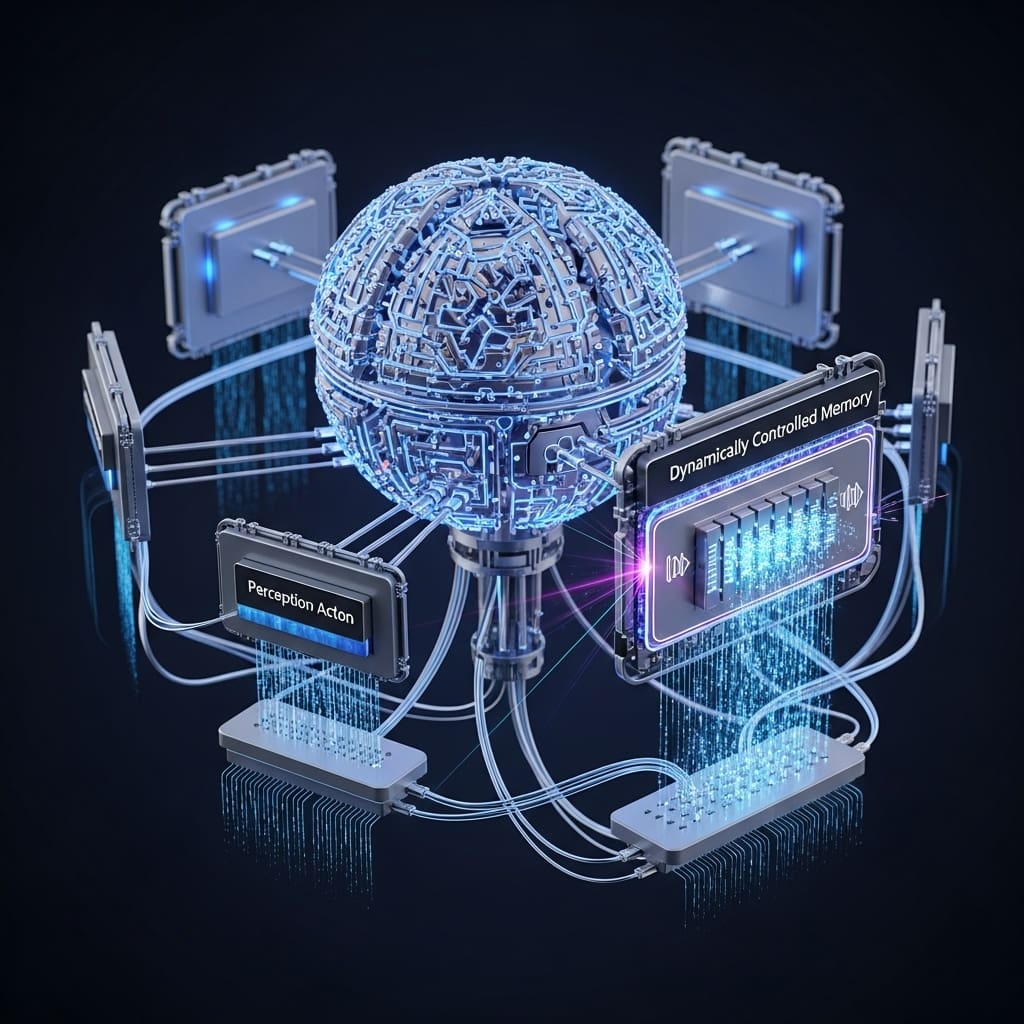

Scientists are tackling the challenge of equipping embodied agents with reliable long-term memory, which is crucial for navigating complex environments and completing intricate tasks. Vishnu Sashank Dorbala and Dinesh Manocha of the University of Maryland, College Park, together with their colleagues, present MemCtrl, a framework that uses Multimodal Large Language Models (MLLMs) as active memory controllers. Current retrieval-augmented generation (RAG) systems often function as static repositories, which is inefficient for agents with limited onboard resources. MemCtrl instead lets the MLLM prune memory dynamically, deciding which observations and reflections to keep, update, or discard. On the EmbodiedBench benchmark this improves performance by about 16% on average and by more than 20% on specific instruction types. The work is a substantial step toward adaptable embodied agents that can operate effectively over long periods in real-world scenarios.

MemCtrl targets the limitations of current memory compression and retrieval systems such as Retrieval-Augmented Generation (RAG), which often treat memory as static offline storage, a disadvantage for agents operating under strict memory and computational constraints. The research team augments MLLMs with a trainable memory head, denoted μ, that acts as a gate deciding whether to retain, update, or discard each observation and reflection during exploration. Training μ either with offline expert guidance or with online Reinforcement Learning (RL) significantly improves embodied task completion.
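The gating idea can be illustrated with a small sketch. It assumes a three-way action space (retain, update, discard) applied to a list-based memory and uses a hand-written heuristic, `toy_gate`, in place of the learned head μ. The names `MemoryAction`, `GatedMemory`, and `toy_gate`, and the choice that "update" overwrites the most recent entry, are hypothetical illustrations rather than details from the paper.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Callable, List


class MemoryAction(Enum):
    """Hypothetical action space for the memory gate."""
    RETAIN = "retain"    # append the observation to memory
    UPDATE = "update"    # overwrite the most recent entry (an assumed meaning of "update")
    DISCARD = "discard"  # leave memory unchanged


@dataclass
class GatedMemory:
    """Toy memory whose writes are decided by a gate function standing in for mu."""
    gate: Callable[[List[str], str], MemoryAction]
    entries: List[str] = field(default_factory=list)

    def observe(self, observation: str) -> MemoryAction:
        action = self.gate(self.entries, observation)
        if action is MemoryAction.RETAIN or (action is MemoryAction.UPDATE and not self.entries):
            # Retain, or "update" when there is nothing to update yet: append.
            self.entries.append(observation)
        elif action is MemoryAction.UPDATE:
            self.entries[-1] = observation
        # DISCARD falls through: memory is unchanged.
        return action


def toy_gate(memory: List[str], observation: str) -> MemoryAction:
    """Hand-written heuristic in place of a learned gate (hypothetical)."""
    if observation in memory:
        return MemoryAction.DISCARD  # exact duplicate adds nothing new
    if memory and observation.split()[0] == memory[-1].split()[0]:
        return MemoryAction.UPDATE   # same object as the last entry: refresh its state
    return MemoryAction.RETAIN


mem = GatedMemory(gate=toy_gate)
for obs in ["plate on table", "plate in sink", "plate in sink", "cup on counter"]:
    mem.observe(obs)
print(mem.entries)
```

In this toy run the second "plate" observation refreshes the first, the exact repeat is dropped, and the unrelated "cup" observation is appended, so four observations leave only two memory entries.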

Across multiple subsets of the EmbodiedBench benchmark, μ-augmented MLLMs improved by around 16% on average, with gains above 20% on specific instruction types. The key ingredient is a transferable memory head that learns to filter observations on the go, whereas prior methods require offline parsing of large datasets. Because MemCtrl is modular, it attaches to any off-the-shelf MLLM without finetuning the core model, enabling scalable transfer across diverse embodied setups and vision-language backbones. The core contribution is active memory filtering: the trainable memory head μ selectively stores vital memories, improving both parameter and memory efficiency for self-improving embodied agents.

Qualitative analysis of the memory fragments collected by μ shows the strongest gains on long and complex instruction types, suggesting that the head learns to prioritize relevant information. The approach draws on human memory: people actively filter experiences, retain only crucial fragments, and reconstruct missing details through reasoning, which allows efficient cognition even with limited storage. The researchers attached μ to two low-performing MLLMs on the Habitat and ALFRED splits of EmbodiedBench and found that it significantly boosts task performance while reducing the number of stored observations. This efficiency is crucial for real-world deployment, particularly in robotics, where computation happens on-device and resources are limited, and it also eases the pressure of a limited context window.

Unlike prior methods that treat memory as static offline storage or rely on extensive retrieval-augmented generation (RAG) schemes, MemCtrl filters memory actively. The researchers built μ as a lightweight, trainable head attached to a frozen MLLM backbone, so observations can be filtered in real time, a crucial property for resource-constrained embodied agents. This design avoids parsing large amounts of data offline, which keeps it scalable. Because only the head is trained, μ is model-agnostic: it attaches to any off-the-shelf MLLM without finetuning the core model, which makes it transferable across embodied setups and vision-language backbones.
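A minimal sketch of the "frozen backbone plus lightweight trainable head" arrangement is shown below. It assumes the head is a logistic gate over fixed embeddings; `frozen_backbone` is a hypothetical stand-in (a parameter-free bag-of-characters featurizer) for a frozen MLLM encoder, and `MemoryHead` and `filter_stream` are illustrative names, not the paper's architecture. Because `filter_stream` accepts any `embed` callable, the same head logic can sit on top of a different encoder, loosely mirroring the model-agnostic claim.

```python
import math
from typing import Callable, List, Sequence

Embedding = List[float]


def frozen_backbone(observation: str, dim: int = 8) -> Embedding:
    """Hypothetical stand-in for a frozen MLLM encoder: fixed, parameter-free features.

    Counts characters into `dim` buckets and L2-normalizes; nothing here is trained.
    """
    vec = [0.0] * dim
    for ch in observation:
        vec[ord(ch) % dim] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


class MemoryHead:
    """Illustrative logistic gate over frozen embeddings (not the paper's architecture)."""

    def __init__(self, dim: int, threshold: float = 0.5) -> None:
        self.weights = [0.0] * dim  # trainable
        self.bias = 0.0             # trainable
        self.threshold = threshold

    def keep_probability(self, embedding: Sequence[float]) -> float:
        logit = self.bias + sum(w * x for w, x in zip(self.weights, embedding))
        return 1.0 / (1.0 + math.exp(-logit))

    def should_keep(self, embedding: Sequence[float]) -> bool:
        return self.keep_probability(embedding) >= self.threshold

    def num_parameters(self) -> int:
        return len(self.weights) + 1


def filter_stream(
    observations: Sequence[str], embed: Callable[[str], Embedding], head: MemoryHead
) -> List[str]:
    """Score each observation once, as it arrives, and keep or drop it immediately."""
    memory: List[str] = []
    for obs in observations:
        if head.should_keep(embed(obs)):
            memory.append(obs)
    return memory


head = MemoryHead(dim=8)
kept = filter_stream(["plate in sink", "cup on counter"], frozen_backbone, head)
print(head.num_parameters(), kept)
```

An untrained head with all-zero weights outputs a keep probability of 0.5 and, at the default threshold, keeps every observation. Training would adjust only the head's 9 parameters while the encoder stays fixed, which is the parameter-efficiency argument in miniature.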

To quantify performance, the researchers evaluated μ-augmented MLLMs on EmbodiedBench, a standard evaluation suite for embodied AI, comparing task completion rates of MLLMs with and without the MemCtrl augmentation. By keeping vital memories and filtering redundant ones on the go, much as human memory does, the MLLM scales better under tight computational and memory budgets.
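Comparing task completion with and without MemCtrl comes down to comparing success rates. The summary does not state whether the headline gains are absolute (percentage points) or relative, and the two readings can differ a lot. The sketch below uses made-up episode outcomes, not results from the paper, to show both calculations; `success_rate` and `improvement` are hypothetical helper names.

```python
from typing import Sequence, Tuple


def success_rate(outcomes: Sequence[bool]) -> float:
    """Fraction of episodes that completed the task."""
    if not outcomes:
        raise ValueError("need at least one episode")
    return sum(outcomes) / len(outcomes)


def improvement(baseline: float, augmented: float) -> Tuple[float, float]:
    """Return (absolute gain in percentage points, relative gain in percent)."""
    if baseline <= 0:
        raise ValueError("relative gain is undefined for a zero baseline")
    absolute_pp = (augmented - baseline) * 100.0
    relative_pct = (augmented - baseline) / baseline * 100.0
    return absolute_pp, relative_pct


# Made-up outcomes for illustration only; NOT numbers from the paper.
baseline_runs = [True] * 30 + [False] * 70   # 30/100 = 30% success
augmented_runs = [True] * 45 + [False] * 55  # 45/100 = 45% success

abs_pp, rel_pct = improvement(success_rate(baseline_runs), success_rate(augmented_runs))
print(f"absolute gain: {abs_pp:.1f} pp, relative gain: {rel_pct:.1f}%")
```

With these invented numbers, the same comparison is a 15-point absolute gain but a 50% relative gain, so it is worth checking which convention a reported figure uses.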

MemCtrl's modularity makes the memory head easy to transfer across embodied agents and vision-language backbones, which the authors present as a route to wider use in robotics and other resource-limited environments. Because limited context windows constrain personalised decision-making, a gate that decides what to retain, update, or discard during exploration directly addresses that bottleneck.

The team trained μ in two ways: with an offline expert and with online Reinforcement Learning (RL). Both training methods significantly improved the MLLM's ability to handle embodied tasks, supporting the claim that the memory head transfers well. μ actively decides which observations are vital to retain and which are redundant, increasing both parameter and memory efficiency for self-improving agents. This active filtering contrasts with prior retrieval-based systems that rely on offline processing of large observational datasets.
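One plausible way to train a gate from an offline expert is ordinary supervised learning on expert keep/discard labels. The sketch assumes binary labels, a logistic gate over two hand-made features (hypothetical "novelty" and "task relevance" scores), and stochastic gradient descent on binary cross-entropy; the paper's actual features, labels, and loss may differ, and `train_from_expert` is an illustrative name.

```python
import math
import random
from typing import List, Sequence, Tuple

# (feature vector, expert label): label 1 = keep, 0 = discard.
Example = Tuple[List[float], int]


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def keep_probability(w: Sequence[float], b: float, x: Sequence[float]) -> float:
    return sigmoid(b + sum(wj * xj for wj, xj in zip(w, x)))


def train_from_expert(
    data: Sequence[Example], dim: int, lr: float = 0.5, epochs: int = 200, seed: int = 0
) -> Tuple[List[float], float]:
    """Fit a logistic keep/discard gate to expert labels with SGD on binary cross-entropy."""
    rng = random.Random(seed)
    w, b = [0.0] * dim, 0.0
    order = list(range(len(data)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            x, y = data[i]
            # Gradient of binary cross-entropy w.r.t. the logit is (p - y).
            err = keep_probability(w, b, x) - y
            w = [wj - lr * err * xj for wj, xj in zip(w, x)]
            b -= lr * err
    return w, b


# Hypothetical expert labels over [novelty, task_relevance] features:
# this toy expert keeps an observation exactly when it is task-relevant.
expert_data: List[Example] = [
    ([1.0, 1.0], 1),
    ([0.0, 1.0], 1),
    ([1.0, 0.0], 0),
    ([0.0, 0.0], 0),
]
w, b = train_from_expert(expert_data, dim=2)
predictions = [int(keep_probability(w, b, x) >= 0.5) for x, _ in expert_data]
print(predictions)
```

The learned weight on the relevance feature ends up much larger than the one on novelty, since the toy expert's decisions depend only on relevance. The dependence on such labels is also why expert supervision is listed among the method's limitations.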

Because μ requires no finetuning or modification of the backbone, it scales across diverse embodied setups and vision-language backbones. On the worst-performing agents in the Habitat and ALFRED splits of EmbodiedBench, attaching μ raised performance by approximately 16% on average while storing fewer observations, an efficiency that lets compact embodied agents operate under strict computational and memory constraints.

The authors argue that μ uses commonsense reasoning to manage memory, mirroring how humans stay cognitively efficient despite limited storage. Their results indicate that the framework lets embodied agents handle extended tasks without large-scale training, pointing toward scalable self-improvement under tight resource limits. In summary, the trainable memory head μ gates which observations and reflections an embodied agent retains, updates, or discards as it explores. Trained either from an offline expert or with online reinforcement learning, it improved task completion for lower-performing MLLMs across EmbodiedBench subsets by approximately 16% on average, and by more than 20% on certain instruction types.

Qualitative analysis showed that μ prioritises relevant memory fragments and performs especially well on complex, long-horizon instructions; in one example, an agent repeatedly moves a plate to the sink and retains that information. The authors acknowledge limitations, including the reliance on expert demonstrations for supervised learning and the difficulty posed by sparse rewards in the reinforcement learning variants. Future directions include designing reward functions that better capture how significant an observation is, adding audio to enrich the observations, and testing real-world transferability through practical experiments.
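The sparse-reward difficulty can be seen in a toy REINFORCE setup. All of the following are hypothetical assumptions, not the paper's RL formulation: each observation carries one feature (1.0 for task-relevant, 0.0 for clutter), the policy keeps an observation with probability sigmoid(w·x + b), and the episode reward is 1 only if exactly the relevant observations end up in a memory of at most `BUDGET` entries. With the initial policy (keep probability 0.5 everywhere) only 1 in 32 episodes succeeds, so most episodes carry almost no learning signal.

```python
import math
import random
from typing import List, Tuple


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


# Hypothetical toy episode: 1.0 = task-relevant observation, 0.0 = clutter.
EPISODE = [1.0, 0.0, 0.0, 1.0, 0.0]
BUDGET = 2  # hypothetical maximum number of stored observations


def run_episode(w: float, b: float, rng: random.Random) -> Tuple[List[Tuple[float, int]], float]:
    """Sample keep (1) / discard (0) actions; the reward arrives only at the end."""
    trajectory = []
    for x in EPISODE:
        action = 1 if rng.random() < sigmoid(w * x + b) else 0
        trajectory.append((x, action))
    kept = [x for x, a in trajectory if a == 1]
    success = kept.count(1.0) == EPISODE.count(1.0) and len(kept) <= BUDGET
    return trajectory, 1.0 if success else 0.0


def reinforce(episodes: int = 2000, lr: float = 0.1, seed: int = 0) -> Tuple[float, float]:
    """REINFORCE with a running-mean baseline for a per-observation Bernoulli keep policy."""
    rng = random.Random(seed)
    w, b, baseline = 0.0, 0.0, 0.0
    for _ in range(episodes):
        trajectory, reward = run_episode(w, b, rng)
        advantage = reward - baseline
        baseline += 0.05 * (reward - baseline)  # running mean of episode rewards
        grad_w = grad_b = 0.0
        for x, action in trajectory:
            # d/dlogit of log Bernoulli(action; sigmoid(logit)) equals (action - p).
            g = action - sigmoid(w * x + b)
            grad_w += g * x
            grad_b += g
        # Apply the update after the episode so gradients use the sampling parameters.
        w += lr * advantage * grad_w
        b += lr * advantage * grad_b
    return w, b


w, b = reinforce()
print(f"keep prob: relevant={sigmoid(w + b):.2f}, clutter={sigmoid(b):.2f}")
```

Early on, learning is driven almost entirely by the rare successful episodes; a denser reward that scores individual observations is one way to speed this up, which matches the authors' stated interest in better reward design.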

👉 More information

🗞 MemCtrl: Using MLLMs as Active Memory Controllers on Embodied Agents

🧠 ArXiv: https://arxiv.org/abs/2601.20831