Binarized Neural Networks (BNNs) offer a route to highly efficient artificial intelligence, but their growing deployment raises concerns about security and data protection. Gokulnath Rajendran from NTU, Singapore, Suman Deb from Imperial College London, Anupam Chattopadhyay from NTU, Singapore, and their colleagues present a method for safeguarding these networks within emerging in-memory computing systems. The team targets theft of sensitive model parameters, a threat conventionally countered by decrypting the parameters at runtime, which erodes the speed advantage of in-memory computing. Instead, they transform the model parameters with a secret key generated by a physical unclonable function (PUF), so that inference runs directly on the transformed parameters; the authors frame this as a form of fully homomorphic encryption with minimal performance impact. Without the key, accuracy drops sharply, which secures the network against unauthorised use while preserving the computational efficiency central to in-memory computing architectures.

BNNs restrict weights and activations to binary values, which makes them computationally efficient and drives their popularity across many applications. Recent studies highlight the potential of mapping BNN model parameters onto emerging non-volatile memory technologies arranged as crossbar arrays, which improves inference performance compared with traditional CMOS implementations. This study presents a method to map BNN model parameters onto such crossbars while guarding against theft attacks, without compromising computational efficiency. The researchers first trained a BNN and then mapped the resulting weight matrix onto a resistive RAM (RRAM) crossbar for inference. Each weight is represented by two RRAM devices stacked in the same column: a weight of +1 sets the first cell to a low-resistance state (LRS) and the second to a high-resistance state (HRS), and the configuration is reversed for a weight of -1.
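To make the two-device mapping concrete, here is a minimal Python/NumPy sketch. It assumes deterministic sign binarization of the trained real-valued weights (a common BNN convention; the source does not describe the training details), and the helper names `binarize` and `program_states` are hypothetical. It only produces the LRS/HRS programming pattern for the crossbar; no device physics is modeled.

```python
import numpy as np

LRS, HRS = "LRS", "HRS"  # low- and high-resistance states


def binarize(W_real: np.ndarray) -> np.ndarray:
    """Sign binarization of trained real-valued weights (zeros map to +1)."""
    return np.where(W_real >= 0, 1, -1)


def program_states(W_bin: np.ndarray) -> np.ndarray:
    """Return the (2n, m) grid of device states for an (n, m) binary weight matrix.

    Weight (i, j) occupies rows 2i and 2i+1 of column j:
    +1 -> (LRS, HRS) and -1 -> (HRS, LRS).
    """
    n, m = W_bin.shape
    states = np.empty((2 * n, m), dtype="<U3")
    states[0::2] = np.where(W_bin == 1, LRS, HRS)  # upper cell of each pair
    states[1::2] = np.where(W_bin == 1, HRS, LRS)  # lower cell of each pair
    return states


W_real = np.array([[0.3, -0.7],
                   [-0.1, 0.0]])
print(program_states(binarize(W_real)))
```

Because each weight always has exactly one LRS and one HRS cell, the two devices form a complementary pair; which cell is in the LRS encodes the sign.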

Input signals are encoded the same way, with +1 represented as (1, 0) and -1 as (0, 1), so that the crossbar performs the matrix-vector multiplication in place. The BNN activation function is the sign function applied to the output of this multiplication. The researchers implement it with a comparator integrated into the crossbar, which is more energy-efficient than the conventional combination of analog-to-digital converters and digital activation logic. Batch normalization is folded into the activation: its parameters are converted into a threshold that serves as the comparator's reference input.
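The sketch below is a simplified ideal-device model, not the authors' circuit, showing why a single comparator can replace the ADC and the sign logic. The conductance and read-voltage values (`G_LRS`, `G_HRS`, `V_READ`) are illustrative assumptions, the batch-norm scale is assumed positive, and all function names are hypothetical. With the (1, 0)/(0, 1) input encoding, each weight pair conducts strongly only when input and weight agree, so a column current grows monotonically with the number of matches `p`, and the pre-activation is `a = 2p - n`. Folding batch norm into a threshold `tau` therefore reduces to one reference current per column.

```python
import numpy as np

# Illustrative device parameters (assumptions, not values from the paper).
G_LRS = 1e-4  # low-resistance-state conductance, siemens
G_HRS = 1e-6  # high-resistance-state conductance, siemens
V_READ = 0.2  # read voltage on a driven row, volts


def map_weights(W):
    """(n, m) matrix of +1/-1 weights -> (2n, m) conductances: +1 -> (LRS, HRS), -1 -> (HRS, LRS)."""
    G = np.empty((2 * W.shape[0], W.shape[1]))
    G[0::2] = np.where(W == 1, G_LRS, G_HRS)
    G[1::2] = np.where(W == 1, G_HRS, G_LRS)
    return G


def encode_inputs(x):
    """Encode +1 as (1, 0) and -1 as (0, 1), scaled to row voltages."""
    v = np.zeros(2 * len(x))
    v[0::2] = (x == 1)
    v[1::2] = (x == -1)
    return V_READ * v


def folded_threshold(gamma, beta, mu, var, eps=1e-5):
    """Fold batch norm into a threshold tau on the pre-activation a = x . w.

    For gamma > 0: sign(gamma * (a - mu) / sigma + beta) == sign(a - tau),
    with sigma = sqrt(var + eps) and tau = mu - beta * sigma / gamma.
    """
    assert np.all(gamma > 0), "this sketch assumes a positive batch-norm scale"
    return mu - beta * np.sqrt(var + eps) / gamma


def crossbar_layer(x, G, tau):
    """Analog matrix-vector multiply followed by a per-column comparator (no ADC)."""
    n = len(x)
    currents = encode_inputs(x) @ G  # Ohm's law per cell, Kirchhoff's current law per column
    # A driven cell conducts G_LRS when x_i == w_ij (a match) and G_HRS otherwise,
    # so I = V * (p * (G_LRS - G_HRS) + n * G_HRS) for p matches, and a = 2p - n.
    # Hence a >= tau  <=>  p >= (tau + n) / 2  <=>  I >= i_ref.
    p_th = (tau + n) / 2
    i_ref = V_READ * (p_th * (G_LRS - G_HRS) + n * G_HRS)
    return np.where(currents >= i_ref, 1, -1)


rng = np.random.default_rng(1)
n, m = 64, 10
W = rng.choice([-1, 1], size=(n, m))
x = rng.choice([-1, 1], size=n)
gamma, beta = rng.uniform(0.5, 2.0, m), rng.normal(size=m)
mu, var = rng.normal(size=m), rng.uniform(0.5, 2.0, m)

y_crossbar = crossbar_layer(x, map_weights(W), folded_threshold(gamma, beta, mu, var))
y_digital = np.where(gamma * (x @ W - mu) / np.sqrt(var + 1e-5) + beta >= 0, 1, -1)
```

The two outputs agree because `I >= i_ref` is algebraically the same test as `a >= tau`. With real devices, variation and wire resistance would narrow the margin between neighbouring match counts, which this ideal model ignores.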

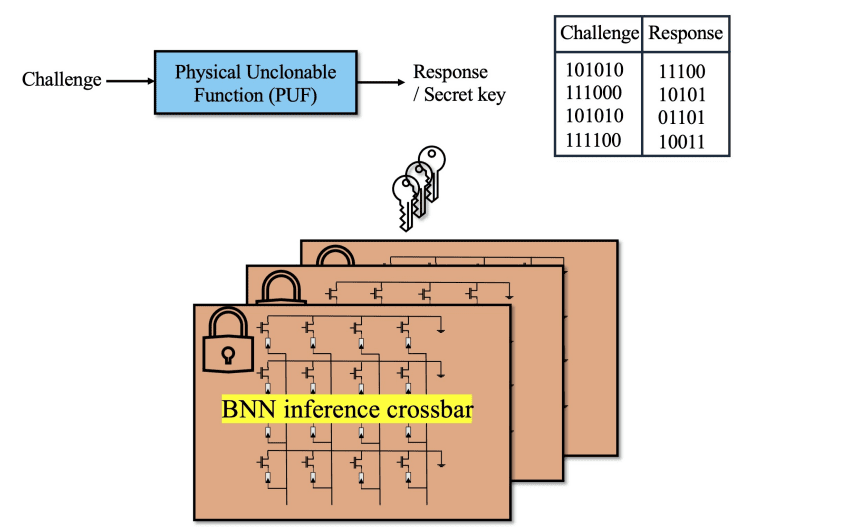

To protect the model parameters, the team uses a secret key derived from a Physical Unclonable Function (PUF). Rather than being stored, the key is generated at runtime as the PUF's response to an input challenge, so it is never held in memory where an attacker could read it. This improves resilience against theft attacks that target the state of the non-volatile memory. The model parameters are transformed with the PUF-derived key before being written to the RRAM crossbar, which the research shows significantly strengthens security.
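The following Python sketch illustrates the idea under explicit assumptions. The source does not specify the transformation, so it uses one simple hypothetical choice: negate the weights in input row i whenever key bit i is -1, and apply the same key to the inputs at runtime (with the (1, 0)/(0, 1) encoding, negating an input just swaps which row of the pair is driven). The PUF is replaced by a SHAKE-256 hash of a simulated device fingerprint, purely as a software stand-in; `puf_key` and `transform_weights` are hypothetical names.

```python
import hashlib

import numpy as np


def puf_key(device_fingerprint: bytes, challenge: bytes, n_bits: int) -> np.ndarray:
    """Hypothetical software stand-in for a PUF challenge-response pair.

    A real PUF derives its response from manufacturing variation and is noisy
    (typically needing error correction); here a hash of a simulated device
    fingerprint plays that role, for illustration only.
    """
    n_bytes = (n_bits + 7) // 8
    digest = hashlib.shake_256(device_fingerprint + challenge).digest(n_bytes)
    bits = np.unpackbits(np.frombuffer(digest, dtype=np.uint8))[:n_bits]
    return np.where(bits == 1, 1, -1)  # key as a +1/-1 vector


def transform_weights(W: np.ndarray, key: np.ndarray) -> np.ndarray:
    """Hypothetical transform: negate input row i of W wherever key[i] == -1.

    Applying the same key twice restores W, because key[i] ** 2 == 1.
    """
    return key[:, None] * W


rng = np.random.default_rng(0)
n, m = 64, 10
W = rng.choice([-1, 1], size=(n, m))          # trained binary weights
fingerprint = b"simulated-device-variation"   # stands in for the silicon itself
challenge = b"challenge-0001"

key = puf_key(fingerprint, challenge, n)      # regenerated at runtime, never stored
W_stored = transform_weights(W, key)          # what gets programmed into the RRAM

# At inference time the same key is re-derived and applied to the inputs,
# so the crossbar's pre-activations match those of the original weights.
x = rng.choice([-1, 1], size=n)
assert np.array_equal((key * x) @ W_stored, x @ W)
```

Under this assumed scheme, only `W_stored` and the challenge reside on the device; reading the RRAM state yields weights with an unknown subset of rows negated.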

Experiments show that attempting inference without the correct secret key reduces accuracy drastically, to below 15%, which validates the protection strategy. The team evaluated several transformation techniques and confirmed their effectiveness across different network configurations. The authors present the scheme as a theoretical framework for fully homomorphic encryption (FHE) with minimal runtime overhead: computations are performed directly on the transformed (encrypted) parameters, without a decryption step.
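Why can inference run on transformed parameters, and why does a missing key destroy accuracy? The source does not say which transformations the authors use, so as a hedged illustration assume a key $k \in \{-1,+1\}^n$, stored weights $w'_{ij} = k_i w_{ij}$, and inputs transformed at runtime as $x'_i = k_i x_i$. The pre-activation of column $j$ is then unchanged, while a wrong key $\tilde{k}$ (including the all-ones "no key" case) corrupts it:

$$
a'_j = \sum_{i=1}^{n} (k_i x_i)(k_i w_{ij}) = \sum_{i=1}^{n} k_i^2 \, x_i w_{ij} = \sum_{i=1}^{n} x_i w_{ij} = a_j
$$

$$
\tilde{a}_j = \sum_{i=1}^{n} (\tilde{k}_i x_i)(k_i w_{ij}) = \sum_{i \in S} x_i w_{ij} - \sum_{i \notin S} x_i w_{ij}, \qquad S = \{\, i : \tilde{k}_i = k_i \,\}
$$

For a random wrong key, each $i$ lands in $S$ with probability $1/2$, so about half of the terms flip sign and the sign-function outputs decorrelate from the correct ones. This illustrates the kind of accuracy collapse reported in the experiments; the paper's actual transforms may differ.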

This work demonstrates a robust strategy for protecting binarized neural networks, particularly within emerging in-memory computing architectures. By transforming model parameters with secret keys derived from physical unclonable functions, the method guards against theft attacks while maintaining computational efficiency: inference without the correct key falls below 15% accuracy, effectively rendering a stolen model unusable. This addresses a critical security concern for deploying binarized neural networks, enabling secure operation without the significant overhead typically associated with encryption.

👉 More information

🗞 Efficient and Encrypted Inference using Binarized Neural Networks within In-Memory Computing Architectures

🧠 ArXiv: https://arxiv.org/abs/2510.23034