Scientists are tackling the persistent problem of unreliable reasoning in large language models (LLMs), a weakness that remains despite the models’ impressive capabilities. Fangan Dong, Zuming Yan, and Mengqi Zhang from Shandong University, alongside Xuri Ge, Zhiwei Xu, and Xuanang Chen from the Institute of Software, Chinese Academy of Sciences, demonstrate that a surprisingly small number of neurons are key to accurate reasoning, and they’ve developed a method to harness this insight. Their research introduces AdaRAS, a framework that identifies and selectively steers these ‘Reasoning-Critical Neurons’ during LLM inference, boosting reliability without the need for expensive retraining or complex sampling techniques. The work is significant because it offers a lightweight, transferable way to improve LLM performance on challenging mathematics and coding tasks, achieving gains of over 13% on demanding benchmarks like AIME-24 and AIME-25.

LLM Reasoning Improved Via Neuron Activation Steering

AdaRAS operates at test time, selectively intervening on neuron activations to correct faltering reasoning pathways while leaving already correct solutions untouched, a crucial distinction from methods that might disrupt successful computations. The study reports that AdaRAS not only improves accuracy but also transfers across diverse datasets and scales to more powerful models, consistently outperforming existing post-training methods. This performance boost is achieved without any additional training and without the high computational costs associated with sampling techniques, making it practical for real-world applications. The team’s work establishes a direct link between specific neuron activations and reasoning correctness, opening new avenues for understanding and controlling the internal mechanisms of LLMs.

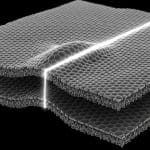

The research introduces a method for identifying Reasoning-Critical Neurons (RCNs): it contrasts activations from correct and incorrect reasoning samples to pinpoint the neurons that most influence outcomes. AdaRAS then employs polarity-based filtering to create a sparse, functionally consistent set of neurons for targeted intervention. During inference, the framework adjusts the activations of these RCNs only when a reasoning trajectory appears to be faltering, providing a focused correction without interfering with already accurate computations. The researchers also provide mechanistic evidence that AdaRAS stabilizes latent reasoning trajectories while preserving semantic representations, which eases integration into existing LLM pipelines.

Furthermore, the study’s findings suggest that activation steering is a viable tool for enhancing LLM reasoning capabilities, offering a parameter-free, test-time solution that consistently improves performance and generalizes across various tasks and datasets. By focusing on internal activations, AdaRAS bypasses the limitations of treating LLMs as “black boxes,” offering a more nuanced and controllable approach to improving reasoning reliability. This work not only advances our understanding of LLM internals but also paves the way for more robust and dependable AI systems capable of tackling complex reasoning challenges in diverse domains.

Method

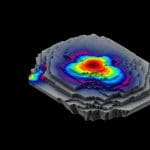

The core of AdaRAS lies in its adaptive steering of RCN activations during inference. Researchers calculated the mean activation difference for each neuron across correct and incorrect samples, effectively highlighting those most indicative of reasoning success or failure. A polarity-based filter then selected a sparse set of RCNs, ensuring functional consistency and minimizing interference. During inference, the system monitors the evolving activation trajectory and intervenes on these RCNs only when an incorrect path is predicted, enhancing the signal and guiding the model towards a correct solution.

This targeted intervention avoids disrupting already-correct reasoning processes, preserving performance on well-solved problems. AdaRAS also exhibited strong transferability, performing well across different datasets and scaling effectively to larger, more powerful LLMs. The study further reports that AdaRAS stabilizes latent reasoning trajectories while preserving semantic representations, allowing for integration into existing LLM pipelines. By directly manipulating internal activations, the research provides mechanistic insights into how models arrive at solutions and identifies key neurons responsible for reliable reasoning. The work indicates that activation steering is a viable tool for enhancing LLM capabilities, offering a parameter-free and efficient alternative to traditional methods. The code and data supporting this research are publicly available, facilitating further exploration and development.
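The identification step described in this section can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, activations are assumed to be already pooled into one vector per reasoning trace, and the polarity filter is assumed to mean "keep only neurons whose mean difference has one chosen sign", which the article does not spell out.

```python
from statistics import fmean


def mean_activation_difference(correct, incorrect):
    """Per-neuron mean activation on correct traces minus mean on incorrect traces."""
    n_neurons = len(correct[0])
    return [
        fmean([row[j] for row in correct]) - fmean([row[j] for row in incorrect])
        for j in range(n_neurons)
    ]


def select_rcns(diff, top_k, polarity=1):
    """Assumed polarity filter: keep neurons whose difference has the requested
    sign, then rank the survivors by magnitude and return the top_k indices."""
    candidates = [j for j, d in enumerate(diff) if d * polarity > 0]
    candidates.sort(key=lambda j: abs(diff[j]), reverse=True)
    return candidates[:top_k]


def build_steering_vector(diff, rcn_indices):
    """Sparse vector: the mean difference on selected neurons, zero elsewhere."""
    chosen = set(rcn_indices)
    return [d if j in chosen else 0.0 for j, d in enumerate(diff)]


# Toy data: 2 correct and 2 incorrect traces over 4 neurons (made-up values).
correct = [[1.0, 0.25, 0.5, 1.0], [0.5, 0.25, 0.5, 1.5]]
incorrect = [[0.25, 0.25, 1.0, 0.25], [0.25, 0.25, 0.5, 0.25]]

diff = mean_activation_difference(correct, incorrect)  # [0.5, 0.0, -0.25, 1.0]
rcns = select_rcns(diff, top_k=2)                       # [3, 0]
vector = build_steering_vector(diff, rcns)              # [0.5, 0.0, 0.0, 1.0]
```

In this toy run, neuron 2 is dropped by the assumed polarity filter because it is more active on incorrect traces, and neuron 1 is dropped because it shows no difference at all.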

AdaRAS boosts LLM reasoning via neuron steering

AdaRAS targets faltering reasoning pathways while preserving accuracy in already-correct cases. Beyond the AIME-24 and AIME-25 results, it delivered a 5.34% improvement on the AIME-Extend dataset and a 1.60% increase on MATH-500, suggesting the method is not tied to a single benchmark. The gains come from precise neuron-level selection combined with adaptive steering during inference. To identify RCNs, the study employed a contrastive data construction technique, generating paired positive and negative reasoning trajectories via self-sampling.
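One way to picture the contrastive data construction is as a grouping step over self-sampled traces: for each problem, correct and incorrect traces are paired, and problems that yield only one kind of trace contribute nothing. The sketch below assumes that reading; the function name, the `(problem_id, trace, is_correct)` tuple layout, and the `max_pairs_per_problem` cap are hypothetical and not taken from the paper.

```python
from collections import defaultdict
from itertools import islice, product


def build_contrastive_pairs(samples, max_pairs_per_problem=4):
    """Group self-sampled traces by problem and pair correct with incorrect ones.

    samples: iterable of (problem_id, trace, is_correct) tuples.
    Returns {problem_id: [(correct_trace, incorrect_trace), ...]}.
    """
    by_problem = defaultdict(lambda: {True: [], False: []})
    for problem_id, trace, is_correct in samples:
        by_problem[problem_id][bool(is_correct)].append(trace)

    pairs = {}
    for problem_id, traces in by_problem.items():
        # Every (correct, incorrect) combination, capped at an illustrative limit.
        combos = product(traces[True], traces[False])
        selected = list(islice(combos, max_pairs_per_problem))
        if selected:  # problems with only correct or only incorrect traces are skipped
            pairs[problem_id] = selected
    return pairs


# Toy example with placeholder trace strings.
samples = [
    ("p1", "trace-a", True),
    ("p1", "trace-b", False),
    ("p1", "trace-c", False),
    ("p2", "trace-d", True),   # no incorrect trace: cannot be paired
    ("p3", "trace-e", False),  # no correct trace: cannot be paired
]
pairs = build_contrastive_pairs(samples)
# {'p1': [('trace-a', 'trace-b'), ('trace-a', 'trace-c')]}
```

The skipped problems in the toy example show why paired data can be hard to obtain when a model gets nearly every sample right or nearly every sample wrong.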

For each probing sample, four paired reasoning traces were generated and used for RCN identification, with the remaining samples reserved for test-time steering evaluation. The adaptive intervention strategy is guided by a failure prediction module that reaches an AUROC of 0.8347 on the AIME dataset, and tests indicate that it rectifies incorrect reasoning without degrading performance on already correct inputs. The steering strength is controlled by a positive scalar α and applied within the multilayer perceptron (MLP) block of the LLM, using a sparse steering vector constructed from the top-K ranked RCNs. Table 8 summarises the statistics of the datasets used, including HumanEval, MBPP, and GSM8K, where AdaRAS achieved gains of 2.01%, 3.44%, and 0.76% respectively. Together, these results point to a practical and efficient way to enhance LLM reasoning in fields that require reliable and accurate problem-solving.
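The gated intervention can be illustrated with a small sketch. It assumes an additive update (activation plus α times the sparse steering vector) and a fixed probability threshold on the failure predictor's output; the article states only that α is a positive scalar, that the vector is sparse, and that steering is applied when failure is predicted, so the update rule, the `threshold` parameter, and the function name are all assumptions made for illustration.

```python
def steer_mlp_activations(activations, steering_vector, alpha,
                          failure_prob, threshold=0.5):
    """Return MLP activations, steered only when failure is predicted.

    activations:     activations for one token position (list of floats)
    steering_vector: sparse vector, non-zero only on the selected RCNs
    alpha:           positive steering strength
    failure_prob:    output of a failure predictor (assumed to lie in [0, 1])
    threshold:       hypothetical cut-off above which steering is applied
    """
    if alpha <= 0:
        raise ValueError("alpha must be a positive scalar")
    if failure_prob < threshold:
        # Predicted-correct trajectory: leave the computation untouched.
        return list(activations)
    # Assumed additive update; zero entries in the vector leave neurons unchanged.
    return [a + alpha * v for a, v in zip(activations, steering_vector)]


acts = [1.0, 2.0, 3.0, 4.0]
vec = [0.5, 0.0, 0.0, 1.0]  # non-zero only on two selected neurons

steered = steer_mlp_activations(acts, vec, alpha=2.0, failure_prob=0.9)
# [2.0, 2.0, 3.0, 6.0]
untouched = steer_mlp_activations(acts, vec, alpha=2.0, failure_prob=0.1)
# [1.0, 2.0, 3.0, 4.0]
```

In a real model this logic would typically live in a forward hook on the MLP layer; that wiring is omitted here because it depends on the framework and model architecture.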

AdaRAS steers neuron activation for better reasoning

The framework exhibits strong transferability between datasets and outperforms existing post-training methods without requiring additional training or costly sampling. Trajectory-level analyses suggest AdaRAS stabilises reasoning paths without impacting the semantic modelling capabilities of LLMs. However, the authors acknowledge limitations: the current analysis focuses on Qwen3 series models and STEM benchmarks, and future work should explore broader model architectures and more complex reasoning scenarios. Constructing contrastive data pairs, which is essential to the approach, may also limit applicability to models with extreme capabilities, where obtaining paired reasoning trajectories is difficult. Further research could incorporate techniques like sparse autoencoders to better understand the underlying mechanisms of RCNs. Overall, the work contributes an inference-time activation steering method that offers a practical way to improve the reliability of LLMs without substantial computational overhead.

👉 More information

🗞 Identifying and Transferring Reasoning-Critical Neurons: Improving LLM Inference Reliability via Activation Steering

🧠 ArXiv: https://arxiv.org/abs/2601.19847