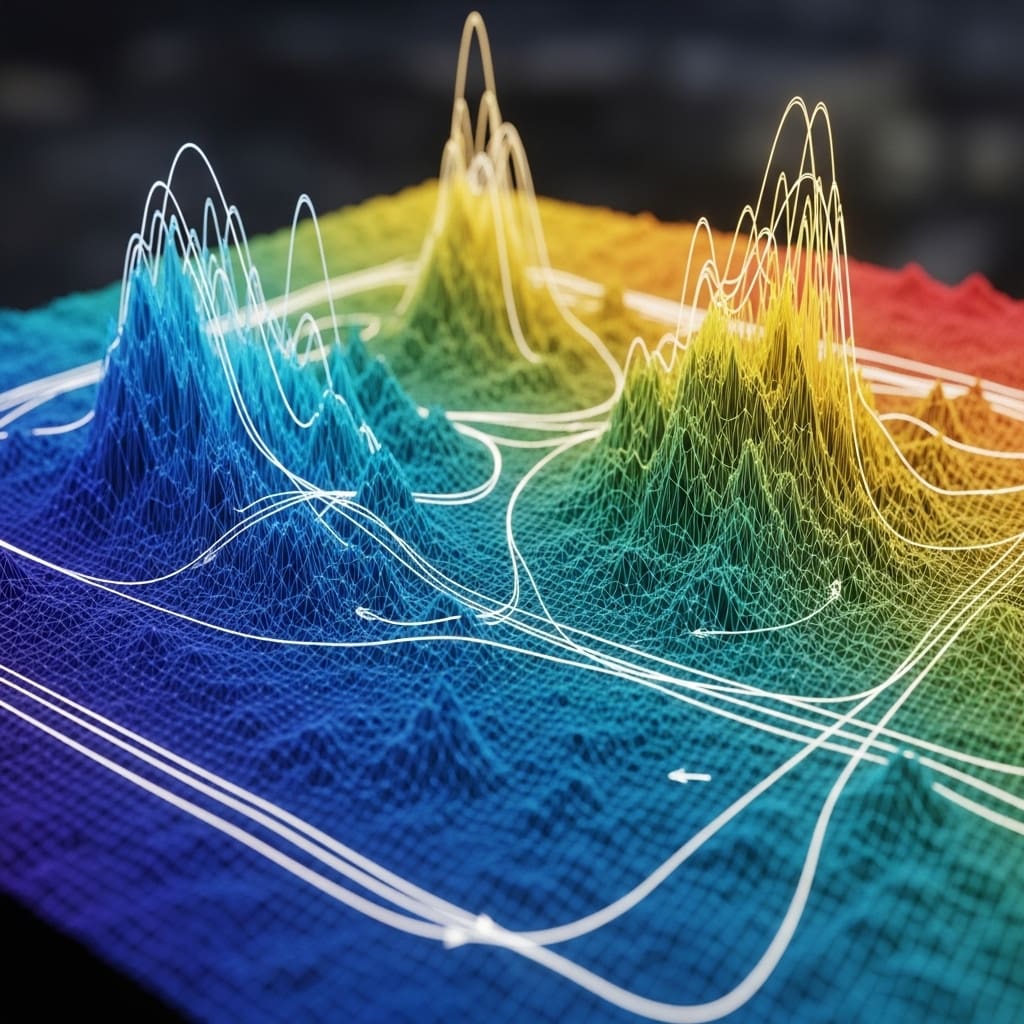

Researchers are tackling a critical challenge in optimising Large Language Models (LLMs) for complex, multi-turn reasoning tasks. Shichao Ma, Zhiyuan Ma, and Ming Yang, from Tiansuan Lab, Ant Group Co., Ltd, alongside Xiaofan Li, Xing Wu, and Jintao Du, show that current reinforcement learning methods suffer from a “Double Homogenization Dilemma”: they fail to recognise the value of individual reasoning steps and struggle with accurate advantage estimation. Their Turn-level Stage-aware Policy Optimization (TSPO) framework addresses this with a reward mechanism that preserves process-level signals and boosts reward variance, achieving average performance gains of 24% and 13.6% on Qwen2.5-3B and Qwen2.5-7B, respectively.

The research addresses a critical limitation in current reinforcement learning (RL) frameworks, the “Double Homogenization Dilemma”, which hinders effective learning by failing to recognise and reward intermediate reasoning steps.

This dilemma manifests as both process homogenization, where diverse reasoning paths receive identical rewards, and intra-group homogenization, where coarse-grained rewards limit advantage estimation during training. TSPO preserves process-level signals, differentiating between successful and unsuccessful reasoning steps, and increases reward variance within groups without relying on external reward models or manual annotations.
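Intra-group homogenization is easiest to see with a small numeric sketch. The article does not name the advantage estimator, so the example below assumes a group-relative normalization of the kind used in GRPO-style methods; the function name `group_advantages` and the `eps` term are illustrative choices, not the authors' code.

```python
from statistics import mean, pstdev

def group_advantages(rewards, eps=1e-6):
    """Normalize each reward against its group: (r - mean) / (std + eps).

    Assumed estimator for illustration only; the source does not specify one.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]

# Outcome-only rewards where every rollout in the group failed.
all_fail = [0.0, 0.0, 0.0, 0.0]
# A group where one rollout succeeded.
mixed = [0.0, 0.0, 1.0, 0.0]

flat = group_advantages(all_fail)     # every advantage is zero: no learning signal
varied = group_advantages(mixed)      # non-zero advantages: usable learning signal
```

When all rewards in a group are identical, every advantage collapses to zero, which is the vanishing-advantage problem that coarse outcome rewards can cause.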

By rewarding the first detection of the correct information, TSPO encourages more efficient and accurate information retrieval. The study reports significant performance gains across a range of question answering datasets: TSPO substantially outperforms existing state-of-the-art baselines, with average improvements of 24% and 13.6% on the Qwen2.5-3B and Qwen2.5-7B models, respectively.

This improvement is achieved by addressing the limitations of sparse, outcome-level rewards, which often compress the entire reasoning process into a single scalar value, obscuring the quality of intermediate steps. Researchers identified that existing methods, while attempting to address these issues through process-level supervision, often require costly annotations or rely on proprietary models with limited generalizability.

TSPO circumvents these drawbacks with its First-Occurrence Latent Reward (FOLR) mechanism, which allocates rewards based on the first occurrence of the correct answer, thereby enhancing both process signal preservation and variance within training groups. The work opens avenues for more effective training of LLMs for complex tasks, potentially leading to advancements in areas such as open-domain question answering and mathematical reasoning.

Turn-level reward allocation for improved reasoning in large language models is a promising research direction

Scientists identified a “Double Homogenization Dilemma” hindering reinforcement learning (RL) frameworks for search-augmented reasoning, specifically in large language models (LLMs). TSPO pioneers the First-Occurrence Latent Reward (FOLR) mechanism, which dynamically allocates partial rewards to the specific turn where the ground-truth answer initially appears.

This innovative technique ensures that beneficial intermediate reasoning steps receive recognition, even if the final answer is incorrect. Experiments employed seven diverse question answering datasets to rigorously evaluate TSPO’s performance. The research team implemented TSPO with both Qwen2.5-3B and Qwen2.5-7B models, comparing results against state-of-the-art baseline methods.

Performance was measured using exact match (EM) as the primary metric, allowing for a direct assessment of answer accuracy. The study demonstrated that TSPO significantly outperformed existing techniques, achieving average performance gains of 24% on the Qwen2.5-3B model and 13.6% on the Qwen2.5-7B model. The research tackles the “Double Homogenization Dilemma”, a problem where crucial process-level signals are lost during training and reward variance is reduced within groups of trajectories.
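For readers unfamiliar with the exact match metric, a minimal sketch follows. The article does not describe the normalization applied before comparison, so the lowercasing, punctuation stripping, and article removal below are assumptions borrowed from common question answering practice; `normalize` and `exact_match` are illustrative names.

```python
import re
import string

def normalize(text):
    """Lowercase, drop punctuation and English articles, collapse whitespace."""
    text = text.lower()
    text = "".join(ch for ch in text if ch not in string.punctuation)
    text = re.sub(r"\b(a|an|the)\b", " ", text)
    return " ".join(text.split())

def exact_match(prediction, gold_answers):
    """True if the normalized prediction equals any normalized gold answer."""
    pred = normalize(prediction)
    return any(pred == normalize(gold) for gold in gold_answers)

hit = exact_match("The Eiffel Tower.", ["Eiffel Tower"])
miss = exact_match("Paris", ["London", "Berlin"])
```

EM is strict by design: a prediction that contains the right answer plus extra words still counts as a miss.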

Experiments reveal that current methods often treat successful information acquisition and complete failures identically, hindering effective learning. The team measured the impact of this dilemma by analysing trajectories based on Outcome Accuracy and Process Integrity, identifying four categories: complete failure, near-miss, full success, and retrieval-free success.
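The four categories can be read as a two-by-two grid over whether the final answer was correct and whether the answer appeared in retrieved evidence. The sketch below assumes that mapping of Outcome Accuracy and Process Integrity to two booleans; the function names are hypothetical.

```python
def classify_trajectory(answer_correct: bool, evidence_retrieved: bool) -> str:
    """Map (Outcome Accuracy, Process Integrity) to one of four categories."""
    if answer_correct and evidence_retrieved:
        return "full success"
    if answer_correct:
        return "retrieval-free success"
    if evidence_retrieved:
        return "near-miss"
    return "complete failure"

def outcome_reward(answer_correct: bool) -> float:
    """Sparse outcome-level reward: 1 for a correct final answer, else 0."""
    return 1.0 if answer_correct else 0.0

near_miss_label = classify_trajectory(answer_correct=False, evidence_retrieved=True)
failure_label = classify_trajectory(answer_correct=False, evidence_retrieved=False)

# Under an outcome-only reward both categories are indistinguishable.
same_reward = outcome_reward(False) == outcome_reward(False)
```

A near-miss and a complete failure land in different categories yet receive the same outcome reward, which is the process-level homogenization the authors describe.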

The data show that retrieval-free successes were absent, confirming that successful retrieval is essential for correct synthesis. The study also found that near-miss attempts and total failures received the same zero reward, demonstrating process-level reward homogenization. TSPO introduces the First-Occurrence Latent Reward (FOLR) mechanism, which allocates partial rewards to the turn where the ground-truth answer first appears.

This preserves process-level signals and increases reward variance within groups, avoiding vanishing advantages during training. Crucially, TSPO delivers these improvements without requiring external reward models or additional human annotations. Extensive experiments across seven diverse question answering datasets demonstrate that TSPO significantly outperforms state-of-the-art baselines.
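The first-occurrence idea can be sketched in a few lines. This is a minimal illustration rather than the authors' implementation: the function name `folr_turn_rewards`, the `partial` value of 0.5, and the case-insensitive substring check are all assumptions made for the example.

```python
from statistics import pstdev

def folr_turn_rewards(retrieved_per_turn, gold_answer, partial=0.5):
    """Give a partial reward to the first turn whose evidence contains the answer.

    retrieved_per_turn: one string of retrieved text per turn (hypothetical format).
    partial: assumed reward size; the article does not state the actual value.
    """
    rewards = [0.0] * len(retrieved_per_turn)
    gold = gold_answer.lower()
    for turn, evidence in enumerate(retrieved_per_turn):
        if gold in evidence.lower():
            rewards[turn] = partial
            break  # only the first occurrence is rewarded
    return rewards

# A near-miss: the answer is retrieved at turn 1, whatever the final answer was.
near_miss = folr_turn_rewards(
    ["weather report", "born in Paris, France", "Paris again"], "Paris"
)
# A complete failure: the answer never appears in retrieved evidence.
failure = folr_turn_rewards(["weather report", "sports news"], "Paris")

# The two trajectories now differ, so the group has non-zero reward spread.
reward_spread = pstdev([sum(near_miss), sum(failure)])
```

Because the near-miss now earns something while the complete failure earns nothing, a group containing both no longer has identical rewards, and a group-normalized advantage would be non-zero.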

Results show average performance gains of 24% on the Qwen2.5-3B model and 13.6% on the Qwen2.5-7B model. These results indicate that TSPO addresses the Double Homogenization Dilemma, enabling LLMs to learn more efficiently and achieve higher accuracy in multi-turn reasoning tasks. TSPO allocates partial rewards to the first turn in which the correct answer appears within retrieved evidence, preserving process-level information and increasing reward variance without needing additional annotations or reward models.

These gains over existing baselines hold consistently across the seven question answering benchmarks while maintaining computational efficiency. The authors acknowledge limitations, including a reliance on accurate retrieval, since FOLR assumes the correct answer is present in the retrieved data, and the current focus on search-augmented reasoning tasks.

Future research will concentrate on adapting TSPO to a wider range of task types and refining the FOLR mechanism for scenarios where retrieval is imperfect or unnecessary. The findings highlight the importance of granular, turn-level rewards in effectively training LLMs for complex reasoning tasks, addressing a key challenge in reinforcement learning for search-reasoning systems and potentially improving the performance and efficiency of LLM agents.

👉 More information

🗞 TSPO: Breaking the Double Homogenization Dilemma in Multi-turn Search Policy Optimization

🧠 ArXiv: https://arxiv.org/abs/2601.22776