Object detection, crucial for applications such as infrastructure inspection and construction safety, often suffers from a lack of labelled data in specialist fields. To address this challenge, Malaisree P, Youwai S, and Kitkobsin T, together with their colleagues, developed DINO-YOLO. The system combines the speed of YOLO object detection with the self-supervised visual features of DINO vision transformers. The architecture injects DINO features into the YOLO detector and delivers substantial improvements across diverse datasets, including crack detection, PPE identification, and autonomous driving scenarios. The team shows that DINO-YOLO achieves state-of-the-art accuracy on datasets with limited images while maintaining real-time processing speeds, which makes it a practical and efficient solution for critical monitoring and inspection tasks in data-constrained environments.

The study introduces a hybrid architecture built on DINOv3, which was pre-trained on 1.7 billion unlabeled images, to extract robust visual features that transfer across domains. The researchers inserted DINOv3 features at selected points within the YOLOv12 architecture to enrich its semantic representations. Systematic experiments across different YOLO model scales and DINOv3 variants showed that medium-scale architectures achieve the best performance.
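The article does not describe how the DINOv3 features are merged into YOLOv12. The following is a minimal sketch of one common option, a simple residual (additive) fusion. It assumes each ViT patch token is linearly projected to the detector's channel count and then added to the matching cell of a YOLO feature map of the same spatial size. The function names, the projection weights, and the additive rule are illustrative assumptions, not the paper's actual fusion module.

```python
from typing import List

Vector = List[float]
FeatureMap = List[List[List[float]]]  # indexed [channel][row][col]


def project(token: Vector, weight: List[Vector]) -> Vector:
    """Linear projection D -> C: out[c] = sum_d weight[c][d] * token[d]."""
    return [sum(w * t for w, t in zip(row, token)) for row in weight]


def fuse_dino_into_yolo(
    yolo_map: FeatureMap,
    dino_tokens: List[Vector],
    weight: List[Vector],
) -> FeatureMap:
    """Hypothetical additive fusion of DINO patch tokens into a YOLO feature map.

    Assumes dino_tokens is row-major (token index = row * width + col) and that
    the ViT patch grid already matches the feature map's height and width.
    """
    channels = len(yolo_map)
    height = len(yolo_map[0])
    width = len(yolo_map[0][0])
    if len(dino_tokens) != height * width:
        raise ValueError("need exactly one DINO token per feature-map cell")
    if len(weight) != channels:
        raise ValueError("projection must output one value per YOLO channel")

    # Copy the input so the caller's feature map is left untouched.
    fused = [[row[:] for row in plane] for plane in yolo_map]
    for r in range(height):
        for c in range(width):
            projected = project(dino_tokens[r * width + c], weight)
            for ch in range(channels):
                fused[ch][r][c] += projected[ch]  # residual (additive) fusion
    return fused


if __name__ == "__main__":
    yolo_map = [[[1.0, 2.0]], [[0.0, 0.0]]]           # C=2, H=1, W=2
    dino_tokens = [[1.0, 0.0, 0.0], [0.0, 1.0, 1.0]]  # N=2 tokens, D=3
    weight = [[1.0, 0.0, 0.0], [0.0, 0.5, 0.5]]       # C x D projection
    print(fuse_dino_into_yolo(yolo_map, dino_tokens, weight))
```

In a real network the tokens and feature maps would be tensors and the projection would be a learned layer. The ViT patch grid would also usually need resizing to match the feature map's stride. The loops here only make the arithmetic explicit.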

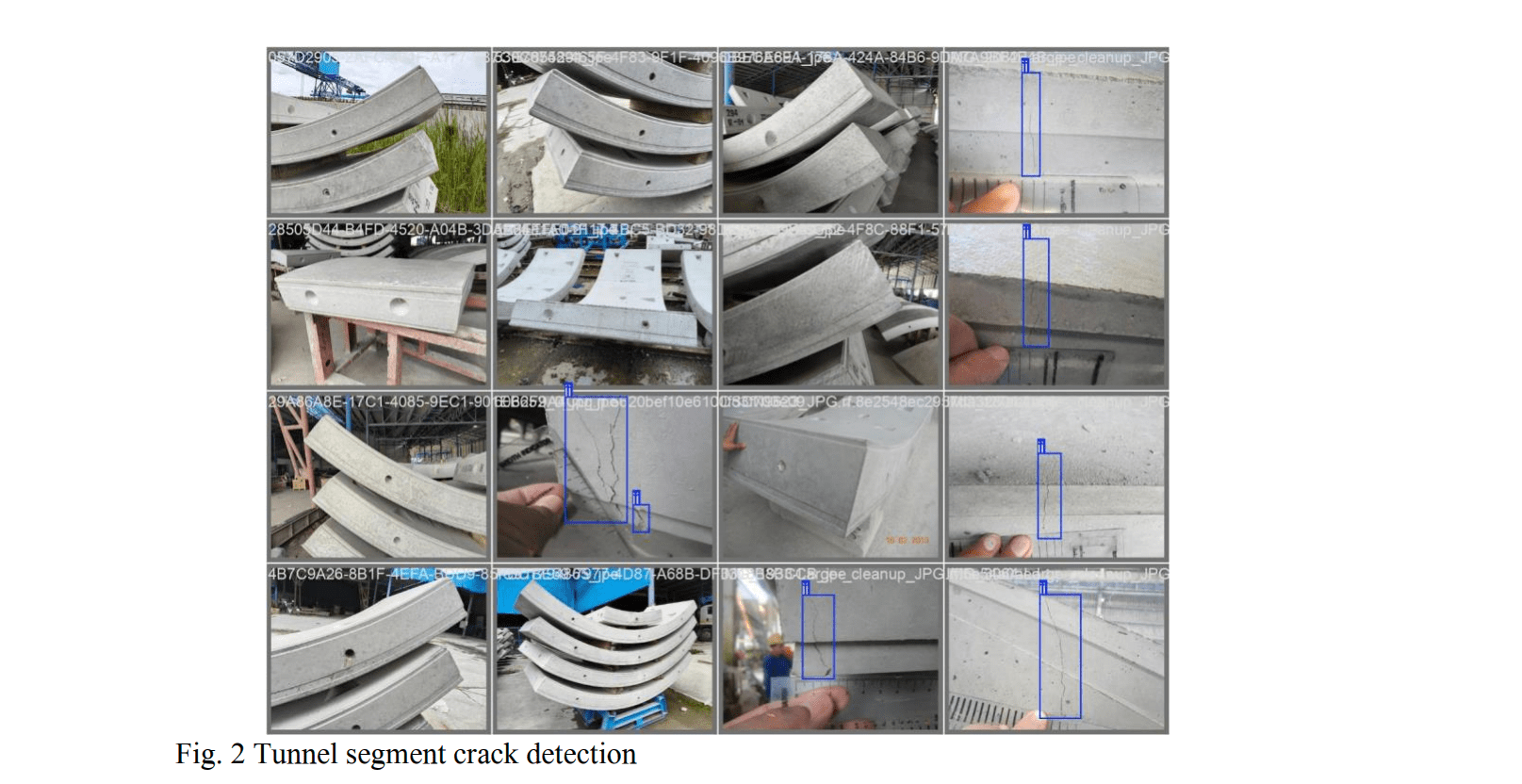

The framework was tested on three distinct datasets: a tunnel segment crack detection dataset, a construction PPE detection dataset, and the established KITTI benchmark. DINO-YOLO improved detection accuracy by 12.4% on tunnel segment crack detection and by 13.7% on construction PPE detection. On KITTI it improved accuracy by 88.6%, which demonstrates that the model generalizes to larger-scale benchmarks. Real-time inference was maintained throughout testing, at 30 to 47 frames per second on standard hardware, with acceptable processing overhead. The work establishes state-of-the-art performance for civil engineering datasets with fewer than 10,000 images and offers practical solutions for construction safety monitoring and infrastructure inspection in data-constrained environments. The evaluation also identified optimal configurations in moderate data regimes and revealed limitations under extreme data scarcity.
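The article does not say whether these percentages are relative improvements over the YOLOv12 baseline or absolute percentage-point gains. If they are relative gains (an assumption), the arithmetic below shows what each figure implies. For example, an 88.6% relative gain means the score is roughly 1.9 times the baseline. The helper names are hypothetical.

```python
def relative_gain_percent(baseline: float, improved: float) -> float:
    """Relative improvement of `improved` over `baseline`, in percent."""
    if baseline <= 0:
        raise ValueError("baseline score must be positive")
    return (improved - baseline) / baseline * 100.0


def multiplier_from_gain(gain_percent: float) -> float:
    """Factor applied to the baseline score by a relative gain of gain_percent."""
    return 1.0 + gain_percent / 100.0


# Figures from the article, treated here as relative gains (an assumption).
REPORTED_GAINS = {
    "Tunnel Segment Crack": 12.4,
    "Construction PPE": 13.7,
    "KITTI": 88.6,
}

if __name__ == "__main__":
    for dataset, gain in REPORTED_GAINS.items():
        print(f"{dataset}: {multiplier_from_gain(gain):.3f}x baseline")
```

Running the script prints multipliers of 1.124, 1.137 and 1.886. Under the percentage-point reading, the improved score would instead be the baseline plus the stated number of points.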

DINO-YOLO Boosts Object Detection with Limited Data

Scientists developed DINO-YOLO, a hybrid object detection architecture that specifically addresses the shortage of annotated data in civil engineering applications. The work systematically integrates DINOv3 self-supervised vision transformers with the YOLOv12 object detection framework and achieves substantial performance gains across diverse datasets. Experiments show a 12.4% improvement in Tunnel Segment Crack detection using 648 images and a 13.7% gain in Construction PPE detection with 1,000 images. On the KITTI dataset of 7,000 images, the improvement reaches a remarkable 88.6%.

The team evaluated different YOLO scales and DINOv3 variants and found that medium-scale architectures achieve the best performance. Small-scale architectures, however, need specific integration strategies to perform at their best, so the optimal configuration depends on model scale. Measurements confirm acceptable processing overhead while maintaining real-time speeds of 30-47 frames per second on standard hardware. Further analysis shows that DINO-YOLO retains its computational efficiency without performance degradation, even on larger datasets. The research also establishes evidence-based criteria for choosing an architecture, which can reduce infrastructure costs for large-scale construction monitoring installations.
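The 30-47 FPS figures translate directly into a per-frame time budget. For site monitoring, they also indicate how many camera feeds a single detector instance could keep up with. The sketch below assumes frames are processed one at a time, with no batching or frame skipping. The helper names and the 15 FPS camera rate in the example are hypothetical.

```python
import math


def frame_time_ms(fps: float) -> float:
    """Time available per frame, in milliseconds, at a given throughput."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return 1000.0 / fps


def streams_per_instance(detector_fps: float, camera_fps: float) -> int:
    """Whole camera streams one detector instance can serve in real time.

    Assumes sequential, unbatched inference and that every frame of every
    stream must be processed (no frame skipping).
    """
    if detector_fps <= 0 or camera_fps <= 0:
        raise ValueError("frame rates must be positive")
    return math.floor(detector_fps / camera_fps)


if __name__ == "__main__":
    for fps in (30, 47):  # throughput range reported in the article
        print(
            f"{fps} FPS -> {frame_time_ms(fps):.1f} ms per frame, "
            f"{streams_per_instance(fps, camera_fps=15)} streams at a hypothetical 15 FPS"
        )
```

Under these assumptions, 30 FPS leaves about 33.3 ms per frame and 47 FPS about 21.3 ms. If the reported rates cover model inference only, video decoding and post-processing would also need to fit in that budget, so the stream counts are upper bounds.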

DINO-YOLO Excels With Limited Data

DINO-YOLO represents a significant advancement in object detection for civil engineering applications, particularly where annotated data is scarce. The research integrates DINOv3 self-supervised vision transformers with a YOLOv12 architecture and demonstrates substantial performance improvements across diverse datasets. These include gains in Tunnel Segment Crack detection, Construction PPE identification, and the widely used KITTI benchmark. The system maintains the real-time inference speeds needed for practical field deployment while keeping the added computational overhead acceptable. The authors found that the approach performs best in moderate data regimes and identified its limitations under extreme data scarcity. The research establishes DINO-YOLO as a practical, deployment-ready solution for civil engineering object detection, providing both architectural innovations and actionable deployment guidelines.

👉 More information

🗞 DINO-YOLO: Self-Supervised Pre-training for Data-Efficient Object Detection in Civil Engineering Applications

🧠 ArXiv: https://arxiv.org/abs/2510.25140