Large language models often struggle to retain and effectively use information from past interactions, limiting their performance in dynamic environments. To address this, Jizhan Fang, Xinle Deng, and Haoming Xu, from ZJ University, along with colleagues, introduce LightMem, a novel memory system designed to enhance the ability of these models to learn from experience. Inspired by the way human memory functions, LightMem rapidly filters, organises, and stores information across three stages, enabling more efficient access to relevant past data. This approach achieves accuracy gains of up to 10.9% while dramatically reducing computational costs, decreasing token usage by up to 117 times and runtime by over 12 times, representing a substantial step forward in the development of truly adaptive and efficient language models.

Long Context RAG System Evaluation

This document details the evaluation of a long-context retrieval augmented generation (RAG) system, focusing on its ability to handle complex, multi-turn conversations and various reasoning tasks. It’s a research-oriented document intended for those familiar with natural language processing, large language models, and RAG architectures. The goal is to demonstrate the effectiveness of the proposed RAG system and provide a detailed analysis of its performance across different benchmarks. The report breaks down the methodology used to build and evaluate the RAG system, employing a range of benchmarks to assess its capabilities, including single-turn question answering, multi-session conversational tasks, temporal reasoning, knowledge update tasks, personalized question answering, and the ability to identify unanswerable questions. A crucial component of the evaluation involves using a large language model as an automated evaluator, assessing the correctness of the system’s responses against known answers, with detailed prompts provided for transparency and reproducibility.
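
The automated-evaluator step described above can be illustrated with a minimal sketch. It is not the prompt or scoring code used in the paper: the template wording, the function names, and the `toy_judge` stand-in (which replaces a real language model call with a substring check) are all assumptions made for illustration.

```python
from typing import Callable, Iterable, Tuple

# Hypothetical judge prompt; the paper's actual prompts are not reproduced here.
JUDGE_TEMPLATE = (
    "Question: {question}\n"
    "Reference answer: {reference}\n"
    "System response: {response}\n"
    "Does the response contain the reference answer? Reply yes or no."
)


def build_judge_prompt(question: str, reference: str, response: str) -> str:
    """Fill the judge template for one evaluation example."""
    return JUDGE_TEMPLATE.format(
        question=question, reference=reference, response=response
    )


def judged_accuracy(
    examples: Iterable[Tuple[str, str, str]],
    judge: Callable[[str], str],
) -> float:
    """Fraction of (question, reference, response) triples the judge accepts."""
    examples = list(examples)
    if not examples:
        return 0.0
    correct = 0
    for question, reference, response in examples:
        verdict = judge(build_judge_prompt(question, reference, response))
        if verdict.strip().lower().startswith("yes"):
            correct += 1
    return correct / len(examples)


def toy_judge(prompt: str) -> str:
    """Stand-in for an LLM call: accepts if the reference appears in the response."""
    fields = dict(line.split(": ", 1) for line in prompt.splitlines()[:3])
    reference = fields["Reference answer"].lower()
    response = fields["System response"].lower()
    return "yes" if reference in response else "no"


examples = [
    ("Where does Ana live?", "Lisbon", "She lives in Lisbon."),
    ("What pet does Ana have?", "cat", "A dog."),
]
accuracy = judged_accuracy(examples, toy_judge)
```

In a real evaluation, `toy_judge` would be replaced by a call to the evaluator model, and the fixed prompt template is what makes the scoring reproducible.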

LightMem, a Cognitive Inspired Memory System

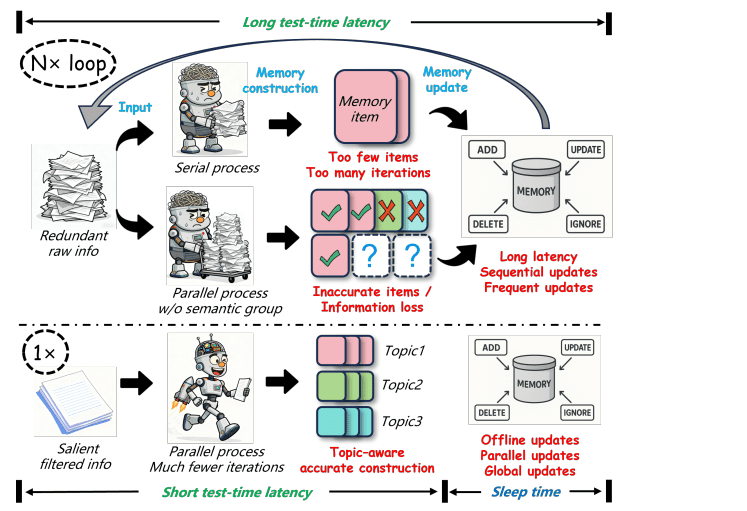

Scientists engineered LightMem, a novel memory system for large language models inspired by the Atkinson-Shiffrin model of human memory, to address the efficiency and performance limitations of existing systems. LightMem organizes information processing into three sequential stages, mirroring sensory, short-term, and long-term memory, each implemented with a dedicated, lightweight module. The initial stage, Light1, functions as a cognition-inspired sensory memory, rapidly filtering irrelevant information from raw input and employing a compression model to retain only high-probability tokens. Light2 realizes a concise short-term memory module for transient information processing, building upon the pre-processed segments, while Light3 provides a long-term memory module designed to minimize retrieval latency.
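
To make the sensory-memory filtering step concrete, the sketch below keeps only the highest-scoring tokens of an input while preserving their original order. It is an illustration under stated assumptions, not LightMem's implementation: the retention scores are supplied by hand here, whereas the system described above obtains them from a compression model, and `compress_tokens` and `keep_ratio` are hypothetical names.

```python
import math
from typing import List, Sequence


def compress_tokens(
    tokens: Sequence[str],
    scores: Sequence[float],
    keep_ratio: float = 0.5,
) -> List[str]:
    """Keep the top `keep_ratio` fraction of tokens by score, in original order."""
    if len(tokens) != len(scores):
        raise ValueError("tokens and scores must have the same length")
    if not 0 < keep_ratio <= 1:
        raise ValueError("keep_ratio must be in (0, 1]")
    if not tokens:
        return []
    n_keep = max(1, math.ceil(len(tokens) * keep_ratio))
    # Rank token positions by score, highest first.
    ranked = sorted(range(len(tokens)), key=lambda i: scores[i], reverse=True)
    # Restore the original order among the positions that survive.
    kept_positions = sorted(ranked[:n_keep])
    return [tokens[i] for i in kept_positions]


# Hand-assigned scores stand in for a compression model's retention probabilities.
tokens = ["um", "I", "moved", "to", "Lisbon", "you", "know"]
scores = [0.10, 0.60, 0.90, 0.40, 0.95, 0.20, 0.15]
compressed = compress_tokens(tokens, scores, keep_ratio=0.5)
```

With these scores, the filler words are dropped and the content-bearing tokens are passed on to the next stage.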

A key innovation lies in the offline updating of long-term memory, decoupling consolidation from online inference, mirroring the role of sleep in human memory reorganization. Experiments utilizing both GPT and Qwen backbones demonstrate that LightMem outperforms strong baseline memory systems, achieving accuracy gains of up to 10.9%. The research also shows reductions in token usage ranging from 32x to 117x, API calls from 17x to 177x, and runtime from 1.67x to 12.45x.

These performance improvements are maintained even after the offline, “sleep-time” updates to the long-term memory, demonstrating the system’s ability to reliably update knowledge and mitigate information loss during extended interactions. This approach effectively balances efficiency and effectiveness, paving the way for more robust and scalable language models.
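
The idea of decoupling consolidation from online inference can be sketched as a store with a cheap online write path and a separate offline pass. This is a simplified illustration, not the paper's update procedure: the `Entry` and `LongTermMemory` classes, the key-based matching, and the keep-the-latest rule are assumptions chosen to keep the example small.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Entry:
    key: str
    value: str
    timestamp: int


@dataclass
class LongTermMemory:
    entries: List[Entry] = field(default_factory=list)  # consolidated store
    pending: List[Entry] = field(default_factory=list)  # written online

    def add_online(self, key: str, value: str, timestamp: int) -> None:
        """Online path: append only, no merging, so inference is not delayed."""
        self.pending.append(Entry(key, value, timestamp))

    def lookup(self, key: str) -> Optional[str]:
        """Return the most recent value for `key`, consolidated or not."""
        candidates = [e for e in self.entries + self.pending if e.key == key]
        if not candidates:
            return None
        return max(candidates, key=lambda e: e.timestamp).value

    def consolidate_offline(self) -> int:
        """Offline pass: keep the newest entry per key; return entries dropped."""
        latest: Dict[str, Entry] = {}
        for entry in self.entries + self.pending:
            current = latest.get(entry.key)
            if current is None or entry.timestamp >= current.timestamp:
                latest[entry.key] = entry
        dropped = len(self.entries) + len(self.pending) - len(latest)
        self.entries = sorted(latest.values(), key=lambda e: e.timestamp)
        self.pending = []
        return dropped


memory = LongTermMemory()
memory.add_online("city", "Porto", timestamp=1)
memory.add_online("city", "Lisbon", timestamp=2)  # later fact supersedes Porto
memory.add_online("pet", "cat", timestamp=3)
answer_before = memory.lookup("city")
dropped = memory.consolidate_offline()
answer_after = memory.lookup("city")
```

The point of the design is that `add_online` does no reconciliation work during a conversation, while `consolidate_offline` can run later without affecting response latency, and lookups give the same answer before and after it runs.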

LightMem Boosts Language Model Memory and Efficiency

Scientists have developed LightMem, a novel memory system for large language models that significantly improves performance and efficiency in complex interactions. Inspired by the human memory system, LightMem organizes information into three stages, sensory, short-term, and long-term, to manage and utilize historical data more effectively. Experiments conducted using both GPT and Qwen backbones on the LongMemEval benchmark reveal substantial gains in accuracy, with LightMem achieving improvements of up to 10.9% compared to existing methods. Crucially, the system reduces token usage by as much as 117x, API calls by up to 159x, and runtime by over 12x, demonstrating a remarkable increase in processing speed and resource optimization.

This efficiency is achieved through a cognitive-inspired sensory memory module that rapidly filters irrelevant information and groups data by topic, minimizing redundancy and pre-compressing inputs. The team further developed a topic-aware short-term memory that consolidates these topic-based groups, organizing and summarizing content for structured access. A key innovation lies in the long-term memory component, which employs an offline “sleep-time” update procedure, decoupling consolidation from online inference. This allows for reflective, high-fidelity updates without introducing latency, enabling more reliable long-term knowledge updates and mitigating information loss. This breakthrough delivers a significant advancement in the ability of large language models to effectively leverage historical data, paving the way for more sophisticated and efficient AI systems.
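
A rough sketch of the topic-aware short-term memory described above: turns are grouped by topic and, once the buffer holds enough tokens, each group is summarized in a single call. Several parts are assumptions rather than details from the paper: topic labels are passed in directly instead of being detected, tokens are counted by whitespace splitting, and `TopicBuffer`, `max_tokens`, and `join_summary` are hypothetical names, with `join_summary` standing in for a language model summarizer.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List, Tuple


class TopicBuffer:
    """Groups turns by topic and summarizes each group when the buffer fills."""

    def __init__(self, summarize: Callable[[List[str]], str], max_tokens: int = 10):
        self.summarize = summarize
        self.max_tokens = max_tokens
        self.turns: DefaultDict[str, List[str]] = defaultdict(list)
        self.token_count = 0

    def add(self, topic: str, text: str) -> List[Tuple[str, str]]:
        """Buffer one turn; return summaries only if the threshold was reached."""
        self.turns[topic].append(text)
        self.token_count += len(text.split())  # crude whitespace token count
        if self.token_count >= self.max_tokens:
            return self.flush()
        return []

    def flush(self) -> List[Tuple[str, str]]:
        """Summarize every topic group once, then empty the buffer."""
        summaries = [
            (topic, self.summarize(texts)) for topic, texts in self.turns.items()
        ]
        self.turns.clear()
        self.token_count = 0
        return summaries


def join_summary(texts: List[str]) -> str:
    """Stand-in for an LLM summarizer: simply concatenates the turns."""
    return " | ".join(texts)


buffer = TopicBuffer(join_summary, max_tokens=10)
first = buffer.add("travel", "I moved to Lisbon")     # 4 tokens buffered
second = buffer.add("pets", "I adopted a cat")        # 8 tokens buffered
third = buffer.add("travel", "Flights were cheap")    # 11 tokens, triggers flush
```

Batching by topic means the summarizer is called once per topic group rather than once per turn, which is one way fewer model calls could arise.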

LightMem Dramatically Boosts Language Model Efficiency

This research presents LightMem, a new memory system designed to improve the efficiency of large language models without sacrificing performance. Recognizing that existing memory systems often create substantial computational demands, the team drew inspiration from the human memory model described by Atkinson and Shiffrin. LightMem organizes information into three stages, sensory, short-term, and long-term, to filter, organize, and consolidate data effectively. Experiments demonstrate that LightMem significantly reduces computational costs, decreasing token usage by up to 117x, API calls by up to 159x, and runtime by over 12x, while simultaneously improving accuracy by up to 10.9% on established benchmarks.

Future work will focus on several key areas, including accelerating the offline update phase through pre-computed key-value caches, integrating a knowledge graph-based memory module for complex reasoning, and extending the system to handle multimodal data. The researchers also plan to explore combining parametric and non-parametric memory components to leverage the strengths of both approaches, ultimately aiming for more flexible and synergistic knowledge utilization. The detailed implementation of LightMem is provided, and the source code will be released to further support reproducibility and verification of the findings.

👉 More information

🗞 LightMem: Lightweight and Efficient Memory-Augmented Generation

🧠 ArXiv: https://arxiv.org/abs/2510.18866