The creation of realistic, high-resolution video remains a significant challenge in artificial intelligence, but a new framework called InfinityStar offers a substantial leap forward. Jinlai Liu, Jian Han, and Bin Yan lead the development of this unified spacetime autoregressive model, which generates both images and dynamic videos efficiently. The team achieves this by jointly capturing spatial and temporal dependencies within a single architecture, allowing for versatile generation tasks ranging from text-to-video to long-form interactive content. With a score of 83.74 on the VBench benchmark, InfinityStar surpasses existing autoregressive models and even rivals some diffusion-based competitors, while generating a five-second, 720p video approximately ten times faster than leading diffusion-based methods. It is the first discrete autoregressive system capable of producing industrial-level video.

The system excels at converting text into video, transforming still images into moving scenes, and reconstructing existing videos. To train efficiently, the team employed FlexAttention and spacetime sparse attention alongside distributed training techniques. They also developed a video tokenizer that inherits knowledge from a pre-trained continuous video tokenizer to improve reconstruction quality, and they trained the model on high-quality video data filtered for aesthetic appeal and realistic motion.
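FlexAttention lets a custom attention mask be expressed as a function of query and key positions, which makes structured sparse patterns cheap to try. As a rough illustration of what a spacetime sparse pattern could look like, the sketch below builds a boolean token-level mask under an assumed rule: tokens in clip i attend only to the first-frame clip, the preceding clip, and their own clip. The rule, the clip sizes, and the helper names (`clip_ids`, `spacetime_sparse_mask`, `allowed_pairs`) are illustrative assumptions rather than the paper's definition, and the sketch ignores scale-level causality inside each clip.

```python
def clip_ids(tokens_per_clip):
    """Map each token position to the index of the clip it belongs to."""
    ids = []
    for clip, n in enumerate(tokens_per_clip):
        ids.extend([clip] * n)
    return ids


def spacetime_sparse_mask(tokens_per_clip):
    """Return mask[q][k] == True when query token q may attend to key token k.

    Assumed rule (hypothetical, for illustration only): a token in clip i
    sees clip 0 (the first frame), clip i - 1 (the previous clip) and clip i.
    """
    ids = clip_ids(tokens_per_clip)
    mask = []
    for qc in ids:
        mask.append([kc == 0 or kc == qc or kc == qc - 1 for kc in ids])
    return mask


def allowed_pairs(mask):
    """Count the query/key pairs that the mask keeps."""
    return sum(sum(row) for row in mask)


if __name__ == "__main__":
    sizes = [4] * 6  # hypothetical: first frame + 5 clips, 4 tokens each
    ids = clip_ids(sizes)
    sparse = spacetime_sparse_mask(sizes)
    # Baseline: every clip attends to all earlier clips and itself.
    causal = [[kc <= qc for kc in ids] for qc in ids]
    print(allowed_pairs(sparse), allowed_pairs(causal))
```

For six clips of four tokens each, the assumed rule keeps 240 of the 336 pairs that full clip-causal attention would allow, and the gap widens as clips are added. In PyTorch, a rule of this shape could be written as a `mask_mod(b, h, q_idx, kv_idx)` callable and passed to `torch.nn.attention.flex_attention.create_block_mask`; the plain-Python version here only counts surviving pairs.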

The research team demonstrated the system’s capabilities through generated images and videos, showcasing its potential for creative applications. They also developed a method for refining video captions and generating interactive prompts, allowing users to guide the video creation process.

Example 1: A golden retriever puppy plays in a sunlit living room with a plush grey rug. A red chew toy, a blue water bowl, and a green plant are nearby. The puppy sniffs the red chew toy with its nose, picks it up, and runs towards the water bowl. It drops the toy and begins lapping water from the bowl.

Example 2: A chef wearing a white hat and apron stands in a professional kitchen with stainless steel counters. A cutting board with sliced carrots, a silver pot on a stove, and a wooden spoon are visible. The chef stirs the contents of the pot with the spoon, adds the sliced carrots from the cutting board, and pours olive oil into the pot. They taste the contents, nodding with approval.

Example 3: A young woman with long brown hair sits at a desk in a home office. A laptop, a stack of books, and a coffee mug are on the desk. The woman types on the laptop with both hands, closes it, and reaches for the stack of books. She picks up a book and begins to read it, then puts the book down and takes a sip from the coffee mug.

Discrete Video Synthesis with Unified Autoregressive Modeling

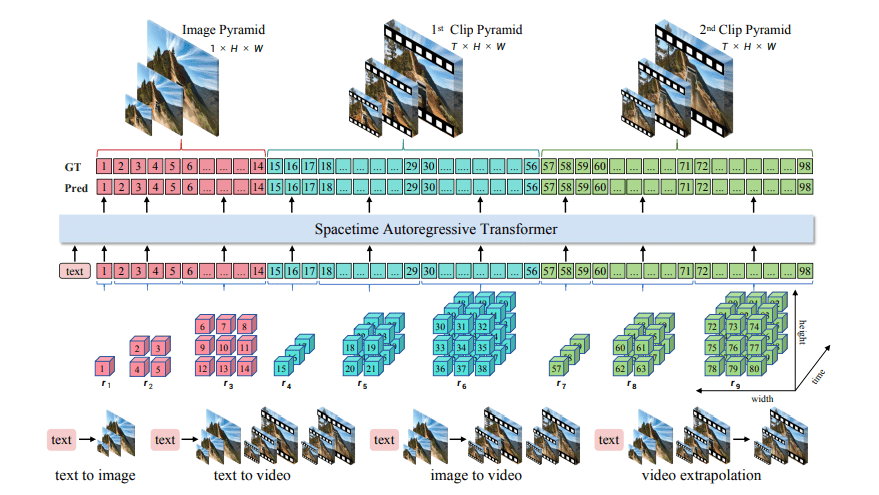

Scientists engineered InfinityStar, a unified framework for synthesizing high-resolution images and videos, building on recent advances in autoregressive modeling. The study pioneers a discrete approach that jointly captures spatial and temporal dependencies within a single architecture, enabling diverse generation tasks including text-to-image, text-to-video, image-to-video, and long interactive video synthesis. The researchers model each video as an image pyramid for the first frame followed by multiple clip pyramids, decoupling static appearance from dynamic motion; this lets the model naturally inherit text-to-image capabilities while addressing video-specific challenges. To improve discrete reconstruction quality, the team inherited knowledge from a continuous video tokenizer when training the discrete tokenizer that converts video into tokens the model can process.

They introduced Stochastic Quantizer Depth during tokenizer training, which alleviates the imbalanced distribution of information across scales and ensures comprehensive data representation. The scientists also proposed Semantic Scales Repetition, a technique that refines the predictions of the early semantic scales within a video, significantly enhancing fine-grained details and complex motions in the generated content. InfinityStar was trained on large-scale video corpora to support generating videos at up to 720p resolution with variable durations.

The resulting system achieves a score of 83.74 on the VBench benchmark, outperforming all existing autoregressive models and even surpassing the industry-leading diffusion model HunyuanVideo, which scores 83.24. Notably, InfinityStar generates a 5-second, 720p video approximately ten times faster than leading diffusion-based methods, a substantial improvement in inference speed. This work establishes InfinityStar as the first discrete autoregressive model capable of producing industrial-level 720p videos, marking a significant advancement in efficient, high-quality video generation.
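Semantic Scales Repetition is described here only at a high level. One plausible reading, sketched below under stated assumptions, is that the coarsest "semantic" scales of a pyramid are predicted several times before the model moves on to finer scales. The scale schedule, the number of semantic scales, the repeat count, and the helpers `repeat_semantic_scales` and `token_count` are hypothetical choices for illustration, not the paper's configuration.

```python
def repeat_semantic_scales(schedule, num_semantic, repeats):
    """Return a schedule in which each of the first `num_semantic` scales
    appears `repeats` times in a row (hypothetical form of the technique)."""
    if not 0 <= num_semantic <= len(schedule):
        raise ValueError("num_semantic must lie within the schedule")
    if repeats < 1:
        raise ValueError("repeats must be at least 1")
    out = []
    for i, scale in enumerate(schedule):
        count = repeats if i < num_semantic else 1
        out.extend([scale] * count)
    return out


def token_count(schedule):
    """Total number of tokens predicted across all (height, width) scales."""
    return sum(h * w for h, w in schedule)


if __name__ == "__main__":
    # Hypothetical coarse-to-fine spatial schedule for one pyramid.
    base = [(1, 1), (2, 2), (4, 4), (8, 8), (16, 16), (32, 32)]
    ssr = repeat_semantic_scales(base, num_semantic=3, repeats=3)
    print(ssr)
    print(token_count(base), token_count(ssr))
```

Because early scales contain very few tokens, repeating them is cheap: in this toy schedule, repeating the three coarsest scales three times raises the token count from 1365 to 1407, roughly 3% more work.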

InfinityStar Achieves Fast 720p Video Generation

The research team presents InfinityStar, a novel framework for generating high-resolution images and dynamic videos that achieves significant gains in video synthesis efficiency and quality. InfinityStar scores 83.74 on the VBench benchmark, surpassing all existing autoregressive methods and even exceeding the performance of some diffusion-based competitors. Notably, the system generates a 5-second, 720p video approximately ten times faster than leading diffusion methods, a substantial improvement in inference speed. It is the first discrete autoregressive video generator capable of producing industrial-level 720p videos.

To address the challenges of incorporating time into video generation, the team developed a spacetime pyramid modeling framework. This approach decomposes a video into sequential clips: the first frame encodes static appearance cues, and the remaining frames are divided into clips of equal duration. The team showed that uniform temporal growth leads to flickering videos, while a constant time pyramid limits knowledge transfer from image-to-video models. The new framework balances appearance and motion, allowing the model to fit both accurately. The team also improved video tokenizer training, which is computationally heavier than image tokenizer training.
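The clip decomposition can be made concrete with a small partitioning helper. This is a minimal sketch assuming frames are indexed from 0, the first frame forms its own group (the image pyramid), and the remaining frames divide evenly into clips of a fixed length; `split_into_clips` and the frame counts are hypothetical, and a real system would operate on tokenizer latents rather than raw frame indices.

```python
def split_into_clips(num_frames, clip_len):
    """Partition frame indices into [first frame] + equal-length clips.

    Assumes (num_frames - 1) is divisible by clip_len; group 0 holds only
    the first frame, which carries the static appearance cues.
    """
    if num_frames < 1 or clip_len < 1:
        raise ValueError("num_frames and clip_len must be positive")
    if (num_frames - 1) % clip_len != 0:
        raise ValueError("frames after the first must divide evenly into clips")
    groups = [[0]]  # image pyramid: the first frame on its own
    for start in range(1, num_frames, clip_len):
        groups.append(list(range(start, start + clip_len)))  # one clip pyramid
    return groups


if __name__ == "__main__":
    print(split_into_clips(9, 4))  # hypothetical 9-frame video, 4-frame clips
```

Keeping the first frame in its own group means its pyramid is exactly an image-generation problem, which is how the framework can reuse text-to-image capabilities while the later clips handle motion.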

By inheriting knowledge from a pre-trained continuous video tokenizer (a video VAE) and applying stochastic quantizer depth regularization, the team significantly accelerated convergence and improved reconstruction quality. Stochastic quantizer depth randomly discards scales during training, which balances the distribution of information across scales, helps the model learn more effectively from early scales, and improves the global semantic representation. The authors report that this regularization markedly improves the clarity of frames reconstructed from early scales, enhancing the overall quality and coherence of the generated videos.
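To show the mechanism rather than the paper's exact recipe, the sketch below implements a toy multi-scale residual quantizer on a 1-D latent together with a stochastic depth sampler. Assumptions: a simple rounding quantizer stands in for the paper's discrete quantizer, a hand-written list stands in for video VAE features, and "discarding scales" is modeled as truncating the pyramid after a randomly chosen depth with probability `p_drop`. All function names are hypothetical.

```python
import random


def downsample(x, size):
    """Average-pool a 1-D signal to `size` values (len(x) divisible by size)."""
    block = len(x) // size
    return [sum(x[i * block:(i + 1) * block]) / block for i in range(size)]


def upsample(x, length):
    """Nearest-neighbour upsampling back to `length` values."""
    block = length // len(x)
    return [v for v in x for _ in range(block)]


def quantize(x, step):
    """Stand-in scalar quantizer: snap each value to the nearest multiple of step."""
    return [round(v / step) * step for v in x]


def residual_quantize(latent, scales, step=0.25, depth=None):
    """Coarse-to-fine residual quantization using only the first `depth` scales."""
    depth = len(scales) if depth is None else depth
    residual = list(latent)
    recon = [0.0] * len(latent)
    for size in scales[:depth]:
        up = upsample(quantize(downsample(residual, size), step), len(latent))
        recon = [r + u for r, u in zip(recon, up)]
        residual = [r - u for r, u in zip(residual, up)]
    return recon


def sample_depth(num_scales, p_drop, rng):
    """Assumed form of stochastic quantizer depth: with probability p_drop,
    keep only a random prefix of 1..num_scales-1 scales."""
    if rng.random() < p_drop:
        return rng.randint(1, num_scales - 1)
    return num_scales


def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)


if __name__ == "__main__":
    rng = random.Random(0)
    latent = [0.9, 1.1, -0.4, -0.6, 0.3, 0.2, -1.0, -0.8]  # toy stand-in
    scales = [1, 2, 4, 8]
    for _ in range(3):
        d = sample_depth(len(scales), p_drop=0.5, rng=rng)
        print(d, round(mse(latent, residual_quantize(latent, scales, depth=d)), 4))
```

In training, the reconstruction loss would be computed on the truncated output, pushing the encoder and decoder to place more information in the early scales; this toy has no learnable parts, so it only illustrates how the truncation is sampled and applied.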

Faster Video Generation with Discrete Models

InfinityStar represents a significant advance in video generation, establishing a new benchmark for discrete autoregressive models and achieving competitive results against diffusion-based approaches. The team developed a unified framework capable of synthesizing high-resolution images and dynamic videos, seamlessly integrating spatial and temporal prediction within a discrete architecture. Extensive evaluation demonstrates that InfinityStar outperforms existing autoregressive video models and generates a 720p video in approximately one-tenth the time required by leading diffusion methods. Furthermore, the framework extends to support long interactive video generation, maintaining consistency in generated content over extended sequences.

While achieving state-of-the-art performance, the authors acknowledge certain limitations. A trade-off exists between image quality and motion fidelity in high-motion scenes, occasionally resulting in compromised fine-grained visual details. The model’s training and parameter size were constrained by available computational resources, potentially limiting ultimate performance. The inference pipeline also has not been fully optimized, suggesting opportunities for future speed improvements. Future research directions include scaling the model and further optimizing the inference process to enhance both quality and efficiency, potentially unlocking even greater capabilities in rapid, long video generation.

👉 More information

🗞 InfinityStar: Unified Spacetime AutoRegressive Modeling for Visual Generation

🧠 ArXiv: https://arxiv.org/abs/2511.04675