Geometric frustration in materials gives rise to fascinating and often unexpected physical properties, but simulating these systems is computationally demanding. Pratik Brahma, Junghoon Han, and Tamzid Razzaque of the University of California, Berkeley, together with Saavan Patel of Berkeley and InfinityQ Technology Inc. and Sayeef Salahuddin of Berkeley and Lawrence Berkeley National Laboratory, now demonstrate an approach that eases this bottleneck. The team developed Convolutional Restricted Boltzmann Machines (CRBMs), a type of neural network that captures the inherent symmetries of frustrated lattices, and, crucially, implemented them in dedicated digital hardware. The hardware simulates systems containing hundreds of interacting spins, reproduces known phases of matter, and characterises complex spin behaviours, while achieving speedups of three to five orders of magnitude over GPU-based implementations. The work establishes a pathway towards scalable, reprogrammable hardware for simulating large-scale physical systems and opens new avenues for materials discovery.

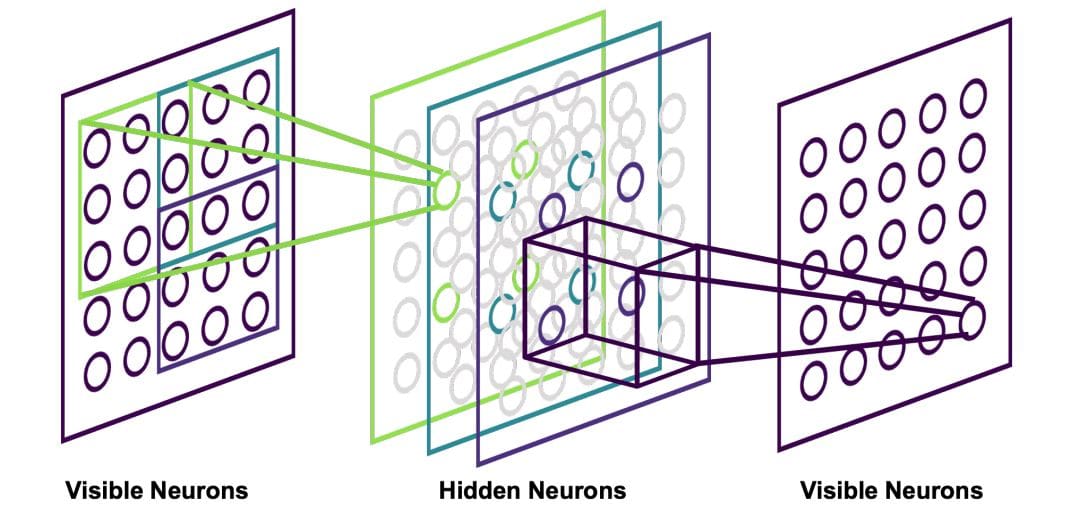

Frustrated magnetism gives rise to exotic phases such as spin liquids, and simulating these systems accurately is computationally demanding, especially for larger lattices. The team used restricted Boltzmann machines (RBMs), a type of generative neural network, to learn the probability distribution of low-energy states of the Shastry-Sutherland (SS) model, a system known for exhibiting spin liquid behavior. Implementing the RBM on an FPGA allows parallel processing and custom hardware design, which sidesteps limitations of CPU- and GPU-based simulations and offers potential gains in speed and energy efficiency. Efficient data transfer and optimized memory management were crucial for achieving high performance. Recognizing the limitations of traditional Monte Carlo methods, the team turned to machine learning to improve sampling efficiency. They developed a convolutional RBM (CRBM) formulation that directly captures the interactions within the SS lattice and uses its translational symmetry to build a more efficient probabilistic neural network. Fully connected RBMs, by contrast, become inefficient when representing large systems.
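To make the target of the sampling concrete, here is a minimal sketch of the energy function for an Ising model on a Shastry-Sutherland lattice. The article does not spell out the Hamiltonian, so the details are assumptions: the lattice is drawn as a periodic square grid with a 2×2 unit cell, `j_nn` couples nearest neighbours, `j_diag` couples one diagonal pair on alternating plaquettes, and `field` is an external magnetic field. The function and parameter names are hypothetical.

```python
def ss_ising_energy(spins, j_nn=1.0, j_diag=1.0, field=0.0):
    """Energy of a periodic L x L configuration with entries +1 or -1.

    Assumed convention: positive couplings are antiferromagnetic, and the
    diagonal bonds sit on plaquettes whose lower-left corner has both
    coordinates even (main diagonal) or both odd (anti-diagonal).
    """
    size = len(spins)
    if size % 2 != 0:
        raise ValueError("lattice side must be even to tile 2x2 unit cells")
    energy = 0.0
    for x in range(size):
        for y in range(size):
            s = spins[x][y]
            right = spins[(x + 1) % size][y]
            up = spins[x][(y + 1) % size]
            # Nearest-neighbour bonds: count each bond once (right and up).
            energy += j_nn * s * (right + up)
            # Diagonal bonds: exactly one per site under this convention.
            if x % 2 == 0 and y % 2 == 0:
                energy += j_diag * s * spins[(x + 1) % size][(y + 1) % size]
            elif x % 2 == 1 and y % 2 == 1:
                energy += j_diag * right * up
            # Coupling to the external field.
            energy -= field * s
    return energy


all_up = [[1] * 4 for _ in range(4)]
neel = [[1 if (x + y) % 2 == 0 else -1 for y in range(4)] for x in range(4)]
print(ss_ising_energy(all_up, j_nn=1.0, j_diag=2.0, field=0.5))  # 40.0
print(ss_ising_energy(neel, j_nn=1.0, j_diag=2.0))               # -16.0
```

The checkerboard (Néel) pattern satisfies every nearest-neighbour bond but leaves every diagonal bond unsatisfied, which is the frustration that makes low-energy states hard to sample.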

The core innovation lies in convolutional filters sized to match the unit cell, which capture the physical interactions and keep the number of free parameters independent of system size. This design improves both representation and Monte Carlo sampling, enabling faster convergence and a larger number of uncorrelated samples. To accelerate simulations, the team built a dedicated digital hardware accelerator tailored to the CRBM architecture that updates the entire spin lattice simultaneously. Experiments on lattices of up to 324 logical spins recovered all known phases of the Shastry-Sutherland (SS) Ising model, a geometrically frustrated system exhibiting exotic magnetic behavior, including the long-range ordered fractional plateau. The hardware also characterizes spin behavior at critical points and within spin liquid phases, achieving a speedup of 3 to 5 orders of magnitude over GPU-based implementations while offering superior scalability, room-temperature operation, and reprogrammability. These results confirm that the CRBM hardware can accurately represent and explore the complex energy landscapes of frustrated systems, supporting its potential for materials discovery and fundamental physics research. The advance rests on the CRBM's ability to efficiently capture interactions within the SS lattice, which is characterized by competing nearest and next-nearest neighbor interactions.
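The simultaneous lattice update corresponds, in software terms, to block Gibbs sampling: all hidden units are sampled given the spins, then all spins given the hidden units. The sketch below shows one such step for a generic convolutional RBM and is not the authors' hardware design. It assumes binary (0/1) units, periodic boundaries, stride-1 filters, and a single shared visible bias; all function names are hypothetical. The Python loops run sequentially, whereas dedicated hardware can evaluate every site of a layer in parallel because units within a layer are conditionally independent.

```python
import math
import random


def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)


def sample_hidden(visible, filters, hidden_bias, rng):
    """Sample every hidden unit given the visible lattice."""
    size = len(visible)
    hidden = []
    for k, filt in enumerate(filters):
        layer = [[0] * size for _ in range(size)]
        for x in range(size):
            for y in range(size):
                act = hidden_bias[k]
                # The same filter weights are reused at every position.
                for a, row in enumerate(filt):
                    for b, w in enumerate(row):
                        act += w * visible[(x + a) % size][(y + b) % size]
                layer[x][y] = 1 if rng.random() < sigmoid(act) else 0
        hidden.append(layer)
    return hidden


def sample_visible(hidden, filters, visible_bias, rng):
    """Sample every visible unit given all hidden layers."""
    size = len(hidden[0])
    visible = [[0] * size for _ in range(size)]
    for x in range(size):
        for y in range(size):
            act = visible_bias
            for k, filt in enumerate(filters):
                for a, row in enumerate(filt):
                    for b, w in enumerate(row):
                        # Transposed convolution: hidden units whose
                        # filter window covers site (x, y).
                        act += w * hidden[k][(x - a) % size][(y - b) % size]
            visible[x][y] = 1 if rng.random() < sigmoid(act) else 0
    return visible


def gibbs_step(visible, filters, hidden_bias, visible_bias, rng):
    """One block Gibbs sweep: hidden given visible, then visible given hidden."""
    hidden = sample_hidden(visible, filters, hidden_bias, rng)
    return sample_visible(hidden, filters, visible_bias, rng), hidden


rng = random.Random(0)
filters = [[[0.5, -0.3], [-0.3, 0.5]]]  # one illustrative 2x2 filter
lattice = [[rng.randint(0, 1) for _ in range(6)] for _ in range(6)]
lattice, hidden = gibbs_step(lattice, filters, [0.0], 0.0, rng)
```

Mapping 0/1 units to Ising spins uses s = 2v - 1; the filter values here are placeholders, not trained weights.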

By exploiting the lattice's translational symmetry, the team designed convolutional filters matching the size of the unit cell, giving a more compact representation than fully connected networks. The number of parameters needed to represent the system is significantly reduced and independent of system size, which in turn enables faster Monte Carlo sampling. Experiments demonstrate a speedup of 3 to 5 orders of magnitude over equivalent algorithms running on graphics processing units (GPUs), with processing times ranging from 33 nanoseconds to 120 milliseconds. The hardware also characterizes spin behavior at critical points and within spin liquid phases, providing insight into the emergent properties of these materials.
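The parameter saving from weight sharing can be illustrated with simple counting. The sketch below compares a fully connected RBM with a convolutional one. The specific choices are assumptions made for illustration and are not taken from the paper: as many hidden units as spins in the fully connected case, and four 2×2 filters with a single shared visible bias in the convolutional case.

```python
def fully_connected_rbm_params(n_visible, n_hidden):
    """Weights plus one bias per visible and per hidden unit."""
    return n_visible * n_hidden + n_visible + n_hidden


def convolutional_rbm_params(n_filters, filter_side):
    """Shared filter weights, one bias per filter, one shared visible bias."""
    return n_filters * filter_side * filter_side + n_filters + 1


for n_spins in (36, 144, 324):
    dense = fully_connected_rbm_params(n_spins, n_spins)
    conv = convolutional_rbm_params(n_filters=4, filter_side=2)
    print(n_spins, dense, conv)
# 36 1368 21
# 144 21024 21
# 324 105624 21
```

Under these assumptions the fully connected count grows quadratically with the number of spins, while the convolutional count does not depend on lattice size at all.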

Analysis of the spin structure factor of ground state configurations connects the simulations to experimental observations such as diffuse neutron scattering. The team's results demonstrate performance within one order of magnitude of quantum annealers, while offering superior scalability, room-temperature operation, and the ability to be reprogrammed for different systems. Focusing on the Shastry-Sutherland Ising lattice, the researchers analyzed spin configurations, explored emergent classical spin liquid phases, and determined phase diagrams, with results comparable to established simulation methods. Across the various magnetic phases, the hardware implementation delivers a speedup of three to five orders of magnitude (33 ns to 120 ms) over conventional GPU-based sampling techniques. This acceleration stems from key architectural features, including optimized bitwise operations, fixed-point weight representations, and, crucially, the exploitation of translational symmetry within the system.
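The spin structure factor mentioned above can be computed directly from a sampled configuration. The following is a minimal sketch under stated assumptions: spins sit on a square grid with unit spacing (ignoring the true atomic positions of a real Shastry-Sutherland material), and the normalisation S(q) = |Σ s_r e^{i q·r}|² / N is one common convention, not necessarily the one used in the paper. The function name is hypothetical.

```python
import cmath
import math


def structure_factor(spins, qx, qy):
    """Static structure factor S(q) of an L x L spin configuration."""
    size = len(spins)
    amplitude = 0j
    for x in range(size):
        for y in range(size):
            # Fourier transform of the spin pattern at wavevector (qx, qy).
            amplitude += spins[x][y] * cmath.exp(1j * (qx * x + qy * y))
    return abs(amplitude) ** 2 / (size * size)


neel = [[1 if (x + y) % 2 == 0 else -1 for y in range(4)] for x in range(4)]
peak = structure_factor(neel, math.pi, math.pi)  # sharp peak for Neel order
flat = structure_factor(neel, 0.0, 0.0)          # no net magnetisation
```

Long-range order shows up as sharp peaks whose height scales with the number of spins, whereas a spin liquid spreads the weight into broad features, which is what diffuse neutron scattering measures.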

Performance is within two orders of magnitude of that achieved by quantum annealers, while offering advantages in scalability and the ability to be reprogrammed for different simulations. Seamless integration with a standard CPU host allows the hardware to accelerate variational Monte Carlo algorithms, with the CRBM serving as the variational wavefunction. The researchers acknowledge that the current implementation is specific to systems with translational symmetry, so it does not apply directly to materials that lack it. Future work will likely focus on extending the architecture to accommodate more complex symmetries and on simulating a wider range of quantum materials and frustrated lattices, with the aim of faster and more efficient large-scale simulations.

👉 More information

🗞 Hardware Acceleration of Frustrated Lattice Systems using Convolutional Restricted Boltzmann Machine

🧠 ArXiv: https://arxiv.org/abs/2511.20911