Current benchmarks struggle to accurately assess algorithms that restore motion-degraded video, prompting George Ciubotariu, Zhuyun Zhou, Zongwei Wu, and Radu Timofte from the University of Würzburg to develop new, more challenging datasets. They introduce MIORe and VAR-MIORe, which capture a wide range of realistic motion scenarios with high-frame-rate detail and professional-grade optics. MIORe creates consistent motion blur through adaptive frame averaging, while VAR-MIORe offers explicit control over motion intensity, ranging from subtle movements to extreme actions. Both datasets provide high-resolution ground truths intended to push the boundaries of video restoration research and to support algorithms that handle complex, dynamic scenes with greater fidelity.

Realistic Motion Restoration Benchmarks with MIORe

This paper introduces MIORe and VAR-MIORe, two datasets designed to benchmark and advance research on motion restoration tasks, specifically motion deblurring, video frame interpolation, and optical flow estimation. Existing datasets often lack realism or comprehensive motion coverage, or they simulate only a narrow set of degradations. This hinders the development of robust and generalizable algorithms. The authors address these limitations with real-world captures from high-speed cameras that span a diverse range of motion types and intensities. MIORe is a multi-task dataset with flow-based regularized motion blur, aimed at realistic and fair benchmarking.

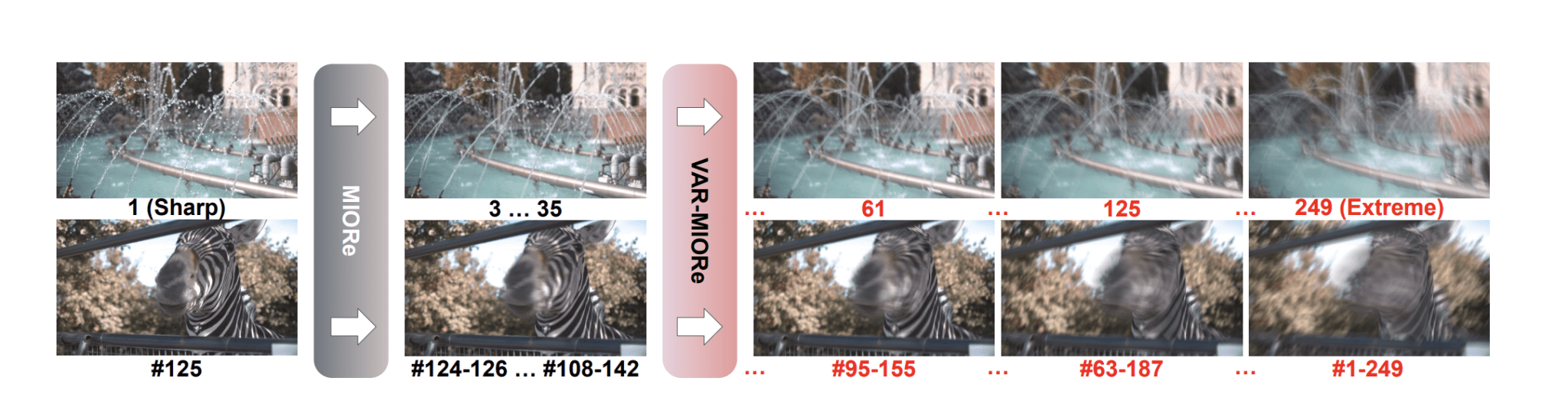

VAR-MIORe extends MIORe with a variable range of motion magnitudes, from minimal to extreme, which pushes restoration algorithms to their limits. It is the first dataset of its kind to offer such a wide spectrum of motion intensities. Researchers can therefore analyze algorithms under diverse conditions and measure how performance changes across the whole intensity range. Evaluations on the two datasets show how difficult diverse and extreme motion remains, exposing the performance boundaries of current methods and pointing to areas for improvement. By filling this gap with a more realistic and challenging benchmark, the work supports more robust and generalizable algorithms and opens new avenues for multi-task approaches to complex optical degradations.

High-Speed Data for Motion Restoration Evaluation

Scientists developed MIORe and VAR-MIORe to overcome limitations in current motion restoration benchmarks. Sequences are recorded at 1000 FPS with professional-grade optics so that complex motion is represented faithfully. This includes intricate ego-camera movements, dynamic interactions between multiple subjects, and depth-dependent blur effects. The central technique is adaptive frame averaging: the number of frames to blend is chosen from both the mean and the maximum optical flow. This produces consistent, controlled motion blur while preserving the sharp reference frames that video frame interpolation and optical flow estimation evaluations require.
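This summary does not give the paper's exact selection rule, so the following is only a hypothetical illustration of flow-guided frame averaging. It assumes that per-step mean and maximum flow magnitudes (in pixels) have already been computed by some optical flow estimator. The names `select_window`, `average_frames`, `make_blur_pair`, `target_mean`, and `cap_max` are invented for this sketch. The rule works as follows:

- Frames are added until the accumulated mean displacement reaches a target.
- Adding stops early if the accumulated maximum displacement would exceed a cap.
- The blurred input is the pixel-wise mean of the window.
- The sharp reference is the middle frame of the window.

```python
from typing import List, Sequence, Tuple

Frame = List[float]  # hypothetical representation: a flattened grayscale frame


def select_window(mean_flow: Sequence[float], max_flow: Sequence[float],
                  target_mean: float, cap_max: float, min_frames: int = 2) -> int:
    """Hypothetical rule for how many consecutive frames to average.

    mean_flow[i] and max_flow[i] are the mean and maximum flow magnitudes
    (in pixels) between frame i and frame i + 1.
    """
    acc_mean = 0.0  # accumulated mean displacement inside the window
    acc_max = 0.0   # accumulated worst-case displacement inside the window
    n = 1           # the window always contains the first frame
    for m, mx in zip(mean_flow, max_flow):
        reached_target = acc_mean >= target_mean
        would_exceed_cap = acc_max + mx > cap_max
        if n >= min_frames and (reached_target or would_exceed_cap):
            break
        acc_mean += m
        acc_max += mx
        n += 1
    return n


def average_frames(frames: Sequence[Frame]) -> Frame:
    """Pixel-wise mean of equally sized frames (the synthetic blur)."""
    count = len(frames)
    return [sum(pixels) / count for pixels in zip(*frames)]


def make_blur_pair(frames: Sequence[Frame], mean_flow: Sequence[float],
                   max_flow: Sequence[float], target_mean: float,
                   cap_max: float) -> Tuple[Frame, Frame]:
    """Return (blurred input, sharp reference) built from the start of a clip."""
    n = select_window(mean_flow, max_flow, target_mean, cap_max)
    window = list(frames[:n])  # never longer than the clip itself
    center = window[len(window) // 2]  # middle frame (the later one for even windows)
    return average_frames(window), list(center)


clip = [[0.0, 1.0], [2.0, 1.0], [7.0, 4.0], [9.0, 9.0]]
blurred, sharp = make_blur_pair(clip, [2.0, 2.0, 2.0], [5.0, 5.0, 5.0],
                                target_mean=4.0, cap_max=100.0)
print(blurred, sharp)  # → [3.0, 2.0] [2.0, 1.0]
```

The two parameters play different roles:

- The mean-flow target keeps overall blur strength comparable across clips.
- The maximum-flow cap stops the fastest-moving regions from being smeared beyond recognition.

Both values would need tuning, and the actual dataset pipeline may weigh the two statistics differently.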

Because these key frames stay sharp, the tasks become harder: algorithms must rely on subtle spatial cues to estimate motion accurately. VAR-MIORe extends this by capturing a variable range of motion magnitudes, from minimal to extreme movements, which makes it the first benchmark to explicitly control motion amplitude. The frame averaging process is adjusted to simulate both low- and high-displacement scenarios, allowing a finer-grained analysis of motion than earlier benchmarks. Most sequences are captured in real-world environments, which introduces realistic optical degradations and scene types rarely found in existing datasets. Industrial-grade cameras and professional lenses deliver high-resolution imagery across a broad spectrum of motion types, including smooth panning, dolly zooms, and complex barrel rolls. Together, the diverse motion scenarios, variable amplitude, high-resolution imaging, and flexible data-splitting strategies yield a versatile, large-scale dataset. The result is a unified platform for multi-task learning and advanced restoration methods.
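A single high-frame-rate clip can yield several motion magnitudes. One way to picture this is to pick, for each target displacement, the smallest window whose accumulated mean flow reaches that target. The sketch below does this with a cumulative sum and a binary search. The function name `frames_for_levels`, the level names, and the pixel targets are hypothetical and are not the values used to build VAR-MIORe.

```python
from bisect import bisect_left
from itertools import accumulate
from typing import Dict, Optional, Sequence


def frames_for_levels(mean_flow: Sequence[float],
                      levels: Dict[str, float]) -> Dict[str, Optional[int]]:
    """Map each motion level to the number of frames needed to reach it.

    mean_flow[i] is the non-negative mean flow magnitude in pixels between
    frame i and frame i + 1, so the cumulative sum is non-decreasing and can
    be binary-searched. Levels the clip cannot reach map to None.
    """
    cumulative = list(accumulate(mean_flow))
    result: Dict[str, Optional[int]] = {}
    for name, target in levels.items():
        idx = bisect_left(cumulative, target)  # first step where cumulative >= target
        # idx + 1 flow steps are spanned by idx + 2 frames
        result[name] = idx + 2 if idx < len(cumulative) else None
    return result


# Hypothetical levels, in pixels of accumulated mean displacement.
levels = {"minimal": 2.0, "moderate": 6.0, "extreme": 20.0}
print(frames_for_levels([1.0] * 8, levels))
# → {'minimal': 3, 'moderate': 7, 'extreme': None}
```

Returning `None` for unreachable levels makes it explicit when a clip is too slow to supply a given intensity. This is one reason a dataset spanning minimal to extreme motion needs captures with genuinely fast content, not just longer averaging windows.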

Realistic Motion Blur Datasets for Video Analysis

Scientists have introduced two datasets, MIORe and VAR-MIORe, designed to advance research in motion restoration and analysis. They address critical limitations of existing benchmarks by capturing a broad spectrum of motion scenarios with high fidelity and explicit control. These include complex ego-camera movements, dynamic interactions between multiple subjects, and depth-dependent blur effects. MIORe generates consistent motion blur through adaptive frame averaging while preserving the sharp inputs that video frame interpolation and flow estimation require. VAR-MIORe adds a variable range of motion magnitudes, from minimal to extreme, which makes it the first benchmark with explicit control over motion amplitude.

This lets researchers analyze algorithms under diverse conditions and assess performance across the entire spectrum of motion intensities. The high-resolution ground truths support robust evaluation in both controlled experiments and adverse conditions, fostering the development of next-generation restoration algorithms. The datasets’ versatility comes from unifying the evaluation of several restoration tasks within a single framework. This encourages multi-task learning approaches that share information across tasks. Such a holistic approach is critical for building robust, generalizable algorithms that can handle the complexity of real-world degradations.
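Assessing performance across the motion spectrum amounts to stratifying a per-sample quality metric by motion magnitude. The sketch below uses PSNR, a standard restoration metric, and groups scores into bins by each sample's mean displacement. The function `psnr_by_motion`, the bin edges, and the `(displacement, prediction, ground truth)` sample format are assumptions for illustration, not the paper's evaluation protocol.

```python
import math
from bisect import bisect_right
from typing import Dict, List, Sequence, Tuple


def psnr(pred: Sequence[float], gt: Sequence[float], max_val: float = 1.0) -> float:
    """Peak signal-to-noise ratio in dB for two equally sized flat images."""
    mse = sum((p - g) ** 2 for p, g in zip(pred, gt)) / len(gt)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_val ** 2 / mse)


def psnr_by_motion(samples: Sequence[Tuple[float, Sequence[float], Sequence[float]]],
                   edges: Sequence[float]) -> Dict[int, float]:
    """Mean PSNR per motion bin (hypothetical stratified evaluation).

    Each sample is (mean displacement in pixels, prediction, ground truth).
    Bin 0 holds displacements below edges[0]; bin k holds displacements in
    [edges[k-1], edges[k]); the last bin holds everything >= edges[-1].
    """
    bins: Dict[int, List[float]] = {}
    for displacement, pred, gt in samples:
        k = bisect_right(edges, displacement)
        bins.setdefault(k, []).append(psnr(pred, gt))
    return {k: sum(v) / len(v) for k, v in sorted(bins.items())}


samples = [(1.0, [0.0], [0.1]), (1.5, [0.0], [0.01]), (30.0, [0.5], [0.5])]
print(psnr_by_motion(samples, edges=[5.0, 20.0]))
```

Reporting per-bin averages rather than one global mean shows when a method degrades sharply only at the extreme end of the motion range, which a single aggregate score would hide.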

Researchers can now evaluate motion deblurring, video frame interpolation, and optical flow estimation on the same data. The datasets also improve on existing benchmarks, which often rely on synthetic data or limited motion types. By capturing real-world motion with high fidelity, MIORe and VAR-MIORe are positioned to become important resources for both academic and industrial research. They could stimulate advances in multi-task learning and in restoration techniques that handle the full complexity of real-world imaging challenges.

Datasets Reveal Limits of Motion Restoration

The research introduces two new datasets, MIORe and VAR-MIORe, designed to advance motion restoration in images and videos. These datasets address limitations in existing benchmarks by capturing a wider range of motion scenarios, including complex camera movements and varying degrees of motion blur. MIORe generates consistent motion blur using flow-based regularization, while VAR-MIORe uniquely offers control over the intensity of motion, ranging from minimal to extreme. Evaluations using these datasets reveal the performance boundaries of current state-of-the-art algorithms under challenging conditions, highlighting areas for improvement in image and video restoration techniques. The datasets provide high-resolution ground truths, enabling more robust and fair comparisons between different approaches. These resources are intended to facilitate the development of advanced, multi-task algorithms capable of handling a broad spectrum of optical degradations encountered in real-world imaging.

👉 More information

🗞 MIORe & VAR-MIORe: Benchmarks to Push the Boundaries of Restoration

🧠 arXiv: https://arxiv.org/abs/2509.06803