The connection between visual patterns and the processes that create them is a fundamental aspect of visual understanding, and a new resource now allows researchers to explore this link across diverse fields. Sagi Eppel and Alona Strugatski, from the Weizmann Institute of Science, present SciTextures, a large-scale collection of over 100,000 images of textures and patterns sourced from physics, biology, mathematics, art, and many other disciplines. The dataset pairs these visuals with the models and code used to generate them, offering a tool for evaluating artificial intelligence. Researchers use SciTextures to assess an AI's ability not only to recognize patterns but also to infer the mechanisms behind them, and even to recreate those mechanisms through simulation. The results suggest that vision-language models can move beyond simple image recognition toward understanding and modeling physical systems.

Spanning more than 1,200 different models and over 100,000 images of patterns and textures from physics, art, and beyond, the dataset is a resource for exploring the connection between visual patterns and the mechanisms that produce them. An agentic AI pipeline autonomously collects models and implements them in a standardized format, which keeps the collection consistent and lets it scale.
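To make the idea of a model "implemented in a standardized format" concrete, here is a minimal sketch. The interface is an assumption for illustration only: the function name `generate_texture`, its parameters, and the choice of an elementary cellular automaton are hypothetical and are not taken from the SciTextures code. The point is simply that one generative model, called with different seeds, yields many images that share the same underlying mechanism.

```python
import random
from typing import List

Grid = List[List[int]]


def generate_texture(rule: int = 30, size: int = 64, seed: int = 0) -> Grid:
    """Hypothetical standardized entry point: same arguments, same pattern.

    Runs an elementary (1D, two-state) cellular automaton from a random
    first row and stacks the successive rows into a size x size binary grid.
    """
    if not 0 <= rule <= 255:
        raise ValueError("rule must be in the range 0..255")
    if size < 1:
        raise ValueError("size must be positive")

    rng = random.Random(seed)  # local generator keeps runs reproducible
    grid: Grid = [[rng.randint(0, 1) for _ in range(size)]]

    for _ in range(size - 1):
        prev = grid[-1]
        nxt = []
        for i in range(size):
            # Neighbourhood with wrap-around at both edges.
            left, centre, right = prev[i - 1], prev[i], prev[(i + 1) % size]
            index = (left << 2) | (centre << 1) | right
            # Bit `index` of the rule number gives the next cell state.
            nxt.append((rule >> index) & 1)
        grid.append(nxt)
    return grid
```

Calling `generate_texture(rule=30, seed=s)` for several values of `s` gives different images from one model, which is the kind of image-to-model pairing the dataset is built around.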

Vision-Language Models Understand Procedural Content Generation

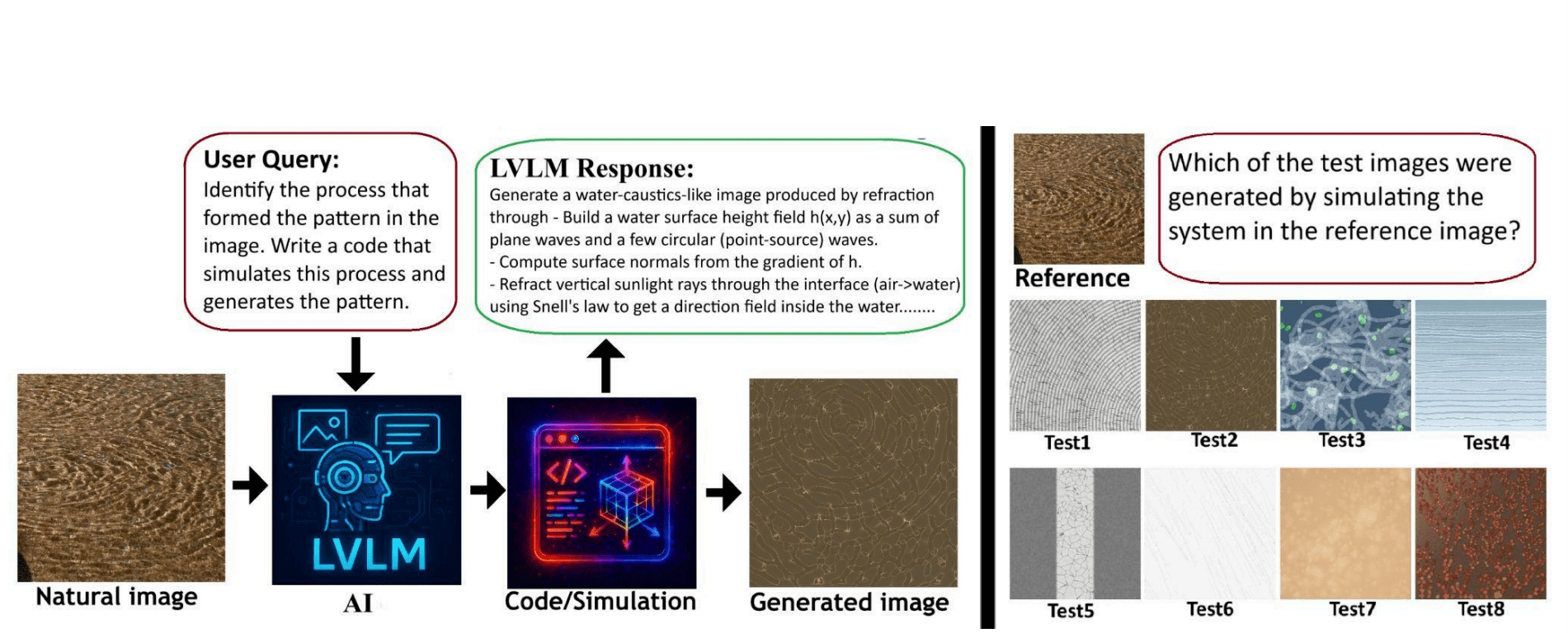

This research targets a key limitation of current vision-language models (VLMs), which excel at recognizing objects in images but struggle to understand the underlying rules and processes that create those images. The authors introduce a new benchmark and methodology that test VLMs on their ability to understand and generate procedural content, moving beyond simple image recognition. The focus is on procedural content, specifically textures, materials, and simulations, and on how well VLMs can infer the program or process that generates these visuals. The authors created a dataset containing images of procedural content alongside descriptions of the rules or programs that generate them, such as shader code or simulation setups. They defined metrics to assess how well VLMs can infer the program from an image and generate content from a program description. The work builds upon related fields including VLMs, procedural content generation, texture synthesis, material representation, inverse graphics, and program synthesis.

SciTextures Dataset Captures Diverse Scientific Visualizations

Scientists have created a comprehensive dataset, SciTextures, containing over 100,000 images of patterns and textures produced by more than 1,200 different models across diverse scientific, technological, and artistic domains. This work establishes a resource for exploring the connection between visual patterns and the underlying mechanisms that generate them, encompassing fields from physics and chemistry to biology, sociology, mathematics, and art. The dataset was constructed using an agentic AI pipeline that autonomously collects and implements models in a standardized format, ensuring consistency and scalability. The team tested the ability of leading vision-language models (VLMs) to link visual patterns to their generating models and code, introducing three novel tasks to assess this capability. In the first, the AI matches images to their generative code or descriptions. In the second, it identifies images produced by the same generative process. In the third, it infers the mechanism behind a pattern and recreates it through simulation, with visual similarity between the original and AI-generated images serving as a proxy for model accuracy. Results demonstrate the potential of VLMs to understand and simulate physical systems beyond surface-level visual recognition.
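A matching task of this kind is naturally scored as multiple choice. The sketch below is one plausible way to do that, not the authors' evaluation code: the `MatchingQuestion` structure, the function names, and the idea of reporting a chance baseline alongside accuracy are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass(frozen=True)
class MatchingQuestion:
    """One hypothetical test item: which candidate model produced this image?"""
    image_id: str
    candidate_ids: Sequence[str]  # ids of candidate code/descriptions shown
    correct_id: str               # id of the model that generated the image


def matching_accuracy(questions: Sequence[MatchingQuestion],
                      answers: Sequence[str]) -> float:
    """Fraction of questions where the chosen candidate is the true generator."""
    if not questions:
        raise ValueError("no questions to score")
    if len(questions) != len(answers):
        raise ValueError("need exactly one answer per question")
    correct = sum(1 for q, a in zip(questions, answers) if a == q.correct_id)
    return correct / len(questions)


def chance_accuracy(questions: Sequence[MatchingQuestion]) -> float:
    """Expected accuracy of uniform random guessing, for comparison."""
    if not questions:
        raise ValueError("no questions to score")
    return sum(1 / len(q.candidate_ids) for q in questions) / len(questions)
```

Reporting accuracy next to the chance level matters here because the number of candidates per question sets the floor: 40% accuracy means something very different with two candidates than with ten.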

SciTextures Dataset Links Vision and Generation

This research advances visual understanding by establishing a large-scale dataset, SciTextures, of over 100,000 images of textures and patterns sourced from diverse scientific, technological, and artistic domains. Crucially, the dataset pairs each visual pattern with the underlying models and code used to generate it, enabling detailed investigation into the relationship between appearance and formative processes. SciTextures was built with an agentic AI pipeline that autonomously collects and standardizes models to facilitate comprehensive analysis. The team developed novel evaluation methods to assess the ability of vision-language models to connect visual patterns with their generating systems, and to infer the mechanisms behind observed patterns.

Results demonstrate that these models can successfully identify and recreate the processes responsible for forming visual patterns, exhibiting an understanding that extends beyond simple image recognition and description. Notably, smaller vision-language models, employing less physically accurate but more tunable simulations, outperformed larger counterparts in replicating pattern appearance. The authors acknowledge that further research is needed to determine whether less accurate, highly tunable models represent a superior approach compared to more physically accurate but less adaptable simulations.
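How well a simulation "replicates pattern appearance" has to be measured somehow. The article does not say which similarity measure the authors use, so the sketch below swaps in a deliberately simple stand-in: histogram intersection over grayscale intensities. The function names and the assumption that pixel values lie in the range 0 to 1 are illustrative choices, not details from the paper.

```python
from typing import List, Sequence

Image = Sequence[Sequence[float]]  # grayscale image, values assumed in [0, 1]


def intensity_histogram(img: Image, bins: int = 8) -> List[float]:
    """Normalized histogram of pixel intensities (fractions sum to 1)."""
    values = [float(v) for row in img for v in row]
    if not values:
        raise ValueError("image is empty")
    counts = [0] * bins
    for v in values:
        if not 0.0 <= v <= 1.0:
            raise ValueError("pixel values must lie in [0, 1]")
        # The value 1.0 falls into the last bin rather than overflowing.
        counts[min(int(v * bins), bins - 1)] += 1
    return [c / len(values) for c in counts]


def histogram_similarity(a: Image, b: Image, bins: int = 8) -> float:
    """Histogram intersection: 1.0 for identical distributions, 0.0 for disjoint."""
    ha = intensity_histogram(a, bins)
    hb = intensity_histogram(b, bins)
    return sum(min(p, q) for p, q in zip(ha, hb))
```

Unlike a pixel-by-pixel comparison, this score does not require two textures to line up spatially, which suits stochastic patterns. The trade-off is that it ignores spatial structure entirely, so a realistic evaluation would need a richer texture descriptor.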

👉 More information

🗞 SciTextures: Collecting and Connecting Visual Patterns, Models, and Code Across Science and Art

🧠 ArXiv: https://arxiv.org/abs/2511.01817