Hateful videos represent a growing threat to online safety, fostering discrimination and potentially inciting violence. Shuonan Yang from the University of Exeter, Yuchen Zhang from the University of Essex, and Zeyu Fu demonstrate a significant step towards tackling this problem with their novel, training-free approach to hateful video detection. Their research introduces MARS, a Multi-stage Adversarial Reasoning framework that avoids reliance on large labelled datasets and provides clear, interpretable justifications for its decisions, a crucial element often missing in current automated content moderation systems. By reasoning through both supporting evidence and counter-evidence, MARS not only achieves up to a 10% performance improvement over existing training-free methods, but also surpasses state-of-the-art trained models on one benchmark dataset, offering a powerful and transparent solution for identifying harmful online content.

MARS framework detects video hate speech with high

Scientists have developed a novel framework, MARS (Multi-stage Adversarial ReaSoning), to reliably and interpretably detect hateful content in videos without requiring extensive training data. This breakthrough addresses critical limitations of existing methods, which often struggle with limited datasets and a lack of transparency in their decision-making processes. MARS first describes the video content objectively, then constructs evidence-based reasoning that supports potential hateful interpretations while generating counter-evidence that captures non-hateful perspectives.

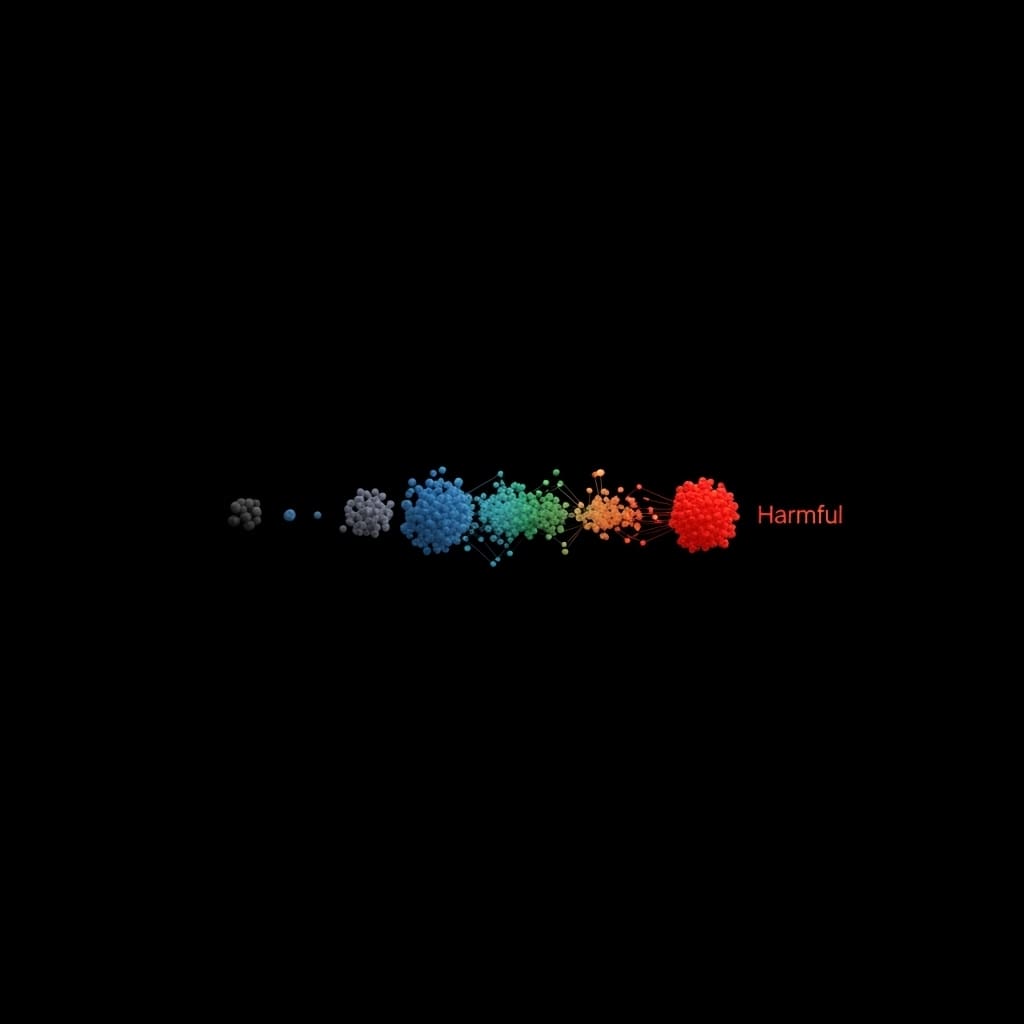

These contrasting viewpoints are then synthesised to arrive at a conclusive and explainable decision, offering a significant advancement in content moderation technology. The core innovation lies in MARS’s training-free approach, circumventing the need for large, labelled video datasets which are costly and difficult to obtain. Unlike traditional training-based methods, MARS leverages the inherent reasoning capabilities of large vision-language models (VLMs), allowing for robust detection even with limited data, a crucial advantage given the rapid proliferation of online video content. The team’s methodology involves formulating the problem as a task of determining whether a video contains hateful content, prompting the VLM to output a label based on sampled video frames and audio transcriptions.
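The basic formulation, in which a VLM is prompted with sampled frames and a transcript and returns a binary label, can be sketched as follows. This is a minimal illustration and not the authors' code: the `VLM` callable interface, the prompt wording, and the helper names `build_prompt`, `parse_label` and `classify` are all assumptions introduced here.

```python
import re
from typing import Callable, Sequence

# Hypothetical interface: a VLM is any callable that takes encoded frames and a
# text prompt and returns free text. Real VLM clients will look different.
VLM = Callable[[Sequence[bytes], str], str]


def build_prompt(transcript: str) -> str:
    """Assemble an illustrative instruction (the wording is an assumption)."""
    return (
        "You are given sampled frames from a video and its audio transcript.\n"
        f"Transcript: {transcript}\n"
        "Does the video contain hateful content? "
        "Answer with 1 (hateful) or 0 (non-hateful)."
    )


def parse_label(reply: str) -> int:
    """Extract the first standalone 0 or 1 from the model's reply."""
    match = re.search(r"\b([01])\b", reply)
    if match is None:
        raise ValueError(f"No binary label found in reply: {reply!r}")
    return int(match.group(1))


def classify(vlm: VLM, frames: Sequence[bytes], transcript: str) -> int:
    """Prompt the model once and map its free-text answer to a label."""
    return parse_label(vlm(frames, build_prompt(transcript)))
```

Raising an error on an unparseable reply, rather than silently defaulting to a label, keeps ambiguous model outputs visible to a human reviewer.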

Extensive evaluation on two real-world datasets, HateMM and MultiHateClip (MHC), demonstrates MARS’s superior performance. The study reveals that MARS achieves up to a 10% improvement in accuracy compared to other training-free approaches, and even outperforms state-of-the-art training-based methods on one dataset. Crucially, MARS doesn’t simply provide a ‘hateful’ or ‘non-hateful’ label; it generates human-understandable justifications, detailing the evidence used to reach its conclusion. This transparency is vital for compliance oversight and enhances the accountability of content moderation workflows, aligning with emerging regulations like the EU AI Act.

Furthermore, the research addresses the shortcomings of previous attempts to improve interpretability, delivering robust and auditable hateful video detection. By requiring the model to provide the strongest evidence for both hateful and non-hateful interpretations before making a judgement, the framework ensures a more comprehensive and reliable analysis. The research team began by objectively describing video content, establishing a neutral baseline for subsequent analysis of potentially hateful elements. Following this initial step, they engineered evidence-based reasoning to support interpretations suggesting hateful intent, simultaneously incorporating counter-evidence reasoning to explore plausible non-hateful perspectives.

The method follows a multi-stage approach, first generating objective descriptions using a vision-language model, then constructing adversarial reasoning chains for both hateful and non-hateful interpretations. The team harnessed these contrasting perspectives, synthesising them into a conclusive and explainable decision regarding the video’s content. This method balances accuracy and transparency, providing human-understandable justifications for each determination. Extensive evaluation was conducted on two real-world datasets, HateMM containing 1,083 videos and MultiHateClip (MHC) comprising approximately 2,000 videos, to rigorously assess performance.
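The multi-stage flow described above (objective description, evidence for a hateful reading, counter-evidence, and a final synthesis) can be sketched in a few lines. This is a hedged illustration and not the released MARS implementation: the `TextModel` interface, the prompt texts, and the `ReasoningTrace` container are hypothetical, and a plain string stands in for the frames and transcript a real VLM would receive.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical interface: any function mapping a prompt string to a reply string.
TextModel = Callable[[str], str]


@dataclass
class ReasoningTrace:
    """Keeps every intermediate output so the decision can be audited."""
    description: str
    hate_evidence: str
    counter_evidence: str
    decision: str


def run_adversarial_reasoning(model: TextModel, video_context: str) -> ReasoningTrace:
    # Stage 1: neutral description that both later arguments build on.
    description = model(
        "Stage 1. Describe the content objectively, without judging intent:\n"
        + video_context
    )
    # Stage 2: strongest case for a hateful interpretation.
    hate_evidence = model(
        "Stage 2. Give the strongest evidence that this content is hateful:\n"
        + description
    )
    # Stage 3: strongest case for a non-hateful interpretation.
    counter_evidence = model(
        "Stage 3. Give the strongest evidence that this content is not hateful:\n"
        + description
    )
    # Stage 4: synthesis of both sides into a justified decision.
    decision = model(
        "Stage 4. Weigh both sides and answer 'hateful' or 'non-hateful' "
        "with a short justification.\n"
        f"Description: {description}\n"
        f"Evidence for: {hate_evidence}\n"
        f"Evidence against: {counter_evidence}"
    )
    return ReasoningTrace(description, hate_evidence, counter_evidence, decision)
```

Returning the full trace rather than only the final label is what makes the outcome inspectable: a reviewer can read the description and both arguments alongside the verdict.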

The study pioneered a training-free approach, circumventing the need for large annotated datasets which are prohibitively expensive to create for video content. Results demonstrate that MARS achieves up to a 10% improvement in performance compared to other training-free techniques. Furthermore, MARS outperformed state-of-the-art training-based methods on the MHC dataset, showcasing its superior capabilities. The technique reveals not only whether a video is hateful, but also why, supporting compliance oversight and enhancing the transparency of content moderation workflows, a critical advancement given increasing regulatory demands for explainable AI. The code implementing MARS is publicly available at https://github.com/Multimodal-Intelligence-Lab-MIL/MARS, facilitating further research and development in this vital area.

MARS framework boosts video hate speech detection significantly

Scientists have developed a novel framework, MARS, Multi-stage Adversarial ReaSoning, for reliable and interpretable hateful content detection in videos, achieving up to a 10% performance improvement in certain configurations compared to existing training-free methods. The research addresses limitations of current approaches, which often struggle with limited training data and a lack of transparency, by introducing a training-free system that leverages the reasoning capabilities of large vision-language models (VLMs). Experiments revealed that MARS consistently outperforms other training-free methods in both accuracy and precision metrics, with maximum improvements exceeding 10% under specific backbones and settings. The team measured performance on two real-world datasets, HateMM and MultiHateClip, demonstrating the framework’s effectiveness across different languages and content types.

On the English dataset, HateMM, MARS achieved an accuracy of 75.8±0.021%, a Macro-F1 score of 75.8±0.021%, and a hate class F1 score of 75.8±0.014, utilising the Qwen2.5 backbone. Correspondingly, on the Chinese dataset, MultiHateClip, the framework recorded an accuracy of 75.9±0.032%, a Macro-F1 score of 71.3±0.036, and a hate class F1 score of 59.8±0.049, again with the Qwen2.5 backbone. These results demonstrate a significant advancement in hateful video detection capabilities. MARS operates through a four-stage process, beginning with an objective description of video content to establish a neutral baseline.
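For readers unfamiliar with the three reported metrics, the sketch below computes accuracy, Macro-F1 and hate-class F1 for binary labels, and summarises repeated runs as a mean and spread. It assumes that label 1 means hateful and that the ± values denote a standard deviation over runs; the article does not state how the spread was computed, so `summarise` is illustrative only.

```python
from statistics import mean, stdev
from typing import Sequence


def f1_for_class(y_true: Sequence[int], y_pred: Sequence[int], cls: int) -> float:
    """F1 for one class, treating `cls` as the positive label."""
    tp = sum(t == cls and p == cls for t, p in zip(y_true, y_pred))
    fp = sum(t != cls and p == cls for t, p in zip(y_true, y_pred))
    fn = sum(t == cls and p != cls for t, p in zip(y_true, y_pred))
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


def evaluate(y_true: Sequence[int], y_pred: Sequence[int]) -> dict:
    """Accuracy, Macro-F1 (unweighted mean of per-class F1) and hate-class F1."""
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    hate_f1 = f1_for_class(y_true, y_pred, 1)      # assumption: 1 = hateful
    non_hate_f1 = f1_for_class(y_true, y_pred, 0)
    return {
        "accuracy": accuracy,
        "macro_f1": (hate_f1 + non_hate_f1) / 2,
        "hate_f1": hate_f1,
    }


def summarise(values: Sequence[float]) -> tuple:
    """Mean and sample standard deviation across repeated runs."""
    return mean(values), stdev(values)
```

Because Macro-F1 averages the two classes equally, it can sit well above the hate-class F1 on an imbalanced dataset, which matches the gap between the two scores reported for MultiHateClip.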

Subsequently, the framework infers hate content hypotheses, identifying supporting evidence and confidence scores, while simultaneously developing counter-evidence reasoning to capture non-hateful perspectives. The system generates human-understandable justifications, tracing how multimodal cues (visual frames and audio transcription) are weighed and synthesised to reach a conclusive decision. Specifically, the framework samples N video frames from a video V, where N is a defined hyperparameter, and prompts a VLM to output a label y ∈ {0, 1}, indicating whether the video contains hateful content. The framework’s ability to provide auditable justifications enhances transparency and supports compliance requirements, a crucial aspect for content moderation systems.
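One simple way to pick N frames from a video is to space them evenly along its length. The article does not say which sampling strategy MARS uses, so the uniform scheme below is an assumption chosen for illustration, and `uniform_frame_indices` is a hypothetical helper name.

```python
def uniform_frame_indices(total_frames: int, n: int) -> list[int]:
    """Return up to `n` evenly spaced frame indices in [0, total_frames)."""
    if total_frames <= 0 or n <= 0:
        raise ValueError("total_frames and n must be positive")
    n = min(n, total_frames)  # a short video cannot yield more frames than it has
    # Split the video into n equal segments and take the midpoint of each.
    return [int((i + 0.5) * total_frames / n) for i in range(n)]


# Example: 4 frames from a 100-frame clip.
print(uniform_frame_indices(100, 4))  # [12, 37, 62, 87]
```

Taking segment midpoints avoids always selecting the very first and last frames, which are often title cards or fade-outs. Larger N gives the model more visual context at the cost of longer prompts.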

Using the LLaMA4 backbone, MARS achieved an accuracy of 78.4±0.033% on HateMM and 70.3±0.025% on MultiHateClip, further highlighting its robust performance. The framework’s design allows for nuanced analysis, distinguishing between personal disputes and targeted group attacks, and considering contextual factors like satire or artistic usage. This detailed approach contributes to more accurate and responsible content moderation, offering a valuable tool for online platforms and researchers alike.

MARS reasoning improves hate speech detection significantly

Scientists have developed a new framework, MARS, for detecting hateful videos without requiring extensive labelled training data. This Multi-stage Adversarial ReaSoning system addresses key limitations of current methods, including the need for large datasets and a lack of transparency in decision-making. MARS functions by first objectively describing video content, then building evidence-based reasoning to support potential hateful interpretations, alongside counter-evidence to consider non-hateful perspectives. The research demonstrates that MARS achieves improvements of up to 10% compared to other training-free approaches, and performs competitively against existing training-based methods on at least one dataset.

Crucially, MARS generates human-understandable justifications for its decisions, which is vital for oversight and improving the transparency of content moderation processes. This work signifies a step towards more auditable and trustworthy content moderation systems by offering a training-free, interpretable solution for hateful video detection. By reducing reliance on labelled data and providing clear explanations, MARS facilitates human review and supports regulatory compliance. Future research could explore scaling MARS with even more powerful backbones to further enhance its performance and robustness, as model size was shown to significantly improve results. The authors also suggest further investigation into frame sampling strategies to optimise efficiency without compromising accuracy. Hateful videos pose a significant challenge for online platforms, and this new framework offers a promising solution.

👉 More information

🗞 Training-Free and Interpretable Hateful Video Detection via Multi-stage Adversarial Reasoning

🧠 ArXiv: https://arxiv.org/abs/2601.15115