Adapting large language models to specific tasks with low-rank adapters carries a hidden cost: although the adapters add relatively few parameters, they can noticeably slow down inference. Dhananjaya Gowda, Seoha Song, and Harshith Goka, all from Samsung Research, along with Junhyun Lee, address this challenge with a new technique called zFLoRA, or zero-latency fused low-rank adapters. The method sharply reduces the inference-time overhead of adapters while offering task performance comparable to, and often exceeding, established methods such as full fine-tuning and other adapter techniques. Through tests on models from 1 billion to 7 billion parameters and across diverse tasks including reasoning and dialogue, the team shows that zFLoRA introduces virtually no additional delay, a substantial step towards more efficient and responsive artificial intelligence systems.

Adapters Enhance Reasoning in Small LLMs

This research explores how different adapter methods (LoRA, zFLoRA, and variants restricted to the Multi-Head Attention (MHA) block) improve the performance of small LLaMA language models with 1 billion and 3 billion parameters on common sense and mathematical reasoning tasks. The goal is to raise accuracy on both kinds of reasoning while keeping the number of trainable parameters small, and each adapter is compared against a baseline model without adapters.

Several adapter methods are investigated, including LoRA and zFLoRA, with particular focus on LoRA-MHA and zFLoRA-MHA, in which adapters are applied only to the Multi-Head Attention blocks to obtain strong performance with fewer trainable parameters. The experiments vary the adapter rank, which controls the number of trainable parameters (a higher rank means more parameters), and different learning rates were tried to optimize performance. For the 1 billion parameter model, adapters generally improved performance: zFLoRA-MHA (rank 64) reached 74.68% common sense reasoning accuracy, essentially matching LoRA-MHA (rank 64, 74.72%), and adapters restricted to the MHA blocks were competitive overall. On math reasoning, zFLoRA-MHA (rank 64) performed best with an accuracy of 67.60, closely matched by LoRA-MHA at rank 64.

The 3 billion parameter model showed similar trends. On common sense reasoning, zFLoRA-MHA (rank 64) achieved the best accuracy (85.40%), closely followed by LoRA-MHA at rank 64, and adapters restricted to the MHA blocks again performed competitively. For math reasoning, zFLoRA-MHA (rank 64, accuracy 67.60) performed best, closely matched by LoRA-MHA at rank 64. A key finding is that applying adapters only to the Multi-Head Attention blocks can match the performance of applying them throughout the model while substantially reducing the number of trainable parameters. These results point towards parameter-efficient fine-tuning methods suited to deploying large language models in resource-constrained environments.
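To make the parameter savings of MHA-only adapters concrete, the sketch below counts trainable adapter parameters, assuming each adapted projection gets a rank-r pair A (r x d_in) and B (d_out x r). The layer shapes (`HIDDEN`, `KV_DIM`, `FFN`, `LAYERS`) are hypothetical values loosely modelled on a small LLaMA-style decoder, and "all projections" is assumed to mean the four attention projections plus the three feed-forward projections; neither assumption comes from the paper.

```python
# Hypothetical layer shapes, loosely modelled on a small LLaMA-style decoder.
# These are illustrative assumptions, not figures reported in the paper.
HIDDEN = 2048
KV_DIM = 512
FFN = 8192
LAYERS = 16

# (d_in, d_out) for each projection in one decoder layer.
MHA_PROJ = {"q": (HIDDEN, HIDDEN), "k": (HIDDEN, KV_DIM), "v": (HIDDEN, KV_DIM), "o": (HIDDEN, HIDDEN)}
FFN_PROJ = {"gate": (HIDDEN, FFN), "up": (HIDDEN, FFN), "down": (FFN, HIDDEN)}


def lora_params(d_in: int, d_out: int, rank: int) -> int:
    """Trainable parameters of one low-rank adapter: A is rank x d_in, B is d_out x rank."""
    return rank * d_in + d_out * rank


def adapter_budget(projections: dict, rank: int, layers: int = LAYERS) -> int:
    """Total adapter parameters when every listed projection in every layer is adapted."""
    per_layer = sum(lora_params(d_in, d_out, rank) for d_in, d_out in projections.values())
    return per_layer * layers


if __name__ == "__main__":
    for rank in (16, 32, 64):
        mha_only = adapter_budget(MHA_PROJ, rank)
        everything = adapter_budget({**MHA_PROJ, **FFN_PROJ}, rank)
        print(f"rank {rank:>2}: MHA-only {mha_only:,}  all projections {everything:,}  "
              f"ratio {mha_only / everything:.2f}")
```

Because the count is linear in the rank, doubling the rank doubles the adapter budget; with these assumed shapes, MHA-only adapters use roughly 30% of the parameters needed to adapt every projection.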

Zero-Latency Adapters for Efficient Language Models

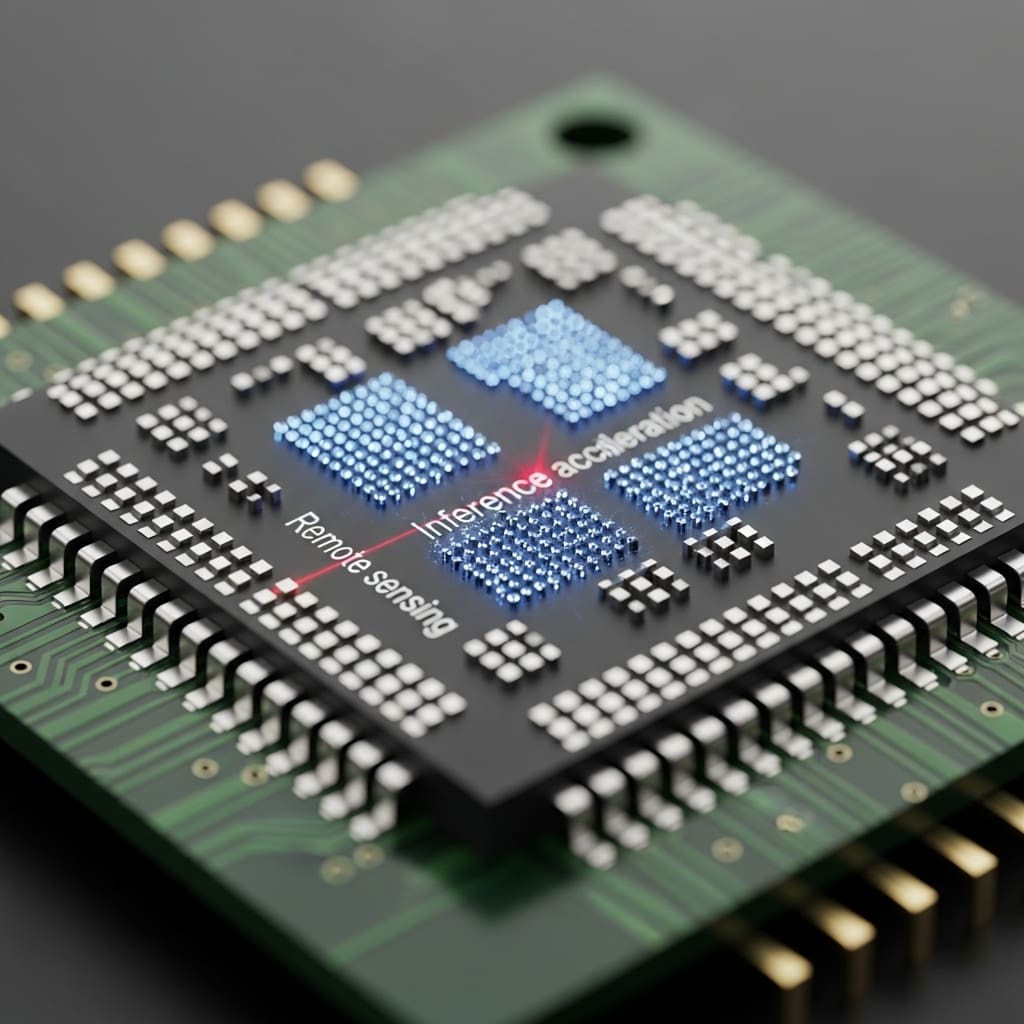

This study introduces zero-latency fused low-rank adapters (zFLoRA), designed to minimize the computational overhead of adapters when large language models are used for inference. The researchers address the problem that even small sets of adapter parameters can disproportionately increase inference time. At the core of zFLoRA is an architecture that avoids introducing additional latency, developed and evaluated for models with 1 billion, 3 billion, and 7 billion parameters. Conventional LoRA computes its output through a sequence of four operations; zFLoRA fuses these operations, reducing the number of discrete computational steps.
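The article does not list the four operations of conventional LoRA. A common reading, assumed in this sketch, is the base projection W x, the adapter down-projection A x, the up-projection B(A x), and an element-wise addition, giving y = W x + scale * B(A x). Plain Python lists stand in for tensors, and the `scale` factor (often alpha / r in LoRA implementations) is an assumption; this illustrates unfused LoRA only, not zFLoRA itself.

```python
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Dense matrix-vector product; each call stands in for one kernel launch."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def lora_forward_unfused(W: Matrix, A: Matrix, B: Matrix, x: Vector, scale: float = 1.0) -> Vector:
    """Unfused LoRA: y = W x + scale * B (A x), computed as four separate steps."""
    base = matvec(W, x)   # 1. base projection, d_out outputs
    down = matvec(A, x)   # 2. adapter down-projection to rank r
    up = matvec(B, down)  # 3. adapter up-projection back to d_out
    return [b + scale * u for b, u in zip(base, up)]  # 4. element-wise addition


if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 1.0]]  # toy 2x2 base weight
    A = [[1.0, 1.0]]              # rank-1 down-projection (1x2)
    B = [[0.5], [-1.0]]           # rank-1 up-projection (2x1)
    print(lora_forward_unfused(W, A, B, [1.0, 2.0]))  # [2.5, -1.0]
```

On a phone NPU or a GPU, each of these steps typically becomes a separate kernel with its own memory traffic, which is the kind of overhead that fusing the operations targets.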

This involves rearranging the order of matrix multiplications to minimize intermediate data transfers and maximize parallelism. The method relies on a low-rank approximation, so a small number of parameters can adapt projections with large hidden input dimensions. Simulations of single-layer adapter latency illustrate the gains from this fusion and the resulting reduction in computational steps. The effectiveness of zFLoRA is validated on 18 tasks spanning commonsense reasoning, math reasoning, and summarization and dialogue, and latency measurements on both an NPU (Samsung Galaxy S25+) and a GPU (NVIDIA H100) confirm that the proposed adapters add zero to negligible latency overhead.
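Two pieces of arithmetic behind this paragraph can be sketched directly: a rank-r factorization stores r(d_in + d_out) parameters instead of d_in * d_out, and the order in which B, A, and an input X (d_in x tokens) are multiplied changes the amount of work. The sketch counts multiply-accumulates for B(AX) versus (BA)X; the dimensions are hypothetical, and this generic associativity example is not claimed to be the specific rearrangement zFLoRA uses.

```python
def dense_params(d_in: int, d_out: int) -> int:
    """Parameters in a full d_out x d_in weight update."""
    return d_in * d_out


def low_rank_params(d_in: int, d_out: int, rank: int) -> int:
    """Parameters in the factorization B (d_out x r) @ A (r x d_in)."""
    return rank * (d_in + d_out)


def macs_factored(d_in: int, d_out: int, rank: int, tokens: int) -> int:
    """Multiply-accumulates for B @ (A @ X): keep the rank-r bottleneck per token."""
    return tokens * rank * d_in + tokens * d_out * rank


def macs_materialized(d_in: int, d_out: int, rank: int, tokens: int) -> int:
    """Multiply-accumulates for (B @ A) @ X: build the full update first, then apply it."""
    return d_out * rank * d_in + tokens * d_out * d_in


if __name__ == "__main__":
    d, r = 4096, 16  # hypothetical hidden size and adapter rank
    print("params  dense:", dense_params(d, d), " low-rank:", low_rank_params(d, d, r))
    print("MACs    B(AX):", macs_factored(d, d, r, 1), " (BA)X:", macs_materialized(d, d, r, 1))
```

Materializing BA once and reusing it across all tokens amortizes its cost; that is the classic merged-weight approach, which gives up keeping the adapter separate from the base weights.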

Zero-Latency Adapters Accelerate Large Language Models

Scientists have developed zFLoRA, a zero-latency fused low-rank adapter that significantly reduces the inference-time cost of adapting large language models. The research addresses a critical issue: even small numbers of adapter parameters can disproportionately increase inference time. Experiments on language models with 1 billion, 3 billion, and 7 billion parameters show that zFLoRA performs favorably compared with established methods such as low-rank adapters (LoRA) and full fine-tuning (FFT). Latency, crucial for assessing real-time performance, was measured on both an NPU (Samsung Galaxy S25+) and a GPU (NVIDIA H100).

Results confirm that zFLoRA introduces zero to negligible latency overhead, a substantial improvement over existing techniques: LoRA adapters can increase first-token (prefill) latency by as much as 2.5 times and per-token decode latency by 1.6 times compared with the base model. zFLoRA avoids this cost by fusing the adapter blocks with the base model's projection layers, so that the computation is performed as a single matrix multiplication. This leverages the efficiency of modern GPU and NPU hardware at large matrix multiplications, minimizing computational cost and eliminating separate memory operations for the adapter. The architecture is designed to avoid any additional operations that could introduce latency.
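One generic way to fold part of an adapter into a base projection is to stack the adapter's down-projection A under the base weight W, so a single matrix multiplication returns both W x and A x. The sketch below assumes this row-stacking scheme purely for illustration; it collapses two steps of unfused LoRA into one launch and is not claimed to be zFLoRA's exact fusion (see the paper for the actual design). `fused_projection` is a hypothetical helper name.

```python
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, x: Vector) -> Vector:
    """Dense matrix-vector product, standing in for one matmul kernel."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in m]


def fused_projection(W: Matrix, A: Matrix, x: Vector) -> Tuple[Vector, Vector]:
    """Hypothetical helper: one matmul over the row-stacked weight [W; A], then split.

    The first len(W) outputs equal W x (base projection); the remaining
    len(A) outputs equal A x (the rank-r adapter activation).
    """
    stacked = W + A  # list concatenation: rows of W followed by rows of A
    out = matvec(stacked, x)
    d_out = len(W)
    return out[:d_out], out[d_out:]


if __name__ == "__main__":
    W = [[1.0, 0.0], [0.0, 1.0]]  # toy base weight
    A = [[1.0, 1.0]]              # toy rank-1 down-projection
    x = [1.0, 2.0]
    base, down = fused_projection(W, A, x)
    assert base == matvec(W, x) and down == matvec(A, x)
    print(base, down)  # [1.0, 2.0] [3.0]
```

In a real kernel the stacked weight would be prepared once ahead of time, so at inference the adapter's down-projection rides along with the base projection instead of needing its own launch.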

👉 More information

🗞 zFLoRA: Zero-Latency Fused Low-Rank Adapters

🧠 ArXiv: https://arxiv.org/abs/2510.25784