Current vision-language models (VLMs) excel at understanding and describing what they see, but their capacity to independently reason and act on visual information, much like a human, remains a significant challenge. Daoan Zhang, Pai Liu, and Xiaofei Zhou, along with colleagues, address this gap by introducing VisualActBench, a large-scale benchmark comprising over 1,000 videos and thousands of human-annotated actions across everyday scenarios. The team labels each action with its importance and with whether it is a proactive or reactive response, allowing for a nuanced assessment of human-aligned reasoning in AI. Evaluating 29 VLMs with this new benchmark reveals that even the most advanced models, such as GPT-4o, still fall short of human-level performance, particularly when generating proactive, high-priority actions. The benchmark establishes a foundation for developing vision-centric AI agents capable of navigating the real world.

Vision-Language Models and Performance Benchmarks

Researchers are developing increasingly sophisticated vision-language models (VLMs) capable of understanding and interacting with the visual world, and rigorous testing is crucial to track progress. Several prominent models are currently under investigation, including OpenAI’s GPT-4o and GPT-4, Alibaba’s Qwen2-VL, and open-source options like MiniCPM-V, LLaVA-OneVision, and Aria. These models vary in size and architecture, but all aim to bridge the gap between visual perception and language understanding. A significant portion of this research focuses on the datasets and benchmarks used to assess VLM performance.

Key datasets include ActivityNet-QA, Kinetics, Moments in Time, and MSR-VTT, which provide large-scale collections of videos and associated annotations. Benchmarks like TVQA, Winoground, and LVBench challenge models with complex tasks requiring understanding of events, relationships, and temporal reasoning. Researchers also use MS COCO for image and video captioning, and specialized datasets for anomaly detection in surveillance footage. Several key concepts and techniques underpin these advancements. Visual instruction tuning significantly improves performance, while the mixture-of-experts (MoE) architecture enhances efficiency and scalability.

Long-context modeling enables VLMs to process extended video sequences, and hierarchical compression reduces data size without sacrificing crucial information. Researchers are also exploring the use of AI feedback to refine model training and improve trustworthiness. Current research suggests that GPT-4o represents a state-of-the-art model, often used as a reference point for comparison. MiniCPM-V aims to achieve comparable performance with a smaller model size, demonstrating progress towards more efficient VLMs. There is a growing emphasis on open-source VLMs, such as Qwen2-VL, RLAIF-V, and Aria, fostering collaboration and innovation. These models are increasingly designed to be efficient, allowing them to run on mobile devices or with limited resources.

Proactive Reasoning Benchmark for Vision-Language Models

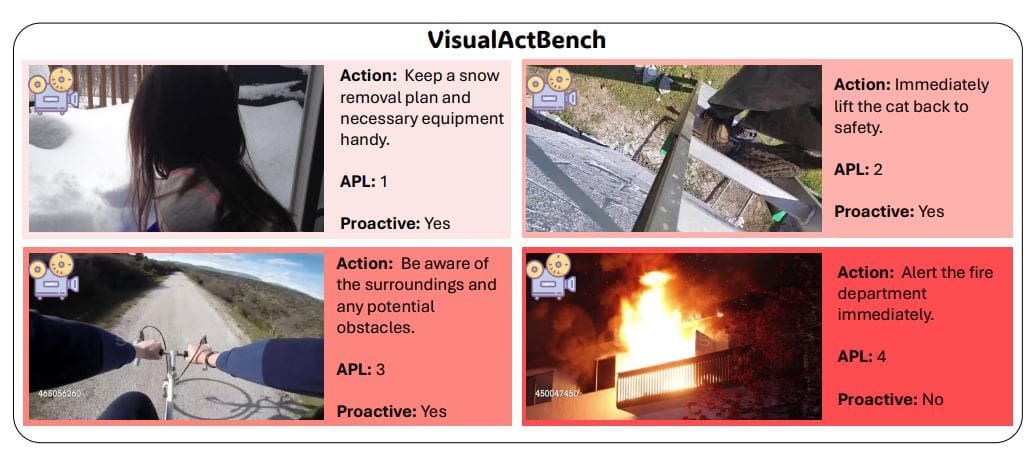

Researchers have introduced VisualActBench, a new benchmark designed to rigorously evaluate the ability of vision-language models (VLMs) to reason and act proactively based solely on visual input. This work moves beyond simply understanding what is happening in a video and instead challenges models to anticipate future events and determine appropriate actions, mirroring human decision-making. The benchmark comprises 1,074 videos and 3,733 human-annotated actions, carefully selected from existing datasets like Kinetics and Moments in Time. Each action is assigned an Action Prioritization Level (APL) and categorized as either proactive or reactive, allowing for a nuanced assessment of model behavior.
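To make the annotation structure concrete, the following minimal sketch shows one way a record of this kind could be represented in Python. The class and field names, the integer APL scale, and the sample actions are all illustrative assumptions, not the benchmark's actual schema or data.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class ActionType(Enum):
    """Whether an action anticipates an event or responds to one."""
    PROACTIVE = "proactive"
    REACTIVE = "reactive"


@dataclass(frozen=True)
class AnnotatedAction:
    """One human-annotated action (hypothetical schema)."""
    video_id: str            # hypothetical identifier for the source video
    description: str         # free-text description of the action
    apl: int                 # Action Prioritization Level; integer scale is assumed
    action_type: ActionType  # proactive or reactive


def count_by_type(actions):
    """Count how many annotated actions fall into each action type."""
    return Counter(a.action_type for a in actions)


# Made-up sample records, used only to exercise the sketch.
sample = [
    AnnotatedAction("vid_001", "Move the kettle away from the table edge", 3, ActionType.PROACTIVE),
    AnnotatedAction("vid_001", "Wipe up the spilled water", 2, ActionType.REACTIVE),
    AnnotatedAction("vid_002", "Slow down before the crossing", 3, ActionType.PROACTIVE),
]

counts = count_by_type(sample)
print(counts[ActionType.PROACTIVE], counts[ActionType.REACTIVE])
```

Keeping the APL and the proactive/reactive label as separate fields means either one can be used on its own to slice results later.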

The methodology emphasizes action reasoning generation, differing from traditional video benchmarks focused on captioning or question-answering. Researchers deliberately avoided textual prompts, requiring models to autonomously analyze scenes and act accordingly. The dataset maintains a balanced distribution across four real-world scenarios: dynamic navigation, home service, safety and monitoring, and human-machine interaction. This balance ensures diverse contextual coverage. A comprehensive evaluation of 29 VLMs revealed significant gaps in their ability to exhibit proactiveness, value alignment, and abstract reasoning. This work establishes a foundation for assessing and improving the real-world readiness of vision-centric AI. By challenging VLMs to act proactively, researchers are pushing the boundaries of what these models can achieve in dynamic environments, paving the way for more intelligent and autonomous systems.

Visual Reasoning Benchmark Evaluates Action Prediction

This research introduces VisualActBench, a large-scale benchmark designed to assess the reasoning capabilities of Vision-Language Models (VLMs) based solely on visual inputs. Researchers developed the Visual Action Reasoning task, challenging models to generate appropriate actions from visual scenes without relying on textual prompts. This strictly visual-centric approach allows for evaluation of a VLM’s ability to interpret context and determine suitable responses based on visual cues alone. The benchmark comprises 1,074 videos and 3,733 human-annotated actions, categorized by an Action Prioritization Level (APL) and whether they are proactive or reactive.

The benchmark defines four real-world scenarios to provide diverse testing conditions: dynamic navigation, home service, safety and monitoring, and human-machine interaction. Experiments evaluating 29 VLMs, including state-of-the-art models like GPT-4o, reveal a significant gap between current VLM performance and human-level reasoning, particularly in generating proactive, high-priority actions. Researchers measured performance based on the APL and proactive/reactive categorization, providing a detailed analysis of where VLMs fall short of human expectations. This work establishes a comprehensive foundation for assessing and improving the real-world readiness of proactive, vision-centric AI agents. By challenging VLMs to reason and act based solely on visual input, researchers are paving the way for more intuitive and effective AI systems capable of independent decision-making in complex environments.
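A breakdown of this kind can be sketched as follows. The code is only an illustration under stated assumptions: each reference action carries a hypothetical `apl` value, a proactive/reactive `type`, and a boolean `matched` flag saying whether a model's output was judged to cover it. How the benchmark actually matches and scores actions is not described here, so the `matched` flag, the `coverage_by` helper, and the sample records are stand-ins.

```python
from collections import defaultdict


def coverage_by(records, key):
    """Return the fraction of matched reference actions per group.

    `records` is a list of dicts; `key` names the field to group by.
    This is a hypothetical helper, not the benchmark's scoring code.
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for record in records:
        group = record[key]
        totals[group] += 1
        hits[group] += int(record["matched"])
    return {group: hits[group] / totals[group] for group in totals}


# Made-up records, used only to exercise the sketch.
records = [
    {"apl": 3, "type": "proactive", "matched": False},
    {"apl": 3, "type": "proactive", "matched": True},
    {"apl": 1, "type": "reactive", "matched": True},
    {"apl": 2, "type": "reactive", "matched": True},
]

by_type = coverage_by(records, "type")
by_apl = coverage_by(records, "apl")
print(by_type)
print(by_apl)
```

Grouping the same records once by type and once by APL lets a gap on proactive or high-priority actions show up directly, instead of being averaged away in a single overall score.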

Proactive Reasoning Benchmark for Vision-Language Models

This work presents VisualActBench, a new benchmark designed to rigorously evaluate the proactive reasoning abilities of Vision-Language Models (VLMs). Researchers introduced the Visual Action Reasoning task, challenging models to generate actions based solely on visual input, moving beyond simple visual understanding towards more human-like, initiative-driven decision-making. The benchmark comprises a large-scale dataset of over 3,700 annotated actions across diverse real-world scenarios, alongside a novel Action Prioritization Level (APL) metric to assess both the correctness and value-alignment of model outputs. Comprehensive evaluation of 29 state-of-the-art VLMs reveals that current models struggle with proactiveness, value-sensitive prioritization, and abstract reasoning, with even the largest proprietary models like GPT-4o falling short of human-level performance.

While increasing model size and employing reinforcement learning techniques can improve action quality and prioritization, these gains diminish beyond a certain scale. The findings highlight critical limitations in the ability of current VLMs to operate autonomously and appropriately in complex, open-ended real-world contexts. Researchers anticipate that VisualActBench will facilitate the development of more aligned, robust, and contextually aware vision-language agents capable of independent action, mirroring human decision-making.

👉 More information

🗞 VisualActBench: Can VLMs See and Act like a Human?

🧠 ArXiv: https://arxiv.org/abs/2512.09907