Parallel optical computing promises revolutionary processing speeds by exploiting the inherent parallelism of light, but realising this potential requires overcoming significant technical hurdles. Ziqi Wei from Harvard, alongside Yuanjian Wan, Yuhu Cheng, and colleagues, now present a comprehensive modelling framework that addresses a critical limitation in these systems: dispersion. Their research extends existing theories to account for how different wavelengths of light spread and distort signals within cascaded optical circuits, specifically those based on Mach-Zehnder interferometers. The team validates their model experimentally and proposes a computationally efficient method to calibrate and compensate for dispersion, reducing error across a 40 nm range and establishing a fundamental advance towards reliable, multi-wavelength optical processors.

Dispersion Limits Mach-Zehnder Interferometer Scaling

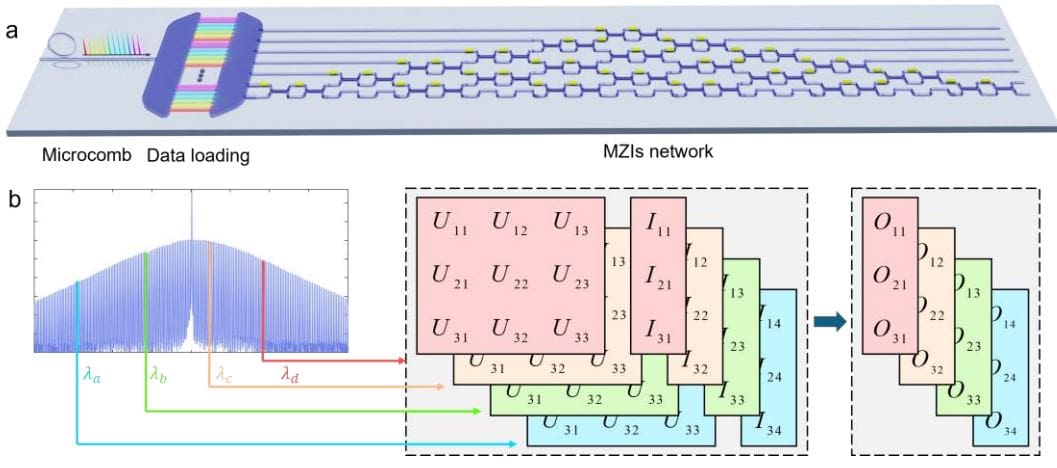

Scientists investigated the challenges of scaling parallel optical computing systems based on cascaded Mach-Zehnder interferometers (MZIs). These systems utilize light to perform computations, offering potential speed and energy efficiency advantages, but are limited by dispersion, where light pulses spread as they travel through the circuit. This dispersion introduces errors, particularly when using multiple wavelengths of light to increase data throughput. Researchers developed a comprehensive theoretical model to predict and analyze these wavelength-dependent dispersion effects within MZI meshes.

The team rigorously analyzed how dispersion-induced errors scale with the size of the MZI mesh and the range of wavelengths used, deriving a formula to quantify the maximum error. To address these errors, scientists proposed a first-order interpolation method, using calibration data at specific wavelengths to correct signals at intermediate wavelengths, significantly improving the accuracy and reliability of optical computations. The research incorporates wavelength multiplexing, a technique that encodes independent data streams onto different wavelengths to linearly scale computational throughput without duplicating hardware. The use of soliton microcombs, which generate multiple wavelengths, is also explored. This work is relevant to developing photonic neural networks that can be trained using forward-only algorithms, avoiding computationally expensive backpropagation, and contributes to more accurate, reliable, and efficient parallel optical computing systems with potential applications in artificial intelligence, machine learning, optimization, and image processing.
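The idea of correcting intermediate wavelengths from a few calibrated ones can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the 1530 to 1570 nm band, and the toy phase law `phi0 * lam0 / lam` are assumptions chosen only to show how a first-order (linear) interpolation behaves.

```python
import numpy as np

def interpolate_phase(lam, lam_lo, lam_hi, phi_lo, phi_hi):
    """Linear estimate of a phase at wavelength lam from two calibrated points."""
    t = (lam - lam_lo) / (lam_hi - lam_lo)
    return (1.0 - t) * phi_lo + t * phi_hi

def toy_phase(lam, phi0=np.pi / 2, lam0=1550.0):
    """Hypothetical phase-shifter response (assumption): phase scales as 1/wavelength."""
    return phi0 * lam0 / lam

# Assumed 40 nm band, wavelengths in nm, with calibration at the two band edges.
lam_lo, lam_hi = 1530.0, 1570.0
lams = np.linspace(lam_lo, lam_hi, 41)
true_phi = toy_phase(lams)

# Without correction: every wavelength is treated as if it were at band centre.
uncorrected_err = np.max(np.abs(true_phi - toy_phase(1550.0)))

# With first-order correction: interpolate between the two calibrated edges.
est_phi = interpolate_phase(lams, lam_lo, lam_hi,
                            toy_phase(lam_lo), toy_phase(lam_hi))
corrected_err = np.max(np.abs(true_phi - est_phi))

print(f"max phase error, uncorrected: {uncorrected_err:.2e} rad")
print(f"max phase error, interpolated: {corrected_err:.2e} rad")
```

In this toy setting the interpolated estimate is exact at the two calibrated wavelengths and leaves only a small curvature-driven residual in between, which is why two calibration points can cover a whole band at very low computational cost.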

Multi-wavelength Parallel Computing with Dispersion Modelling

Scientists engineered a novel methodology for multi-wavelength parallel optical computing, addressing limitations in scalability and accuracy. They extended existing theories to develop a generalized model tailored for wavelength-multiplexed parallel optical computation, incorporating component dispersion characteristics into a wavelength-dependent transfer matrix framework. This model was rigorously validated through experimentation, confirming its accuracy in predicting system behavior. The team employed a microcomb-based multiwavelength source, enabling over 100 parallel computing channels and significantly increasing computing capacity.

Wavelength division multiplexing was central to this advancement, encoding independent data streams onto different wavelengths to achieve linear scaling of computational throughput without duplicating hardware. To mitigate dispersion-induced errors, scientists proposed a computationally efficient compensation strategy, reducing global dispersion error within a 40 nm range from 0.22 to 0.039 using a technique called edge-spectrum calibration. This calibration process precisely characterizes the spectral response of each MZI, allowing for targeted correction of phase shifts.

Dispersion Compensation Boosts Optical Computing Performance

This work presents a significant advance in parallel optical computing, establishing a comprehensive model for dispersion-aware systems and a method for error correction within cascaded Mach-Zehnder interferometer (MZI) chips. Researchers developed a generalized model incorporating component dispersion characteristics into a wavelength-dependent transfer matrix framework, experimentally validating its accuracy and effectiveness. The team demonstrated a computationally efficient compensation strategy that reduces global dispersion error within a 40 nm range from 0.22 to 0.039.

The study systematically quantifies dispersion errors arising in phase shifters, which exhibit wavelength-dependent variations in their response function, while demonstrating negligible dispersion from well-designed broadband beam splitters. Researchers established a mathematical framework describing the operation of a single MZI as a 2 × 2 matrix, then extended this to represent the entire chip as an n × n unitary matrix that describes how light signals are transformed as they propagate. Experiments revealed that dispersion causes variations in the angles defining the output vector, impacting the accuracy of computations.
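The matrix picture described here can be made concrete with a short sketch. It uses a common textbook MZI parameterisation (two ideal 50:50 splitters plus an internal phase `theta` and an external phase `phi`), which may differ from the convention in the paper. The dispersion law `lam0 / lam`, the mesh layout, and all function names are illustrative assumptions.

```python
import numpy as np

# Ideal 50:50 beam splitter, assumed wavelength-independent (broadband).
BS = np.array([[1, 1j], [1j, 1]]) / np.sqrt(2)

def mzi(theta, phi):
    """2x2 transfer matrix of one MZI: splitter, internal phase, splitter, external phase."""
    inner = np.diag([np.exp(1j * theta), 1.0])
    outer = np.diag([np.exp(1j * phi), 1.0])
    return BS @ inner @ BS @ outer

def dispersive_mzi(theta0, phi0, lam, lam0=1550.0):
    """Toy dispersion (assumption): both phases scale as lam0 / lam."""
    scale = lam0 / lam
    return mzi(theta0 * scale, phi0 * scale)

def embed(u2, n, k):
    """Place a 2x2 block acting on modes k and k+1 into an n x n identity."""
    full = np.eye(n, dtype=complex)
    full[k:k + 2, k:k + 2] = u2
    return full

# Hypothetical 4-mode mesh: (first mode index, theta0, phi0) for each MZI.
LAYOUT = [(0, 0.7, 0.2), (2, 1.1, 0.5), (1, 0.4, 0.9)]

def mesh(lam, n=4):
    """n x n transfer matrix of the cascaded mesh at wavelength lam (nm)."""
    m = np.eye(n, dtype=complex)
    for k, theta0, phi0 in LAYOUT:
        m = embed(dispersive_mzi(theta0, phi0, lam), n, k) @ m
    return m

x = np.array([1.0, 0.0, 0.0, 0.0], dtype=complex)
y_center = mesh(1550.0) @ x
y_edge = mesh(1530.0) @ x

print("output norm at 1550 nm:", np.linalg.norm(y_center))
print("output norm at 1530 nm:", np.linalg.norm(y_edge))
print("max element change:", np.max(np.abs(y_edge - y_center)))
```

Because every factor is unitary at every wavelength, the norm of the output vector stays fixed while its direction drifts with wavelength. In this toy model, dispersion therefore shows up as an angular error rather than as a loss of power.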

To address this, the team isolated the dispersion term, enabling detailed analysis and correction. They demonstrated that the modulus of the output vector remains constant regardless of wavelength, a crucial prerequisite for their developed error correction theorem. By defining the upper limits of phase deviations, researchers established a foundation for minimizing errors and achieving high-fidelity parallel optical computing. The results establish a fundamental framework for building reliable, multi-wavelength processors, paving the way for more powerful and efficient optical computing systems.

Broadband MZI Error Correction via Modelling

This work presents a generalized theoretical model for cascaded Mach-Zehnder interferometer (MZI) systems, extending existing theories to encompass multi-wavelength parallel optical computing. Researchers developed a wavelength-dependent transfer matrix framework that incorporates the dispersion characteristics of MZI components, enabling rigorous analysis of dispersion-induced errors within these systems. Experimental validation confirms the model’s accuracy in predicting phase-related spectral dispersion, establishing a foundation for understanding and mitigating errors in broadband MZI parallel computing. Building upon this theoretical framework, the team proposes a computationally efficient error correction strategy that significantly reduces global dispersion error.

Through edge-spectrum calibration, they demonstrate a reduction in error from 0.22 to 0.039 within a 40 nm range, achieving effective mitigation with minimal computational overhead. While acknowledging that the residual error is proportional to the square of the initial dispersion error, the results demonstrate the effectiveness of minimizing uncompensated dispersion. These advances contribute to the analysis, calibration, and optimization of fidelity in parallel optical computing systems and may guide the design of more spectrally consistent chips, potentially broadening the functionality of these systems in areas such as artificial intelligence and image processing.
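The quadratic dependence of the residual on the initial error can be motivated with a standard interpolation bound. This is only a sketch under assumptions not taken from the source: the uncompensated error is modelled as a phase deviation $\delta(\lambda)$ that grows linearly from 0 to $\delta_{\max}$ across a band of width $\Delta\lambda = \lambda_b - \lambda_a$, and the correction linearly interpolates the complex factor $f(\lambda) = e^{i\delta(\lambda)}$ between the two band edges.

```latex
\begin{aligned}
f(\lambda) &= e^{i\delta(\lambda)}, \qquad
\delta(\lambda) = \delta_{\max}\,\frac{\lambda-\lambda_a}{\Delta\lambda},\\[4pt]
L(\lambda) &= \frac{\lambda_b-\lambda}{\Delta\lambda}\,f(\lambda_a)
            + \frac{\lambda-\lambda_a}{\Delta\lambda}\,f(\lambda_b),\\[4pt]
\bigl|f(\lambda)-L(\lambda)\bigr|
  &\le \frac{\Delta\lambda^{2}}{8}\,\max_{\lambda}\bigl|f''(\lambda)\bigr|
   = \frac{\Delta\lambda^{2}}{8}\cdot\frac{\delta_{\max}^{2}}{\Delta\lambda^{2}}
   = \frac{\delta_{\max}^{2}}{8}.
\end{aligned}
```

Under these assumptions the uncorrected deviation $|f(\lambda) - 1|$ is of order $\delta_{\max}$, while the residual after a first-order correction is of order $\delta_{\max}^{2}$. This is one way a square-law relationship can arise, and the paper's own derivation may differ.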

👉 More information

🗞 Dispersion-Aware Modeling Framework for Parallel Optical Computing

🧠 ArXiv: https://arxiv.org/abs/2511.18897