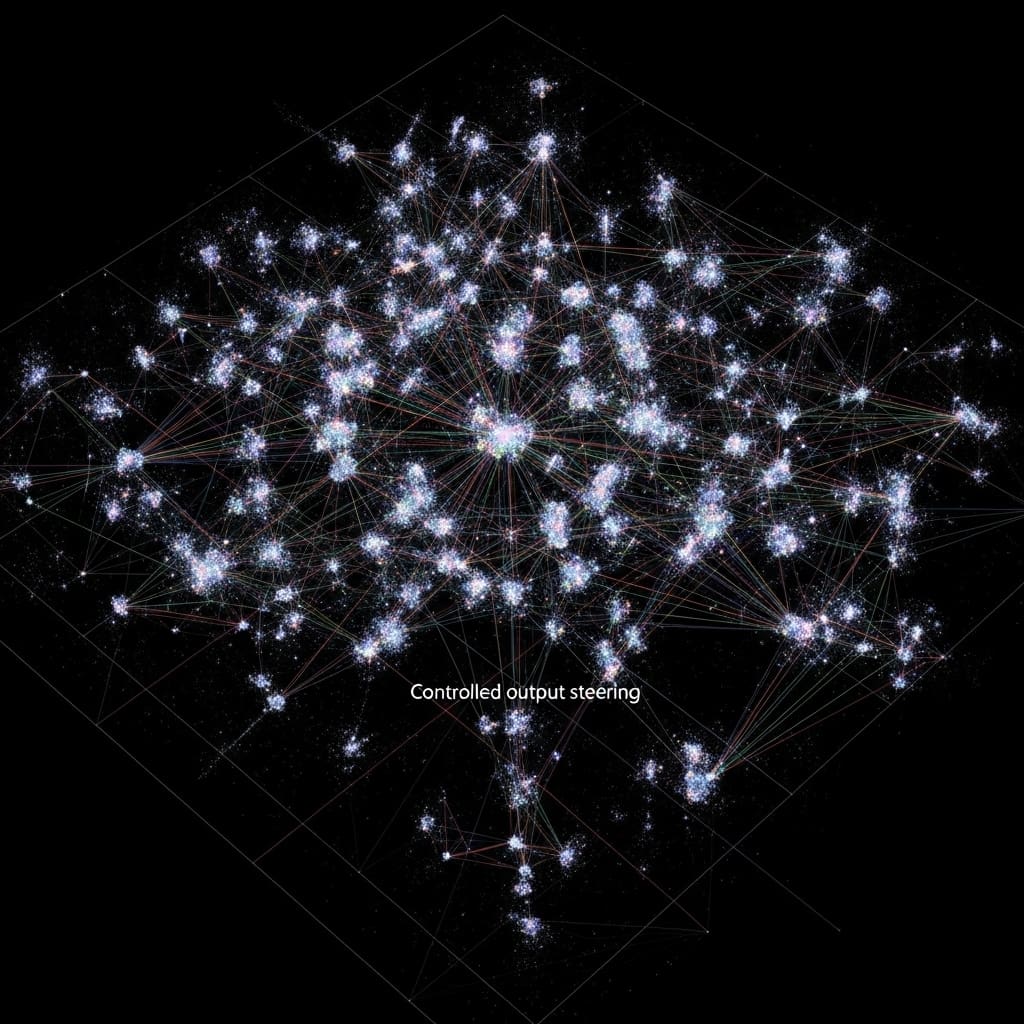

Researchers are increasingly focused on how to reliably control the outputs of large language models (LLMs) so that they align with human expectations and safety guidelines. Diaoulé Diallo, Katharina Dworatzyk, and Sophie Jentzsch, all from the German Aerospace Center (DLR), alongside Peer Schütt et al., present a comprehensive human evaluation of ‘activation steering’, a technique for guiding LLM generation by directly modifying internal activations. The work moves beyond automated assessments to measure directly how effectively this method alters the emotional tone of generated text, drawing on over 7,000 crowd-sourced ratings. The findings show that moderate steering reliably amplifies target emotions while maintaining text quality, and that newer models such as LlaMA-3 offer more consistent control, supporting activation steering as a scalable way of influencing LLM behaviour.

Activation steering assessed via human evaluation

Scientists have demonstrated a scalable method for controlling the behaviour of large language models (LLMs) at inference time, focusing on aligning outputs with human expectations for both safety and quality. This research introduces a human evaluation of ‘activation steering’, a technique that modifies internal activations to guide LLM generation, offering a lightweight alternative to both prompt engineering and full model fine-tuning. The study advances the field by presenting the first comprehensive human assessment of activation steering’s impact on the emotional tone of LLM outputs, gathering over 7,000 crowd-sourced ratings from 190 participants via Prolific. These evaluations meticulously assess both the perceived intensity of emotion and the overall quality of the generated text, providing crucial insights into the effectiveness of this control method.

The team found strong alignment between human perceptions of text quality and model-based automatic scoring, with a mean correlation coefficient of 0.776 (range 0.157 to 0.985). This suggests that automatic scoring can serve as a proxy for human judgements of quality on average, although the wide range shows that agreement is not uniform. Experiments reveal that moderate steering strengths, around λ ≈ 0.15, consistently amplify target emotions while maintaining text comprehensibility, with particularly strong effects for disgust (η²p = 0.616) and fear (η²p = 0.540), and minimal impact on surprise (η²p = 0.042). This graded control over emotional expression is a step towards more adaptable and human-aligned AI systems. Furthermore, upgrading from the Alpaca model to the more advanced LlaMA-3 yields more consistent steering effects across all emotions and steering strengths, with all comparisons statistically significant.

Human Perception of Steered LLM Emotional Tone

Scientists conducted a comprehensive human evaluation of activation steering, a technique for controlling large language model (LLM) outputs, collecting over 7,000 crowd-sourced ratings from 190 participants recruited via Prolific. The study assessed both the perceived emotional intensity and overall text quality of generated text, focusing on the impact of directly modifying internal LLM activations to guide generation. Researchers meticulously designed the evaluation to determine whether humans could reliably perceive nuanced shifts in emotional tone induced by activation steering, a crucial step towards practical human-machine interaction applications. This work addresses a gap in existing research, which previously lacked human-centric validation of activation steering’s effectiveness.

The team built an experimental setup using both the Alpaca and LlaMA-3 models to investigate the impact of a model upgrade on steering consistency. Experiments systematically varied the steering strength to find the balance between amplifying target emotions and preserving text comprehensibility. Automatic quality ratings were generated and compared against human judgments, revealing strong alignment (mean correlation of 0.776), which indicates that model-based scoring could serve as a reliable proxy for perceived quality. The researchers measured the effects of steering on six core emotions, including joy, surprise, disgust, and fear.

The study analysed steering strength in detail, showing that moderate interventions reliably amplified target emotions while maintaining text fluency. Statistical analysis revealed the strongest effects for disgust, with a partial eta squared (η²p) of 0.616, and fear, with an η²p of 0.540, while surprise showed a minimal effect, with an η²p of only 0.042. Upgrading from Alpaca to LlaMA-3 consistently yielded more pronounced and significant steering effects across all emotions and strengths, with all comparisons achieving p values below 0.001. Inter-rater reliability, quantified using the Intraclass Correlation Coefficient (ICC), was consistently high, which further supports the robustness of the findings and activation-based control as a scalable method for steering LLM behaviour.
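To make the effect-size figures easier to interpret, here is a minimal sketch of how partial eta squared can be computed as the ratio SS_effect / (SS_effect + SS_error). It assumes a simple one-way between-groups design with one group of ratings per steering strength; the article does not describe the study's actual statistical model, and the function name and toy ratings are purely illustrative.

```python
import numpy as np

def partial_eta_squared(groups):
    """Partial eta squared for a one-way design (assumption, see lead-in).

    groups: list of rating sequences, one per condition
            (for example, one per steering strength).
    """
    all_values = np.concatenate(groups)
    grand_mean = all_values.mean()
    # Variation explained by the condition (between-group sum of squares).
    ss_effect = sum(len(g) * (np.mean(g) - grand_mean) ** 2 for g in groups)
    # Residual variation inside each condition (within-group sum of squares).
    ss_error = sum(((np.asarray(g) - np.mean(g)) ** 2).sum() for g in groups)
    return ss_effect / (ss_effect + ss_error)

# Toy, made-up ratings for three hypothetical steering strengths.
toy_ratings = [[1, 2, 3], [2, 3, 4], [4, 5, 6]]
effect_size = partial_eta_squared(toy_ratings)
print(round(effect_size, 3))
```

Read this way, a value of 0.616 means that steering accounts for roughly 62% of the variance not attributed to other factors, whereas 0.042 corresponds to about 4%.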

Human Ratings Validate Activation Steering Quality and Consistency

The researchers examined activation steering as a lightweight alternative to fine-tuning for controlling large language models (LLMs). The team conducted the first human evaluation of activation steering with respect to the emotional tone of LLM outputs, collecting over 7,000 crowd-sourced ratings from 190 participants. These ratings covered both perceived emotional intensity and overall text quality. Results show strong alignment between human and model-based quality ratings, with a mean correlation of 0.776, ranging from 0.157 to 0.985, suggesting that automatic scoring can serve as a proxy for perceived quality.
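As an illustration of how agreement between human and automatic quality scores can be quantified, the sketch below computes a correlation coefficient with NumPy. It assumes a Pearson correlation; the article does not say which coefficient the study used. The function name and the rating values are made up for the example.

```python
import numpy as np

def pearson_r(human_scores, model_scores):
    """Pearson correlation between two equally long score sequences."""
    human = np.asarray(human_scores, dtype=float)
    model = np.asarray(model_scores, dtype=float)
    # np.corrcoef returns the 2x2 correlation matrix; take the off-diagonal entry.
    return float(np.corrcoef(human, model)[0, 1])

# Made-up quality ratings for five generated texts (illustrative only).
human_quality = [4.5, 3.0, 2.0, 4.0, 1.5]
model_quality = [4.2, 3.4, 2.5, 3.8, 1.0]
r = pearson_r(human_quality, model_quality)
print(f"r = {r:.3f}")
```

A reported mean implies that several such coefficients were computed and averaged, which is why a wide range can accompany a high mean.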

Experiments revealed that moderate steering strengths consistently amplify target emotions while preserving text comprehensibility. The strongest effects were observed for disgust (η²p = 0.616) and fear (η²p = 0.540), while surprise showed a minimal effect (η²p = 0.042). The team also measured the impact of upgrading from the Alpaca model to LlaMA-3, finding more consistent steering effects across all emotions and strengths, with significant effects throughout (all p < 0.001).

The study details how target style activations are extracted: samples from a target style are fed into the LLM and the mean activation is computed at each layer. Style vectors are then obtained by subtracting the contrastive mean activation from the target mean activation at each layer, which provides the direction used for stylistic control.
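The extraction step described above can be sketched in a few lines of NumPy. The example assumes that activations have already been collected into arrays of shape (samples, layers, hidden size); how they are read out of the model is not covered here, and the function names, array shapes, and random data are all hypothetical.

```python
import numpy as np

def mean_activation_per_layer(activations):
    """Average over samples.

    activations: array of shape (n_samples, n_layers, hidden_dim).
    Returns an array of shape (n_layers, hidden_dim).
    """
    return activations.mean(axis=0)

def style_vectors(target_activations, contrast_activations):
    """Per-layer style vector: target mean minus contrastive mean."""
    return (mean_activation_per_layer(target_activations)
            - mean_activation_per_layer(contrast_activations))

# Random stand-ins for recorded activations (illustrative only).
rng = np.random.default_rng(0)
target = rng.normal(loc=1.0, size=(8, 4, 16))    # 8 target-style samples
contrast = rng.normal(loc=0.0, size=(5, 4, 16))  # 5 contrastive samples
vectors = style_vectors(target, contrast)
print(vectors.shape)  # (4, 16): one vector per layer
```

The two sample sets do not need to be the same size, because each is reduced to a per-layer mean before the subtraction.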

The results indicate that this activation-based approach delivers graded control via a single parameter λ, without requiring model retraining. Because it operates directly in activation space, the method can capture and reproduce stylistic differences present in a corpus, even those that are difficult to verbalise. Further analysis showed that steering strength can affect output quality, but moderate steering intensities maintained fluency and coherence. The research combines human-centred evaluations with automated classifier metrics to assess the perceptual validity and interpretability of steering effects. It also examines the ecological validity and mental model alignment of affective steering techniques in LLM outputs, pointing towards more nuanced and controllable AI interactions.
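A rough sketch of how a single parameter λ can grade the intervention follows. It assumes an additive update, in which λ times the layer's style vector is added to the hidden state at every token position; the article does not spell out the exact update rule, so this is one plausible form rather than the authors' implementation. All names and values are illustrative.

```python
import numpy as np

def steer(hidden_states, style_vector, lam=0.15):
    """Shift hidden states along a style vector (additive form, an assumption).

    hidden_states: array of shape (n_tokens, hidden_dim) for one layer.
    style_vector:  array of shape (hidden_dim,) for the same layer.
    lam:           steering strength; 0.0 leaves the activations unchanged.
    """
    return hidden_states + lam * style_vector

# Toy activations for three token positions and a unit-length style vector.
hidden = np.zeros((3, 16))
vector = np.ones(16) / np.sqrt(16)

for lam in (0.0, 0.15, 0.3):
    steered = steer(hidden, vector, lam)
    # The projection onto the style vector grows linearly with lam.
    projection = steered @ vector
    print(lam, projection.round(3))
```

Because the shift scales linearly with λ, small values nudge the activations while larger ones push them further from the original state, which matches the reported trade-off between emotional intensity and text quality.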

👉 More information

🗞 The Effectiveness of Style Vectors for Steering Large Language Models: A Human Evaluation

🧠 ArXiv: https://arxiv.org/abs/2601.21505